Gustav Eje Henter

The Case for Translation-Invariant Self-Attention in Transformer-Based Language Models

Jun 03, 2021

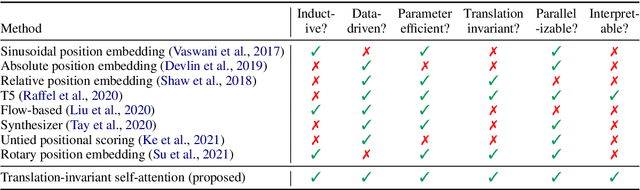

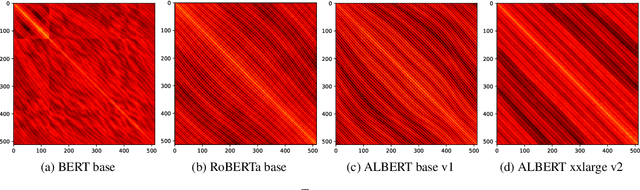

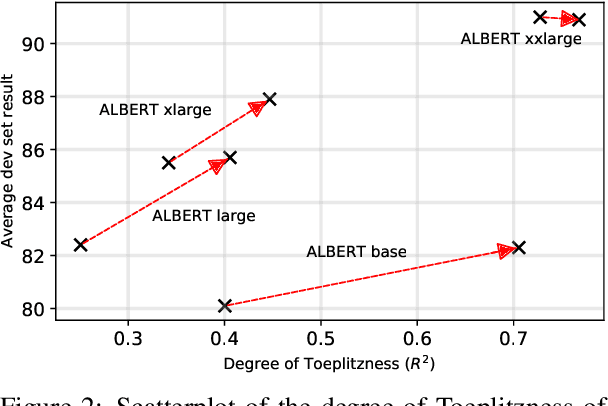

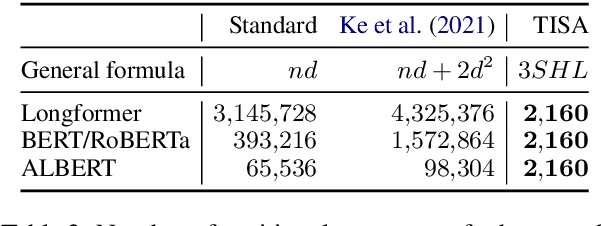

Abstract: Mechanisms for encoding positional information are central to transformer-based language models. In this paper, we analyze the position embeddings of existing language models, finding strong evidence of translation invariance, both for the embeddings themselves and for their effect on self-attention. The degree of translation invariance increases during training and correlates positively with model performance. Our findings lead us to propose translation-invariant self-attention (TISA), which accounts for the relative position between tokens in an interpretable fashion without needing conventional position embeddings. Our proposal has several theoretical advantages over existing position-representation approaches. Experiments show that it improves on regular ALBERT on GLUE tasks while adding orders of magnitude fewer positional parameters.
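To make the idea of translation invariance concrete, here is a minimal sketch in which the positional contribution to the attention logits depends only on the offset between two tokens, not on their absolute positions. The function names, the single Gaussian-shaped bump used as the bias, and its parameters are assumptions chosen for illustration; the abstract does not specify the parameterisation used in the paper.

```python
import math

def positional_bias(offset, amplitude=1.0, sharpness=0.5, center=0.0):
    """Hypothetical positional score: one Gaussian-shaped bump over the offset j - i."""
    return amplitude * math.exp(-sharpness * (offset - center) ** 2)

def bias_matrix(n, start=0):
    """Bias for n tokens at absolute positions start, start + 1, ..., start + n - 1."""
    positions = [start + k for k in range(n)]
    return [[positional_bias(pj - pi) for pj in positions] for pi in positions]

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(content_logits, start=0):
    """Add the offset-only bias to content-based logits, then normalise each row."""
    n = len(content_logits)
    bias = bias_matrix(n, start)
    return [
        softmax([content_logits[i][j] + bias[i][j] for j in range(n)])
        for i in range(n)
    ]
```

Because the bias only sees `pj - pi`, shifting every token by the same amount leaves the bias matrix unchanged, and the matrix is constant along each diagonal (Toeplitz). A handful of scalar parameters per bump is also far fewer than a learned embedding vector per position, which is the kind of parameter saving the abstract alludes to.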

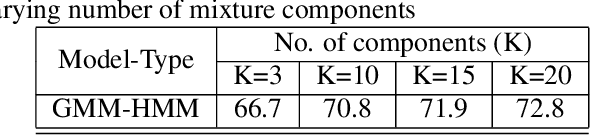

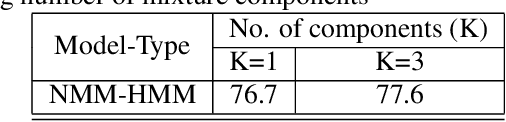

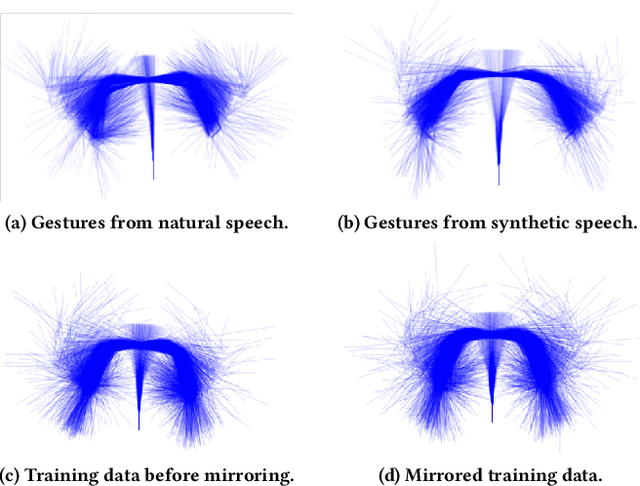

Robust Classification using Hidden Markov Models and Mixtures of Normalizing Flows

Feb 15, 2021

Abstract: We test the robustness of a maximum-likelihood (ML) based classifier when the observed sequential data is corrupted by noise. The hypothesis is that a generative model that combines the state transitions of a hidden Markov model (HMM) with neural-network-based probability distributions for the hidden states of the HMM can provide robust classification performance. The combined model is called a normalizing-flow mixture model based HMM (NMM-HMM). It can be trained using a combination of expectation-maximization (EM) and backpropagation. We verify the improved robustness of NMM-HMM classifiers in an application to speech recognition.
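The classification rule described above can be sketched as follows: fit one HMM per class, score a sequence with the forward algorithm, and pick the class with the highest likelihood. This is only an illustration under stated assumptions. In particular, the per-state emission densities here are plain univariate Gaussians standing in for the normalizing-flow mixtures of the paper, and all names (`hmm_log_likelihood`, `gaussian_emitter`, `classify`) are hypothetical.

```python
import math

def log_sum_exp(values):
    """Stable log(sum(exp(v))) for a list of log-domain values."""
    m = max(values)
    if m == float("-inf"):
        return m
    return m + math.log(sum(math.exp(v - m) for v in values))

def gaussian_emitter(means, std=1.0):
    """Stand-in emission model: one Gaussian per state (NOT a normalizing flow)."""
    def log_emit(state, x):
        z = (x - means[state]) / std
        return -0.5 * z * z - math.log(std * math.sqrt(2.0 * math.pi))
    return log_emit

def hmm_log_likelihood(obs, log_init, log_trans, log_emit):
    """Forward algorithm in log space; returns log p(obs) under one HMM."""
    n_states = len(log_init)
    alpha = [log_init[s] + log_emit(s, obs[0]) for s in range(n_states)]
    for x in obs[1:]:
        alpha = [
            log_sum_exp([alpha[r] + log_trans[r][s] for r in range(n_states)])
            + log_emit(s, x)
            for s in range(n_states)
        ]
    return log_sum_exp(alpha)

def classify(obs, class_models):
    """Maximum-likelihood decision: the class whose HMM scores obs highest."""
    scores = {
        label: hmm_log_likelihood(obs, *model)
        for label, model in class_models.items()
    }
    return max(scores, key=scores.get)

# Toy two-state models that differ only in their emission means.
log_init = [math.log(0.5), math.log(0.5)]
log_trans = [[math.log(0.9), math.log(0.1)], [math.log(0.1), math.log(0.9)]]
models = {
    "low": (log_init, log_trans, gaussian_emitter([0.0, 1.0])),
    "high": (log_init, log_trans, gaussian_emitter([4.0, 5.0])),
}
```

Swapping `gaussian_emitter` for a richer density model changes only the `log_emit` callable; the forward recursion and the decision rule stay the same.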

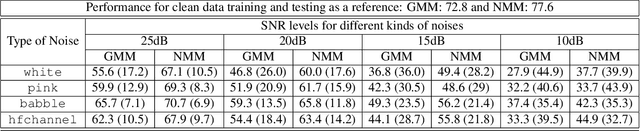

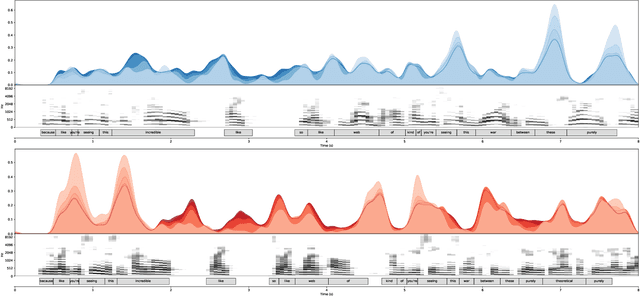

Generating coherent spontaneous speech and gesture from text

Jan 14, 2021

Abstract: Embodied human communication encompasses both verbal (speech) and non-verbal information (e.g., gesture and head movements). Recent advances in machine learning have substantially improved the technologies for generating synthetic versions of both of these types of data: On the speech side, text-to-speech systems are now able to generate highly convincing, spontaneous-sounding speech using unscripted speech audio as the source material. On the motion side, probabilistic motion-generation methods can now synthesise vivid and lifelike speech-driven 3D gesticulation. In this paper, we put these two state-of-the-art technologies together in a coherent fashion for the first time. Concretely, we demonstrate a proof-of-concept system, trained on a single-speaker audio and motion-capture dataset, that is able to generate both speech and full-body gestures together from text input. In contrast to previous approaches for joint speech-and-gesture generation, we generate full-body gestures from speech synthesis trained on recordings of spontaneous speech from the same person as the motion-capture data. We illustrate our results by visualising gesture spaces and text-speech-gesture alignments, and through a demonstration video at https://simonalexanderson.github.io/IVA2020.

* 3 pages, 2 figures, published at the ACM International Conference on Intelligent Virtual Agents (IVA) 2020

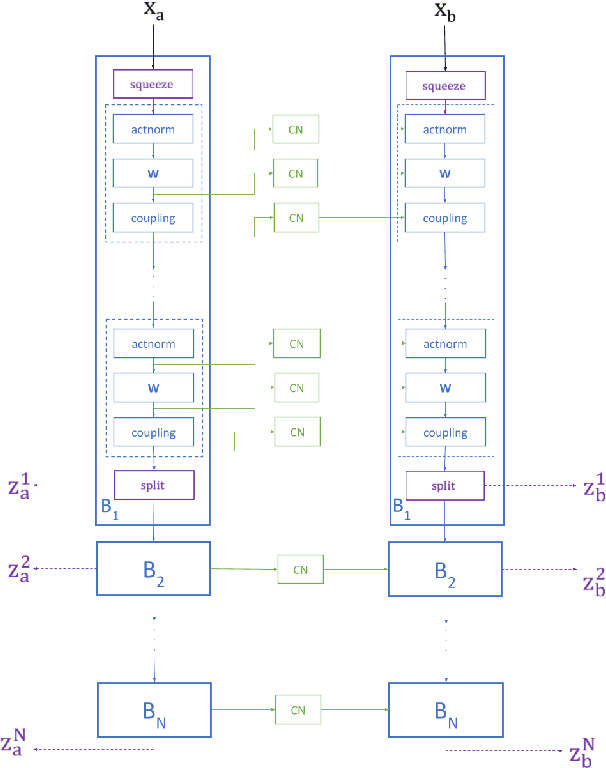

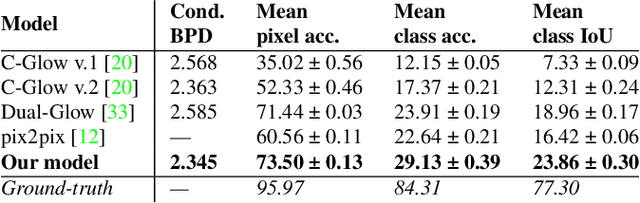

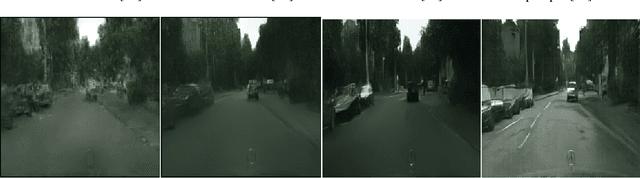

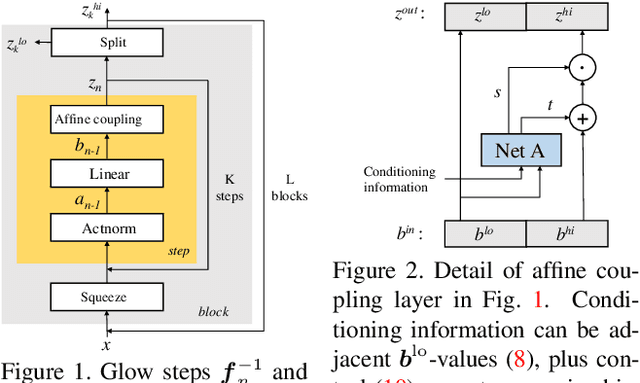

Full-Glow: Fully conditional Glow for more realistic image generation

Dec 10, 2020

Abstract: Autonomous agents, such as driverless cars, require large amounts of labeled visual data for their training. A viable approach for acquiring such data is training a generative model with collected real data, and then augmenting the collected real dataset with synthetic images from the model, generated with control of the scene layout and ground truth labeling. In this paper we propose Full-Glow, a fully conditional Glow-based architecture for generating plausible and realistic images of novel street scenes given a semantic segmentation map indicating the scene layout. Benchmark comparisons show our model to outperform recent works in terms of the semantic segmentation performance of a pretrained PSPNet. This indicates that images from our model are, to a higher degree than from other models, similar to real images of the same kinds of scenes and objects, making them suitable as training data for a visual semantic segmentation or object recognition system.

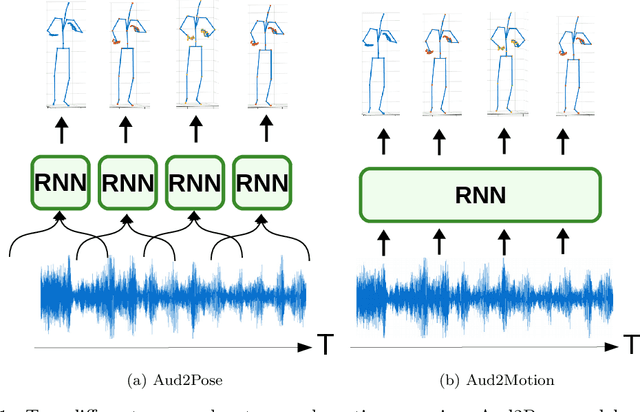

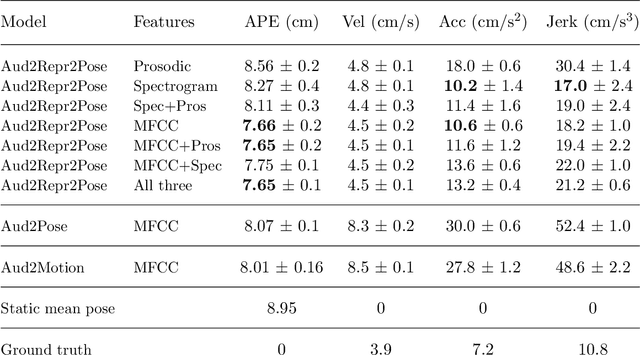

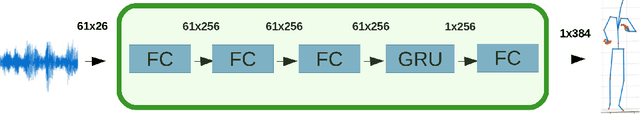

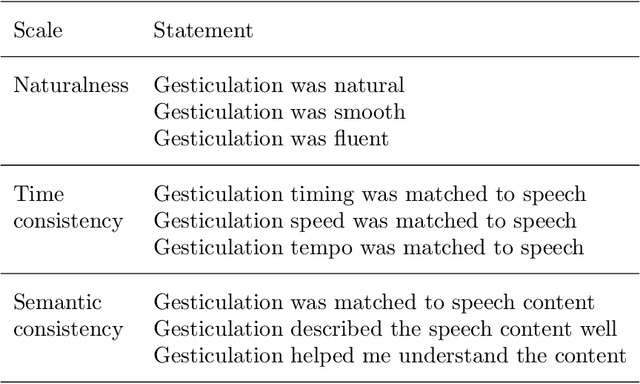

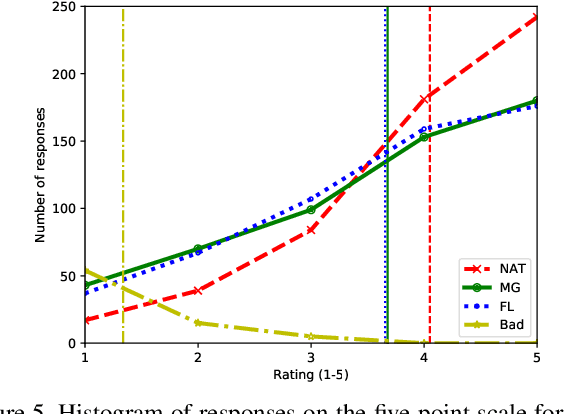

Moving fast and slow: Analysis of representations and post-processing in speech-driven automatic gesture generation

Jul 16, 2020

Abstract: This paper presents a novel framework for speech-driven gesture production, applicable to virtual agents to enhance human-computer interaction. Specifically, we extend recent deep-learning-based, data-driven methods for speech-driven gesture generation by incorporating representation learning. Our model takes speech as input and produces gestures as output, in the form of a sequence of 3D coordinates. We provide an analysis of different representations for the input (speech) and the output (motion) of the network by both objective and subjective evaluations. We also analyse the importance of smoothing of the produced motion. Our results indicated that the proposed method improved on our baseline in terms of objective measures. For example, it better captured the motion dynamics and better matched the motion-speed distribution. Moreover, we performed user studies on two different datasets. The studies confirmed that our proposed method is perceived as more natural than the baseline, although the difference in the studies was eliminated by appropriate post-processing: hip-centering and smoothing. We conclude that it is important to take feature representation, model architecture, and post-processing into account when designing an automatic gesture-production method.

Robust model training and generalisation with Studentising flows

Jul 11, 2020

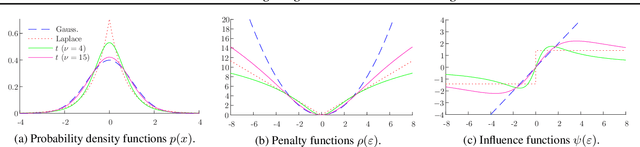

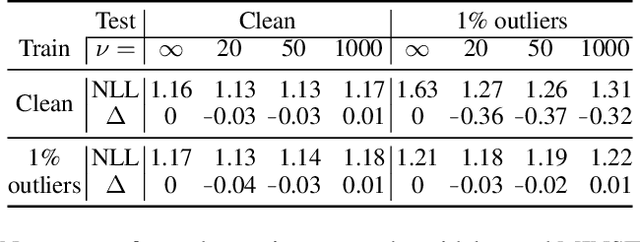

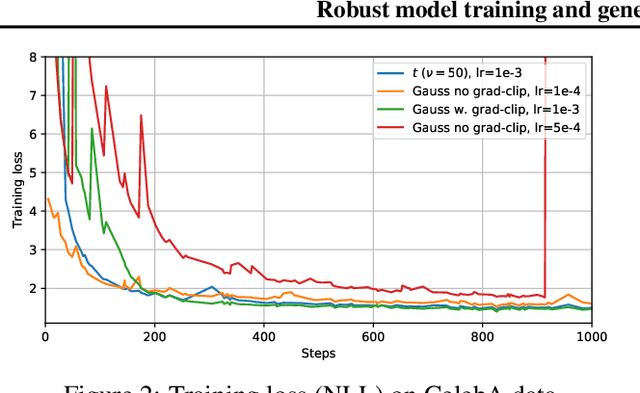

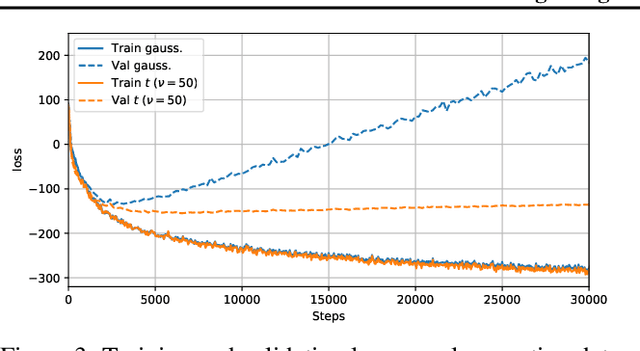

Abstract: Normalising flows are tractable probabilistic models that leverage the power of deep learning to describe a wide parametric family of distributions, all while remaining trainable using maximum likelihood. We discuss how these methods can be further improved based on insights from robust (in particular, resistant) statistics. Specifically, we propose to endow flow-based models with fat-tailed latent distributions such as multivariate Student's $t$, as a simple drop-in replacement for the Gaussian distribution used by conventional normalising flows. While robustness brings many advantages, this paper explores two of them: 1) We describe how using fatter-tailed base distributions can give benefits similar to gradient clipping, but without compromising the asymptotic consistency of the method. 2) We also discuss how robust ideas lead to models with reduced generalisation gap and improved held-out data likelihood. Experiments on several different datasets confirm the efficacy of the proposed approach in both regards.
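The analogy with gradient clipping can be seen in one dimension. The sketch below compares the gradient of the negative log-density of a standard Gaussian with that of a Student's $t$ distribution; it is a univariate illustration only (the abstract refers to the multivariate case), and the function names are chosen here for exposition.

```python
import math

def gaussian_nll_grad(z):
    """d/dz of -log N(z; 0, 1). Grows without bound as |z| grows."""
    return z

def student_t_log_pdf(z, nu):
    """Log-density of a standard univariate Student's t with nu degrees of freedom."""
    return (
        math.lgamma((nu + 1.0) / 2.0)
        - math.lgamma(nu / 2.0)
        - 0.5 * math.log(nu * math.pi)
        - (nu + 1.0) / 2.0 * math.log1p(z * z / nu)
    )

def student_t_nll_grad(z, nu):
    """d/dz of -log t_nu(z) = (nu + 1) * z / (nu + z**2). Bounded in z."""
    return (nu + 1.0) * z / (nu + z * z)

def student_t_grad_bound(nu):
    """Largest magnitude of student_t_nll_grad, attained at |z| = sqrt(nu)."""
    return (nu + 1.0) / (2.0 * math.sqrt(nu))
```

For the Gaussian, an outlying latent value contributes a gradient proportional to its distance from the origin. For the Student's $t$, the contribution peaks at $|z| = \sqrt{\nu}$ and then decays, so extreme points have limited influence without any explicit clipping threshold.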

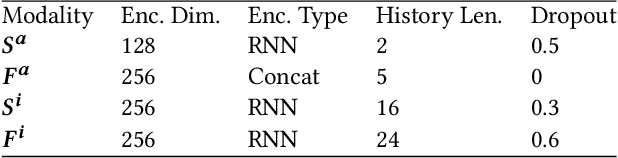

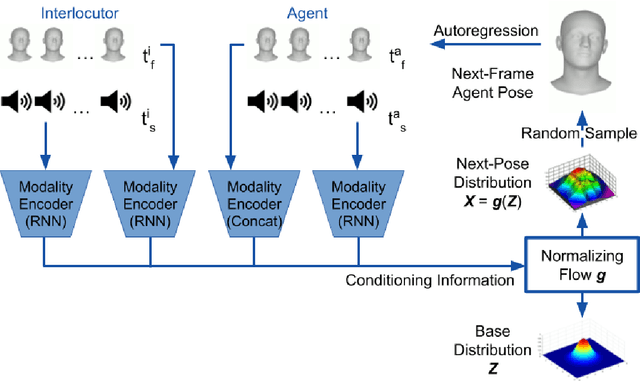

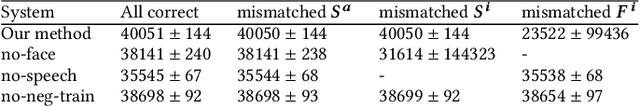

Let's face it: Probabilistic multi-modal interlocutor-aware generation of facial gestures in dyadic settings

Jun 11, 2020

Abstract: To enable more natural face-to-face interactions, conversational agents need to adapt their behavior to their interlocutors. One key aspect of this is generation of appropriate non-verbal behavior for the agent, for example facial gestures, here defined as facial expressions and head movements. Most existing gesture-generating systems do not utilize multi-modal cues from the interlocutor when synthesizing non-verbal behavior. Those that do typically use deterministic methods that risk producing repetitive and non-vivid motions. In this paper, we introduce a probabilistic method to synthesize interlocutor-aware facial gestures, represented by highly expressive FLAME parameters, in dyadic conversations. Our contributions are: a) a method for feature extraction from multi-party video and speech recordings, resulting in a representation that allows for independent control and manipulation of expression and speech articulation in a 3D avatar; b) an extension to MoGlow, a recent motion-synthesis method based on normalizing flows, to also take multi-modal signals from the interlocutor as input and subsequently output interlocutor-aware facial gestures; and c) subjective and objective experiments assessing the use and relative importance of the different modalities in the synthesized output. The results show that the model successfully leverages the input from the interlocutor to generate more appropriate behavior.

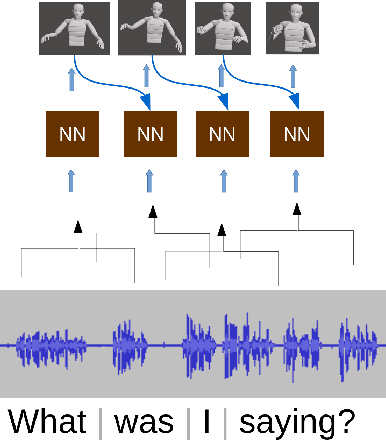

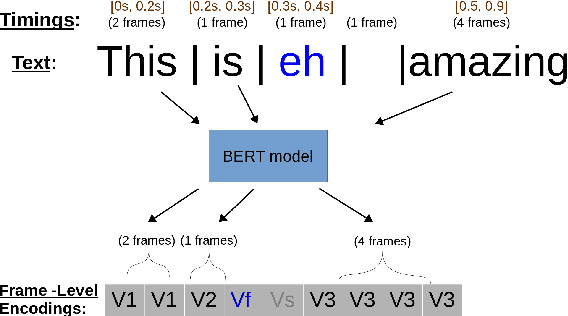

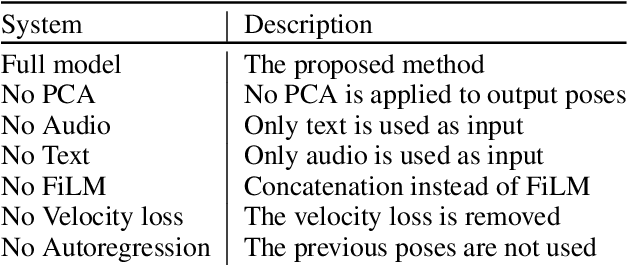

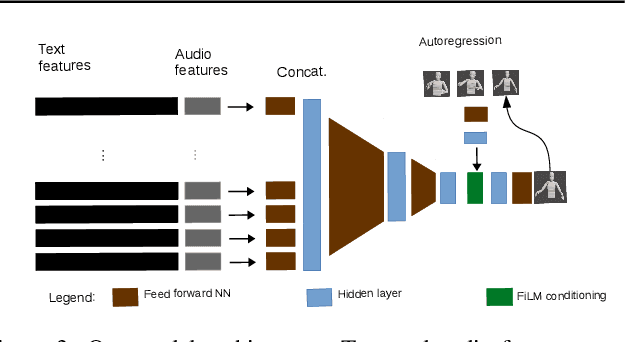

Gesticulator: A framework for semantically-aware speech-driven gesture generation

Jan 25, 2020

Abstract: During speech, people spontaneously gesticulate, which plays a key role in conveying information. Similarly, realistic co-speech gestures are crucial to enable natural and smooth interactions with social agents. Current data-driven co-speech gesture generation systems use a single modality for representing speech: either audio or text. These systems are therefore confined to producing either acoustically-linked beat gestures or semantically-linked gesticulation (e.g., raising a hand when saying "high"): they cannot appropriately learn to generate both gesture types. We present a model designed to produce arbitrary beat and semantic gestures together. Our deep-learning-based model takes both acoustic and semantic representations of speech as input, and generates gestures as a sequence of joint angle rotations as output. The resulting gestures can be applied to both virtual agents and humanoid robots. We illustrate the model's efficacy with subjective and objective evaluations.

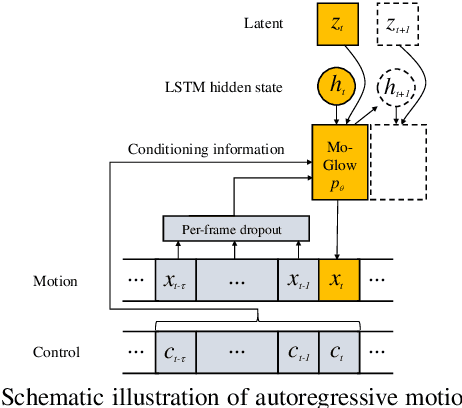

MoGlow: Probabilistic and controllable motion synthesis using normalising flows

May 16, 2019

Abstract: Data-driven modelling and synthesis of motion data is an active research area with applications that include animation and games. This paper introduces a new class of probabilistic, generative motion-data models based on normalising flows, specifically Glow. Models of this kind can describe highly complex distributions (unlike many classical approaches such as GMMs) yet can be trained stably and efficiently using standard maximum likelihood (unlike GANs). Several model variants are described: unconditional fixed-length sequence models, conditional (i.e., controllable) fixed-length sequence models, and finally conditional, variable-length sequence models. The last type uses LSTMs to enable arbitrarily long time-dependencies and is, importantly, causal, meaning it only depends on control and pose information from current and previous timesteps. This makes it suitable for generating controllable motion in real-time applications. Every model type can in principle be applied to any motion, since none of them makes restrictive assumptions such as the motion being cyclic in nature. Experiments on a motion-capture dataset of human locomotion confirm that motion (sequences of 3D joint coordinates) sampled randomly from the new methods is judged as convincingly natural by human observers.
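Flows of this kind are built from invertible layers whose Jacobian log-determinant is cheap to compute, which is what makes exact maximum-likelihood training possible. The sketch below shows a generic affine coupling layer of the sort used in Glow-style models. It is not the MoGlow architecture: the conditioner `toy_scale_shift` is a hypothetical stand-in for a neural network, and no control signal or recurrent state is included.

```python
import math

def toy_scale_shift(x_a):
    """Hypothetical conditioner: returns (log-scales, shifts) computed from x_a only."""
    log_s = [0.1 * a for a in x_a]
    t = [a + 1.0 for a in x_a]
    return log_s, t

def coupling_forward(x, scale_shift=toy_scale_shift):
    """Map x -> z, leaving the first half untouched; also return log|det J|."""
    if len(x) % 2 != 0:
        raise ValueError("this sketch assumes an even-length input")
    half = len(x) // 2
    x_a, x_b = x[:half], x[half:]
    log_s, t = scale_shift(x_a)
    z_b = [xb * math.exp(ls) + tt for xb, ls, tt in zip(x_b, log_s, t)]
    # The Jacobian is triangular, so its log-determinant is the sum of log-scales.
    return x_a + z_b, sum(log_s)

def coupling_inverse(z, scale_shift=toy_scale_shift):
    """Exact inverse: the conditioner is re-evaluated on the unchanged half."""
    if len(z) % 2 != 0:
        raise ValueError("this sketch assumes an even-length input")
    half = len(z) // 2
    z_a, z_b = z[:half], z[half:]
    log_s, t = scale_shift(z_a)
    x_b = [(zb - tt) * math.exp(-ls) for zb, ls, tt in zip(z_b, log_s, t)]
    return z_a + x_b
```

The conditioner never needs to be inverted, so it can be arbitrarily complex. The log-likelihood of a data point is then the base-density log-probability of `z` plus the accumulated log-determinants of all layers.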

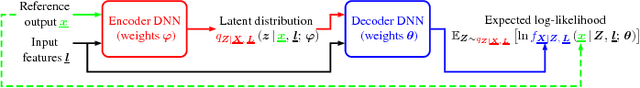

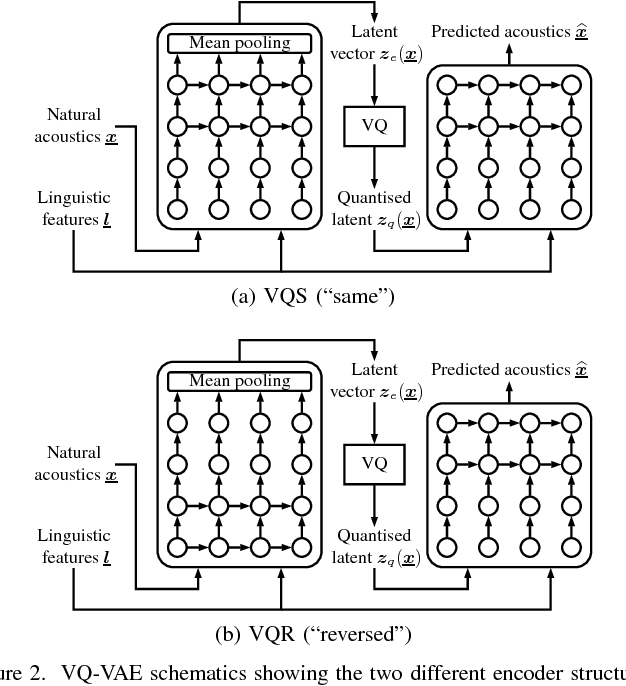

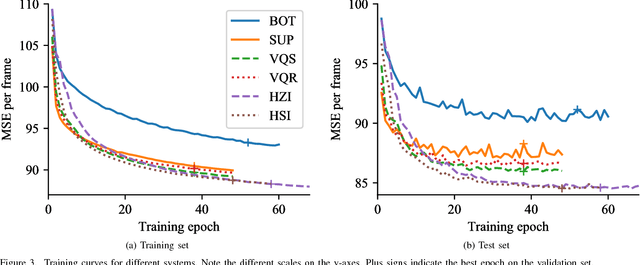

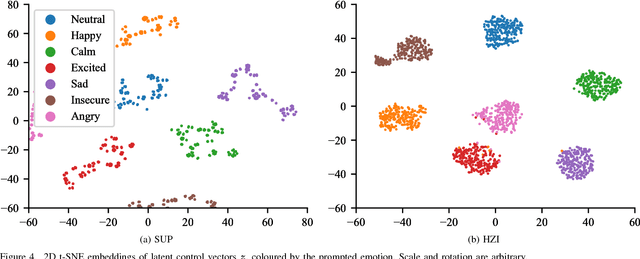

Deep Encoder-Decoder Models for Unsupervised Learning of Controllable Speech Synthesis

Sep 09, 2018

Abstract: Generating versatile and appropriate synthetic speech requires control over the output expression separate from the spoken text. Important non-textual speech variation is seldom annotated, in which case output control must be learned in an unsupervised fashion. In this paper, we perform an in-depth study of methods for unsupervised learning of control in statistical speech synthesis. For example, we show that popular unsupervised training heuristics can be interpreted as variational inference in certain autoencoder models. We additionally connect these models to VQ-VAEs, another recently proposed class of deep variational autoencoders, which we show can be derived from a very similar mathematical argument. The implications of these new probabilistic interpretations are discussed. We illustrate the utility of the various approaches with an application to acoustic modelling for emotional speech synthesis, where the unsupervised methods for learning expression control (without access to emotional labels) are found to give results that in many respects match or surpass the previous best supervised approach.
