Guangquan Zhang

Bridging the Theoretical Bound and Deep Algorithms for Open Set Domain Adaptation

Jun 23, 2020

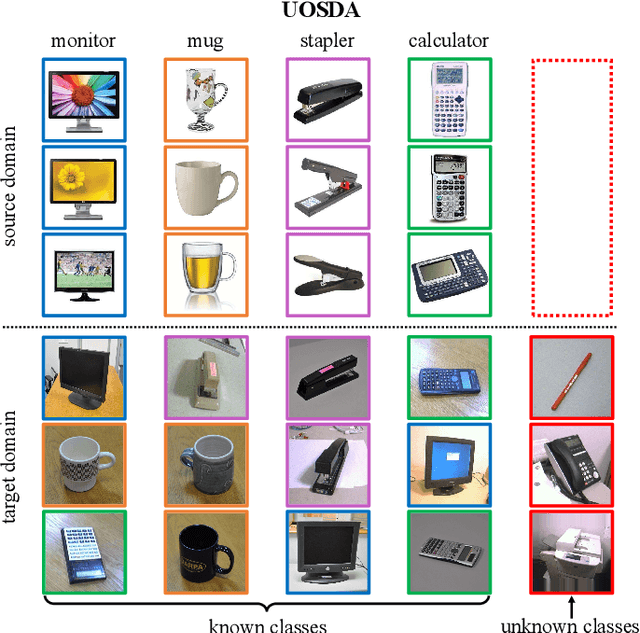

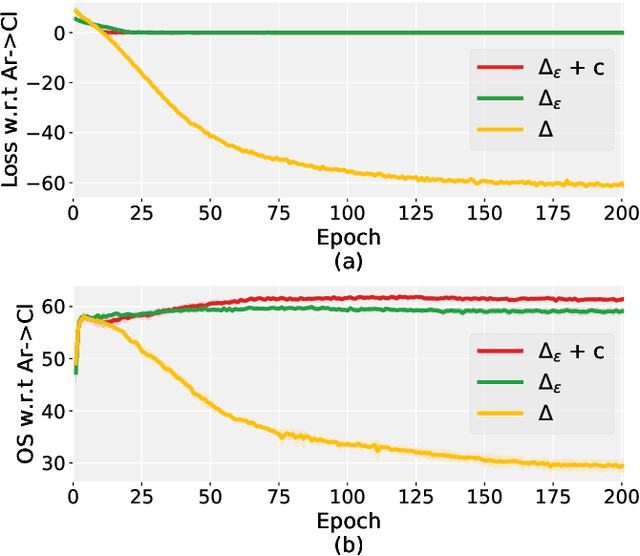

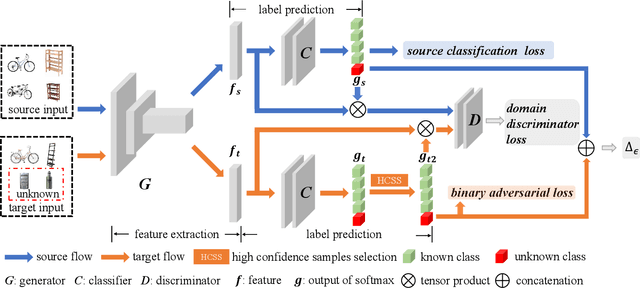

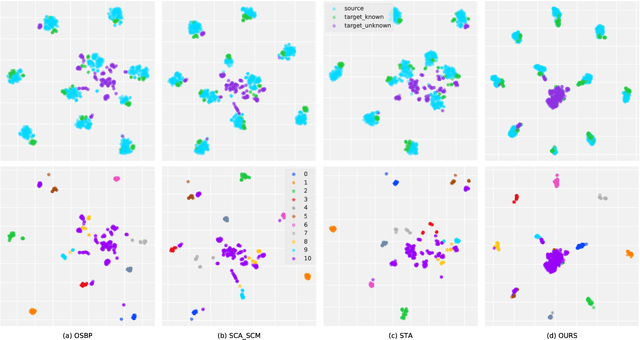

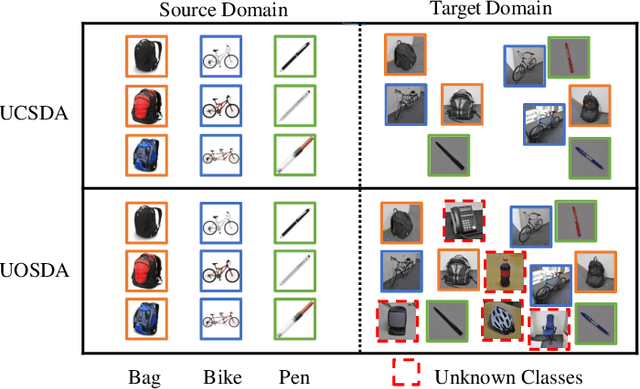

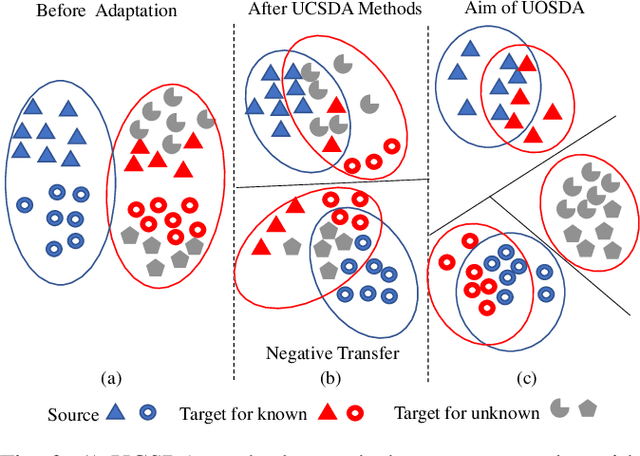

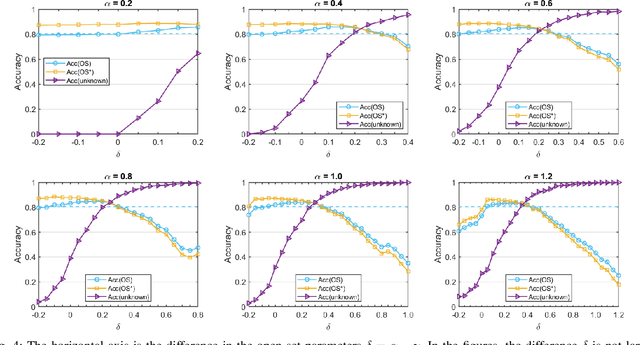

Abstract:In unsupervised open set domain adaptation (UOSDA), the target domain contains unknown classes that are not observed in the source domain. Researchers in this area aim to train a classifier to accurately: 1) recognize unknown target data (data with unknown classes) and, 2) classify other target data. To achieve this aim, a previous study has proven an upper bound of the target-domain risk, and the open set difference, as an important term in the upper bound, is used to measure the risk on unknown target data. By minimizing the upper bound, a shallow classifier can be trained to achieve the aim. However, if the classifier is very flexible (e.g., deep neural networks (DNNs)), the open set difference will converge to a negative value when minimizing the upper bound, which causes an issue where most target data are recognized as unknown data. To address this issue, we propose a new upper bound of target-domain risk for UOSDA, which includes four terms: source-domain risk, $\epsilon$-open set difference ($\Delta_\epsilon$), a distributional discrepancy between domains, and a constant. Compared to the open set difference, $\Delta_\epsilon$ is more robust against the issue when it is being minimized, and thus we are able to use very flexible classifiers (i.e., DNNs). Then, we propose a new principle-guided deep UOSDA method that trains DNNs by minimizing the new upper bound. Specifically, source-domain risk and $\Delta_\epsilon$ are minimized by gradient descent, and the distributional discrepancy is minimized via a novel open-set conditional adversarial training strategy. Finally, compared to existing shallow and deep UOSDA methods, our method achieves state-of-the-art performance on several benchmark datasets, including digit recognition (MNIST, SVHN, USPS), object recognition (Office-31, Office-Home), and face recognition (PIE).

Diverse Instances-Weighting Ensemble based on Region Drift Disagreement for Concept Drift Adaptation

Apr 13, 2020

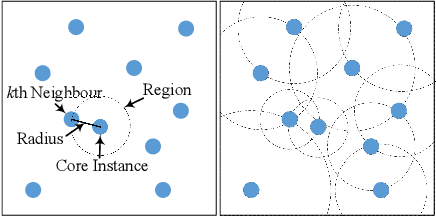

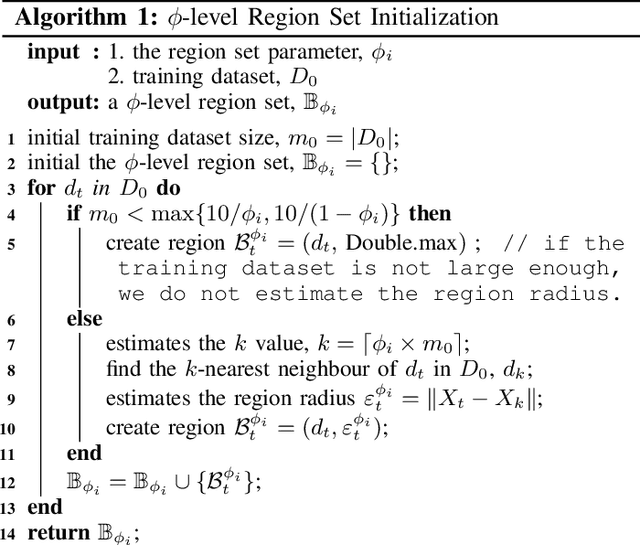

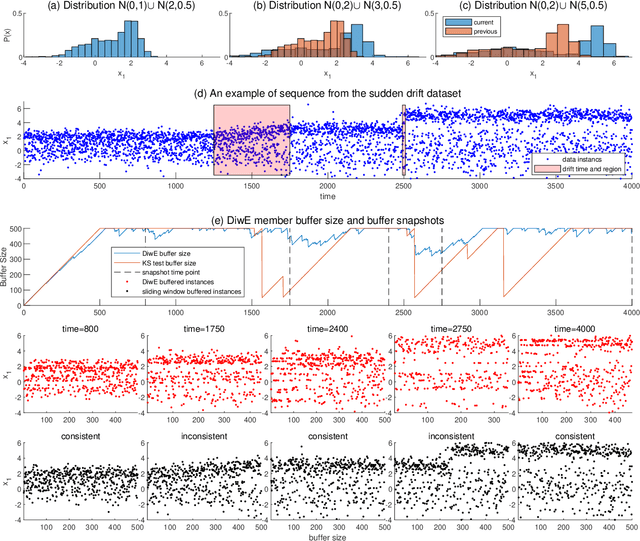

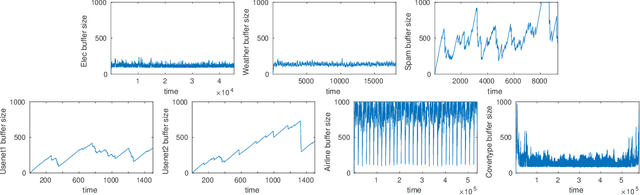

Abstract:Concept drift refers to changes in the distribution of underlying data and is an inherent property of evolving data streams. Ensemble learning, with dynamic classifiers, has proved to be an efficient method of handling concept drift. However, the best way to create and maintain ensemble diversity with evolving streams is still a challenging problem. In contrast to estimating diversity via inputs, outputs, or classifier parameters, we propose a diversity measurement based on whether the ensemble members agree on the probability of a regional distribution change. In our method, estimations over regional distribution changes are used as instance weights. Constructing different region sets through different schemes will lead to different drift estimation results, thereby creating diversity. The classifiers that disagree the most are selected to maximize diversity. Accordingly, an instance-based ensemble learning algorithm, called the diverse instance weighting ensemble (DiwE), is developed to address concept drift for data stream classification problems. Evaluations of various synthetic and real-world data stream benchmarks show the effectiveness and advantages of the proposed algorithm.

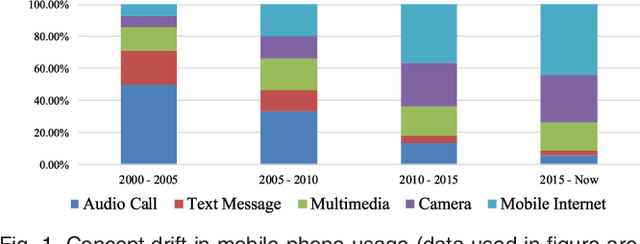

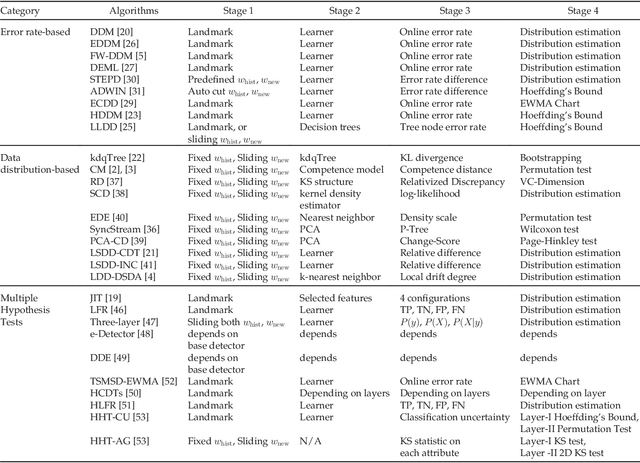

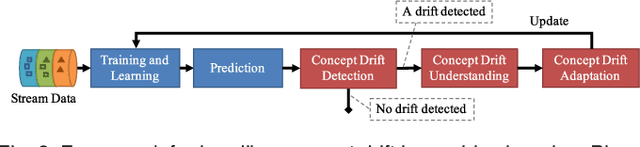

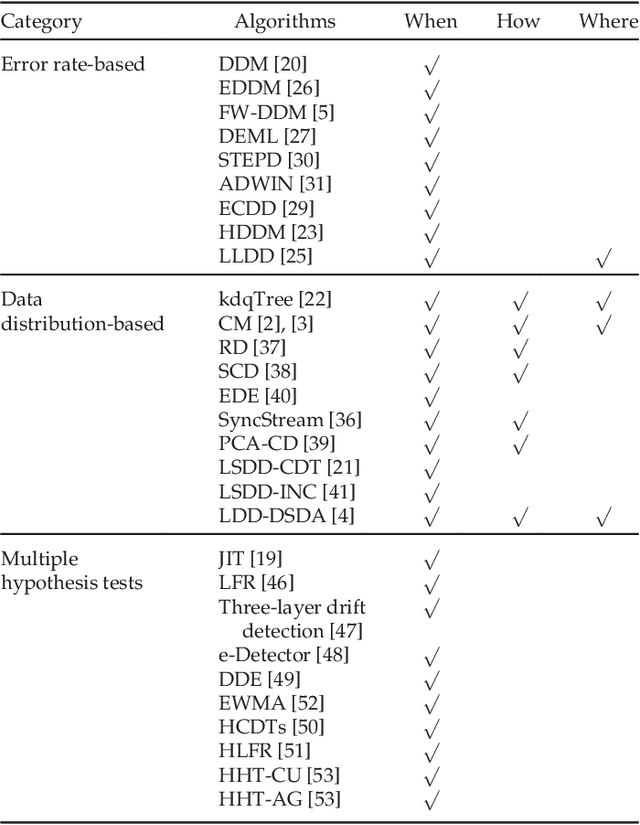

Learning under Concept Drift: A Review

Apr 13, 2020

Abstract:Concept drift describes unforeseeable changes in the underlying distribution of streaming data over time. Concept drift research involves the development of methodologies and techniques for drift detection, understanding and adaptation. Data analysis has revealed that machine learning in a concept drift environment will result in poor learning results if the drift is not addressed. To help researchers identify which research topics are significant and how to apply related techniques in data analysis tasks, it is necessary that a high quality, instructive review of current research developments and trends in the concept drift field is conducted. In addition, due to the rapid development of concept drift in recent years, the methodologies of learning under concept drift have become noticeably systematic, unveiling a framework which has not been mentioned in literature. This paper reviews over 130 high quality publications in concept drift related research areas, analyzes up-to-date developments in methodologies and techniques, and establishes a framework of learning under concept drift including three main components: concept drift detection, concept drift understanding, and concept drift adaptation. This paper lists and discusses 10 popular synthetic datasets and 14 publicly available benchmark datasets used for evaluating the performance of learning algorithms aiming at handling concept drift. Also, concept drift related research directions are covered and discussed. By providing state-of-the-art knowledge, this survey will directly support researchers in their understanding of research developments in the field of learning under concept drift.

Learning Deep Kernels for Non-Parametric Two-Sample Tests

Feb 21, 2020

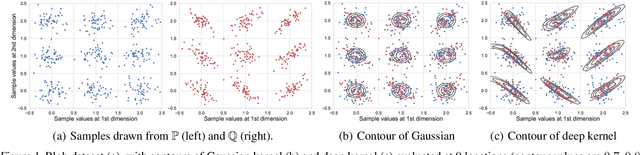

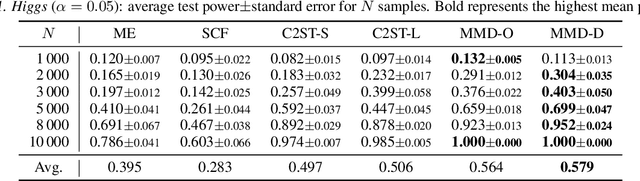

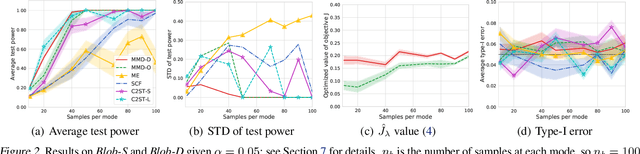

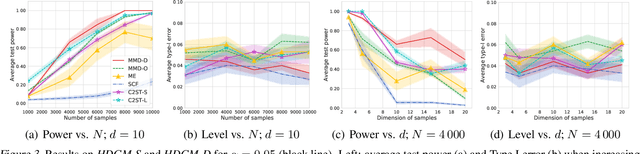

Abstract:We propose a class of kernel-based two-sample tests, which aim to determine whether two sets of samples are drawn from the same distribution. Our tests are constructed from kernels parameterized by deep neural nets, trained to maximize test power. These tests adapt to variations in distribution smoothness and shape over space, and are especially suited to high dimensions and complex data. By contrast, the simpler kernels used in prior kernel testing work are spatially homogeneous, and adaptive only in lengthscale. We explain how this scheme includes popular classifier-based two-sample tests as a special case, but improves on them in general. We provide the first proof of consistency for the proposed adaptation method, which applies both to kernels on deep features and to simpler radial basis kernels or multiple kernel learning. In experiments, we establish the superior performance of our deep kernels in hypothesis testing on benchmark and real-world data. The code of our deep-kernel-based two sample tests is available at https://github.com/fengliu90/DK-for-TST.
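As a rough illustration of what a kernel two-sample test computes, the sketch below runs a permutation test on the (biased) squared maximum mean discrepancy with a fixed Gaussian kernel. This is the simpler, spatially homogeneous baseline the abstract contrasts against, not the paper's learned deep kernel; the function names, the bandwidth, and the permutation count are illustrative assumptions.

```python
import math
import random


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two points given as tuples."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd2_biased(xs, ys, bandwidth=1.0):
    """Biased estimate of the squared maximum mean discrepancy."""
    kxx = sum(gaussian_kernel(a, b, bandwidth) for a in xs for b in xs) / len(xs) ** 2
    kyy = sum(gaussian_kernel(a, b, bandwidth) for a in ys for b in ys) / len(ys) ** 2
    kxy = sum(gaussian_kernel(a, b, bandwidth) for a in xs for b in ys) / (len(xs) * len(ys))
    return kxx + kyy - 2.0 * kxy


def permutation_test(xs, ys, n_perm=200, bandwidth=1.0, seed=0):
    """Return (observed statistic, permutation p-value).

    Under the null hypothesis the two samples are exchangeable, so the
    statistic is recomputed on random re-splits of the pooled data.
    """
    rng = random.Random(seed)
    observed = mmd2_biased(xs, ys, bandwidth)
    pooled = list(xs) + list(ys)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        stat = mmd2_biased(pooled[:len(xs)], pooled[len(xs):], bandwidth)
        if stat >= observed:
            exceed += 1
    # The +1 terms count the observed split itself as one permutation.
    return observed, (exceed + 1) / (n_perm + 1)


if __name__ == "__main__":
    rng = random.Random(1)
    sample_p = [(rng.gauss(0.0, 1.0),) for _ in range(30)]
    sample_q = [(rng.gauss(1.0, 1.0),) for _ in range(30)]
    print(permutation_test(sample_p, sample_q))
```

In the deep-kernel setting described in the abstract, the fixed `gaussian_kernel` would be replaced by a kernel whose parameters are trained to maximize test power; the official implementation is at the repository linked above.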

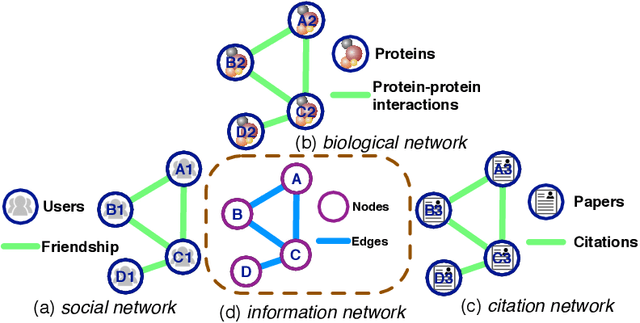

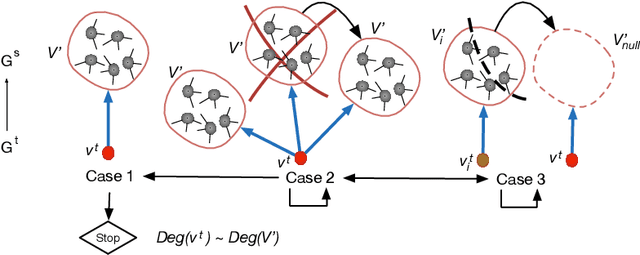

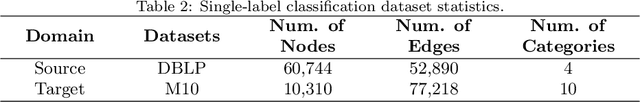

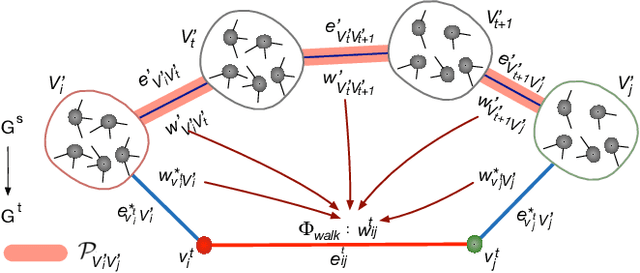

Cross-domain Network Representations

Aug 01, 2019

Abstract:The purpose of network representation is to learn a set of latent features by obtaining community information from network structures to provide knowledge for machine learning tasks. Recent research has driven significant progress in network representation by employing random walks as the network sampling strategy. Nevertheless, existing approaches rely on rich domain-specific community structures and fail on networks that lack topological information in their own domain. In this paper, we propose a novel algorithm for cross-domain network representation, named CDNR. By generating random walks from a structurally rich domain and transferring the knowledge on the random walks across domains, it enables a network representation for the structurally scarce domain as well. Specifically, CDNR is realized by a cross-domain two-layer node-scale balance algorithm and a cross-domain two-layer knowledge transfer algorithm in the framework of cross-domain two-layer random walk learning. Experiments on various real-world datasets demonstrate the effectiveness of CDNR for universal networks in an unsupervised way.

Open Set Domain Adaptation: Theoretical Bound and Algorithm

Jul 19, 2019

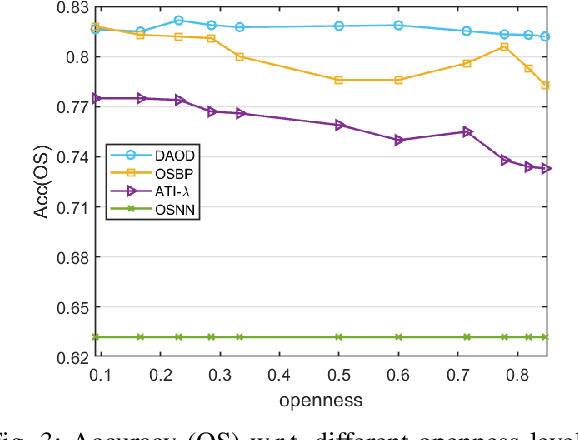

Abstract:Unsupervised domain adaptation for classification tasks has achieved great progress in leveraging the knowledge in a labeled (source) domain to improve the task performance in an unlabeled (target) domain by mitigating the effect of distribution discrepancy. However, most existing methods can only handle unsupervised closed set domain adaptation (UCSDA), where the source and target domains share the same label set. In this paper, we target a more challenging but realistic setting: unsupervised open set domain adaptation (UOSDA), where the target domain has unknown classes that the source domain does not have. This study is the first to give the generalization bound of open set domain adaptation through theoretically investigating the risk of the target classifier on the unknown classes. The proposed generalization bound for open set domain adaptation has a special term, namely open set difference, which reflects the risk of the target classifier on unknown classes. According to this generalization bound, we propose a novel and theoretically guided unsupervised open set domain adaptation method: Distribution Alignment with Open Difference (DAOD), which is based on the structural risk minimization principle and open set difference regularization. The experiments on several benchmark datasets show the superior performance of the proposed UOSDA method compared with the state-of-the-art methods in the literature.

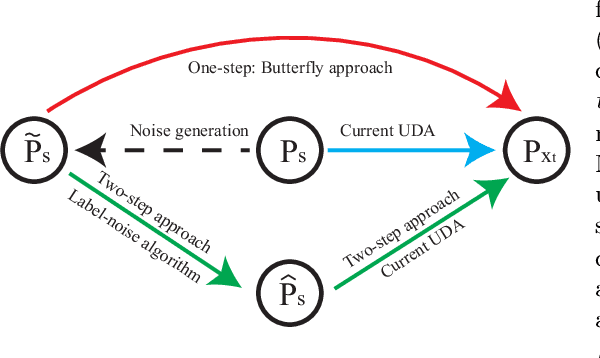

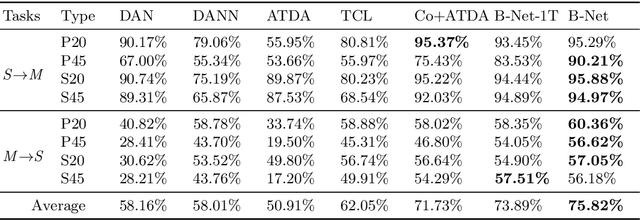

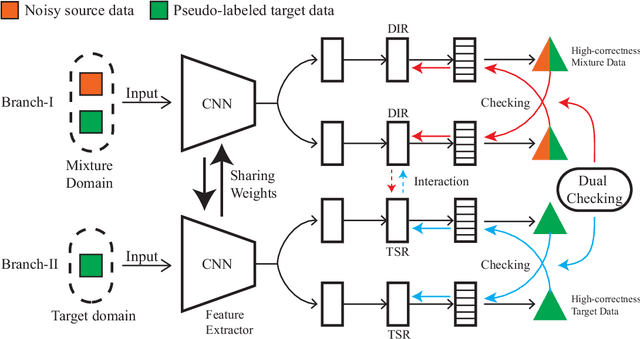

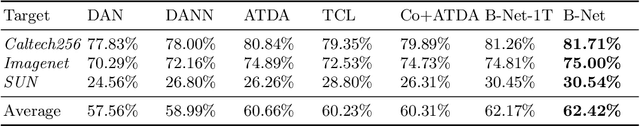

Butterfly: A Panacea for All Difficulties in Wildly Unsupervised Domain Adaptation

May 23, 2019

Abstract:In unsupervised domain adaptation (UDA), classifiers for the target domain (TD) are trained with clean labeled data from the source domain (SD) and unlabeled data from TD. However, in the wild, it is hard to acquire a large amount of perfectly clean labeled data in SD given a limited budget. Hence, we consider a new, more realistic and more challenging problem setting, where classifiers have to be trained with noisy labeled data from SD and unlabeled data from TD; we name it wildly UDA (WUDA). We show that WUDA provably ruins all UDA methods if label noise in SD is not taken care of, and to this end, we propose a Butterfly framework, a panacea for all difficulties in WUDA. Butterfly maintains four models (e.g., deep networks) simultaneously, where two take care of all adaptations (i.e., noisy-to-clean, labeled-to-unlabeled, and SD-to-TD-distributional) and then the other two can focus on classification in TD. As a consequence, Butterfly possesses all the necessary components for all the challenges in WUDA. Experiments demonstrate that under WUDA, Butterfly significantly outperforms existing baseline methods.

Deep Uncertainty Learning: A Machine Learning Approach for Weather Forecasting

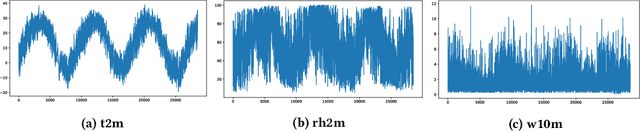

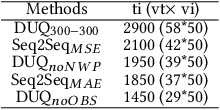

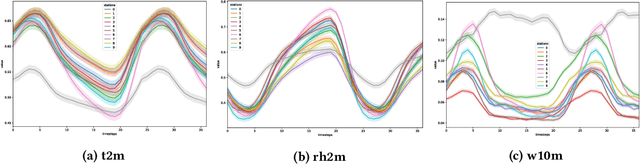

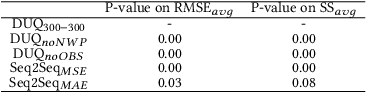

Dec 27, 2018

Abstract:This paper uses weather forecasting as an application background to illustrate the technique of deep uncertainty learning (DUL). Weather forecasting has had great significance throughout human history and is traditionally approached through numerical weather prediction (NWP), in which the atmosphere is modelled as differential equations. However, due to the instability of these differential equations in the presence of uncertainties, weather forecasting through numerical simulations may not be reliable. This paper explores weather forecasting as a data mining problem. We build a deep prediction interval (DPI) model based on sequence-to-sequence (seq2seq) that predicts spatio-temporal patterns of meteorological variables over the next 37 hours and incorporates the informative knowledge of NWP. A major contribution and surprising finding in the training process of DPI is that training with the mean variance error (MVE) loss instead of the mean square error loss can significantly improve the generalization of point estimation, which has not been reported in previous research. We think this phenomenon can be regarded as a new kind of regularization that is not only on a par with the well-known Dropout but also provides more uncertainty information, and hence yields a win-win situation. Based on a single DPI model, we then build a deep ensemble. We evaluate our method on a dataset from 10 real-world weather stations in Beijing, China. Experimental results show that DPI generalizes better than traditional point estimation and that the deep ensemble can further improve performance. The deep ensemble method also achieved a top-2 online score ranking in the AI Challenger 2018 competition. It reduces error by up to 56% compared with NWP.
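To make the contrast between the two losses concrete, here is a minimal sketch. It assumes the MVE loss takes the common form of a Gaussian negative log-likelihood over a predicted mean and variance (constant term dropped); the paper's exact formulation may differ, and all function names here are hypothetical.

```python
import math


def mse_loss(targets, means):
    """Mean square error: penalizes only the point estimate."""
    return sum((y - mu) ** 2 for y, mu in zip(targets, means)) / len(targets)


def mve_loss(targets, means, variances):
    """Assumed MVE form: Gaussian negative log-likelihood, constant dropped.

    Each residual is scaled by the predicted variance, and the log term
    keeps the model from inflating the variance without limit.
    """
    total = 0.0
    for y, mu, var in zip(targets, means, variances):
        total += 0.5 * math.log(var) + 0.5 * (y - mu) ** 2 / var
    return total / len(targets)


def prediction_interval(mean, variance, z=1.96):
    """Symmetric interval around the mean; z=1.96 is roughly 95% for a Gaussian."""
    half_width = z * math.sqrt(variance)
    return mean - half_width, mean + half_width


if __name__ == "__main__":
    y_true = [20.0, 21.5, 19.0]
    y_mean = [19.5, 22.0, 21.0]
    y_var = [0.5, 0.5, 4.0]  # larger variance where the model is less certain
    print(mse_loss(y_true, y_mean))
    print(mve_loss(y_true, y_mean, y_var))
    print(prediction_interval(y_mean[2], y_var[2]))
```

Under this form a model can lower its loss on hard examples by predicting a larger variance, which is one intuition for why such a loss could behave like a regularizer while also producing interval estimates.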

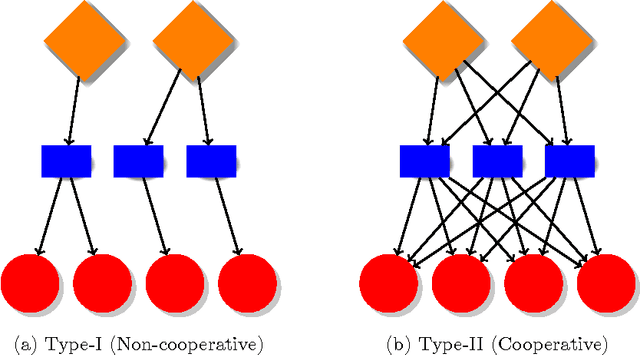

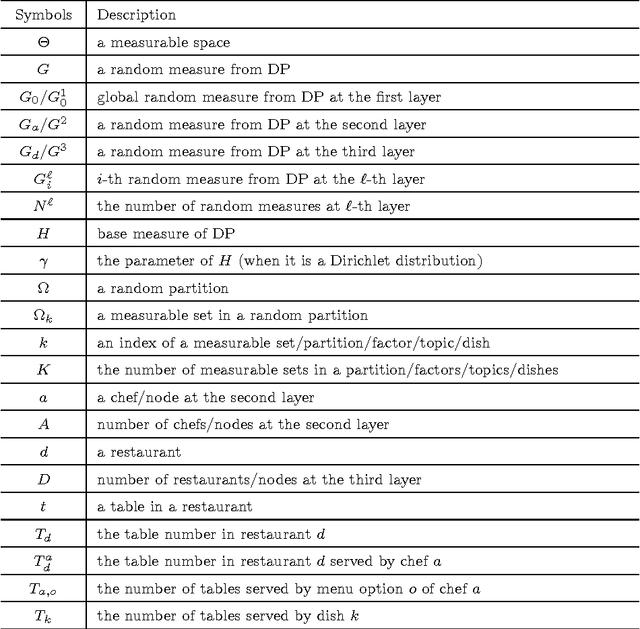

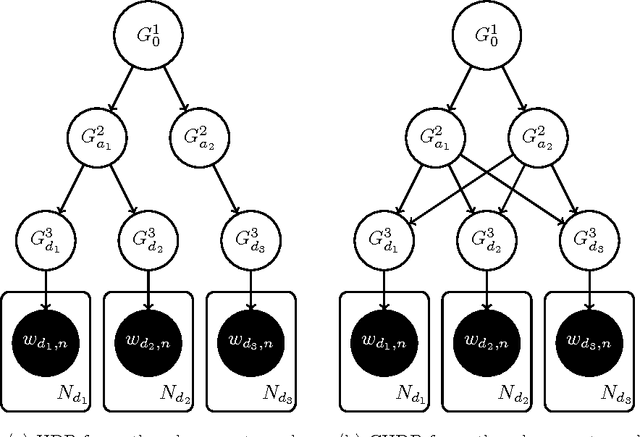

Cooperative Hierarchical Dirichlet Processes: Superposition vs. Maximization

Jul 18, 2017

Abstract:The cooperative hierarchical structure is a common and significant data structure observed in, or adopted by, many research areas, such as: text mining (author-paper-word) and multi-label classification (label-instance-feature). Renowned Bayesian approaches for cooperative hierarchical structure modeling are mostly based on topic models. However, these approaches suffer from a serious issue in that the number of hidden topics/factors needs to be fixed in advance and an inappropriate number may lead to overfitting or underfitting. One elegant way to resolve this issue is Bayesian nonparametric learning, but existing work in this area still cannot be applied to cooperative hierarchical structure modeling. In this paper, we propose a cooperative hierarchical Dirichlet process (CHDP) to fill this gap. Each node in a cooperative hierarchical structure is assigned a Dirichlet process to model its weights on the infinite hidden factors/topics. Together with measure inheritance from hierarchical Dirichlet process, two kinds of measure cooperation, i.e., superposition and maximization, are defined to capture the many-to-many relationships in the cooperative hierarchical structure. Furthermore, two constructive representations for CHDP, i.e., stick-breaking and international restaurant process, are designed to facilitate the model inference. Experiments on synthetic and real-world data with cooperative hierarchical structures demonstrate the properties and the ability of CHDP for cooperative hierarchical structure modeling and its potential for practical application scenarios.

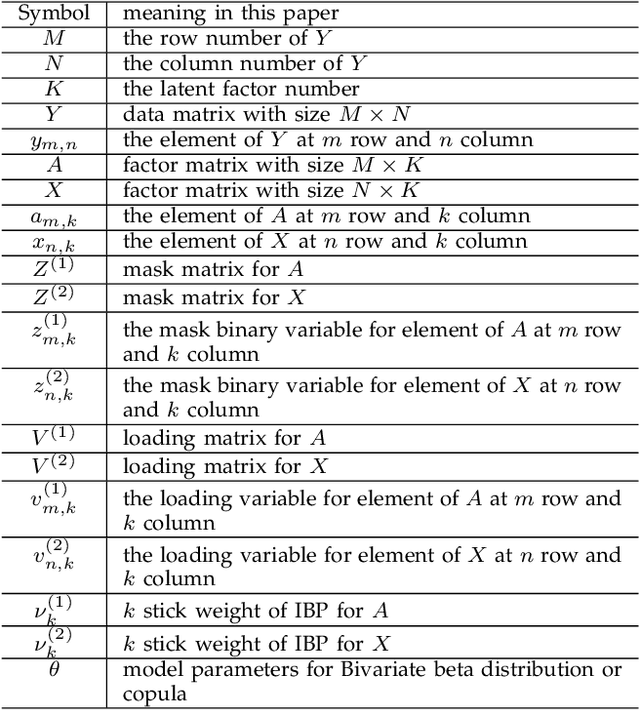

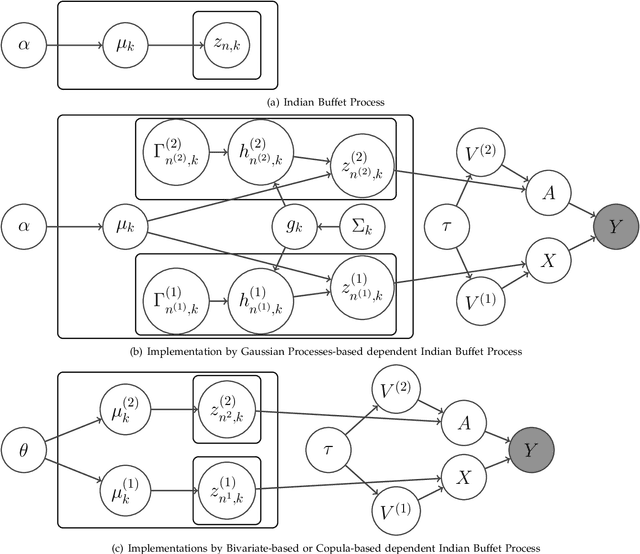

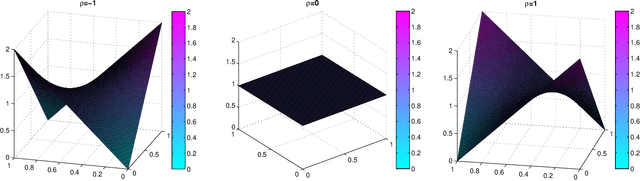

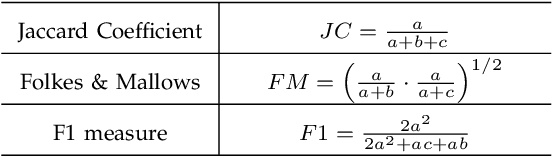

Dependent Indian Buffet Process-based Sparse Nonparametric Nonnegative Matrix Factorization

Jul 12, 2015

Abstract:Nonnegative Matrix Factorization (NMF) aims to factorize a matrix into two optimized nonnegative matrices appropriate for the intended applications. The method has been widely used for unsupervised learning tasks, including recommender systems (rating matrix of users by items) and document clustering (weighting matrix of papers by keywords). However, traditional NMF methods typically assume the number of latent factors (i.e., dimensionality of the loading matrices) to be fixed. This assumption makes them inflexible for many applications. In this paper, we propose a nonparametric NMF framework to mitigate this issue by using dependent Indian Buffet Processes (dIBP). In a nutshell, we apply a correlation function for the generation of two stick weights associated with each pair of columns of loading matrices, while still maintaining their respective marginal distribution specified by IBP. As a consequence, the generation of two loading matrices will be column-wise (indirectly) correlated. Under this same framework, two classes of correlation function are proposed: (1) using a bivariate beta distribution and (2) using a copula function. Both methods allow us to adapt our work to various applications by flexibly choosing appropriate parameter settings. Compared with other state-of-the-art approaches in this area, such as Gaussian Process (GP)-based dIBP, our work is seen to be much more flexible in terms of allowing the two corresponding binary matrix columns to have greater variations in their non-zero entries. Our experiments on real-world and synthetic datasets show that the three proposed models perform well on the document clustering task compared with standard NMF, without predefining the dimension of the factor matrices, and that the bivariate beta distribution-based and copula-based models have better flexibility than the GP-based model.
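For context, the sketch below shows the traditional fixed-rank NMF that the abstract takes as its starting point, using the standard multiplicative update rules for squared error. It is not the dIBP-based model; the number of factors `k` must be chosen in advance, which is exactly the limitation the paper targets. Helper names and the toy matrix are illustrative assumptions.

```python
import random


def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def frobenius_error(v, w, h):
    """Squared Frobenius norm of V - WH."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0])))


def nmf(v, k, n_iter=200, seed=0, eps=1e-9):
    """Factorize nonnegative V (n x m) into W (n x k) and H (k x m)."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(k)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(k)]
    for _ in range(n_iter):
        # H <- H * (W^T V) / (W^T W H), element-wise
        wt = transpose(w)
        num = matmul(wt, v)
        den = matmul(matmul(wt, w), h)
        h = [[h[a][j] * num[a][j] / (den[a][j] + eps) for j in range(m)]
             for a in range(k)]
        # W <- W * (V H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num = matmul(v, ht)
        den = matmul(w, matmul(h, ht))
        w = [[w[i][a] * num[i][a] / (den[i][a] + eps) for a in range(k)]
             for i in range(n)]
    return w, h


if __name__ == "__main__":
    ratings = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [1.0, 0.0, 1.0], [2.0, 2.0, 4.0]]
    w, h = nmf(ratings, k=2)
    print(frobenius_error(ratings, w, h))
```

Because the updates only multiply by nonnegative ratios, both factors stay nonnegative throughout. A nonparametric approach such as the one in the abstract instead lets the data determine how many columns of the loading matrices are active.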
