Germain Forestier

Deep Learning for Skeleton Based Human Motion Rehabilitation Assessment: A Benchmark

Jul 28, 2025

Abstract:Automated assessment of human motion plays a vital role in rehabilitation, enabling objective evaluation of patient performance and progress. Unlike general human activity recognition, rehabilitation motion assessment focuses on analyzing the quality of movement within the same action class, requiring the detection of subtle deviations from ideal motion. Recent advances in deep learning and video-based skeleton extraction have opened new possibilities for accessible, scalable motion assessment using affordable devices such as smartphones or webcams. However, the field lacks standardized benchmarks, consistent evaluation protocols, and reproducible methodologies, limiting progress and comparability across studies. In this work, we address these gaps by (i) aggregating existing rehabilitation datasets into a unified archive called Rehab-Pile, (ii) proposing a general benchmarking framework for evaluating deep learning methods in this domain, and (iii) conducting extensive benchmarking of multiple architectures across classification and regression tasks. All datasets and implementations are released to the community to support transparency and reproducibility. This paper aims to establish a solid foundation for future research in automated rehabilitation assessment and foster the development of reliable, accessible, and personalized rehabilitation solutions. The datasets, source-code and results of this article are all publicly available.

Look Into the LITE in Deep Learning for Time Series Classification

Sep 04, 2024

Abstract:Deep learning models have been shown to be a powerful solution for Time Series Classification (TSC). State-of-the-art architectures, while producing promising results on the UCR and the UEA archives, present a high number of trainable parameters. This can lead to long training with high CO2 emission, power consumption and possible increase in the number of FLoating-point Operations Per Second (FLOPS). In this paper, we present a new architecture for TSC, the Light Inception with boosTing tEchnique (LITE) with only 2.34% of the number of parameters of the state-of-the-art InceptionTime model, while preserving performance. This architecture, with only 9,814 trainable parameters due to the usage of DepthWise Separable Convolutions (DWSC), is boosted by three techniques: multiplexing, custom filters, and dilated convolution. The LITE architecture, trained on the UCR, is 2.78 times faster than InceptionTime and consumes 2.79 times less CO2 and power. To evaluate the performance of the proposed architecture on multivariate time series data, we adapt LITE to handle multivariate time series and call this version LITEMV. To bring theory into application, we also conducted experiments using LITEMV on multivariate time series representing human rehabilitation movements, showing that LITEMV not only is the most efficient model but also the best performing for this application on the Kimore dataset, a skeleton based human rehabilitation exercises dataset. Moreover, to address the interpretability of LITEMV, we present a study using Class Activation Maps to understand the classification decision taken by the model during evaluation.
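The parameter savings that the abstract attributes to DepthWise Separable Convolutions can be illustrated with a small weight count. The sketch below is not the LITE architecture: the layer sizes are hypothetical values chosen only for illustration, bias terms are ignored, and the function names are made up for this example.

```python
def standard_conv1d_params(in_channels, out_channels, kernel_size):
    """Weight count of a standard 1D convolution (bias terms ignored)."""
    return kernel_size * in_channels * out_channels


def depthwise_separable_conv1d_params(in_channels, out_channels, kernel_size):
    """Weight count of a depthwise convolution followed by a pointwise one."""
    depthwise = kernel_size * in_channels   # one filter per input channel
    pointwise = in_channels * out_channels  # 1x1 convolution mixing channels
    return depthwise + pointwise


# Hypothetical layer sizes (assumption, not taken from the paper).
standard = standard_conv1d_params(in_channels=32, out_channels=32, kernel_size=40)
separable = depthwise_separable_conv1d_params(in_channels=32, out_channels=32, kernel_size=40)
print(standard, separable, separable / standard)
```

For these sizes the separable layer needs 2,304 weights against 40,960 for the standard layer, which shows why the saving grows with the kernel length and the number of output channels.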

Establishing a Unified Evaluation Framework for Human Motion Generation: A Comparative Analysis of Metrics

May 13, 2024

Abstract:The development of generative artificial intelligence for human motion generation has expanded rapidly, necessitating a unified evaluation framework. This paper presents a detailed review of eight evaluation metrics for human motion generation, highlighting their unique features and shortcomings. We propose standardized practices through a unified evaluation setup to facilitate consistent model comparisons. Additionally, we introduce a novel metric that assesses diversity in temporal distortion by analyzing warping diversity, thereby enhancing the evaluation of temporal data. We also conduct experimental analyses of three generative models using a publicly available dataset, offering insights into the interpretation of each metric in specific case scenarios. Our goal is to offer a clear, user-friendly evaluation framework for newcomers, complemented by publicly accessible code.

The impact of data set similarity and diversity on transfer learning success in time series forecasting

Apr 09, 2024

Abstract:Models pre-trained on a similar or diverse source data set have become pivotal in enhancing the efficiency and accuracy of time series forecasting on target data sets by leveraging transfer learning. While benchmarks validate the performance of model generalization on various target data sets, there is no structured research providing similarity and diversity measures explaining which characteristics of source and target data lead to transfer learning success. Our study pioneers the systematic evaluation of the impact of source-target similarity and source diversity on zero-shot and fine-tuned forecasting outcomes in terms of accuracy, bias, and uncertainty estimation. We investigate these dynamics using pre-trained neural networks across five public source data sets, applied in forecasting five target data sets, including real-world wholesale data. We identify two feature-based similarity and diversity measures showing that source-target similarity enhances forecasting accuracy and reduces bias, while source diversity enhances forecasting accuracy and uncertainty estimation and increases the bias.

Finding Foundation Models for Time Series Classification with a PreText Task

Nov 24, 2023

Abstract:Over the past decade, Time Series Classification (TSC) has gained increasing attention. While various methods were explored, deep learning, particularly through Convolutional Neural Networks (CNNs), stands out as an effective approach. However, due to the limited availability of training data, defining a foundation model for TSC that overcomes the overfitting problem is still a challenging task. The UCR archive, encompassing a wide spectrum of datasets ranging from motion recognition to ECG-based heart disease detection, serves as a prime example for exploring this issue in diverse TSC scenarios. In this paper, we address the overfitting challenge by introducing pre-trained domain foundation models. A key aspect of our methodology is a novel pretext task that spans multiple datasets. This task is designed to identify the originating dataset of each time series sample, with the goal of creating flexible convolution filters that can be applied across different datasets. The research process consists of two phases: a pre-training phase where the model acquires general features through the pretext task, and a subsequent fine-tuning phase for specific dataset classifications. Our extensive experiments on the UCR archive demonstrate that this pre-training strategy significantly outperforms the conventional training approach without pre-training. This strategy effectively reduces overfitting in small datasets and provides an efficient route for adapting these models to new datasets, thus advancing the capabilities of deep learning in TSC.
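The pretext task described in the abstract, predicting which dataset a series came from, amounts to relabeling pooled samples with the index of their source dataset. The following is a minimal sketch of that labeling step only; the function name, the toy dataset names, and the values are hypothetical, and the model, pre-training, and fine-tuning phases are not shown.

```python
def build_pretext_samples(datasets):
    """Pool series from several datasets and label each with its dataset index.

    `datasets` maps a dataset name to a list of time series (lists of floats).
    Original class labels are deliberately ignored: the pretext target is
    only the dataset of origin.
    """
    names = sorted(datasets)  # fixed order so label indices are reproducible
    samples, labels = [], []
    for index, name in enumerate(names):
        for series in datasets[name]:
            samples.append(series)
            labels.append(index)
    return samples, labels, names


# Toy data (assumption): two tiny "datasets" standing in for archive entries.
toy = {
    "ECG": [[0.1, 0.2, 0.1], [0.3, 0.4, 0.3]],
    "Motion": [[1.0, 2.0, 3.0]],
}
samples, labels, names = build_pretext_samples(toy)
print(names, labels)
```

A classifier trained on `samples` and `labels` would then be fine-tuned on each dataset's own class labels in the second phase the abstract describes.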

ShapeDBA: Generating Effective Time Series Prototypes using ShapeDTW Barycenter Averaging

Sep 28, 2023

Abstract:Time series data can be found in almost every domain, ranging from the medical field to manufacturing and wireless communication. Generating realistic and useful exemplars and prototypes is a fundamental data analysis task. In this paper, we investigate a novel approach to generating realistic and useful exemplars and prototypes for time series data. Our approach uses a new form of time series average, the ShapeDTW Barycentric Average. We therefore turn our attention to accurately generating time series prototypes with a novel approach. The existing time series prototyping approaches, such as DTW Barycentering Average (DBA) and SoftDBA, rely on the Dynamic Time Warping (DTW) similarity measure. These approaches suffer from a common problem of generating out-of-distribution artifacts in their prototypes. This is mostly caused by the DTW variant used and its inability to detect neighborhood similarities; instead, it detects absolute similarities. Our proposed method, ShapeDBA, uses the ShapeDTW variant of DTW, which overcomes this issue. We chose time series clustering, a popular form of time series analysis, to evaluate the outcome of ShapeDBA compared to the other prototyping approaches. Coupled with the k-means clustering algorithm, and evaluated on a total of 123 datasets from the UCR archive, our proposed averaging approach is able to achieve new state-of-the-art results in terms of Adjusted Rand Index.
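For readers unfamiliar with the measure that DBA and SoftDBA build on, here is a minimal sketch of classic DTW for univariate series. It is not ShapeDTW or ShapeDBA: it compares individual point values (the "absolute similarities" the abstract refers to) rather than local neighborhoods. The squared-difference point cost and the function name are assumptions made for this example.

```python
def dtw_distance(a, b):
    """Classic Dynamic Time Warping cost between two univariate series.

    Uses squared differences between single points as the local cost
    (an assumption for this sketch) and the standard three-way recursion.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best alignment cost of a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = (a[i - 1] - b[j - 1]) ** 2
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b stays
                cost[i][j - 1],      # b advances, a stays
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


# A repeated point can be absorbed by warping, so these two series align at no cost.
print(dtw_distance([0, 0, 1], [0, 1]))
```

Because the local cost looks at one point at a time, two points with equal values are matched regardless of the shape around them, which is the limitation ShapeDTW is said to address.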

An Approach to Multiple Comparison Benchmark Evaluations that is Stable Under Manipulation of the Comparate Set

May 19, 2023

Abstract:The measurement of progress using benchmark evaluations is ubiquitous in computer science and machine learning. However, common approaches to analyzing and presenting the results of benchmark comparisons of multiple algorithms over multiple datasets, such as the critical difference diagram introduced by Demšar (2006), have important shortcomings and, we show, are open to both inadvertent and intentional manipulation. To address these issues, we propose a new approach to presenting the results of benchmark comparisons, the Multiple Comparison Matrix (MCM), that prioritizes pairwise comparisons and precludes the means of manipulating experimental results in existing approaches. MCM is implemented in Python and is publicly available. MCM can be used to show the results of an all-pairs comparison, or to show the results of a comparison between one or more selected algorithms and the state of the art.
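The idea of a comparison that does not change when other algorithms are added or removed can be sketched with purely pairwise statistics. This is not the MCM implementation: the choice of statistics (mean difference and win/tie/loss counts), the function name, and the toy scores are assumptions made for illustration.

```python
def pairwise_summary(scores, first, second):
    """Summarize `first` against `second` using only their own scores.

    `scores` maps an algorithm name to its per-dataset scores, with the
    datasets in the same order for every algorithm.
    """
    diffs = [x - y for x, y in zip(scores[first], scores[second])]
    return {
        "mean_difference": sum(diffs) / len(diffs),
        "wins": sum(d > 0 for d in diffs),
        "ties": sum(d == 0 for d in diffs),
        "losses": sum(d < 0 for d in diffs),
    }


# Toy per-dataset accuracies (assumption, not real results).
scores = {
    "A": [0.75, 0.5, 0.5],
    "B": [0.5, 0.5, 0.75],
}
before = pairwise_summary(scores, "A", "B")

# Adding a third comparate leaves the A-versus-B summary untouched.
scores["C"] = [1.0, 1.0, 1.0]
after = pairwise_summary(scores, "A", "B")
print(before, before == after)
```

Rank-based summaries computed over all algorithms at once do not have this property, since each algorithm's rank depends on which other algorithms are included.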

Deep Learning for Time Series Classification and Extrinsic Regression: A Current Survey

Feb 06, 2023

Abstract:Time Series Classification and Extrinsic Regression are important and challenging machine learning tasks. Deep learning has revolutionized natural language processing and computer vision and holds great promise in other fields such as time series analysis where the relevant features must often be abstracted from the raw data but are not known a priori. This paper surveys the current state of the art in the fast-moving field of deep learning for time series classification and extrinsic regression. We review different network architectures and training methods used for these tasks and discuss the challenges and opportunities when applying deep learning to time series data. We also summarize two critical applications of time series classification and extrinsic regression, human activity recognition and satellite earth observation.

Surgical Data Science -- from Concepts to Clinical Translation

Oct 30, 2020

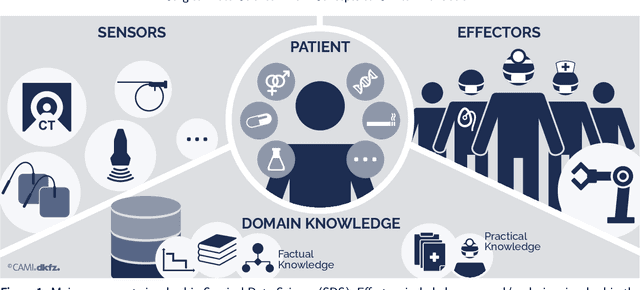

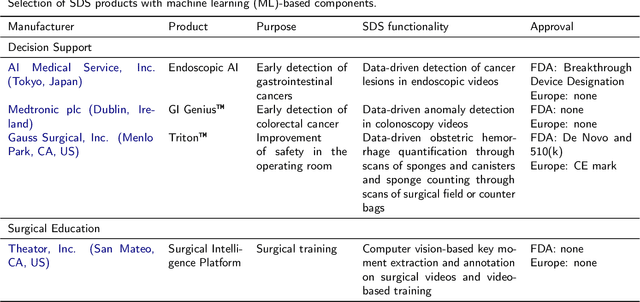

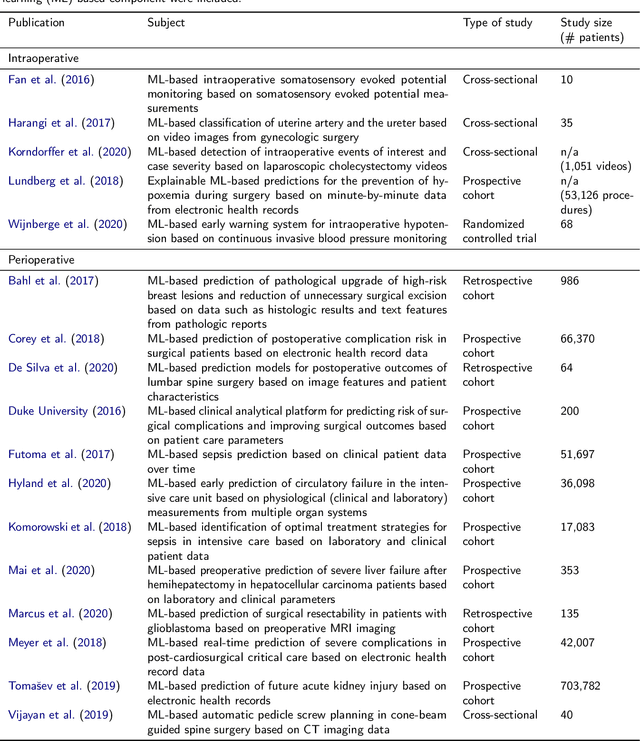

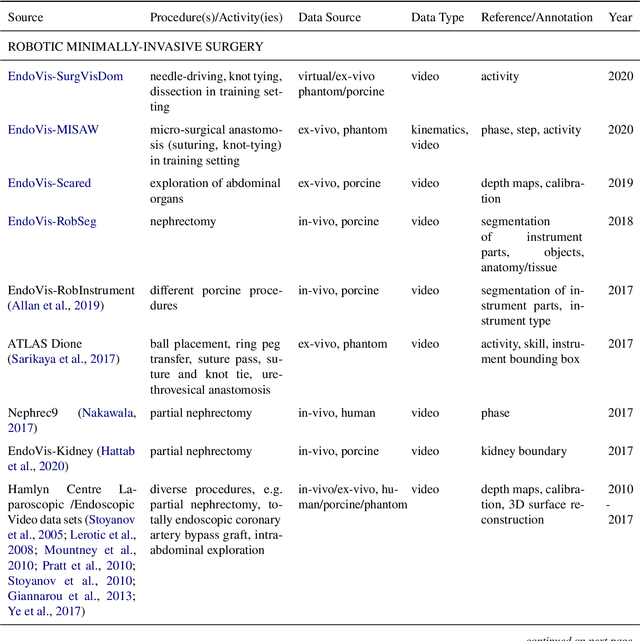

Abstract:Recent developments in data science in general and machine learning in particular have transformed the way experts envision the future of surgery. Surgical data science is a new research field that aims to improve the quality of interventional healthcare through the capture, organization, analysis and modeling of data. While an increasing number of data-driven approaches and clinical applications have been studied in the fields of radiological and clinical data science, translational success stories are still lacking in surgery. In this publication, we shed light on the underlying reasons and provide a roadmap for future advances in the field. Based on an international workshop involving leading researchers in the field of surgical data science, we review current practice, key achievements and initiatives as well as available standards and tools for a number of topics relevant to the field, namely (1) technical infrastructure for data acquisition, storage and access in the presence of regulatory constraints, (2) data annotation and sharing and (3) data analytics. Drawing from this extensive review, we present current challenges for technology development and (4) describe a roadmap for faster clinical translation and exploitation of the full potential of surgical data science.

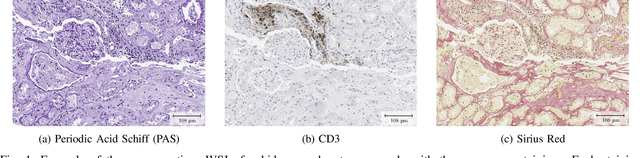

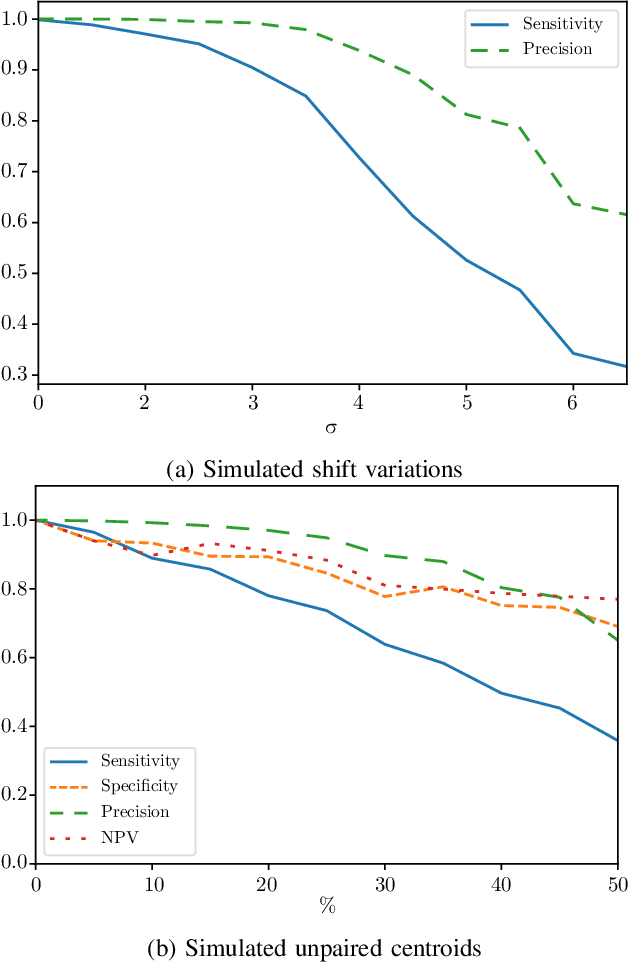

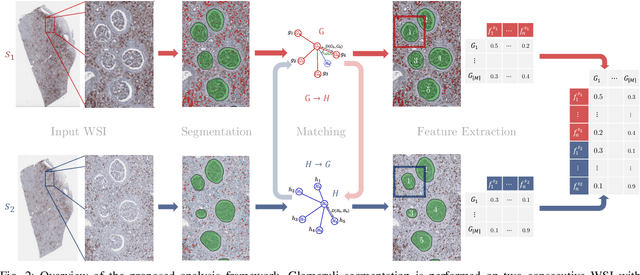

An automatic framework to study the tissue micro-environment of renal glomeruli in differently stained consecutive digital whole slide images

Aug 29, 2020

Abstract:Objective: This article presents an automatic image processing framework to extract quantitative high-level information describing the micro-environment of glomeruli in consecutive whole slide images (WSIs) processed with different staining modalities of patients with chronic kidney rejection after kidney transplantation. Methods: This three-step framework consists of: 1) cell and anatomical structure segmentation based on colour deconvolution and deep learning; 2) fusion of information from different stainings using a newly developed registration algorithm; and 3) feature extraction. Results: Each step of the framework is validated independently both quantitatively and qualitatively by pathologists. An illustration of the different types of features that can be extracted is presented. Conclusion: The proposed generic framework allows for the analysis of the micro-environment surrounding large structures that can be segmented (either manually or automatically). It is independent of the segmentation approach and is therefore applicable to a variety of biomedical research questions. Significance: Chronic tissue remodelling processes after kidney transplantation can result in interstitial fibrosis and tubular atrophy (IFTA) and glomerulosclerosis. This pipeline provides tools to quantitatively analyse, in the same spatial context, information from different consecutive WSIs and help researchers understand the complex underlying mechanisms leading to IFTA and glomerulosclerosis.
