Gan Zheng

Multibeam High Throughput Satellite: Hardware Foundation, Resource Allocation, and Precoding

Aug 01, 2025

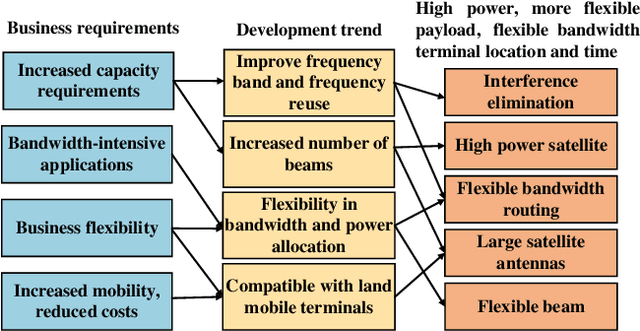

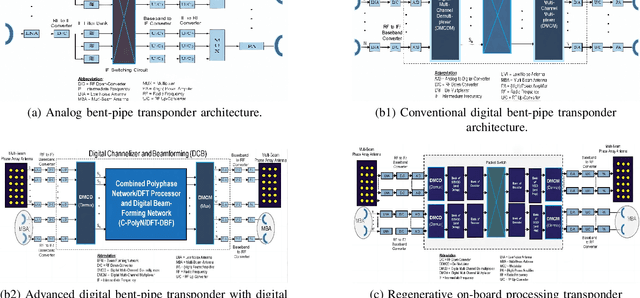

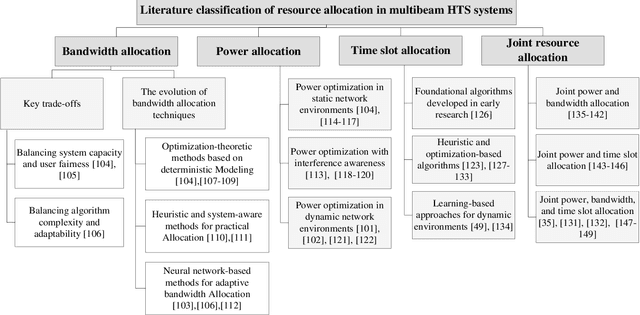

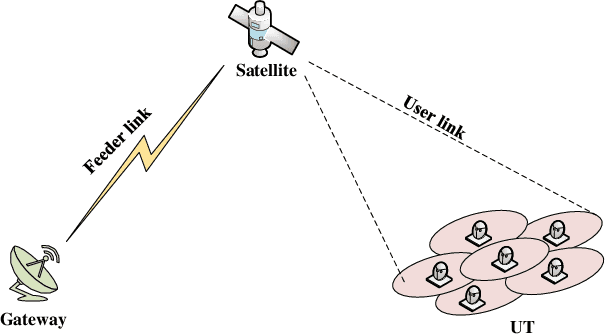

Abstract: With its wide coverage and uninterrupted service, satellite communication is a critical technology for next-generation 6G communications. High throughput satellite (HTS) systems, utilizing multipoint beam and frequency multiplexing techniques, enable satellite communication capacity of up to Tbps to meet the growing traffic demand. Therefore, it is imperative to review the state of the art of multibeam HTS systems and identify their associated challenges and perspectives. Firstly, we summarize the multibeam HTS hardware foundations, including ground station systems, on-board payloads, and user terminals. Subsequently, we review the flexible on-board radio resource allocation approaches of bandwidth, power, time slot, and joint allocation schemes of HTS systems to optimize resource utilization and cater to non-uniform service demand. Additionally, we survey multibeam precoding methods for the HTS system to achieve full-frequency reuse and interference cancellation, which are classified according to different deployments such as single gateway precoding, multiple gateway precoding, on-board precoding, and hybrid on-board/on-ground precoding. Finally, we discuss the challenges related to Q/V band link outage, time and frequency synchronization of gateways, the accuracy of channel state information (CSI), payload light-weight development, and the application of deep learning (DL). Research on these topics will contribute to enhancing the performance of HTS systems and finally delivering high-speed data to areas underserved by terrestrial networks.

Attention-based Adversarial Robust Distillation in Radio Signal Classifications for Low-Power IoT Devices

Jun 13, 2025

Abstract: Due to the great success of transformers in many applications such as natural language processing and computer vision, transformers have been successfully applied in automatic modulation classification. We have shown that transformer-based radio signal classification is vulnerable to imperceptible and carefully crafted attacks called adversarial examples. Therefore, we propose a defense system against adversarial examples in transformer-based modulation classifications. Considering the need for computationally efficient architecture, particularly for Internet of Things (IoT)-based applications or operation of devices in environments where the power supply is limited, we propose a compact transformer for modulation classification. The advantages of robust training such as adversarial training in transformers may not be attainable in compact transformers. By demonstrating this, we propose a novel compact transformer that can enhance robustness in the presence of adversarial attacks. The new method is aimed at transferring the adversarial attention map from the robustly trained large transformer to a compact transformer. The proposed method outperforms the state-of-the-art techniques for the considered white-box scenarios including fast gradient method and projected gradient descent attacks. We have provided reasoning about the underlying working mechanisms and investigated the transferability of the adversarial examples between different architectures. The proposed method has the potential to protect the transformer from the transferability of adversarial examples.

A Neural Rejection System Against Universal Adversarial Perturbations in Radio Signal Classification

Jun 13, 2025

Abstract: Advantages of deep learning over traditional methods have been demonstrated for radio signal classification in recent years. However, various researchers have discovered that even small but intentional feature perturbations, known as adversarial examples, can significantly deteriorate the performance of deep learning based radio signal classification. Among various kinds of adversarial examples, the universal adversarial perturbation has gained considerable attention because it is data independent and hence a practical strategy to fool radio signal classification with a high success rate. Therefore, in this paper, we propose and investigate a defense system, called the neural rejection system, against universal adversarial perturbations, and evaluate its performance by generating white-box universal adversarial perturbations. We show that the proposed neural rejection system is able to defend against universal adversarial perturbations with significantly higher accuracy than the undefended deep neural network.

Cell-free Fluid Antenna Multiple Access Networks

Apr 29, 2025

Abstract: Fluid antenna enables position reconfigurability that gives transceiver access to a high-resolution spatial signal and the ability to avoid interference through the ups and downs of fading channels. Previous studies investigated this fluid antenna multiple access (FAMA) approach in a single-cell setup only. In this paper, we consider a cell-free network architecture in which users are associated with the nearest base stations (BSs) and all users share the same physical channel. Each BS has multiple fixed antennas that employ maximum ratio transmission (MRT) to beam to its associated users while each user relies on its fluid antenna system (FAS) on one radio frequency (RF) chain to overcome the inter-user interference. Our aim is to analyze the outage probability performance of such a cell-free FAMA network when both large- and small-scale fading effects are considered. To do so, we derive the distribution of the received magnitude for a typical user and then the interference distribution under both fast and slow port switching techniques. The outage probability is finally obtained in integral form in each case. Numerical results demonstrate that in an interference-limited situation, although fast port switching is typically understood as the superior method for FAMA, slow port switching emerges as a more effective solution when there is a large antenna array at the BS. Moreover, it is revealed that FAS at each user can serve to greatly reduce the burden of BS in terms of both antenna costs and CSI estimation overhead, thereby enhancing the scalability of cell-free networks.
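
As a rough illustration of the maximum ratio transmission (MRT) step named in the abstract above, the sketch below forms unit-norm MRT weights for a single user. This is the textbook single-user form, not the paper's cell-free system model; the function names and the 3-antenna channel values are assumptions made purely for illustration.

```python
import math

def mrt_weights(h):
    """Return unit-norm MRT weights w = conj(h) / ||h|| for channel vector h."""
    norm = math.sqrt(sum(abs(x) ** 2 for x in h))
    if norm == 0:
        raise ValueError("channel vector must be non-zero")
    return [x.conjugate() / norm for x in h]

def effective_gain(h, w):
    """Magnitude of the effective scalar channel sum_k h_k * w_k."""
    return abs(sum(hk * wk for hk, wk in zip(h, w)))

# Hypothetical 3-antenna channel (illustrative values only, not from the paper).
h = [3 + 4j, 0 + 0j, 0 - 12j]
w = mrt_weights(h)
gain = effective_gain(h, w)  # equals ||h|| for MRT with unit-norm weights
```

With unit-norm weights, the effective channel magnitude equals the norm of `h`, which is why MRT is the gain-maximizing choice when interference is ignored at the transmitter.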

A Hybrid Quantum-Classical Autoencoder Framework for End-to-End Communication Systems

Dec 28, 2024

Abstract: This paper investigates the application of quantum machine learning to end-to-end (E2E) communication systems in wireless fading scenarios. We introduce a novel hybrid quantum-classical autoencoder architecture that combines parameterized quantum circuits with classical deep neural networks (DNNs). Specifically, we propose a hybrid quantum-classical autoencoder (QAE) framework to optimize the E2E communication system. Our results demonstrate the feasibility of the proposed hybrid system, and reveal that it is the first work that can achieve comparable block error rate (BLER) performance to classical DNN-based and conventional channel coding schemes, while significantly reducing the number of trainable parameters. Additionally, the proposed QAE exhibits steady and superior BLER convergence over the classical autoencoder baseline.

Dynamic Spectrum Access for Ambient Backscatter Communication-assisted D2D Systems with Quantum Reinforcement Learning

Oct 23, 2024

Abstract: Spectrum access is an essential problem in device-to-device (D2D) communications. However, with the recent growth in the number of mobile devices, the wireless spectrum is becoming scarce, resulting in low spectral efficiency for D2D communications. To address this problem, this paper aims to integrate the ambient backscatter communication technology into D2D devices to allow them to backscatter ambient RF signals to transmit their data when the shared spectrum is occupied by mobile users. Deep reinforcement learning (DRL) can be adopted to obtain the optimal spectrum access policy, i.e., whether to stay idle, access the shared spectrum and perform active transmissions, or backscatter ambient RF signals for transmissions, so as to maximize the average throughput for D2D users. However, DRL-based solutions may require long training time due to the curse of dimensionality issue as well as complex deep neural network architectures. For that, we develop a novel quantum reinforcement learning (RL) algorithm that can achieve a faster convergence rate with fewer training parameters compared to DRL thanks to the quantum superposition and quantum entanglement principles. Specifically, instead of using conventional deep neural networks, the proposed quantum RL algorithm uses a parametrized quantum circuit to approximate an optimal policy. Extensive simulations then demonstrate that the proposed solution not only can significantly improve the average throughput of D2D devices when the shared spectrum is busy but also can achieve much better performance in terms of convergence rate and learning complexity compared to existing DRL-based methods.

Countermeasures Against Adversarial Examples in Radio Signal Classification

Jul 09, 2024

Abstract: Deep learning algorithms have been shown to be powerful in many communication network design problems, including automatic modulation classification. However, they are vulnerable to carefully crafted attacks called adversarial examples. Hence, the reliance of wireless networks on deep learning algorithms poses a serious threat to the security and operation of wireless networks. In this letter, we propose for the first time a countermeasure against adversarial examples in modulation classification. Our countermeasure is based on a neural rejection technique, augmented by label smoothing and Gaussian noise injection, that allows adversarial examples to be detected and rejected with high accuracy. Our results demonstrate that the proposed countermeasure can protect deep-learning based modulation classification systems against adversarial examples.
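
The abstract above names label smoothing and Gaussian noise injection without giving their exact form. The sketch below shows the standard uniform variant of label smoothing and additive zero-mean Gaussian noise on input samples; the function names, `eps`, `sigma`, and the sample values are assumptions for illustration, not the letter's actual settings.

```python
import random

def smooth_labels(one_hot, eps=0.1):
    """Uniform label smoothing: (1 - eps) * one_hot + eps / K, K = number of classes."""
    k = len(one_hot)
    return [(1.0 - eps) * y + eps / k for y in one_hot]

def add_gaussian_noise(samples, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to each sample."""
    return [x + rng.gauss(0.0, sigma) for x in samples]

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical 4-class target and 3-sample input (illustrative values only).
target = smooth_labels([0.0, 1.0, 0.0, 0.0], eps=0.1)
noisy = add_gaussian_noise([0.5, -0.5, 1.0], sigma=0.05, rng=rng)
```

The smoothed target still sums to one, so it remains a valid distribution for a cross-entropy loss while discouraging over-confident outputs.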

A Hybrid Training-time and Run-time Defense Against Adversarial Attacks in Modulation Classification

Jul 09, 2024

Abstract: Motivated by the superior performance of deep learning in many applications including computer vision and natural language processing, several recent studies have focused on applying deep neural networks for devising future generations of wireless networks. However, several recent works have pointed out that imperceptible and carefully designed adversarial examples (attacks) can significantly deteriorate the classification accuracy. In this paper, we investigate a defense mechanism based on both training-time and run-time defense techniques for protecting machine learning-based radio signal (modulation) classification against adversarial attacks. The training-time defense consists of adversarial training and label smoothing, while the run-time defense employs a support vector machine-based neural rejection (NR). Considering a white-box scenario and real datasets, we demonstrate that our proposed techniques outperform existing state-of-the-art technologies.

A Data and Model-Driven Deep Learning Approach to Robust Downlink Beamforming Optimization

Jun 05, 2024

Abstract: This paper investigates the optimization of the long-standing probabilistically robust transmit beamforming problem with channel uncertainties in the multiuser multiple-input single-output (MISO) downlink transmission. This problem poses significant analytical and computational challenges. Currently, the state-of-the-art optimization method relies on convex restrictions as tractable approximations to ensure robustness against Gaussian channel uncertainties. However, this method not only exhibits high computational complexity and suffers from the rank relaxation issue but also yields conservative solutions. In this paper, we propose an unsupervised deep learning-based approach that incorporates the sampling of channel uncertainties in the training process to optimize the probabilistic system performance. We introduce a model-driven learning approach that defines a new beamforming structure with trainable parameters to account for channel uncertainties. Additionally, we employ a graph neural network to efficiently infer the key beamforming parameters. We successfully apply this approach to the minimum rate quantile maximization problem subject to outage and total power constraints. Furthermore, we propose a bisection search method to address the more challenging power minimization problem with probabilistic rate constraints by leveraging the aforementioned approach. Numerical results confirm that our approach achieves non-conservative robust performance, higher data rates, greater power efficiency, and faster execution compared to state-of-the-art optimization methods.
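
The abstract above mentions a bisection search that reuses the rate-quantile maximizer to solve the power minimization problem. One plausible reading, sketched below, bisects on the power budget and checks whether the achievable rate quantile meets the target. The function names are hypothetical, and `toy_rate_quantile` is a simple monotone stand-in for the learned model, not the paper's network.

```python
import math

def min_power_bisection(rate_quantile, target_rate, p_low, p_high, tol=1e-6):
    """Smallest power P in [p_low, p_high] with rate_quantile(P) >= target_rate.

    Assumes rate_quantile is non-decreasing in P.
    """
    if rate_quantile(p_high) < target_rate:
        raise ValueError("target rate is infeasible even at p_high")
    while p_high - p_low > tol:
        p_mid = 0.5 * (p_low + p_high)
        if rate_quantile(p_mid) >= target_rate:
            p_high = p_mid  # feasible: try a smaller power budget
        else:
            p_low = p_mid   # infeasible: a larger power budget is needed
    return p_high

def toy_rate_quantile(p):
    """Toy stand-in (an assumption): rate grows as log2(1 + P)."""
    return math.log2(1.0 + p)

p_min = min_power_bisection(toy_rate_quantile, target_rate=2.0,
                            p_low=0.0, p_high=10.0)
```

Bisection applies here only because the achievable rate quantile is assumed to be monotone in the power budget; each iteration then costs a single evaluation of the rate-quantile solver.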

A Low-Cost Multi-Band Waveform Security Framework in Resource-Constrained Communications

Feb 01, 2024

Abstract: Traditional physical layer secure beamforming is achieved via precoding before signal transmission using channel state information (CSI). However, imperfect CSI will compromise the performance with imperfect beamforming and potential information leakage. In addition, multiple RF chains and antennas are needed to support the narrow beam generation, which complicates hardware implementation and is not suitable for resource-constrained Internet-of-Things (IoT) devices. Moreover, with the advancement of hardware and artificial intelligence (AI), low-cost and intelligent eavesdropping on wireless communications is becoming increasingly detrimental. In this paper, we propose a multi-carrier based multi-band waveform-defined security (WDS) framework, independent of CSI and RF chains, to defend against AI eavesdropping. Ideally, the continuous variations of sub-band structures lead to an infinite number of spectral features, which can potentially prevent brute-force eavesdropping. Sub-band spectral pattern information is efficiently constructed at legitimate users via a proposed chaotic sequence generator. A novel security metric, termed signal classification accuracy (SCA), is used to evaluate the security robustness under AI eavesdropping. Communication error probability and complexity are also investigated to show the reliability and practical capability of the proposed framework. Finally, compared to traditional secure beamforming techniques, the proposed multi-band WDS framework reduces power consumption by up to six times.
