Finale Doshi-Velez

Regional Tree Regularization for Interpretability in Black Box Models

Aug 13, 2019

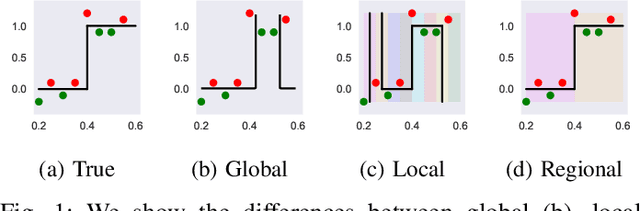

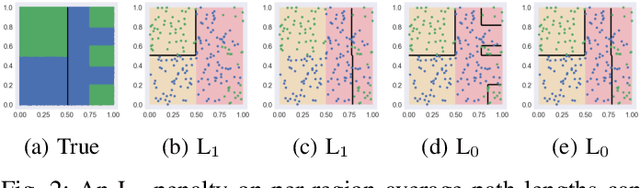

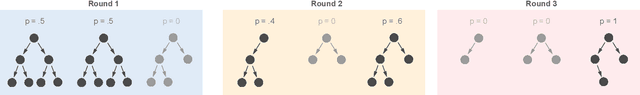

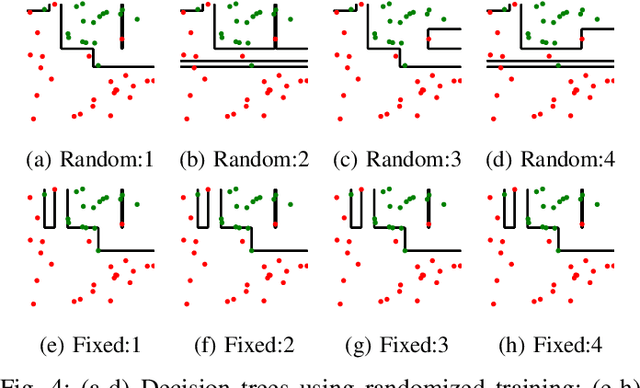

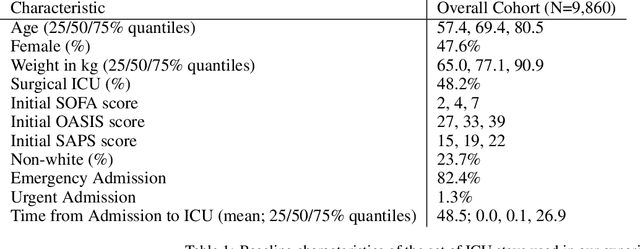

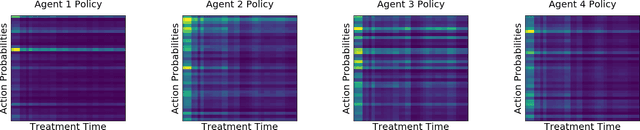

Abstract: The lack of interpretability remains a barrier to the adoption of deep neural networks. Recently, tree regularization has been proposed to encourage deep neural networks to resemble compact, axis-aligned decision trees without significant compromises in accuracy. However, it may be unreasonable to expect that a single tree can predict well across all possible inputs. In this work, we propose regional tree regularization, which encourages a deep model to be well-approximated by several separate decision trees specific to predefined regions of the input space. Practitioners can define regions based on domain knowledge of contexts where different decision-making logic is needed. Across many datasets, our approach delivers more accurate predictions than simply training separate decision trees for each region, while producing simpler explanations than other neural net regularization schemes without sacrificing predictive power. Two healthcare case studies in critical care and HIV demonstrate how experts can improve understanding of deep models via our approach.

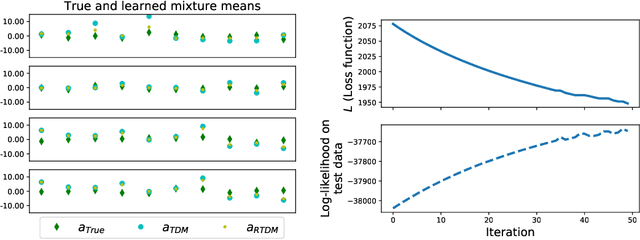

Quality of Uncertainty Quantification for Bayesian Neural Network Inference

Jun 24, 2019

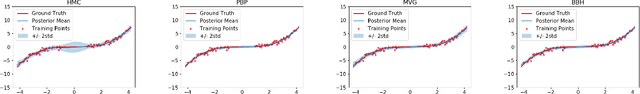

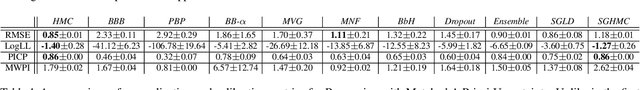

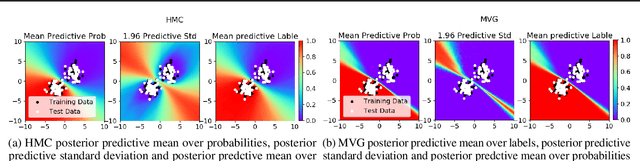

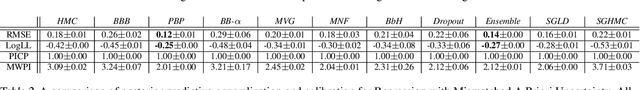

Abstract: Bayesian Neural Networks (BNNs) place priors over the parameters in a neural network. Inference in BNNs, however, is difficult; all inference methods for BNNs are approximate. In this work, we empirically compare the quality of predictive uncertainty estimates for 10 common inference methods on both regression and classification tasks. Our experiments demonstrate that commonly used metrics (e.g., test log-likelihood) can be misleading. Our experiments also indicate that inference innovations designed to capture structure in the posterior do not necessarily produce high-quality posterior approximations.
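As a point of reference for the metric named in the abstract, the sketch below computes an average test log-likelihood for a regression task. It assumes each test point has a Gaussian predictive distribution with a given mean and standard deviation; that assumption, the function names, and the toy numbers are illustrative and are not taken from the paper.

```python
import math


def gaussian_log_pdf(y, mean, std):
    """Log density of a Gaussian N(mean, std^2) evaluated at y."""
    return -0.5 * math.log(2.0 * math.pi * std ** 2) - (y - mean) ** 2 / (2.0 * std ** 2)


def average_test_log_likelihood(targets, means, stds):
    """Mean per-point log-likelihood of held-out targets.

    Assumes (for illustration only) an independent Gaussian predictive
    distribution for every test point.
    """
    terms = [gaussian_log_pdf(y, m, s) for y, m, s in zip(targets, means, stds)]
    return sum(terms) / len(terms)


# Hypothetical held-out targets and predictive means.
targets = [0.0, 1.0, 2.0]
means = [0.1, 0.9, 2.2]

# Same predictive means, two different uncertainty estimates.
confident = average_test_log_likelihood(targets, means, [0.2, 0.2, 0.2])
vague = average_test_log_likelihood(targets, means, [5.0, 5.0, 5.0])
```

The metric collapses the whole predictive distribution into one scalar per test set, so two methods with quite different uncertainty behavior away from the test points can receive similar scores.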

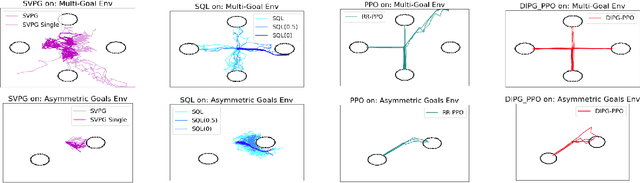

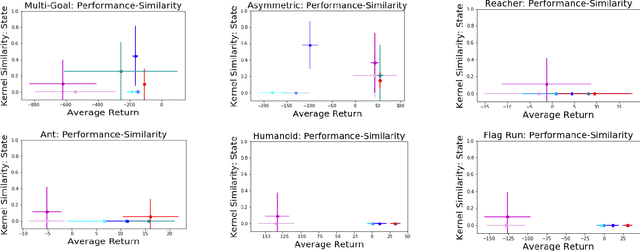

Diversity-Inducing Policy Gradient: Using Maximum Mean Discrepancy to Find a Set of Diverse Policies

May 31, 2019

Abstract: Standard reinforcement learning methods aim to master one way of solving a task, whereas there may exist multiple near-optimal policies. Being able to identify this collection of near-optimal policies can allow a domain expert to efficiently explore the space of reasonable solutions. Unfortunately, existing approaches that quantify uncertainty over policies are not ultimately relevant to finding policies with qualitatively distinct behaviors. In this work, we formalize the difference between policies as a difference between the distribution of trajectories induced by each policy, which encourages diversity with respect to both state visitation and action choices. We derive a gradient-based optimization technique that can be combined with existing policy gradient methods to identify diverse collections of well-performing policies. We demonstrate our approach on benchmarks and a healthcare task.
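To make the quantity in the title concrete, here is a minimal sketch of a sample-based squared Maximum Mean Discrepancy between two sets of trajectories. It assumes each trajectory has already been summarized as a fixed-length feature vector and uses an RBF kernel with a hand-picked bandwidth; the featurization, the kernel choice, and all names are assumptions for illustration, not the paper's implementation.

```python
import math


def rbf_kernel(x, y, bandwidth=1.0):
    """RBF (Gaussian) kernel between two equal-length feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between two samples."""

    def mean_kernel(a_set, b_set):
        total = sum(rbf_kernel(a, b, bandwidth) for a in a_set for b in b_set)
        return total / (len(a_set) * len(b_set))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


# Hypothetical trajectory summaries (e.g., mean state features) for two policies.
policy_a = [(0.0, 1.0), (0.1, 0.9), (0.2, 1.1)]
policy_b = [(2.0, -1.0), (2.1, -0.9), (1.9, -1.2)]

same = mmd_squared(policy_a, policy_a)  # identical samples give zero
diff = mmd_squared(policy_a, policy_b)  # well-separated samples give a larger value
```

A diversity term of this kind would reward a new policy whose trajectories sit far, in kernel mean embedding, from those of policies already found; how that term enters the policy gradient is specific to the paper and is not reproduced here.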

Exploring Computational User Models for Agent Policy Summarization

May 30, 2019

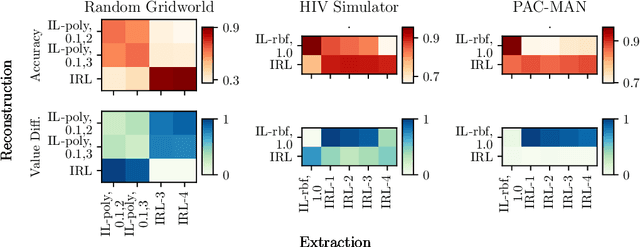

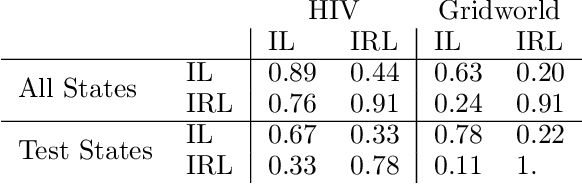

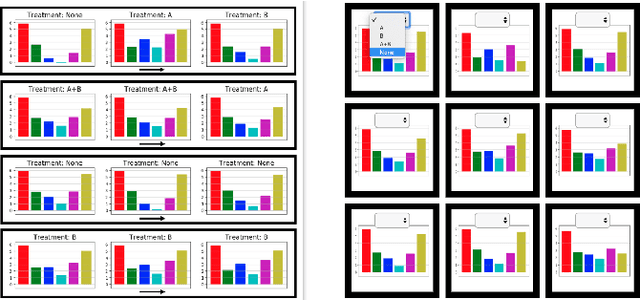

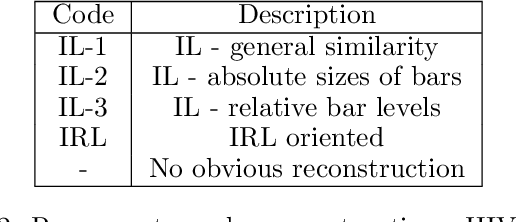

Abstract: AI agents are being developed to support high-stakes decision-making processes, from driving cars to prescribing drugs, making it increasingly important for human users to understand their behavior. Policy summarization methods aim to convey strengths and weaknesses of such agents by demonstrating their behavior in a subset of informative states. Some policy summarization methods extract a summary that optimizes the ability to reconstruct the agent's policy under the assumption that users will deploy inverse reinforcement learning. In this paper, we explore the use of different models for extracting summaries. We introduce an imitation learning-based approach to policy summarization; we demonstrate through computational simulations that a mismatch between the model used to extract a summary and the model used to reconstruct the policy results in worse reconstruction quality; and we demonstrate through a human-subject study that people use different models to reconstruct policies in different contexts, and that matching the summary extraction model to these can improve performance. Together, our results suggest that it is important to carefully consider user models in policy summarization.

Defining Admissible Rewards for High Confidence Policy Evaluation

May 30, 2019

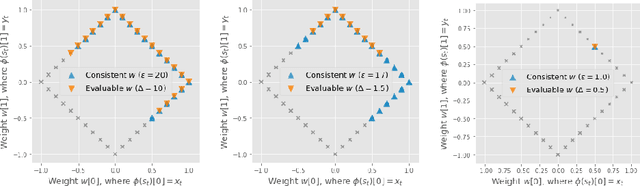

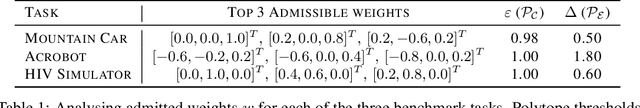

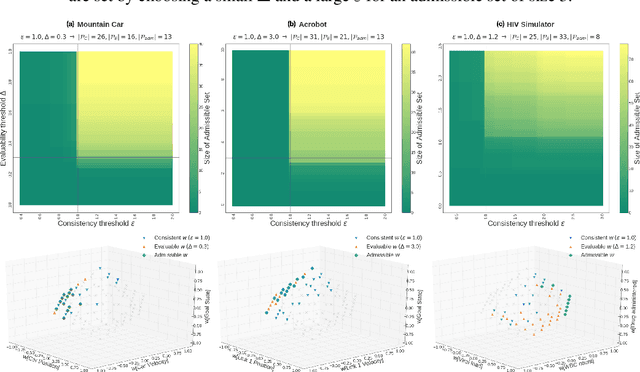

Abstract: A key impediment to reinforcement learning (RL) in real applications with limited, batch data is defining a reward function that reflects what we implicitly know about reasonable behaviour for a task and allows for robust off-policy evaluation. In this work, we develop a method to identify an admissible set of reward functions for policies that (a) do not diverge too far from past behaviour, and (b) can be evaluated with high confidence, given only a collection of past trajectories. Together, these ensure that we propose policies that we trust to be implemented in high-risk settings. We demonstrate our approach to reward design on synthetic domains as well as in a critical care context, for a reward that consolidates clinical objectives to learn a policy for weaning patients from mechanical ventilation.

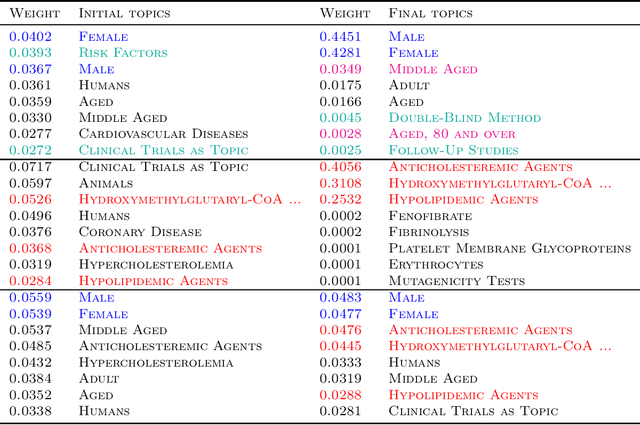

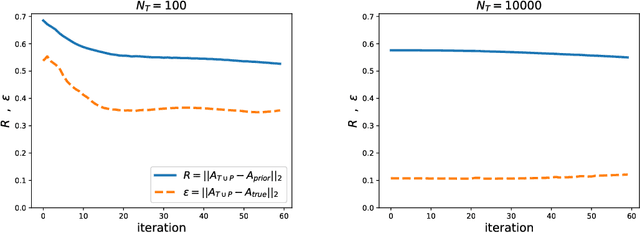

A general method for regularizing tensor decomposition methods via pseudo-data

May 24, 2019

Abstract: Tensor decomposition methods allow us to learn the parameters of latent variable models through decomposition of low-order moments of data. A significant limitation of these algorithms is that there exists no general method to regularize them, and in the past regularization has mostly been performed using bespoke modifications to the algorithms, tailored for the particular form of the desired regularizer. We present a general method of regularizing tensor decomposition methods by supplementing the training data with pseudo-data; it can be used for any likelihood model that is learnable using tensor decomposition methods and any differentiable regularization function. The pseudo-data is optimized to balance two terms: being as close as possible to the true data and enforcing the desired regularization. On synthetic, semi-synthetic and real data, we demonstrate that our method can improve inference accuracy and regularize for a broad range of goals including transfer learning, sparsity, interpretability, and orthogonality of the learned parameters.
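One way to read the two-term trade-off described in this abstract is as an optimization over the pseudo-data itself. The expression below is only a schematic under assumed notation: $X$ is the true data, $\tilde{X}$ the pseudo-data, $D$ a closeness measure, $R$ the differentiable regularizer, $\hat{\theta}(\cdot)$ the parameters returned by the tensor decomposition on the supplemented data, and $\lambda$ a trade-off weight. None of these symbols come from the abstract, and the paper's exact objective may differ.

```latex
\tilde{X}^{\star}
  = \arg\min_{\tilde{X}} \;
    \underbrace{D\big(\tilde{X},\, X\big)}_{\text{stay close to the true data}}
    \;+\;
    \lambda \,
    \underbrace{R\big(\hat{\theta}(X \cup \tilde{X})\big)}_{\text{enforce the desired regularization}}
```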

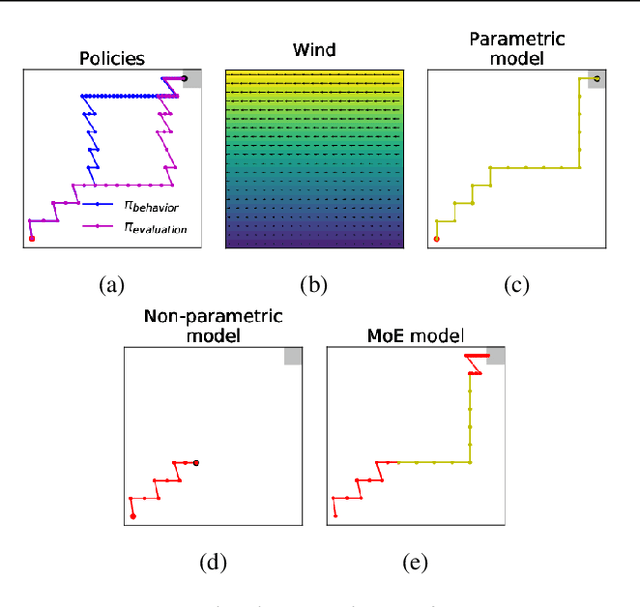

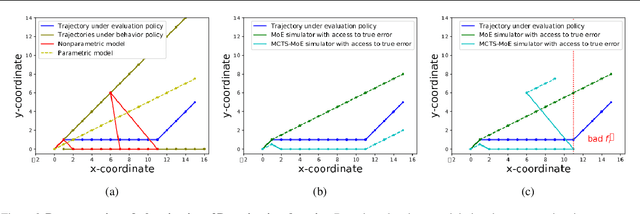

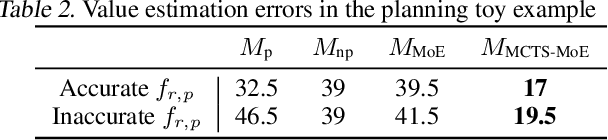

Combining Parametric and Nonparametric Models for Off-Policy Evaluation

May 16, 2019

Abstract: We consider a model-based approach to perform batch off-policy evaluation in reinforcement learning. Our method takes a mixture-of-experts approach to combine parametric and non-parametric models of the environment such that the final value estimate has the least expected error. We do so by first estimating the local accuracy of each model and then using a planner to select which model to use at every time step so as to minimize the return error estimate along entire trajectories. Across a variety of domains, our mixture-based approach outperforms the individual models alone as well as state-of-the-art importance sampling-based estimators.

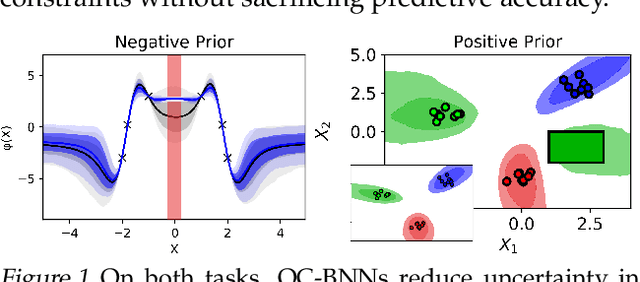

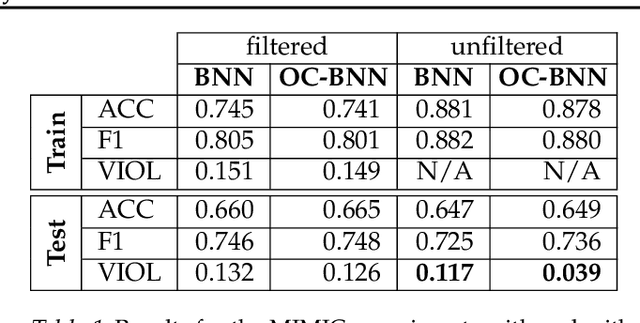

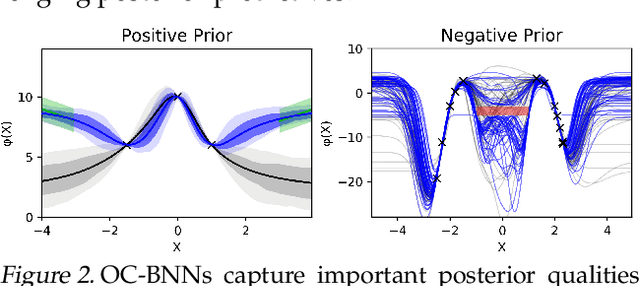

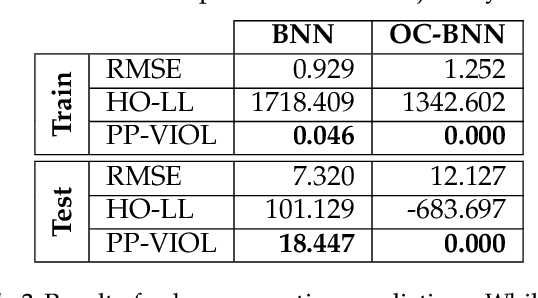

Output-Constrained Bayesian Neural Networks

May 15, 2019

Abstract: Bayesian neural network (BNN) priors are defined in parameter space, making it hard to encode prior knowledge expressed in function space. We formulate a prior that incorporates functional constraints about what the output can or cannot be in regions of the input space. Output-Constrained BNNs (OC-BNNs) represent an interpretable approach to enforcing a range of constraints, fully consistent with the Bayesian framework and amenable to black-box inference. We demonstrate how OC-BNNs improve model robustness and prevent the prediction of infeasible outputs in two real-world applications of healthcare and robotics.

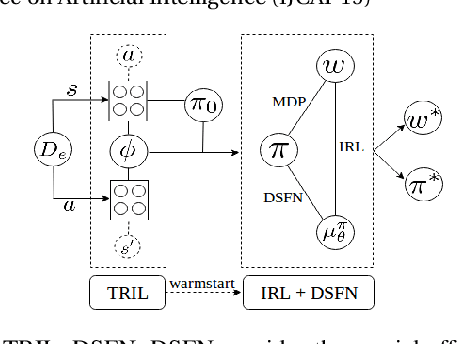

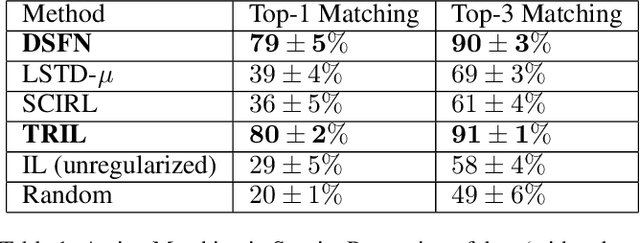

Truly Batch Apprenticeship Learning with Deep Successor Features

Mar 24, 2019

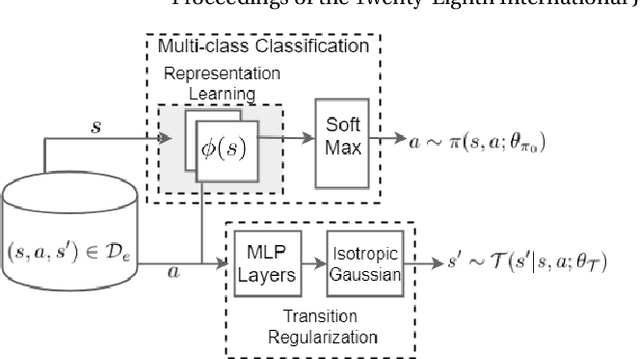

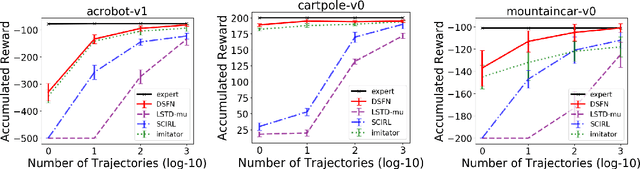

Abstract: We introduce a novel apprenticeship learning algorithm to learn an expert's underlying reward structure in off-policy model-free batch settings. Unlike existing methods that require a dynamics model or additional data acquisition for on-policy evaluation, our algorithm requires only the batch data of observed expert behavior. Such settings are common in real-world tasks (health care, finance, or industrial processes) where accurate simulators do not exist or data acquisition is costly. To address challenges in batch settings, we introduce Deep Successor Feature Networks (DSFN) that estimate feature expectations in an off-policy setting and a transition-regularized imitation network that produces a near-expert initial policy and an efficient feature representation. Our algorithm achieves superior results in batch settings on both control benchmarks and a vital clinical task of sepsis management in the Intensive Care Unit.

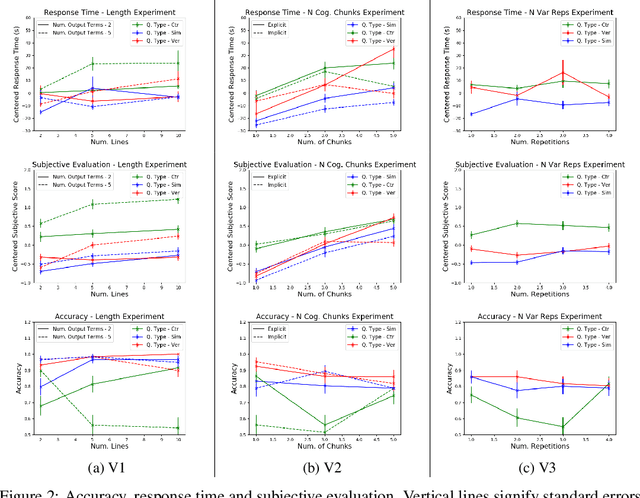

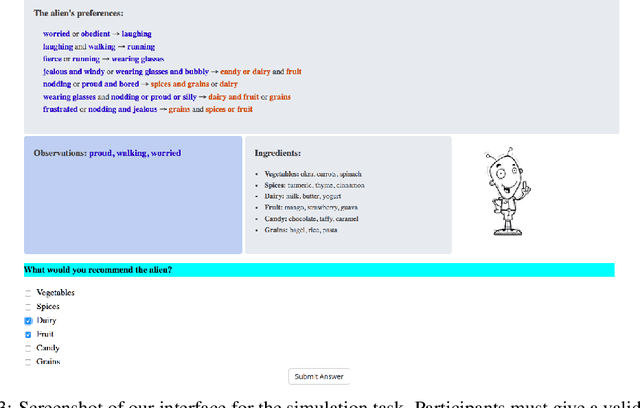

An Evaluation of the Human-Interpretability of Explanation

Jan 31, 2019

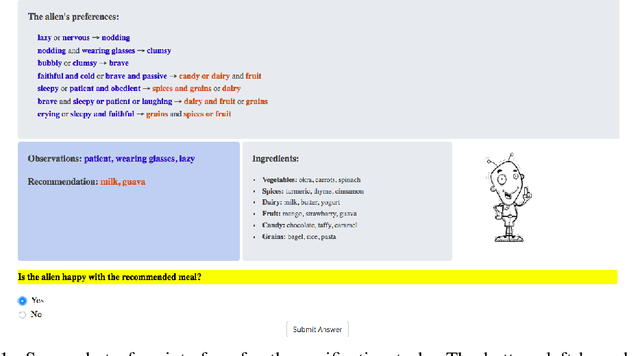

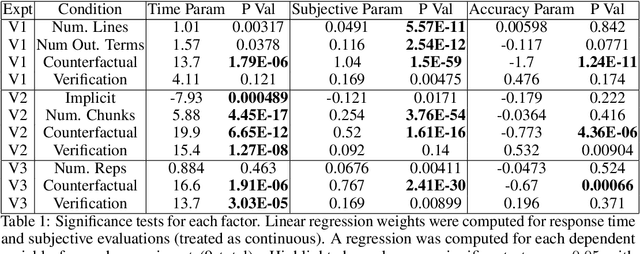

Abstract: Recent years have seen a boom in interest in machine learning systems that can provide a human-understandable rationale for their predictions or decisions. However, exactly what kinds of explanation are truly human-interpretable remains poorly understood. This work advances our understanding of what makes explanations interpretable under three specific tasks that users may perform with machine learning systems: simulation of the response, verification of a suggested response, and determining whether the correctness of a suggested response changes under a change to the inputs. Through carefully controlled human-subject experiments, we identify regularizers that can be used to optimize for the interpretability of machine learning systems. Our results show that the type of complexity matters: cognitive chunks (newly defined concepts) affect performance more than variable repetitions, and these trends are consistent across tasks and domains. This suggests that there may exist some common design principles for explanation systems.