Eytan Bakshy

Real-world Video Adaptation with Reinforcement Learning

Aug 28, 2020

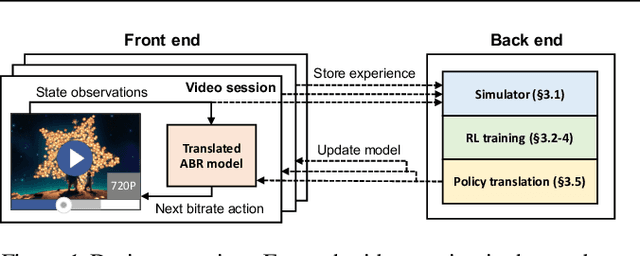

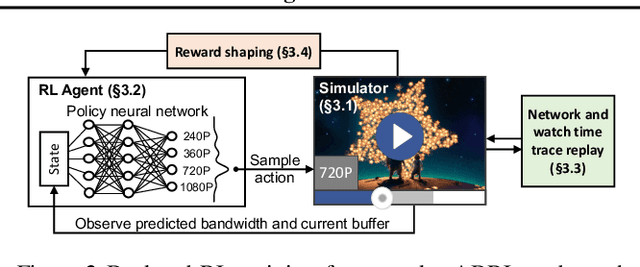

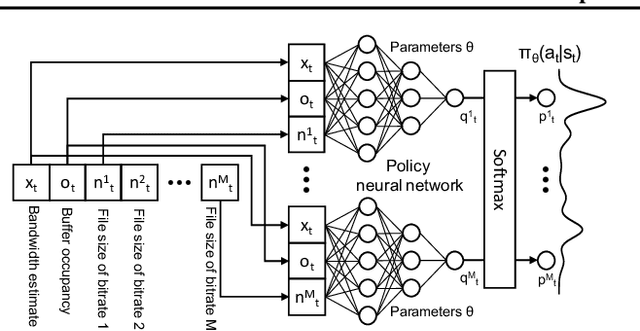

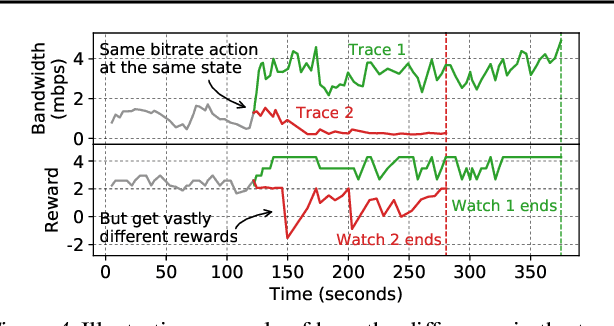

Abstract: Client-side video players employ adaptive bitrate (ABR) algorithms to optimize user quality of experience (QoE). We evaluate recently proposed RL-based ABR methods in Facebook's web-based video streaming platform. Real-world ABR poses several challenges that require customized designs beyond off-the-shelf RL algorithms: we implement a scalable neural network architecture that supports videos with arbitrary bitrate encodings; we design a training method to cope with the variance resulting from the stochasticity in network conditions; and we leverage constrained Bayesian optimization for reward shaping in order to optimize the conflicting QoE objectives. In a week-long worldwide deployment with more than 30 million video streaming sessions, our RL approach outperforms the existing human-engineered ABR algorithms.

Differentiable Expected Hypervolume Improvement for Parallel Multi-Objective Bayesian Optimization

Jun 11, 2020

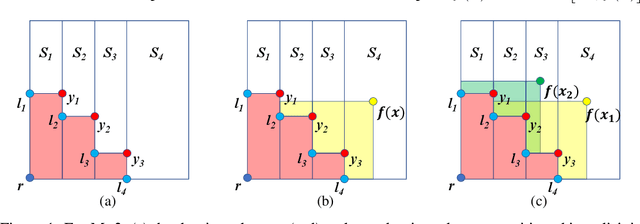

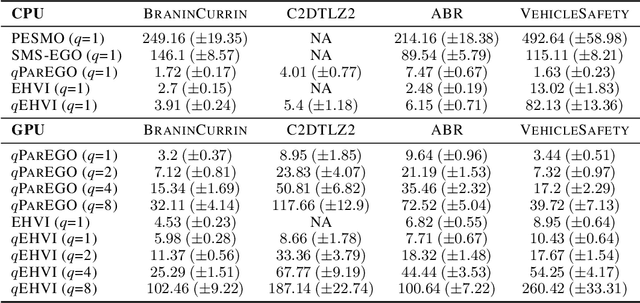

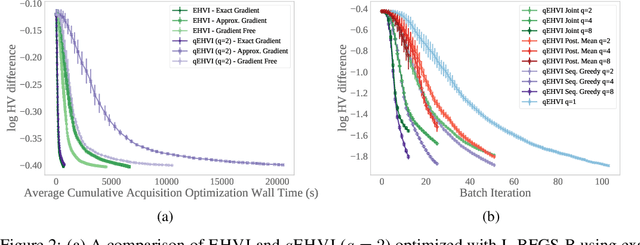

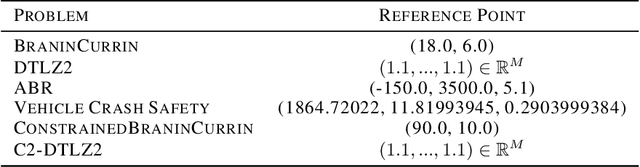

Abstract: In many real-world scenarios, decision makers seek to optimize multiple competing objectives in a sample-efficient fashion. Multi-objective Bayesian optimization (BO) is a common approach, but many existing acquisition functions do not have known analytic gradients and suffer from high computational overhead. We leverage recent advances in programming models and hardware acceleration for multi-objective BO using Expected Hypervolume Improvement (EHVI), an algorithm notorious for its high computational complexity. We derive a novel formulation of $q$-Expected Hypervolume Improvement ($q$EHVI), an acquisition function that extends EHVI to the parallel, constrained evaluation setting. $q$EHVI is an exact computation of the joint EHVI of $q$ new candidate points (up to Monte-Carlo (MC) integration error). Whereas previous EHVI formulations rely on gradient-free acquisition optimization or approximated gradients, we compute exact gradients of the MC estimator via auto-differentiation, thereby enabling efficient and effective optimization using first-order and quasi-second-order methods. Lastly, our empirical evaluation demonstrates that $q$EHVI is computationally tractable in many practical scenarios and outperforms state-of-the-art multi-objective BO algorithms at a fraction of their wall time.
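To make the quantity behind EHVI concrete, the following is a minimal sketch of hypervolume improvement for two objectives under maximization, together with a plain Monte-Carlo average over sampled outcomes for a batch of candidates. It is not the paper's $q$EHVI formulation or BoTorch's implementation: it computes no gradients, handles only two objectives, and the function names (`hypervolume_2d`, `hypervolume_improvement`, `mc_expected_hvi`) and the reference point `ref` are assumptions chosen for illustration.

```python
def hypervolume_2d(points, ref):
    """Area dominated by `points` and bounded below by `ref` (maximization)."""
    # Keep only points that are strictly better than the reference point.
    pts = [(x, y) for x, y in points if x > ref[0] and y > ref[1]]
    # Sweep from the largest first objective downward, adding each new strip.
    pts.sort(key=lambda p: p[0], reverse=True)
    area, best_y = 0.0, ref[1]
    for x, y in pts:
        if y > best_y:
            area += (x - ref[0]) * (y - best_y)
            best_y = y
    return area


def hypervolume_improvement(front, candidates, ref):
    """Joint gain in hypervolume from adding all `candidates` to `front`."""
    before = hypervolume_2d(front, ref)
    after = hypervolume_2d(list(front) + list(candidates), ref)
    return after - before


def mc_expected_hvi(front, sampled_batches, ref):
    """Average the joint improvement over sampled outcomes.

    Each element of `sampled_batches` is one hypothetical posterior draw:
    a list of q outcome pairs, one per candidate point.
    """
    gains = [hypervolume_improvement(front, batch, ref) for batch in sampled_batches]
    return sum(gains) / len(gains)


front = [(3.0, 1.0), (1.0, 3.0)]
ref = (0.0, 0.0)
samples = [[(2.0, 2.0)], [(0.5, 0.5)]]  # two hypothetical draws, q = 1
estimate = mc_expected_hvi(front, samples, ref)
```

In this toy setup the first draw extends the front and the second is already dominated, so the estimate is the average of one positive gain and zero. A differentiable version would compute the same average with tensor operations so that gradients flow back to the candidate locations.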

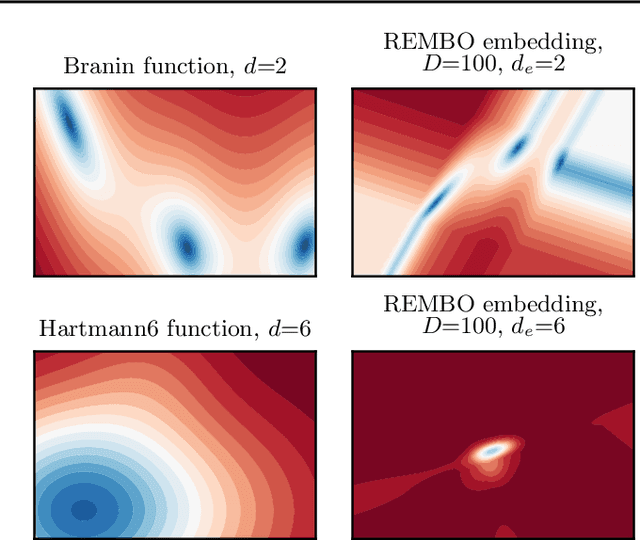

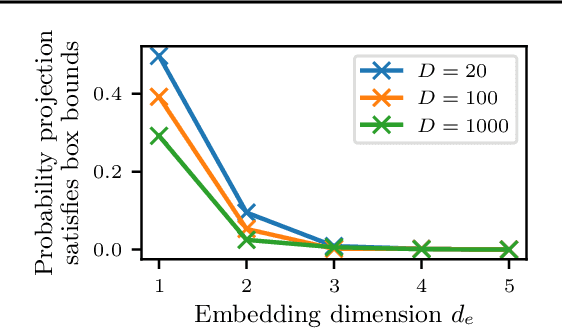

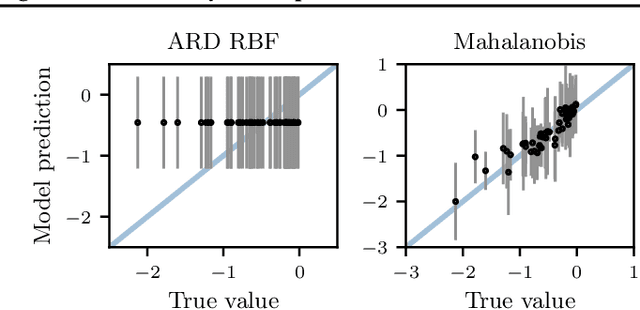

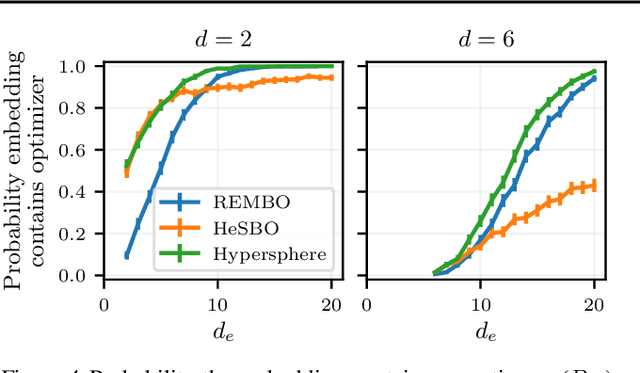

Re-Examining Linear Embeddings for High-Dimensional Bayesian Optimization

Jan 31, 2020

Abstract: Bayesian optimization (BO) is a popular approach to optimize expensive-to-evaluate black-box functions. A significant challenge in BO is to scale to high-dimensional parameter spaces while retaining sample efficiency. A solution considered in existing literature is to embed the high-dimensional space in a lower-dimensional manifold, often via a random linear embedding. In this paper, we identify several crucial issues and misconceptions about the use of linear embeddings for BO. We study the properties of linear embeddings from the literature and show that some of the design choices in current approaches adversely impact their performance. We show empirically that properly addressing these issues significantly improves the efficacy of linear embeddings for BO on a range of problems, including learning a gait policy for robot locomotion.
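As a rough illustration of what a random linear embedding looks like, the sketch below draws a Gaussian matrix `A` of shape D x d and maps a low-dimensional point `y` to the high-dimensional box by computing `A y` and clipping to the bounds. The clipping step and the `[-1, 1]` bounds are one convention from the literature, assumed here for illustration; the sketch does not reproduce the specific fixes this paper proposes, and the names `random_embedding` and `lift` are hypothetical.

```python
import random


def random_embedding(D, d, seed=0):
    """Draw a D x d matrix with independent standard normal entries."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(D)]


def lift(A, y, lower=-1.0, upper=1.0):
    """Map a d-dimensional point y to D dimensions via x = clip(A y)."""
    x = [sum(a * yi for a, yi in zip(row, y)) for row in A]
    # Clip each coordinate into the box; this is an assumed convention.
    return [min(upper, max(lower, v)) for v in x]


A = random_embedding(D=100, d=4)
x = lift(A, [0.3, -0.2, 0.5, 0.1])
```

The optimizer then searches over `y` in d dimensions and evaluates the black-box function at `lift(A, y)`. Because clipping is not linear, distinct values of `y` can map to the same boundary point, which is one reason the design choices around the embedding matter.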

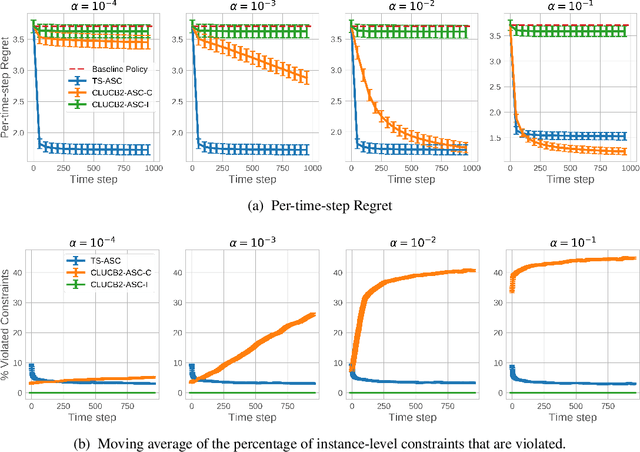

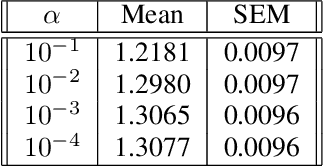

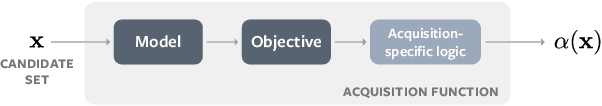

Thompson Sampling for Contextual Bandit Problems with Auxiliary Safety Constraints

Nov 02, 2019

Abstract: Recent advances in contextual bandit optimization and reinforcement learning have garnered interest in applying these methods to real-world sequential decision-making problems. Real-world applications frequently have constraints with respect to a currently deployed policy. Many of the existing constraint-aware algorithms consider problems with a single objective (the reward) and a constraint on the reward with respect to a baseline policy. However, many important applications involve multiple competing objectives and auxiliary constraints. In this paper, we propose a novel Thompson sampling algorithm for multi-outcome contextual bandit problems with auxiliary constraints. We empirically evaluate our algorithm on a synthetic problem. Lastly, we apply our method to a real-world video transcoding problem and provide a practical way for navigating the trade-off between safety and performance using Bayesian optimization.

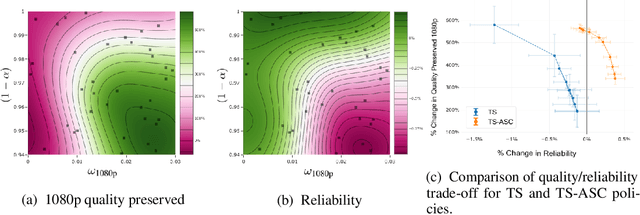

BoTorch: Programmable Bayesian Optimization in PyTorch

Oct 14, 2019

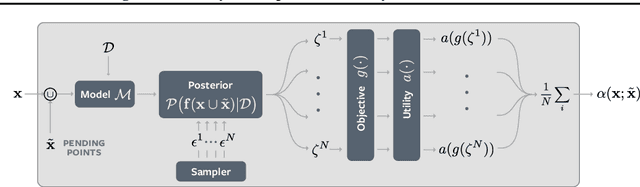

Abstract: Bayesian optimization provides sample-efficient global optimization for a broad range of applications, including automatic machine learning, molecular chemistry, and experimental design. We introduce BoTorch, a modern programming framework for Bayesian optimization. Enabled by Monte-Carlo (MC) acquisition functions and auto-differentiation, BoTorch's modular design facilitates flexible specification and optimization of probabilistic models written in PyTorch, radically simplifying implementation of novel acquisition functions. Our MC approach is made practical by a distinctive algorithmic foundation that leverages fast predictive distributions and hardware acceleration. In experiments, we demonstrate the improved sample efficiency of BoTorch relative to other popular libraries. BoTorch is open source and available at https://github.com/pytorch/botorch.

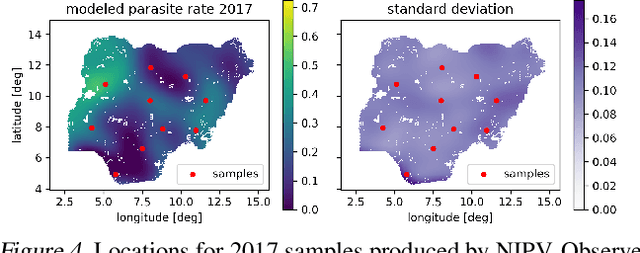

Bayesian Optimization for Policy Search via Online-Offline Experimentation

Apr 29, 2019

Abstract: Online field experiments are the gold-standard way of evaluating changes to real-world interactive machine learning systems. Yet our ability to explore complex, multi-dimensional policy spaces, such as those found in recommendation and ranking problems, is often constrained by the limited number of experiments that can be run simultaneously. To alleviate these constraints, we augment online experiments with an offline simulator and apply multi-task Bayesian optimization to tune live machine learning systems. We describe practical issues that arise in these types of applications, including biases that arise from using a simulator and assumptions for the multi-task kernel. We measure empirical learning curves which show substantial gains from including data from biased offline experiments, and show how these learning curves are consistent with theoretical results for multi-task Gaussian process generalization. We find that improved kernel inference is a significant driver of multi-task generalization. Finally, we show several examples of Bayesian optimization efficiently tuning a live machine learning system by combining offline and online experiments.

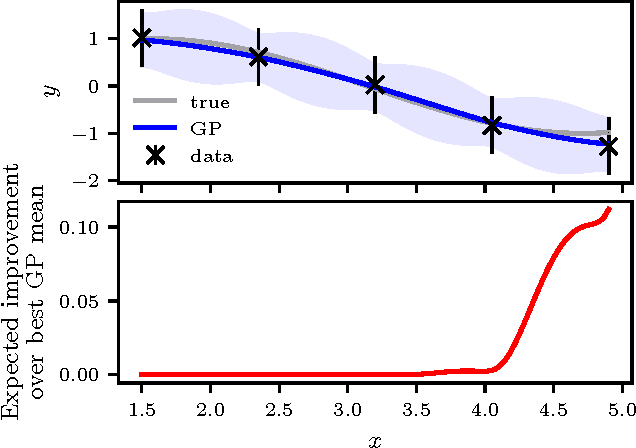

Constrained Bayesian Optimization with Noisy Experiments

Jun 26, 2018

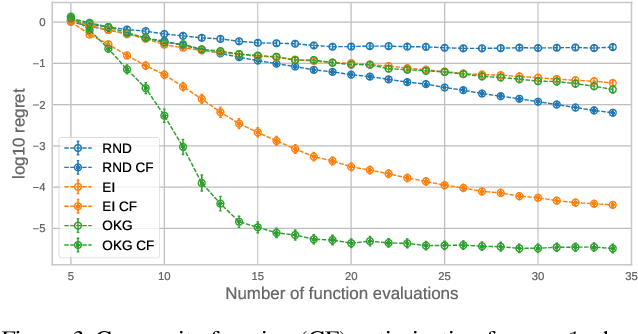

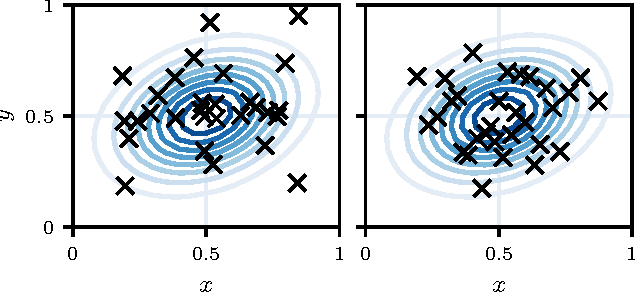

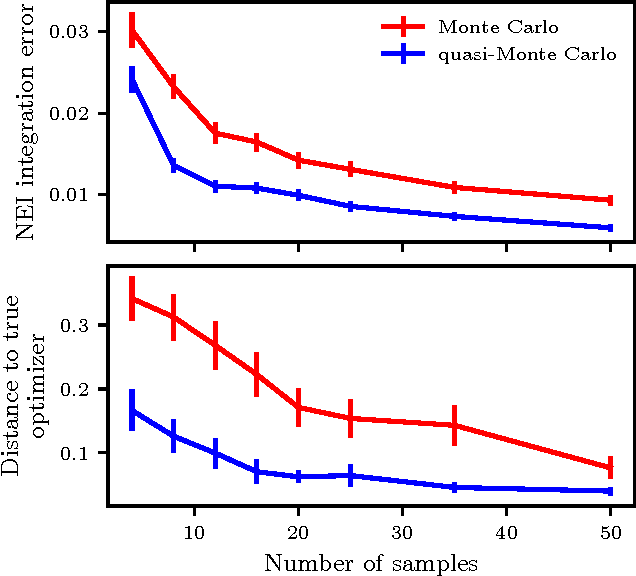

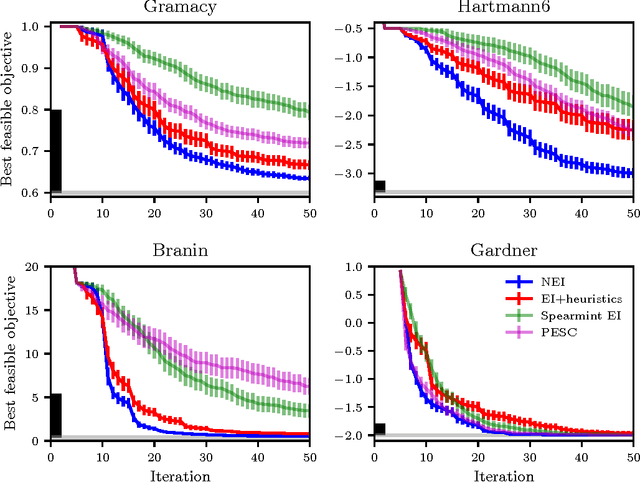

Abstract: Randomized experiments are the gold standard for evaluating the effects of changes to real-world systems. Data in these tests may be difficult to collect and outcomes may have high variance, resulting in potentially large measurement error. Bayesian optimization is a promising technique for efficiently optimizing multiple continuous parameters, but existing approaches degrade in performance when the noise level is high, limiting their applicability to many randomized experiments. We derive an expression for expected improvement under greedy batch optimization with noisy observations and noisy constraints, and develop a quasi-Monte Carlo approximation that allows it to be efficiently optimized. Simulations with synthetic functions show that the method outperforms existing methods on noisy, constrained problems. We further demonstrate the effectiveness of the method with two real-world experiments conducted at Facebook: optimizing a ranking system, and optimizing server compiler flags.

Scalable Meta-Learning for Bayesian Optimization

Feb 06, 2018

Abstract: Bayesian optimization has become a standard technique for hyperparameter optimization, including data-intensive models such as deep neural networks that may take days or weeks to train. We consider the setting where previous optimization runs are available, and we wish to use their results to warm-start a new optimization run. We develop an ensemble model that can incorporate the results of past optimization runs, while avoiding the poor scaling that comes with putting all results into a single Gaussian process model. The ensemble combines models from past runs according to estimates of their generalization performance on the current optimization. Results from a large collection of hyperparameter optimization benchmark problems and from optimization of a production computer vision platform at Facebook show that the ensemble can substantially reduce the time it takes to obtain near-optimal configurations, and is useful for warm-starting expensive searches or running quick re-optimizations.

Bias and high-dimensional adjustment in observational studies of peer effects

Jun 14, 2017

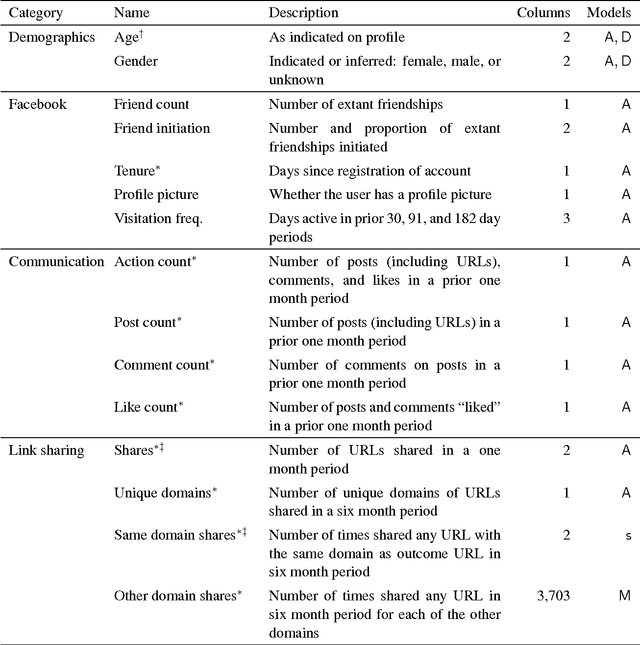

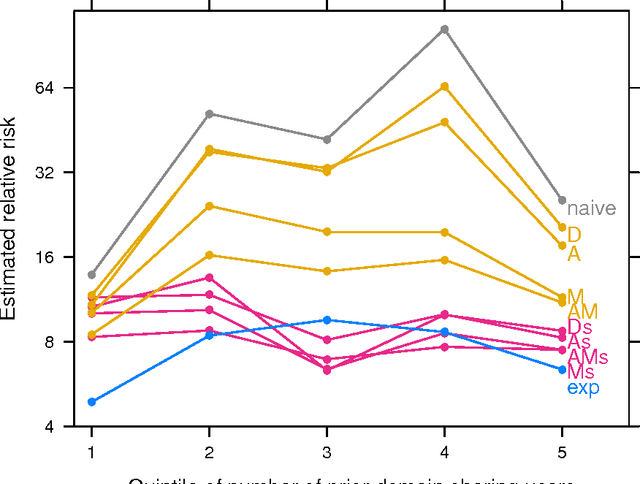

Abstract: Peer effects, in which the behavior of an individual is affected by the behavior of their peers, are posited by multiple theories in the social sciences. Other processes can also produce behaviors that are correlated in networks and groups, thereby generating debate about the credibility of observational (i.e., nonexperimental) studies of peer effects. Randomized field experiments that identify peer effects, however, are often expensive or infeasible. Thus, many studies of peer effects use observational data, and prior evaluations of causal inference methods for adjusting observational data to estimate peer effects have lacked an experimental "gold standard" for comparison. Here we show, in the context of information and media diffusion on Facebook, that high-dimensional adjustment of a nonexperimental control group (677 million observations) using propensity score models produces estimates of peer effects statistically indistinguishable from those obtained using a large randomized experiment (220 million observations). Naive observational estimators overstate peer effects by 320% and commonly used variables (e.g., demographics) offer little bias reduction, but adjusting for a measure of prior behaviors closely related to the focal behavior reduces bias by 91%. High-dimensional models adjusting for over 3,700 past behaviors provide additional bias reduction, such that the full model reduces bias by over 97%. This experimental evaluation demonstrates that detailed records of individuals' past behavior can improve studies of social influence, information diffusion, and imitation; these results are encouraging for the credibility of some studies but also cautionary for studies of rare or new behaviors. More generally, these results show how large, high-dimensional data sets and statistical learning techniques can be used to improve causal inference in the behavioral sciences.
