Ekaterina Karmanova

DroneARchery: Human-Drone Interaction through Augmented Reality with Haptic Feedback and Multi-UAV Collision Avoidance Driven by Deep Reinforcement Learning

Oct 14, 2022

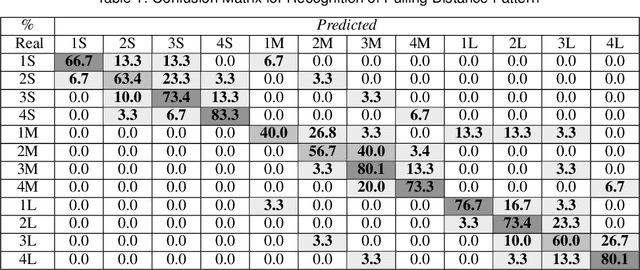

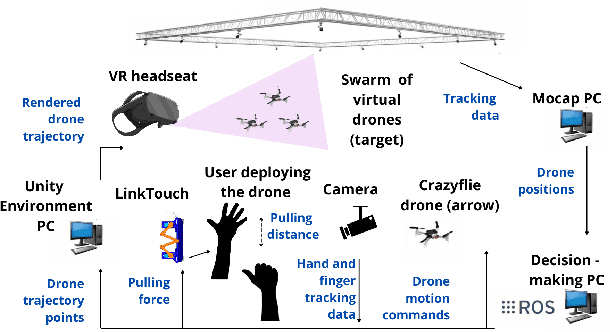

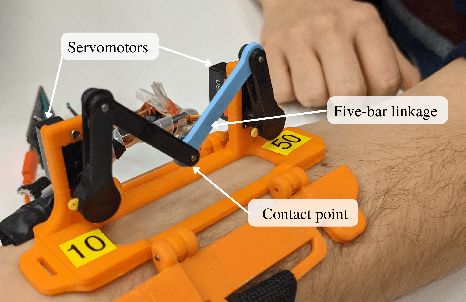

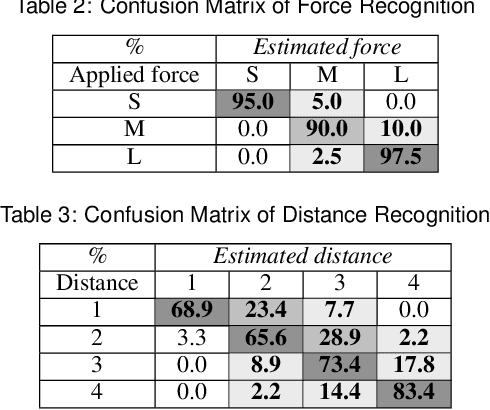

Abstract: We propose a novel concept of augmented reality (AR) human-drone interaction driven by RL-based swarm behavior to achieve intuitive and immersive control of a swarm formation of unmanned aerial vehicles. The DroneARchery system allows the user to quickly deploy a swarm of drones by generating flight paths that simulate archery. The haptic interface LinkGlide delivers a tactile stimulus of the bowstring tension to the forearm to increase aiming precision. The swarm of released drones dynamically avoids collisions with each other, with the drone following the user, and with external obstacles, using behavior control based on deep reinforcement learning. The concept was tested in a scenario in which a human user shoots a real drone from a virtual bow to hit a target. The human operator observes the ballistic trajectory of the drone in AR and gets a realistic and highly recognizable experience of the bowstring tension through the haptic display. The experimental results revealed that the system improves trajectory prediction accuracy by 63.3% through applying AR technology and conveying haptic feedback of pulling force. DroneARchery users highlighted the naturalness (4.3 out of 5 on a Likert scale) and increased confidence (4.7 out of 5) when controlling the drone. We designed tactile patterns to present four sliding distances (tension) and three applied force levels (stiffness) of the haptic display. Users demonstrated the ability to distinguish tactile patterns produced by the haptic display representing varying bowstring tension (average recognition rate of 72.8%) and stiffness (average recognition rate of 94.2%). The novelty of the research is the development of an AR-based approach for drone control that does not require special skills and training from the operator.
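The abstract mentions a ballistic trajectory shown to the operator in AR but does not give the model behind it. As a rough illustration only, the sketch below computes an ideal drag-free projectile path. The function names, the linear mapping from bowstring draw distance to launch speed, and all default values (`max_draw_m`, `max_speed`) are hypothetical and are not taken from the paper.

```python
import math


def ballistic_trajectory(speed, angle_deg, g=9.81, n_points=20):
    """Return (x, z) points of an ideal drag-free projectile path.

    The path starts at height 0 and ends when it returns to height 0.
    This is a textbook model, not the trajectory generator of the paper.
    """
    angle = math.radians(angle_deg)
    vx = speed * math.cos(angle)   # horizontal velocity, constant without drag
    vz = speed * math.sin(angle)   # initial vertical velocity
    flight_time = 2.0 * vz / g     # time until the height returns to 0
    points = []
    for i in range(n_points + 1):
        t = flight_time * i / n_points
        points.append((vx * t, vz * t - 0.5 * g * t * t))
    return points


def draw_to_speed(draw_m, max_draw_m=0.5, max_speed=5.0):
    """Hypothetical linear mapping from bowstring draw distance to launch speed."""
    draw = min(max(draw_m, 0.0), max_draw_m)  # clamp to the valid draw range
    return max_speed * draw / max_draw_m


if __name__ == "__main__":
    path = ballistic_trajectory(draw_to_speed(0.5), 45.0)
    print("landing point (m):", path[-1])
```

A preview like this could be recomputed each frame as the draw distance and aiming angle change; a real system would also need to account for drone dynamics, which this sketch ignores.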

Multi-sensor large-scale dataset for multi-view 3D reconstruction

Mar 11, 2022

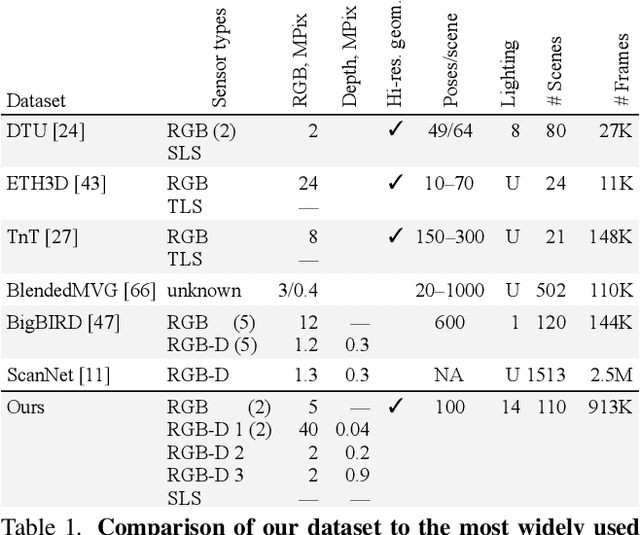

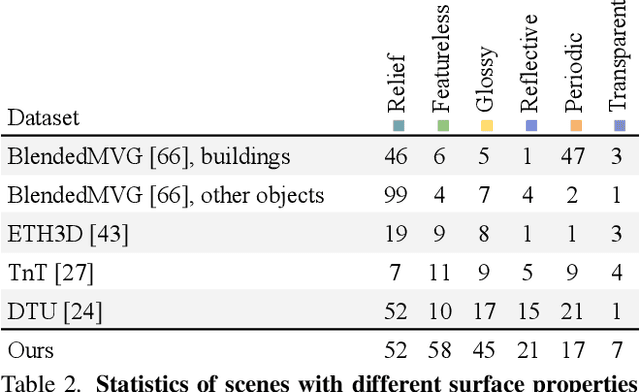

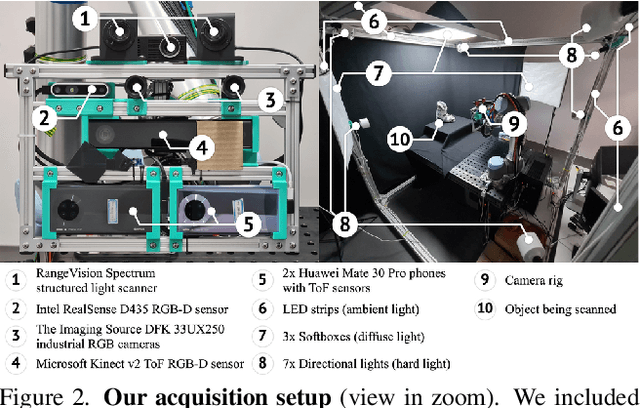

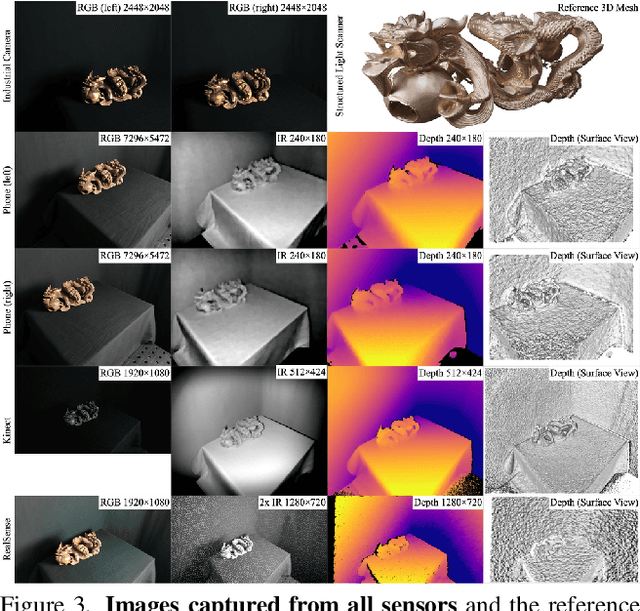

Abstract: We present a new multi-sensor dataset for 3D surface reconstruction. It includes registered RGB and depth data from sensors of different resolutions and modalities: smartphones, Intel RealSense, Microsoft Kinect, industrial cameras, and a structured-light scanner. The data for each scene is obtained under a large number of lighting conditions, and the scenes are selected to emphasize a diverse set of material properties challenging for existing algorithms. In the acquisition process, we aimed to maximize high-resolution depth data quality for challenging cases, to provide reliable ground truth for learning algorithms. Overall, we provide over 1.4 million images of 110 different scenes acquired at 14 lighting conditions from 100 viewing directions. We expect our dataset will be useful for evaluation and training of 3D reconstruction algorithms of different types and for other related tasks. Our dataset and accompanying software will be available online.

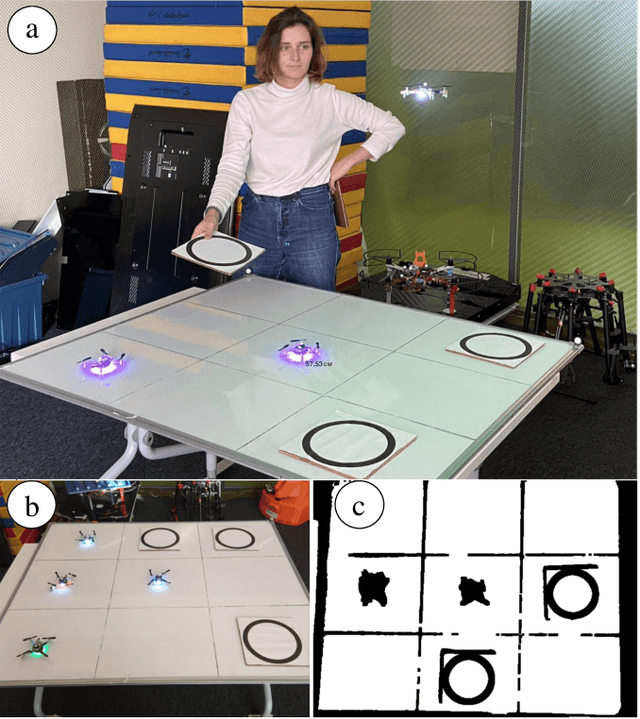

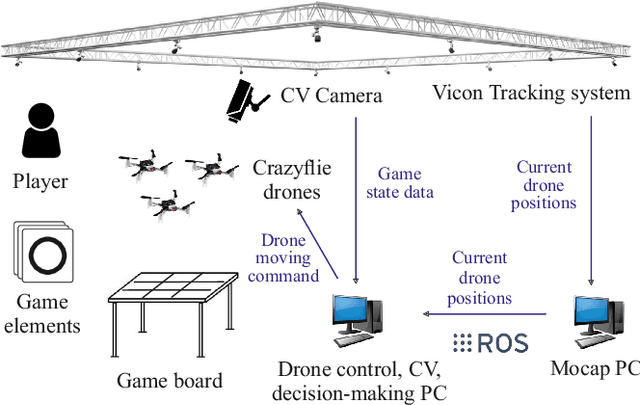

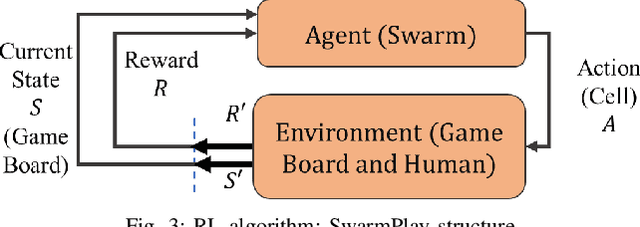

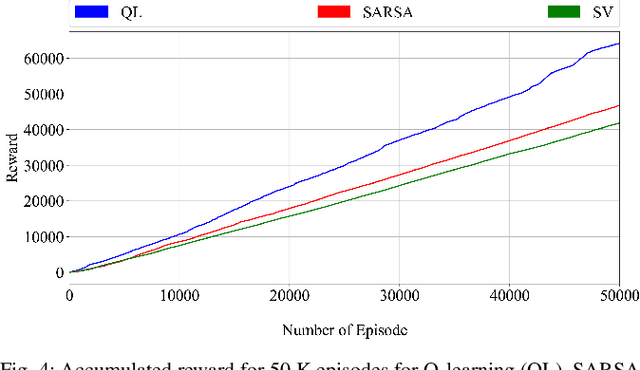

SwarmPlay: Interactive Tic-tac-toe Board Game with Swarm of Nano-UAVs driven by Reinforcement Learning

Aug 03, 2021

Abstract: Reinforcement learning (RL) methods have been actively applied in the field of robotics, allowing the system itself to find a solution for a task otherwise requiring a complex decision-making algorithm. In this paper, we present a novel RL-based Tic-tac-toe scenario, SwarmPlay, where each playing piece is represented by an individual drone that has its own mobility and swarm intelligence to win against a human player. Thus, the combination of a challenging swarm strategy and human-drone collaboration aims to make games with machines tangible and interactive. Although some research on AI for board games already exists, e.g., for chess, the SwarmPlay technology has the potential to offer much more engagement and interaction with the user, as it proposes a multi-agent swarm instead of a single interactive robot. We explore users' evaluation of RL-based swarm behavior in comparison with game theory-based behavior. The preliminary user study revealed that participants were highly engaged in the game with drones (70% gave the maximum score on the Likert scale) and found it less artificial compared to regular computer-based systems (80%). The effect of the game outcome on the user's perception of the game was analyzed and discussed. The user study revealed that SwarmPlay has the potential to be implemented in a wider range of games, significantly improving human-drone interactivity.
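The abstract compares RL-based behavior with a game theory-based baseline but does not say which algorithm the baseline uses. As one plausible illustration, the sketch below implements plain minimax for Tic-tac-toe. The board encoding (a 9-character string) and all names are assumptions made for this example, not the authors' implementation.

```python
from functools import lru_cache

# Index triples that form a winning line on a 3x3 board stored row by row.
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
             (0, 3, 6), (1, 4, 7), (2, 5, 8),
             (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def minimax(board, player):
    """Return (value, move) for the player to move.

    The value is from X's point of view: +1 X wins, 0 draw, -1 O wins.
    The move is a cell index 0..8, or None if the game is already over.
    """
    won = winner(board)
    if won == "X":
        return 1, None
    if won == "O":
        return -1, None
    if " " not in board:
        return 0, None

    best_value, best_move = None, None
    other = "O" if player == "X" else "X"
    for i, cell in enumerate(board):
        if cell != " ":
            continue
        child = board[:i] + player + board[i + 1:]
        value, _ = minimax(child, other)
        # X maximizes the value, O minimizes it.
        better = best_value is None or (
            value > best_value if player == "X" else value < best_value
        )
        if better:
            best_value, best_move = value, i
    return best_value, best_move


if __name__ == "__main__":
    print(minimax(" " * 9, "X"))
```

With perfect play from both sides the search returns a draw from the empty board, which is why an opponent of this kind can feel unbeatable to a human player.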

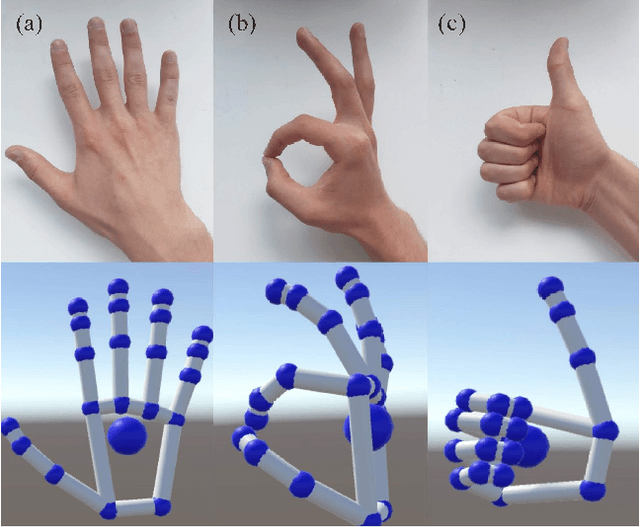

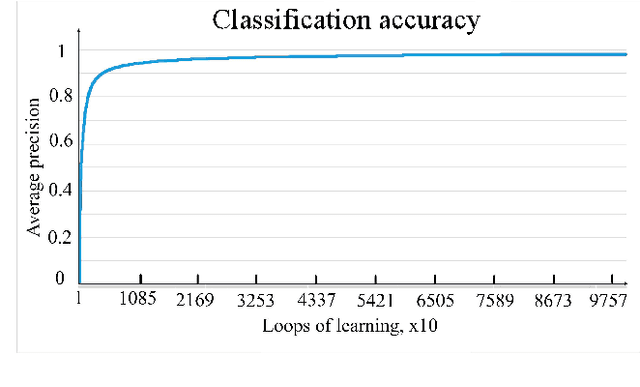

DronePaint: Swarm Light Painting with DNN-based Gesture Recognition

Jul 23, 2021

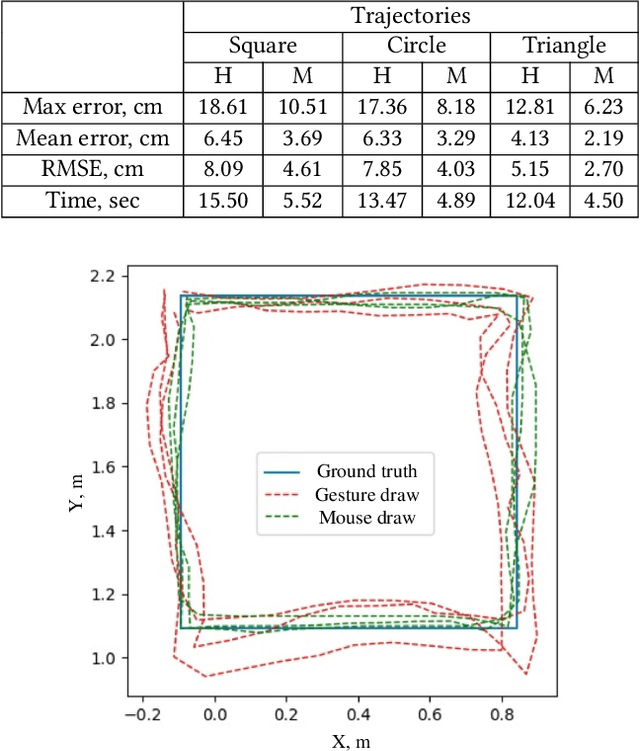

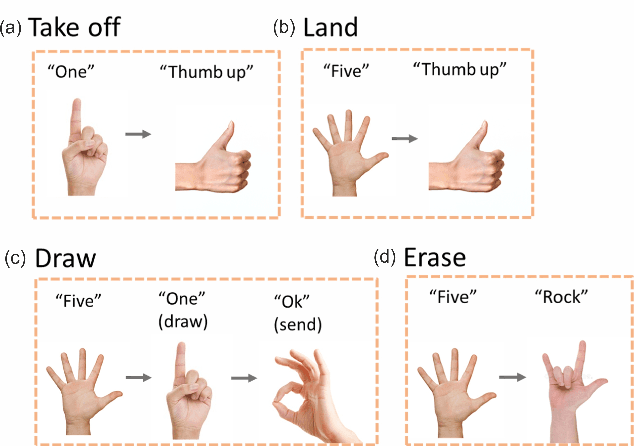

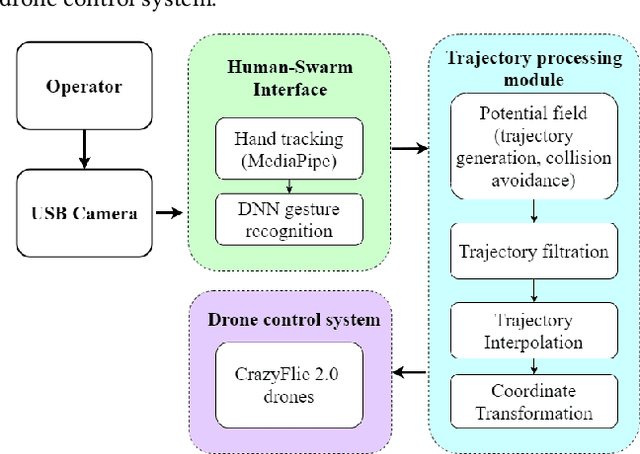

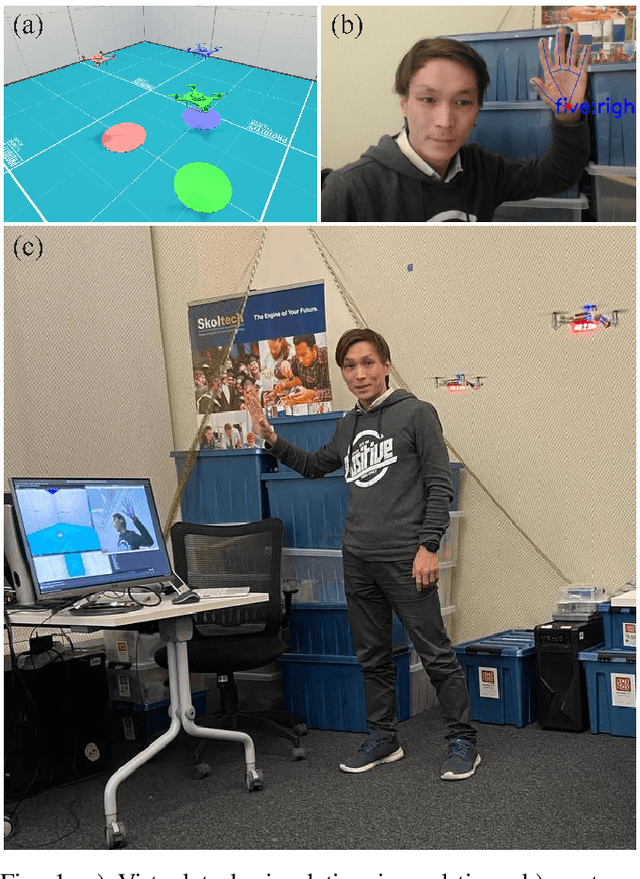

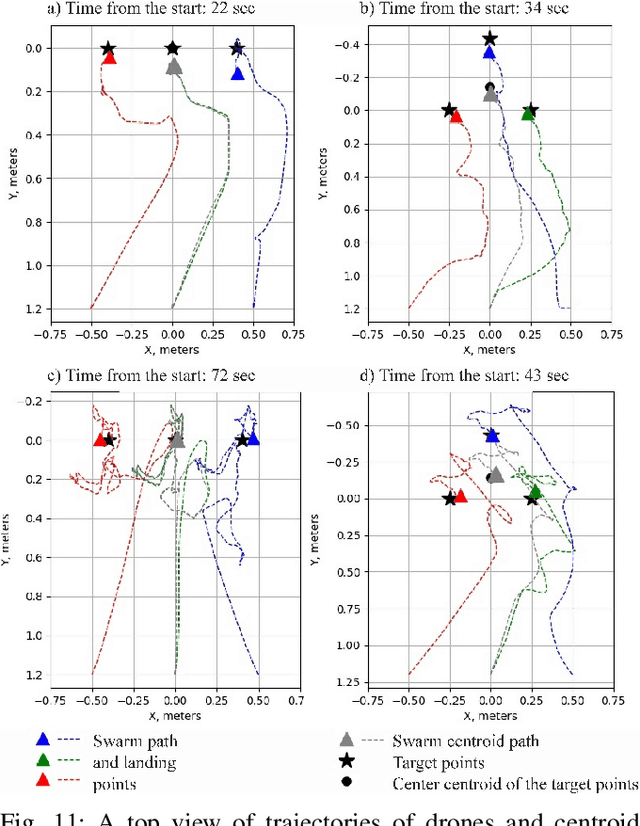

Abstract: We propose a novel human-swarm interaction system, allowing the user to directly control a swarm of drones in a complex environment through trajectory drawing with a hand gesture interface based on DNN gesture recognition. The developed CV-based system allows the user to control the swarm behavior without additional devices through human gestures and motions in real time, providing convenient tools to change the swarm's shape and formation. Two types of interaction were proposed and implemented to adjust the swarm hierarchy: trajectory drawing and free-form trajectory generation control. The experimental results revealed a high accuracy of the gesture recognition system (99.75%), allowing the user to achieve relatively high precision of trajectory drawing (mean error of 5.6 cm in comparison to 3.1 cm by mouse drawing) over the three evaluated trajectory patterns. The proposed system can potentially be applied in complex environment exploration, spray painting using drones, and interactive drone shows, allowing users to create their own art objects with drone swarms.

SwarmPaint: Human-Swarm Interaction for Trajectory Generation and Formation Control by DNN-based Gesture Interface

Jun 28, 2021

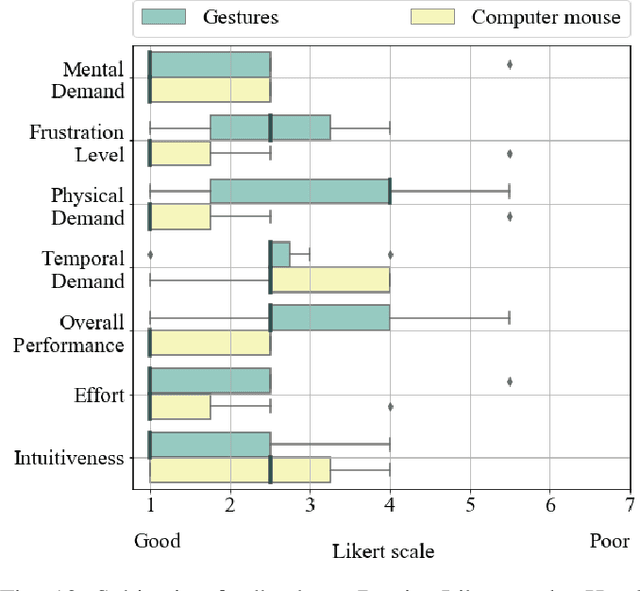

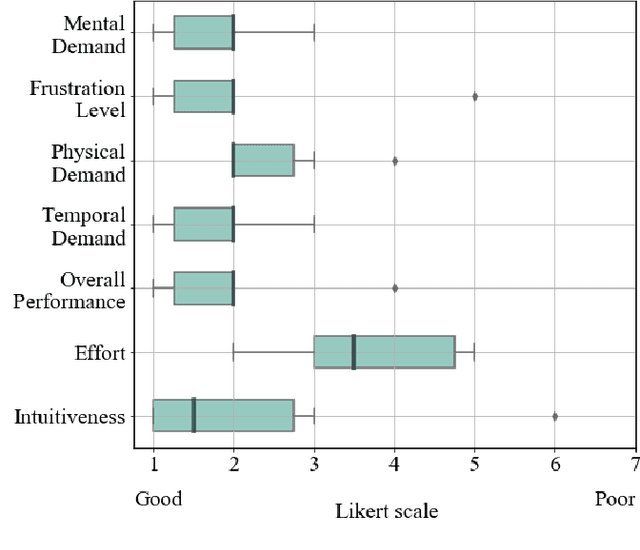

Abstract: Teleoperation tasks with multi-agent systems have a high potential in supporting human-swarm collaborative teams in exploration and rescue operations. However, they require an intuitive and adaptive control approach to ensure swarm stability in a cluttered and dynamically shifting environment. We propose a novel human-swarm interaction system, allowing the user to control swarm position and formation either by direct hand motion or by trajectory drawing with a hand gesture interface based on DNN gesture recognition. The key feature of SwarmPaint is the user's ability to perform various tasks with the swarm without additional devices by switching between interaction modes. Two types of interaction were proposed and developed to adjust swarm behavior: free-form trajectory generation control and shaped formation control. Two preliminary user studies were conducted to explore users' performance and subjective experience of human-swarm interaction through the developed control modes. The experimental results revealed sufficient accuracy in the trajectory tracing task (mean error of 5.6 cm by gesture drawing and 3.1 cm by mouse drawing with a pattern of dimension 1 m by 1 m) over three evaluated trajectory patterns, and up to 7.3 cm accuracy in the targeting task with two target patterns of 1 m achieved by the SwarmPaint interface. Moreover, the participants evaluated the trajectory drawing interface as more intuitive (by 12.9%) and requiring less effort to use (by 22.7%) than direct shape and position control by gestures, although its physical workload and failure in performance were rated as more significant (by 9.1% and 16.3%, respectively).
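The abstract reports a mean error for the trajectory tracing task without defining the metric. One common choice, shown here purely as an assumption, is the average distance from each drawn point to the nearest point on the reference pattern, with the pattern given as a polyline. The function names and the example coordinates are hypothetical.

```python
import math


def point_to_segment(p, a, b):
    """Shortest Euclidean distance from point p to the segment from a to b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: a and b coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, then clamp to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def mean_trajectory_error(drawn, reference):
    """Mean distance from each drawn point to the reference polyline."""
    if not drawn or len(reference) < 2:
        raise ValueError("need at least one drawn point and two reference points")
    total = 0.0
    for p in drawn:
        total += min(
            point_to_segment(p, reference[i], reference[i + 1])
            for i in range(len(reference) - 1)
        )
    return total / len(drawn)


if __name__ == "__main__":
    # Hypothetical 1 m by 1 m square pattern and three drawn samples (metres).
    square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.0, 0.0)]
    drawn = [(0.5, 0.05), (1.05, 0.5), (0.5, 1.0)]
    print("mean error (m):", mean_trajectory_error(drawn, square))
```

This metric ignores the order in which points were drawn; a study that cares about timing or direction would need a different measure, such as dynamic time warping.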

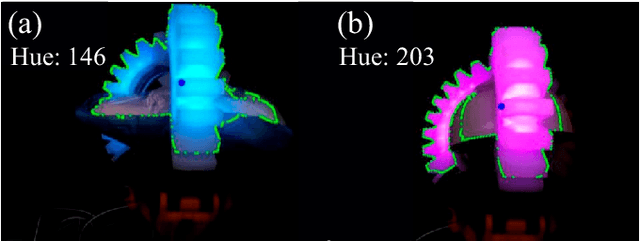

DroneTrap: Drone Catching in Midair by Soft Robotic Hand with Color-Based Force Detection and Hand Gesture Recognition

Feb 07, 2021

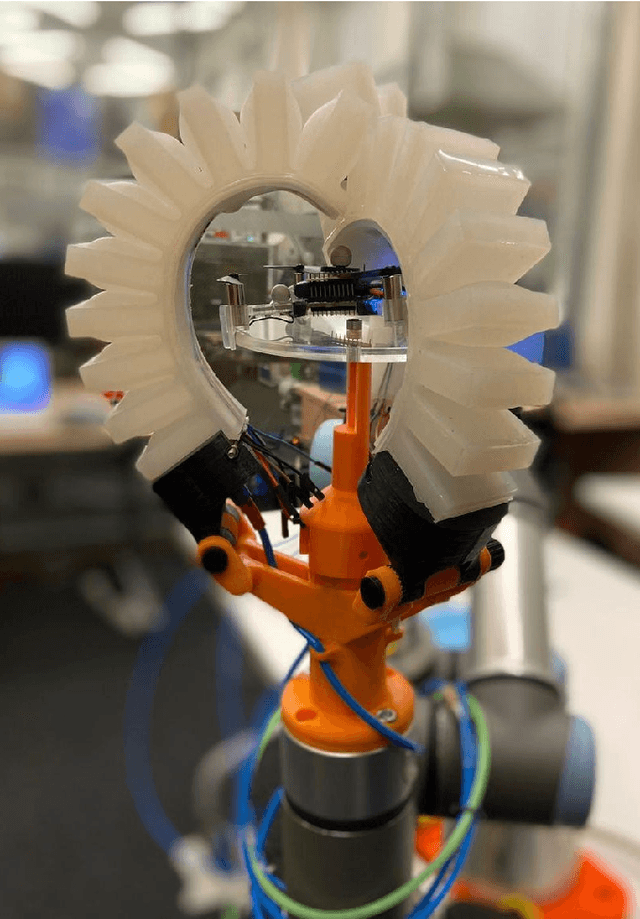

Abstract: The paper proposes a novel concept of docking drones that aims to make this process as safe and fast as possible. The idea behind the project is that a robot with a gripper grasps the drone in midair. The human operator navigates the robotic arm with an ML-based gesture recognition interface. The 3-finger robot hand with soft fingers and integrated touch sensors is pneumatically actuated. This makes catching safe, without damaging the drone's mechanical structure, fragile propellers, or motors. Additionally, the soft hand has a unique technology for providing force information to the remote computer vision (CV) system through the color of the fingers. In this way, both the control system and the human operator can understand the applied force. The operator has full control of robot motion and task execution without additional programming by wearing a mocap glove with gesture recognition, which was developed and applied for the high-level control of DroneTrap. The experimental results revealed that the developed color-based force estimation can be applied for rigid object capturing with high precision (95.3%). The proposed technology can potentially revolutionize the landing and deployment of drones for parcel delivery on uneven ground, structure inspections, risky operations, etc.
