Pavel Kopanev

TiltXter: CNN-based Electro-tactile Rendering of Tilt Angle for Telemanipulation of Pasteur Pipettes

Sep 24, 2024

Abstract: The shape of deformable objects can change drastically when they are grasped by robotic grippers. This makes their alignment ambiguous to perceive and hence causes errors in robot positioning and telemanipulation. Rendering clear tactile patterns is fundamental to increasing users' precision and dexterity through tactile haptic feedback during telemanipulation. Therefore, different methods for decoding sensor data into haptic stimuli need to be studied. This work presents a telemanipulation system for plastic pipettes that consists of a Force Dimension Omega.7 haptic interface endowed with two electro-stimulation arrays and two tactile sensor arrays embedded in a two-finger Robotiq gripper. We propose a novel approach based on convolutional neural networks (CNN) to detect the tilt of deformable objects. The CNN generates a tactile pattern from the recognized tilt, which is then rendered as electro-tactile stimuli provided to the user during telemanipulation. The study showed that, with the CNN algorithm, users' tilt recognition rate increased from 23.13% with the downsized data to 57.9%. The teleoperation success rate increased from 53.12% with the downsized data to 92.18% with the tactile patterns generated by the CNN.
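The abstract compares the CNN against a baseline that renders "downsized" sensor data, but it does not say how the downsizing is done. The sketch below assumes one simple possibility: block averaging of a tactile frame down to the resolution of the electrode array, followed by a hypothetical threshold rule that switches each electrode on or off. The function names, array sizes and threshold are illustrative and are not taken from the paper.

```python
from typing import List

Matrix = List[List[float]]


def downsize(frame: Matrix, out_rows: int, out_cols: int) -> Matrix:
    """Block-average a tactile frame down to out_rows x out_cols.

    Assumes the input dimensions are exact multiples of the output ones.
    """
    in_rows, in_cols = len(frame), len(frame[0])
    if in_rows % out_rows or in_cols % out_cols:
        raise ValueError("input size must be a multiple of output size")
    br, bc = in_rows // out_rows, in_cols // out_cols  # block height / width
    out: Matrix = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            # Collect every sensor reading that falls into this output cell.
            block = [frame[r][c]
                     for r in range(i * br, (i + 1) * br)
                     for c in range(j * bc, (j + 1) * bc)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def to_stimulation(pattern: Matrix, threshold: float) -> List[List[int]]:
    """Map pooled pressures to on/off electrode commands (hypothetical rule)."""
    return [[1 if v >= threshold else 0 for v in row] for row in pattern]
```

For example, a 4x4 frame with pressure only in its upper-right quadrant pools to `[[0.0, 8.0], [0.0, 0.0]]` on a 2x2 grid, and a threshold of 4.0 activates only the upper-right electrode. Averaging like this discards the fine spatial detail that a learned model can still exploit, which is one plausible reason a downsized rendering would be harder for users to interpret.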

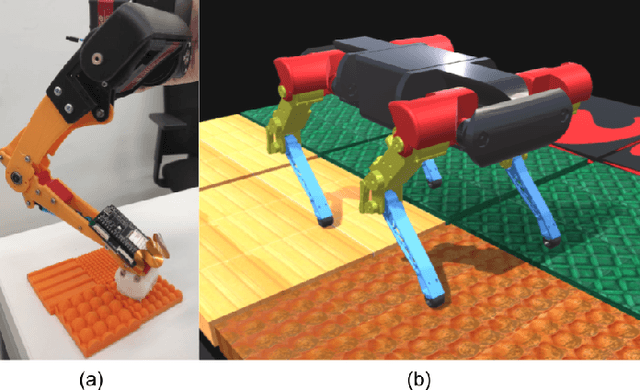

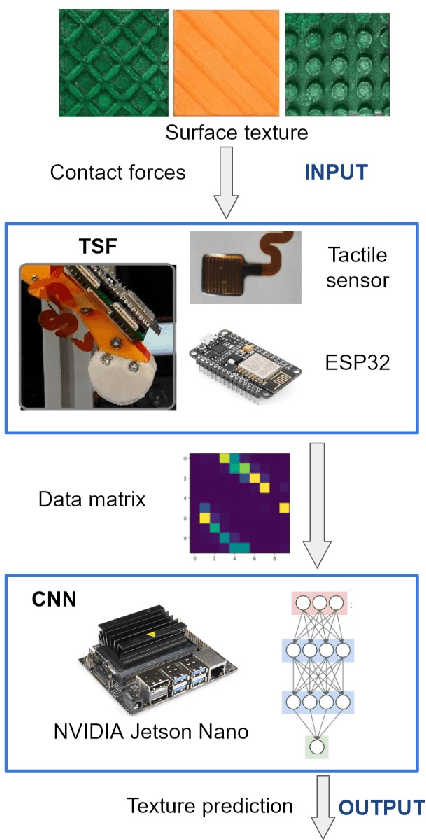

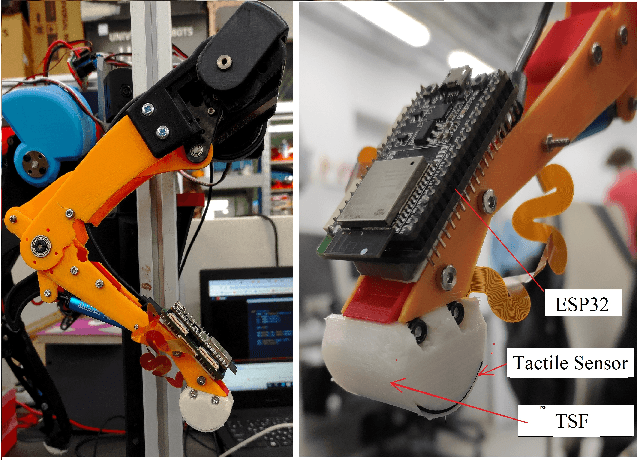

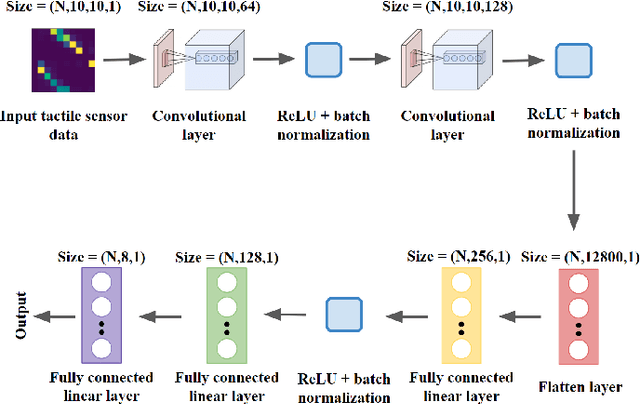

DogTouch: CNN-based Recognition of Surface Textures by Quadruped Robot with High Density Tactile Sensors

Jun 09, 2022

Abstract: The ability to perform locomotion on various terrains is critical for legged robots. To locomote robustly on different terrains, however, the robot needs a better understanding of the surface it is walking on. Animals and humans are able to recognize a surface through the tactile sensation in their feet. Foot tactile sensing for legged robots, though, has not been explored much. This paper presents research on DogTouch, a novel quadruped robot with tactile sensing feet (TSF). TSF allows the recognition of different surface textures using a tactile sensor and a convolutional neural network (CNN). The experimental results show a sufficient validation accuracy of 74.37% for our trained CNN-based model, with the highest recognition rate, 90%, for line patterns. In the future, we plan to improve the prediction model by presenting surface samples with various pattern depths and by applying advanced deep learning and shallow learning models for surface recognition. Additionally, we propose a novel approach to the navigation of quadruped and legged robots: tactile paving textured surfaces (similar to those used by blind or visually impaired people) can be arranged in the environment. Thus, DogTouch will be capable of locomotion in unknown environments just by recognizing specific tactile patterns that indicate a straight path, a left or right turn, a pedestrian crossing, a road, etc. This will allow robust navigation regardless of lighting conditions. Future quadruped robots equipped with visual and tactile perception systems will be able to navigate and interact safely and intelligently in unstructured indoor and outdoor environments.
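The proposed tactile-paving navigation amounts to mapping a recognized texture class to a motion command. The sketch below is a minimal illustration under stated assumptions. The class names, the action set and the confidence threshold are all hypothetical. The classifier is assumed to output a probability per texture class. When it is unsure, no command is issued, so that a misread tile does not trigger a wrong turn.

```python
from enum import Enum
from typing import Dict, Optional


class Action(Enum):
    GO_STRAIGHT = "go straight"
    TURN_LEFT = "turn left"
    TURN_RIGHT = "turn right"
    STOP_CROSSING = "stop: pedestrian crossing"


# Hypothetical mapping from texture class names to navigation actions.
TEXTURE_TO_ACTION: Dict[str, Action] = {
    "lines_forward": Action.GO_STRAIGHT,
    "lines_left": Action.TURN_LEFT,
    "lines_right": Action.TURN_RIGHT,
    "dots": Action.STOP_CROSSING,
}


def choose_action(probs: Dict[str, float],
                  min_conf: float = 0.8) -> Optional[Action]:
    """Pick the action for the most likely texture, or None if unsure.

    probs maps texture class names to classifier probabilities (assumed).
    """
    if not probs:
        return None
    label, conf = max(probs.items(), key=lambda kv: kv[1])
    if conf < min_conf:
        return None  # too uncertain: keep current behaviour
    return TEXTURE_TO_ACTION.get(label)  # unknown textures map to None
```

For example, `choose_action({"lines_left": 0.9, "dots": 0.1})` returns `Action.TURN_LEFT`, while an even split between two classes returns `None`.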

Multi-sensor large-scale dataset for multi-view 3D reconstruction

Mar 11, 2022

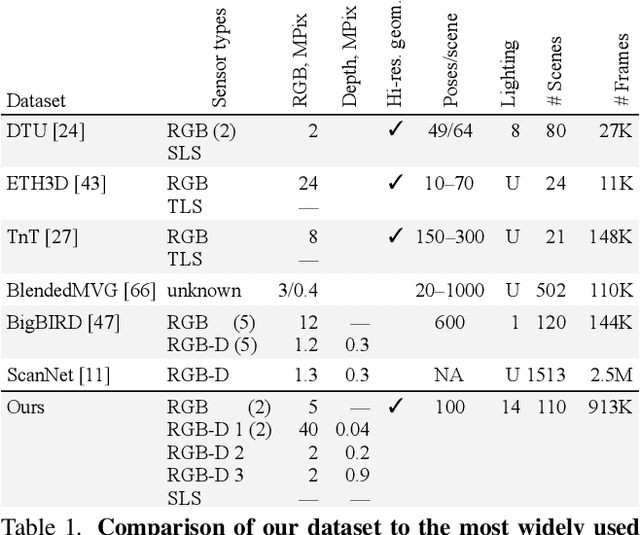

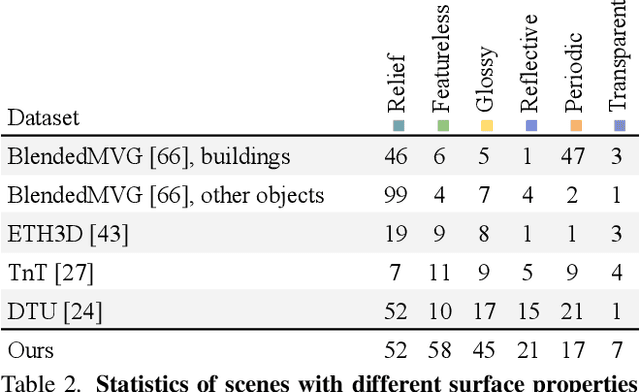

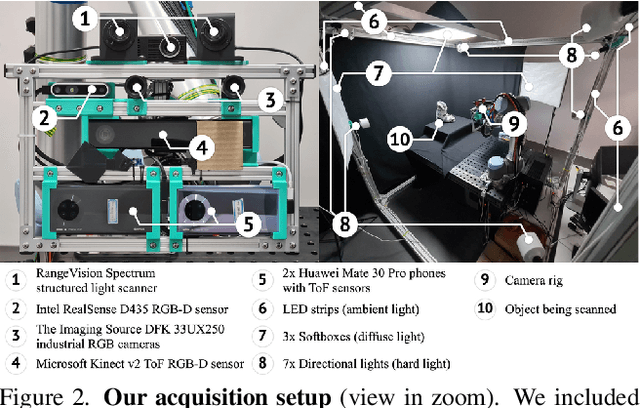

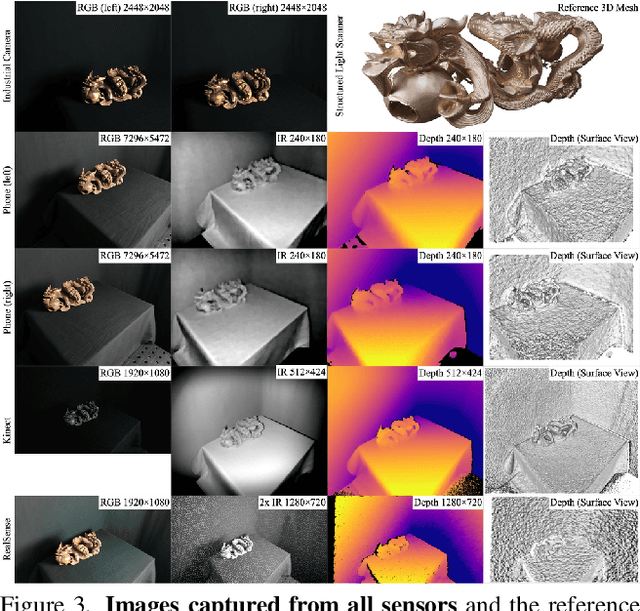

Abstract: We present a new multi-sensor dataset for 3D surface reconstruction. It includes registered RGB and depth data from sensors of different resolutions and modalities: smartphones, Intel RealSense, Microsoft Kinect, industrial cameras, and a structured-light scanner. The data for each scene are obtained under a large number of lighting conditions, and the scenes are selected to emphasize a diverse set of material properties that are challenging for existing algorithms. In the acquisition process, we aimed to maximize the quality of high-resolution depth data for challenging cases, to provide reliable ground truth for learning algorithms. Overall, we provide over 1.4 million images of 110 different scenes acquired under 14 lighting conditions from 100 viewing directions. We expect our dataset to be useful for the evaluation and training of 3D reconstruction algorithms of different types and for other related tasks. Our dataset and accompanying software will be available online.

CNN-based Omnidirectional Object Detection for HermesBot Autonomous Delivery Robot with Preliminary Frame Classification

Oct 22, 2021

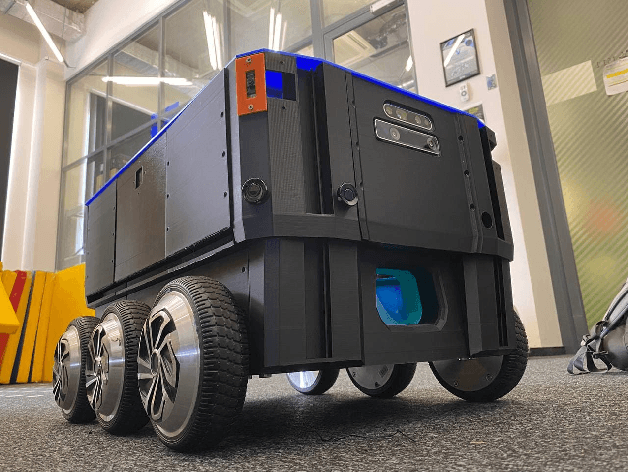

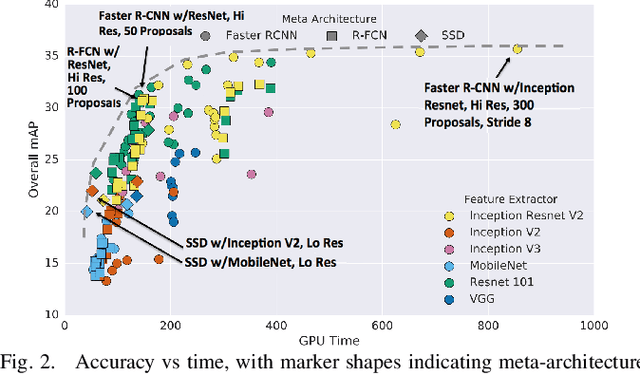

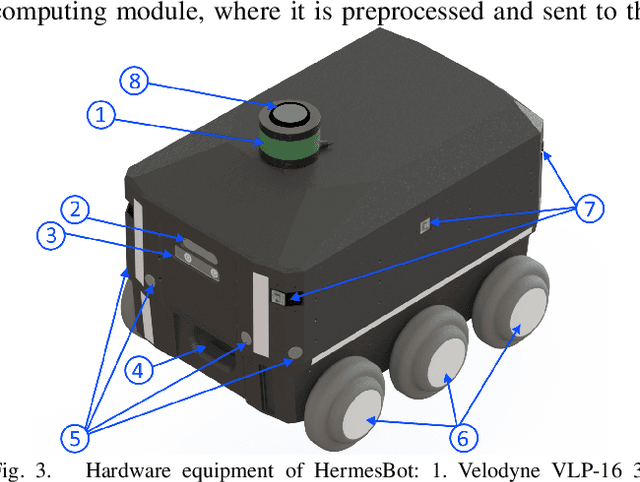

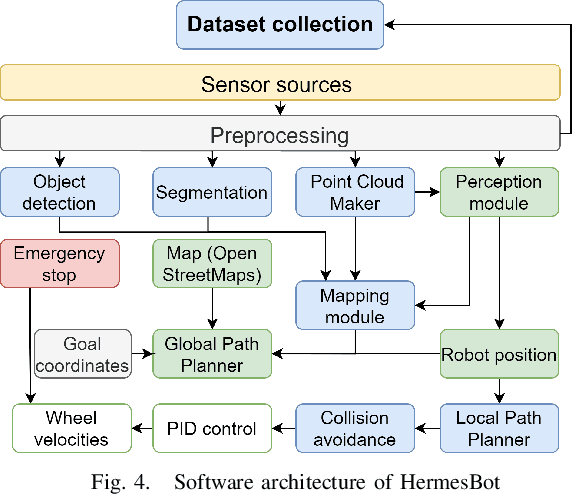

Abstract: Mobile autonomous robots include numerous sensors for environment perception. Cameras are an essential tool for robot localization, navigation, and obstacle avoidance. To process the large flow of sensor data, it is necessary either to optimize the algorithms or to use substantial computational power. In our work, we propose an algorithm for optimizing a neural network for object detection using preliminary binary frame classification. The experimental setup was an autonomous outdoor mobile robot with 6 rolling-shutter cameras around its perimeter, providing a 360-degree field of view. The experimental results revealed that the proposed optimization reduces the inference time of the neural network in cases where up to 5 out of 6 cameras contain target objects.
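Preliminary binary frame classification can be read as a gate: a cheap classifier decides per camera frame whether any target is present, and only flagged frames reach the expensive detector. The sketch below shows that control flow under stated assumptions. The classifier and detector are placeholder callables rather than the paper's networks. The cost model is deliberately naive: it is additive and ignores batching. The per-frame costs in the example are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Frame = Sequence[float]  # stand-in for a camera image (hypothetical)


def detect_with_gating(
    frames: List[Frame],
    has_target: Callable[[Frame], bool],
    detect: Callable[[Frame], List[str]],
) -> List[Tuple[int, str]]:
    """Run the expensive detector only on frames the cheap classifier flags.

    Returns (camera_index, label) pairs for every detection.
    """
    results: List[Tuple[int, str]] = []
    for cam, frame in enumerate(frames):
        if not has_target(frame):  # cheap binary pre-classification
            continue               # frame skipped: no detector inference
        results.extend((cam, label) for label in detect(frame))
    return results


def gated_cost(n_frames: int, n_positive: int,
               cls_cost: float, det_cost: float) -> float:
    """Per-cycle cost: classify every frame, detect only on positive ones."""
    return n_frames * cls_cost + n_positive * det_cost


def baseline_cost(n_frames: int, det_cost: float) -> float:
    """Per-cycle cost when the detector runs on every frame."""
    return n_frames * det_cost


if __name__ == "__main__":
    # Six cameras; in this toy example a frame "contains a target" if any value > 0.5.
    frames = [[0.1], [0.9], [0.2], [0.0], [0.7], [0.3]]
    calls = []

    def fake_detect(frame: Frame) -> List[str]:
        calls.append(frame)
        return ["object"]

    print(detect_with_gating(frames, lambda f: max(f) > 0.5, fake_detect))
    # [(1, 'object'), (4, 'object')]
    print(len(calls))  # 2 detector calls instead of 6
    # Hypothetical costs in ms: classifier 2.0, detector 20.0.
    print(gated_cost(6, 5, 2.0, 20.0), baseline_cost(6, 20.0))  # 112.0 120.0
    print(gated_cost(6, 6, 2.0, 20.0))  # 132.0
```

With these made-up costs, gating pays off while at most 5 of the 6 frames are positive and loses when all 6 are. This happens to match the shape of the result reported above, but the actual break-even point depends on the real classifier and detector costs.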

Comparison of modern open-source visual SLAM approaches

Aug 03, 2021

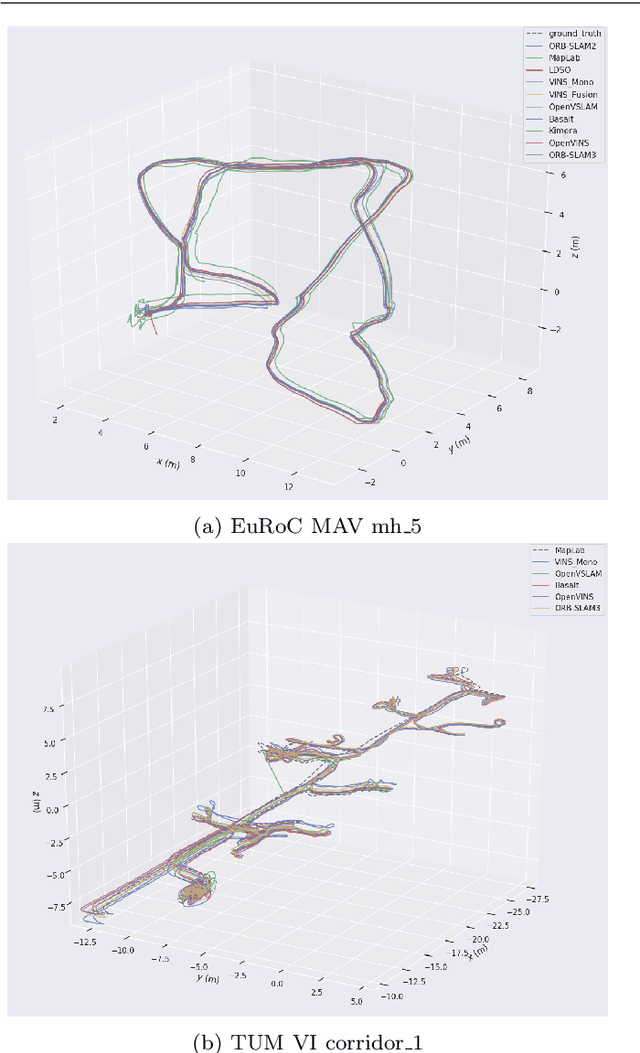

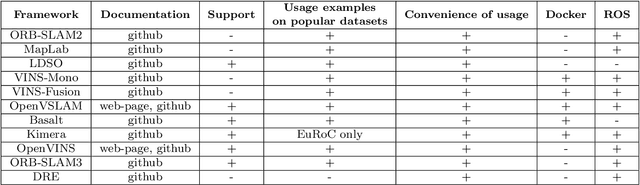

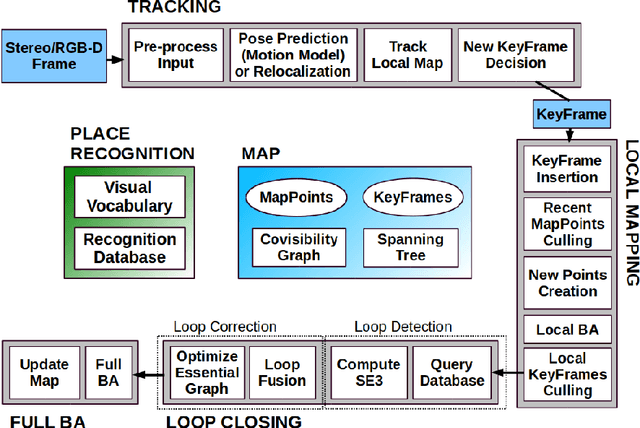

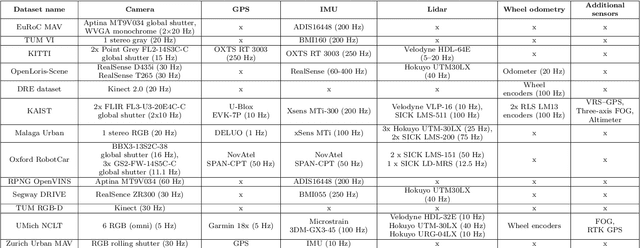

Abstract: SLAM is one of the most fundamental areas of research in robotics and computer vision. State-of-the-art solutions have advanced significantly in terms of accuracy and stability. Unfortunately, not all approaches are available as open-source solutions that are free to use. The results of some of them are difficult to reproduce, and comparisons on common datasets are lacking. In our work, we make a comparative analysis of state-of-the-art open-source methods. We assess the algorithms based on accuracy, computational performance, robustness, and fault tolerance. Moreover, we present a comparison of datasets as well as an analysis of the algorithms from a practical point of view. The findings of the work raise several crucial questions for SLAM researchers.
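Accuracy comparisons of SLAM systems are commonly reported as the absolute trajectory error (ATE). The abstract does not state which metric the paper uses, so the following is only a sketch of the usual RMSE form of ATE. It assumes the estimated and ground-truth trajectories have already been time-associated and aligned in a common frame. Evaluation tools typically perform that alignment step, for example a rigid or similarity fit, before computing the error.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def ate_rmse(estimate: Sequence[Point],
             ground_truth: Sequence[Point]) -> float:
    """Root-mean-square absolute trajectory error over matched positions.

    Assumes both trajectories are already time-associated (same length,
    same ordering) and expressed in the same, already aligned frame.
    """
    if len(estimate) != len(ground_truth) or not estimate:
        raise ValueError("trajectories must be non-empty and of equal length")
    # Squared Euclidean distance between each estimated and true position.
    sq = [sum((e - g) ** 2 for e, g in zip(p, q))
          for p, q in zip(estimate, ground_truth)]
    return math.sqrt(sum(sq) / len(sq))
```

For instance, position errors of 1 m and 7 m at two poses give squared errors of 1 and 49, a mean of 25, and an ATE RMSE of 5.0 m. Because the errors are squared, a few large drifts dominate the score. This is one reason comparisons often report robustness separately, as the abstract above does.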

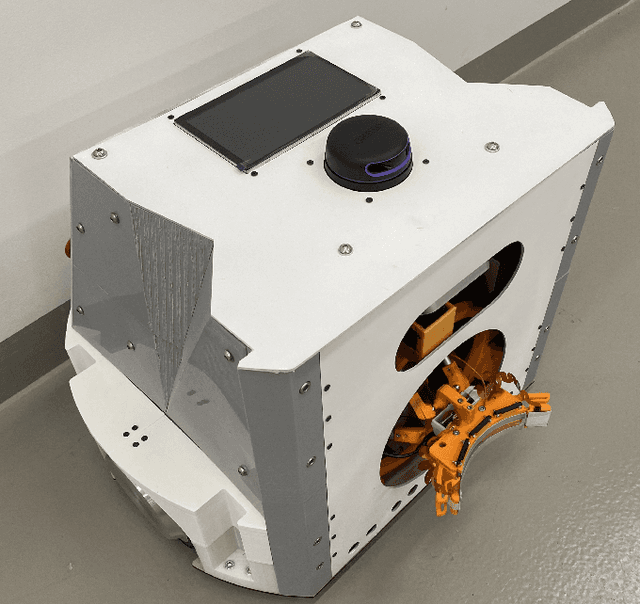

MobileCharger: an Autonomous Mobile Robot with Inverted Delta Actuator for Robust and Safe Robot Charging

Jul 23, 2021

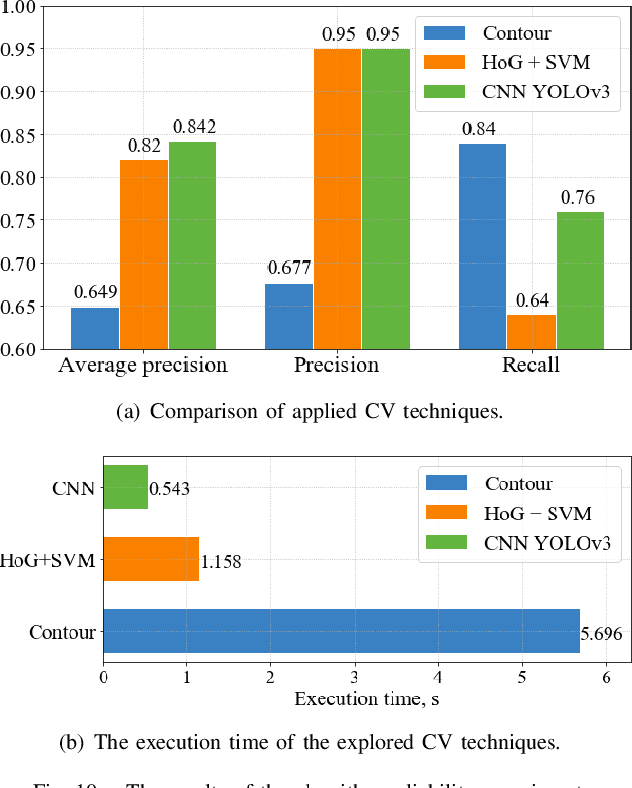

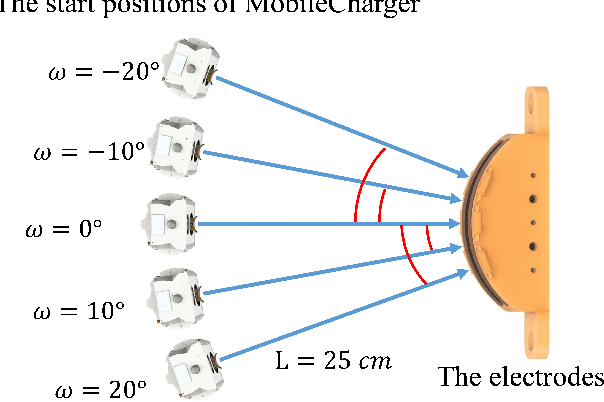

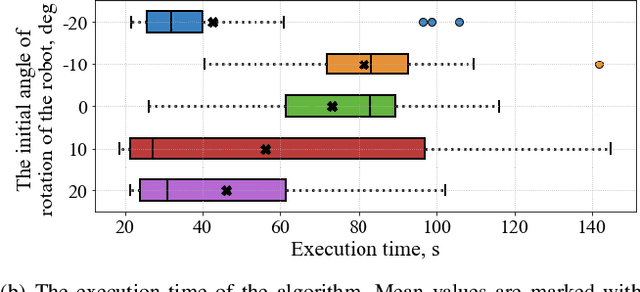

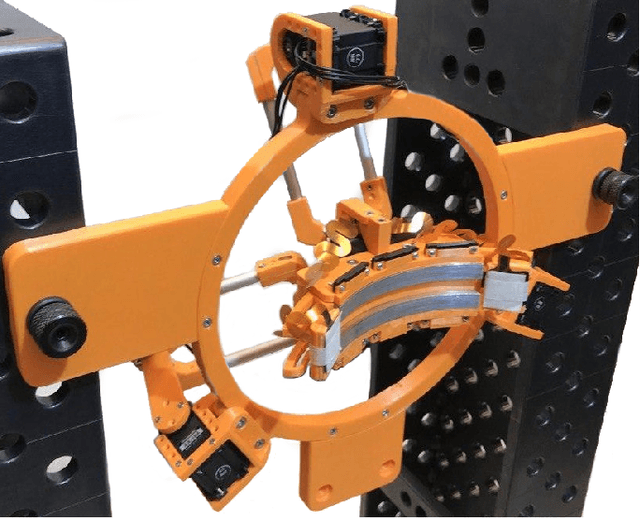

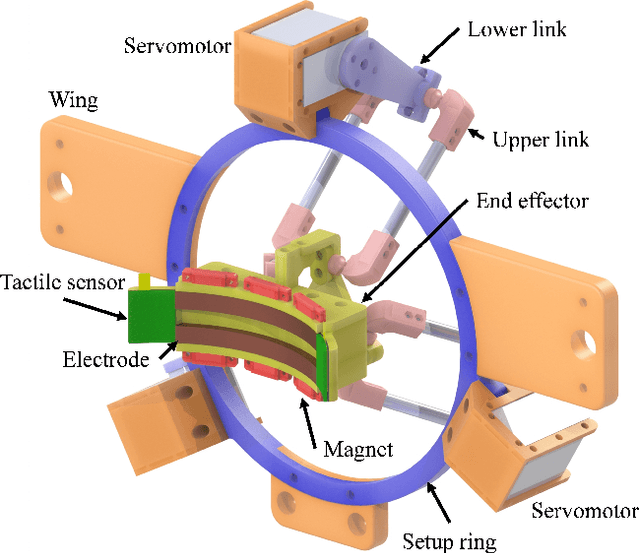

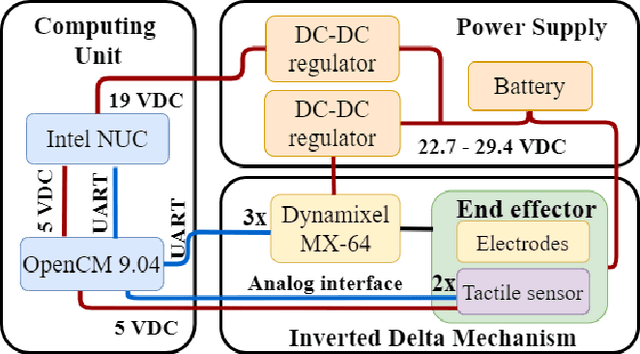

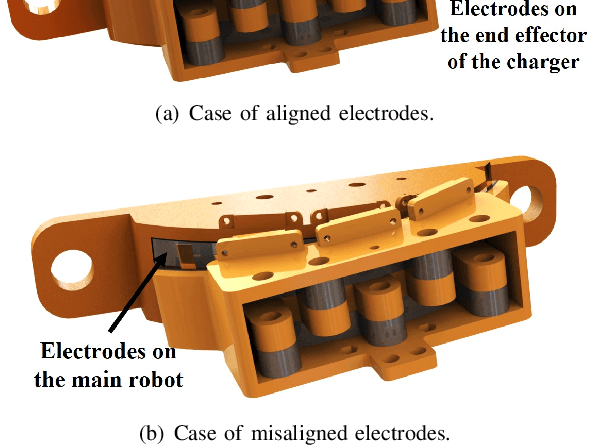

Abstract: MobileCharger is a novel mobile charging robot with an Inverted Delta actuator for safe and robust energy transfer between two mobile robots. Its RGB-D camera-based computer vision system detects the electrodes on the target mobile robot using a convolutional neural network (CNN). The embedded high-fidelity tactile sensors are used to estimate the misalignment between the electrodes on the charger mechanism and those on the main robot, with a CNN applied to pressure data from the contact surfaces. Thus, the developed vision-tactile perception system allows precise positioning of the actuator's end effector and ensures a reliable connection between the electrodes of the two robots. The experimental results showed a high average precision (84.2%) for electrode detection using the CNN. The success rate of the CNN-based electrode search algorithm reached 83%, and the average execution time was 60 s. MobileCharger could bring charging systems to a new level and increase the prevalence of autonomous mobile robots.

DeltaCharger: Charging Robot with Inverted Delta Mechanism and CNN-driven High Fidelity Tactile Perception for Precise 3D Positioning

Jul 22, 2021

Abstract: DeltaCharger is a novel charging robot with an Inverted Delta structure for 3D positioning of electrodes, achieving robust and safe energy transfer between two mobile robots. The embedded high-fidelity tactile sensors make it possible to estimate the angular, vertical, and horizontal misalignments between the electrodes on the charger mechanism and those on the target robot from pressure data on the contact surfaces. This is crucial for preventing a short circuit. In this paper, the mechanism of the developed prototype and an evaluation study of different machine learning models for misalignment prediction are presented. The experimental results showed that, using a Convolutional Neural Network (CNN), the proposed system can measure the angular, vertical, and horizontal misalignment from pressure data with accuracies of 95.46%, 98.2%, and 86.9%, respectively. DeltaCharger can potentially bring charging systems to a new level and increase the prevalence of mobile autonomous robots.
