Dongrui Wu

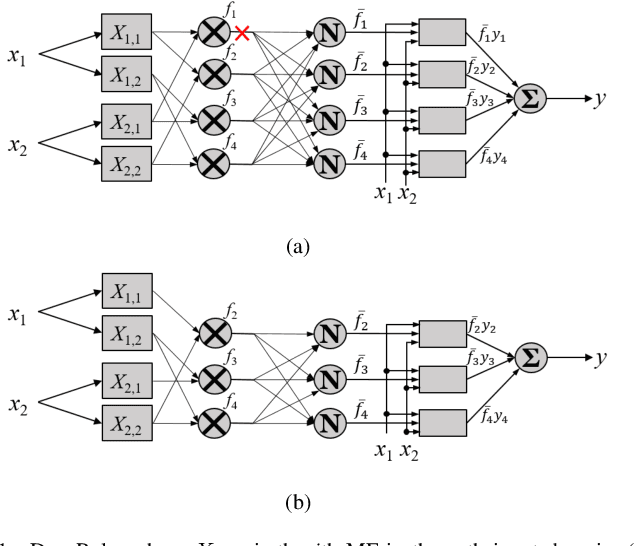

Multi-View Broad Learning System for Primate Oculomotor Decision Decoding

Aug 16, 2019

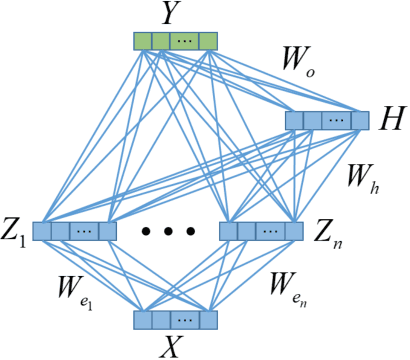

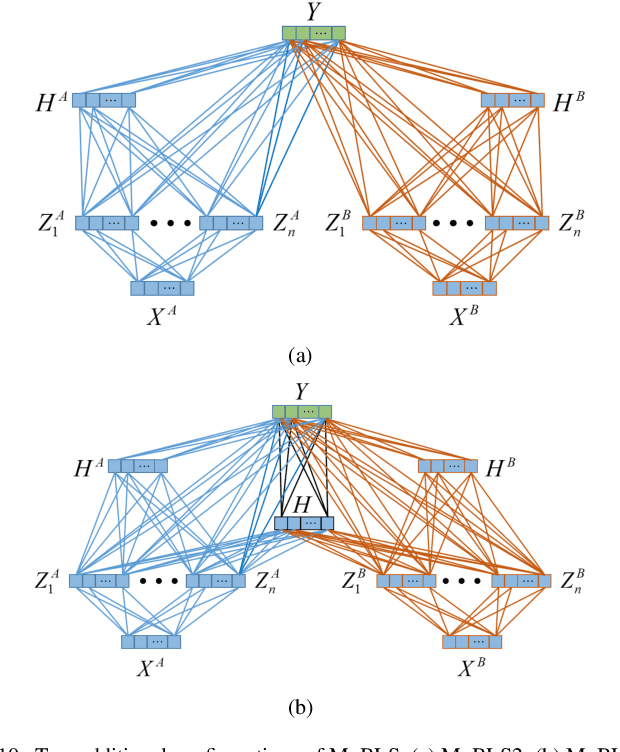

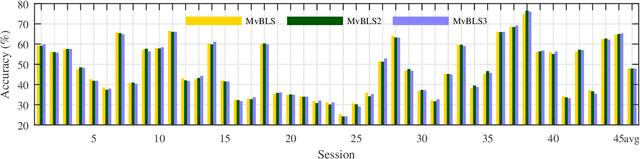

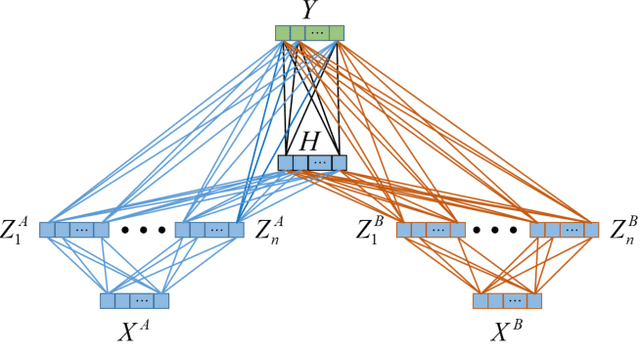

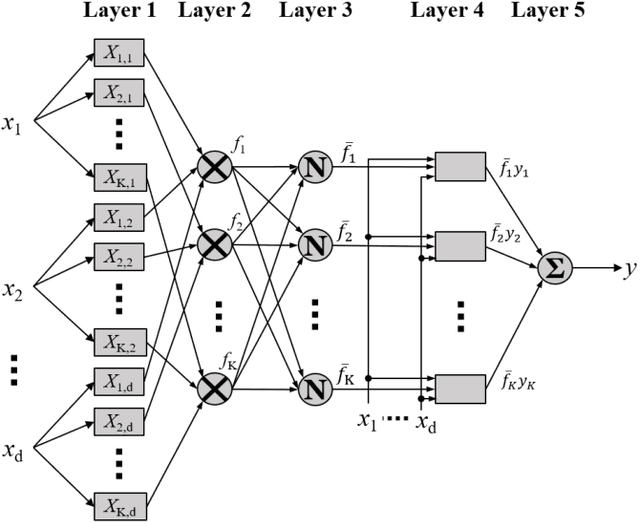

Abstract: Multi-view learning improves learning performance by utilizing multi-view data: data collected from multiple sources, or feature sets extracted from the same data source. This approach is suitable for primate brain state decoding using cortical neural signals, because the complementary components of simultaneously recorded neural signals, local field potentials (LFPs) and action potentials (spikes), can be treated as two views. In this paper, we extended the broad learning system (BLS), a recently proposed wide neural network architecture, from single-view learning to multi-view learning, and validated its performance in monkey oculomotor decision decoding from medial frontal LFPs and spikes. We demonstrated that medial frontal LFPs and spikes in non-human primates do contain complementary information about the oculomotor decision, and that the proposed multi-view BLS classifies the oculomotor decision more effectively than several classical and state-of-the-art single-view and multi-view learning approaches.

Optimize TSK Fuzzy Systems for Big Data Classification Problems: Bag of Tricks

Aug 01, 2019

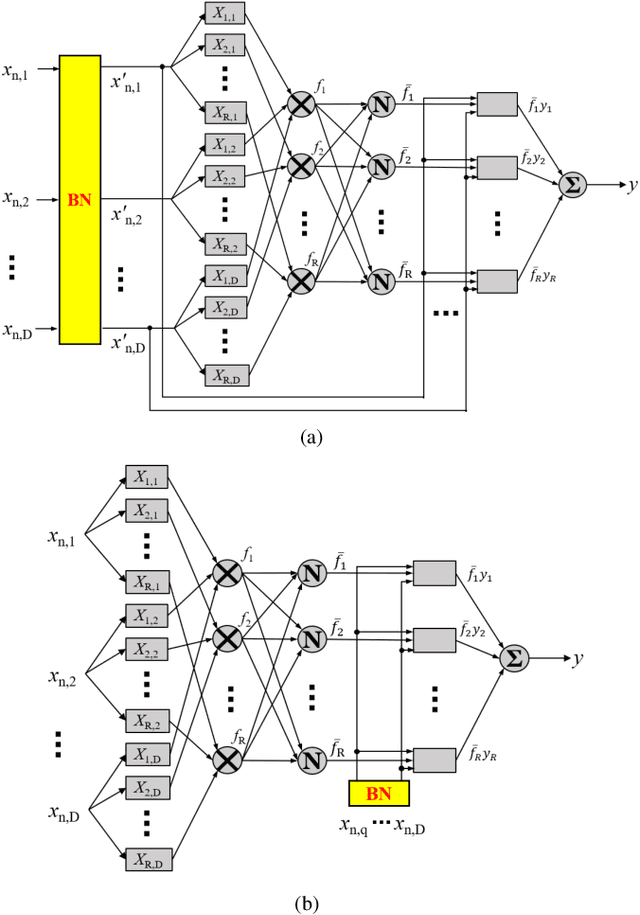

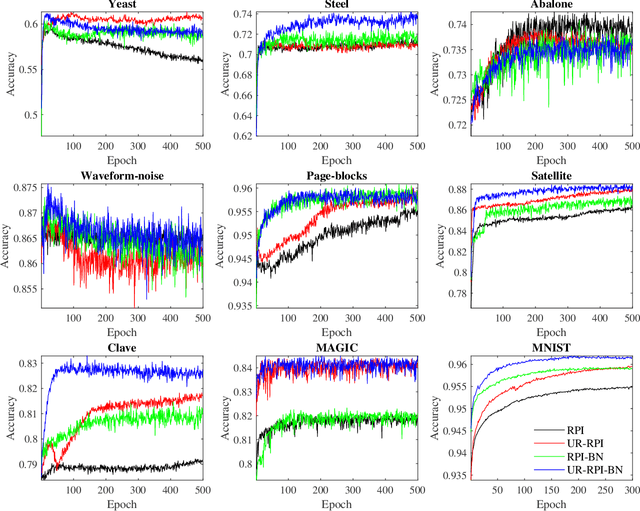

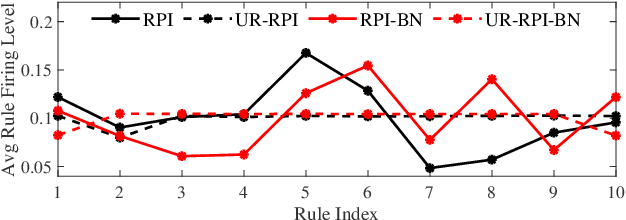

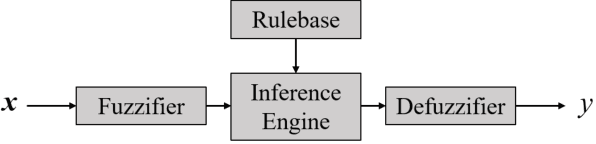

Abstract: Takagi-Sugeno-Kang (TSK) fuzzy systems are flexible and interpretable machine learning models; however, they may not be easily applicable to big data problems, especially when the size and the dimensionality of the data are both large. This paper proposes a mini-batch gradient descent (MBGD) based algorithm to efficiently and effectively train TSK fuzzy systems for big data classification problems. It integrates three novel techniques: 1) uniform regularization (UR), which is a regularization term added to the loss function to make sure the rules have similar average firing levels, and hence better generalization performance; 2) random percentile initialization (RPI), which initializes the membership function parameters efficiently and reliably; and 3) batch normalization (BN), which extends BN from deep neural networks to TSK fuzzy systems to speed up convergence and improve generalization. Experiments on nine datasets from various application domains, with varying size and feature dimensionality, demonstrated that each of UR, RPI and BN has its own unique advantages, and that integrating all three achieves the best classification performance.

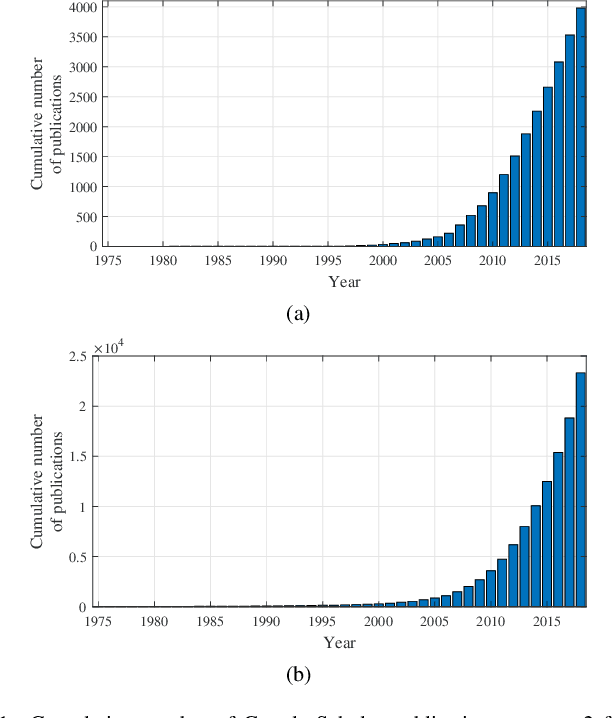

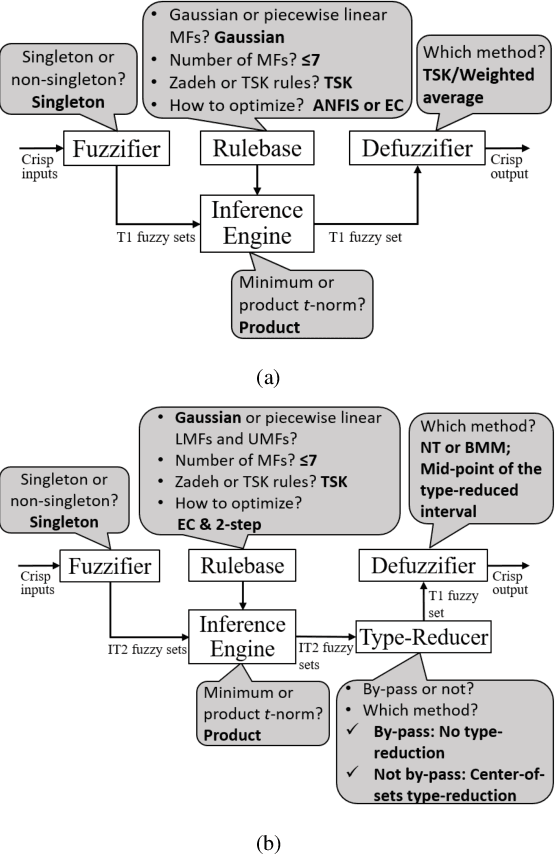

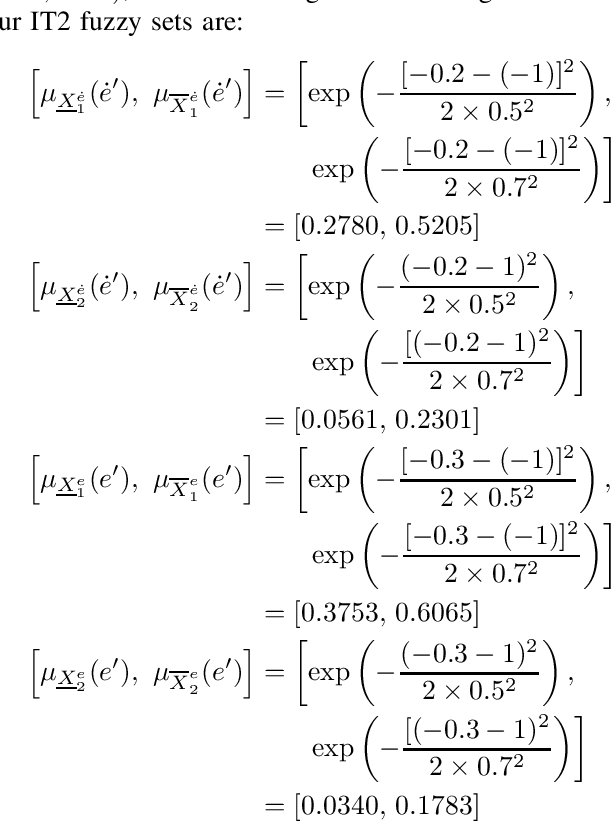

Recommendations on Designing Practical Interval Type-2 Fuzzy Systems

Jul 03, 2019

Abstract: Interval type-2 (IT2) fuzzy systems have become increasingly popular in the last 20 years. They have demonstrated superior performance in many applications. However, the operation of an IT2 fuzzy system is more complex than that of its type-1 counterpart. There are many questions to be answered in designing an IT2 fuzzy system: Should a singleton or non-singleton fuzzifier be used? How many membership functions (MFs) should be used for each input? Should Gaussian or piecewise linear MFs be used? Should Mamdani or Takagi-Sugeno-Kang (TSK) inference be used? Should the minimum or product $t$-norm be used? Should type-reduction be used or not? How should the IT2 fuzzy system be optimized? These questions may look overwhelming and confusing to IT2 beginners. In this paper we recommend some representative starting choices for an IT2 fuzzy system design, which hopefully will make IT2 fuzzy systems more accessible to IT2 fuzzy system designers.

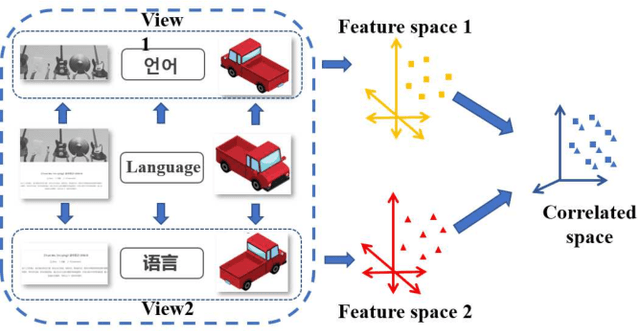

Canonical Correlation Analysis (CCA) Based Multi-View Learning: An Overview

Jul 03, 2019

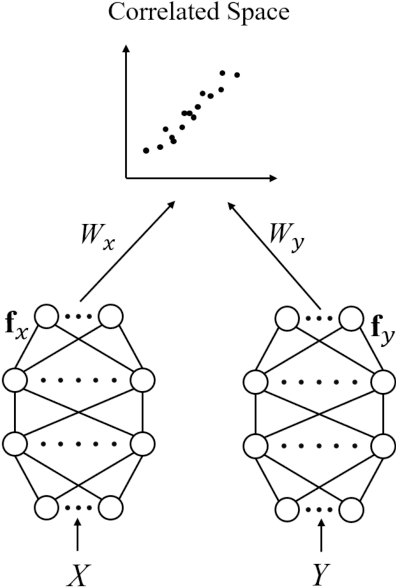

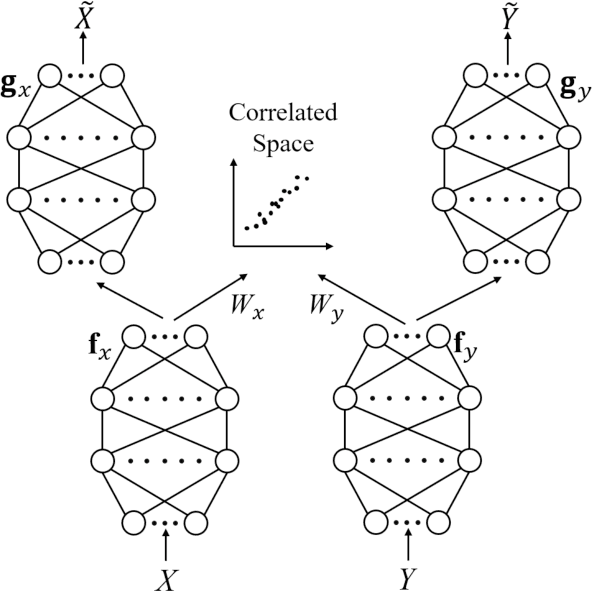

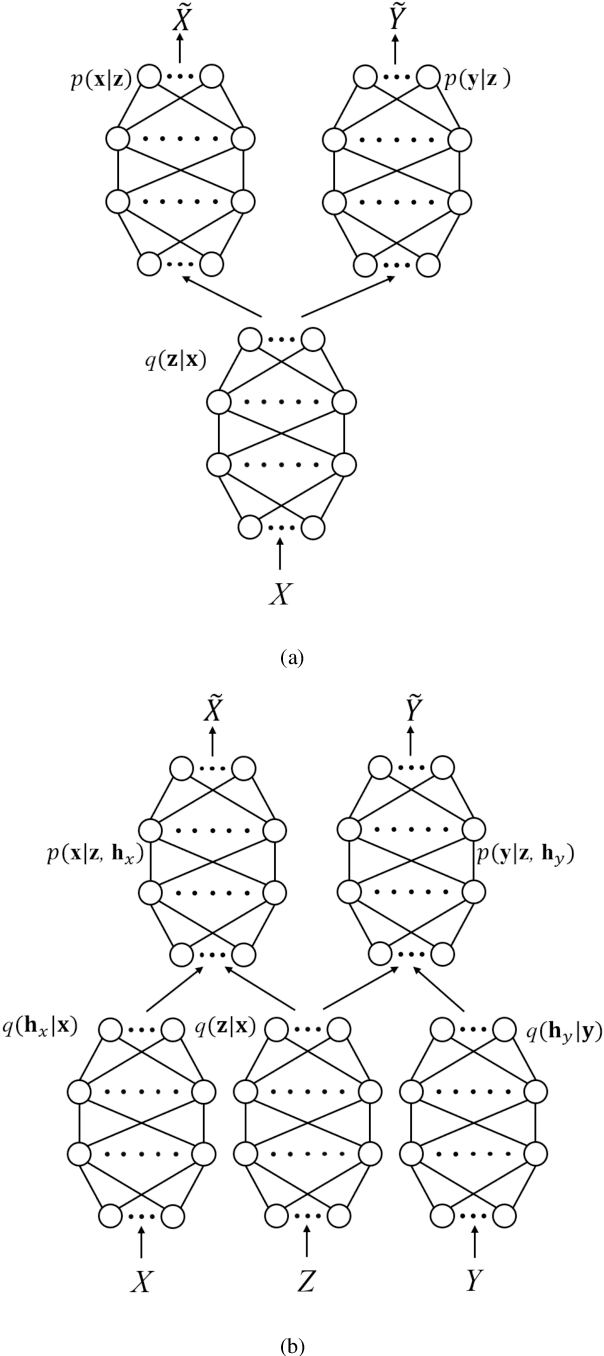

Abstract: Multi-view learning (MVL) is a strategy for fusing data from different sources or subsets. Canonical correlation analysis (CCA), whose main idea is to map data from different views onto a common space with the maximum correlation, is very important in MVL. The traditional CCA can only be used to calculate the linear correlation between two views. Moreover, it is unsupervised, so the label information is wasted in supervised learning tasks. Many nonlinear, supervised, or generalized extensions have been proposed to overcome these limitations. However, to our knowledge, there is no up-to-date overview of these approaches. This paper fills this gap by providing a comprehensive overview of many classical and recent CCA approaches, and describing their typical applications in pattern recognition, multi-modal retrieval and classification, and multi-view embedding.
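As an illustration of the "common space with the maximum correlation" idea, the following is a minimal sketch of classical two-view linear CCA, written here for this listing and not taken from the paper. The function name `linear_cca`, the small ridge term `reg`, and the whitening-plus-SVD formulation are assumptions chosen for brevity.

```python
import numpy as np

def linear_cca(X, Y, n_components=1, reg=1e-8):
    """Two-view linear CCA via whitening and SVD (illustrative sketch).

    X: (n, dx) and Y: (n, dy) hold the same n samples seen from two views.
    Returns projection matrices Wx, Wy and the canonical correlations.
    """
    n = X.shape[0]
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    # Covariances; `reg` is an assumed ridge term for numerical stability.
    Sxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])
    Syy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    Sxy = Xc.T @ Yc / (n - 1)

    def inv_sqrt(S):
        # Inverse matrix square root of a symmetric positive definite matrix.
        w, V = np.linalg.eigh(S)
        return V @ np.diag(1.0 / np.sqrt(w)) @ V.T

    Kx, Ky = inv_sqrt(Sxx), inv_sqrt(Syy)
    # Singular values of the whitened cross-covariance are the canonical correlations.
    U, s, Vt = np.linalg.svd(Kx @ Sxy @ Ky)
    Wx = Kx @ U[:, :n_components]
    Wy = Ky @ Vt.T[:, :n_components]
    return Wx, Wy, s[:n_components]
```

Projecting the centered views with `Wx` and `Wy` gives one-dimensional (or low-dimensional) representations whose correlation is the returned value. This linear, unsupervised form is the baseline that the nonlinear and supervised extensions mentioned in the abstract build on.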

Protecting Privacy of Users in Brain-Computer Interface Applications

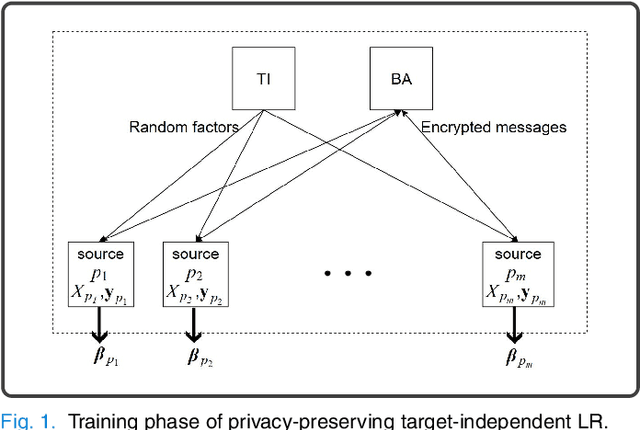

Jul 02, 2019

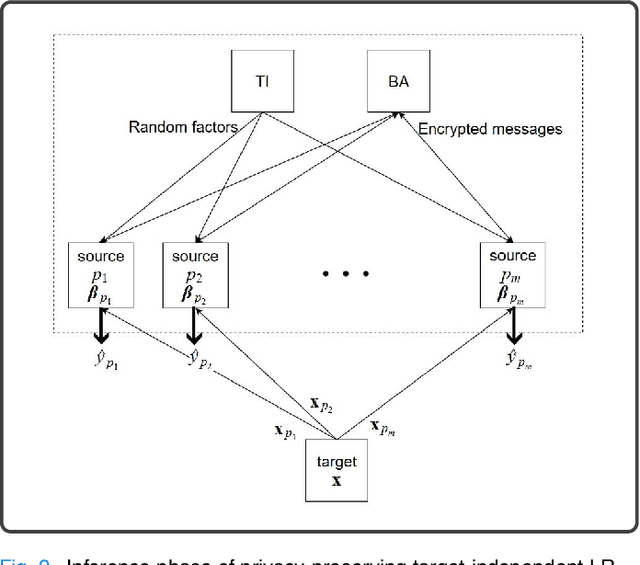

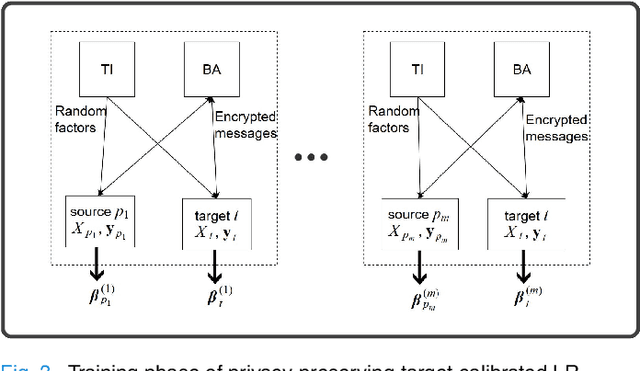

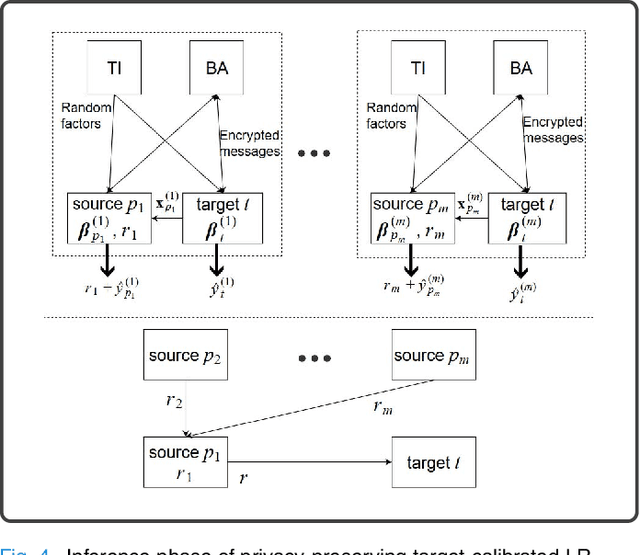

Abstract: Machine learning (ML) is revolutionizing research and industry. Many ML applications rely on the use of large amounts of personal data for training and inference. Among the most intimate exploited data sources is electroencephalogram (EEG) data, a kind of data that is so rich with information that application developers can easily gain knowledge beyond the professed scope from unprotected EEG signals, including passwords, ATM PINs, and other intimate data. The challenge we address is how to engage in meaningful ML with EEG data while protecting the privacy of users. Hence, we propose cryptographic protocols based on Secure Multiparty Computation (SMC) to perform linear regression over EEG signals from many users in a fully privacy-preserving (PP) fashion, i.e., such that each individual's EEG signals are not revealed to anyone else. To illustrate the potential of our secure framework, we show how it allows estimating the drowsiness of drivers from their EEG signals as would be possible in the unencrypted case, and at a very reasonable computational cost. Our solution is the first application of commodity-based SMC to EEG data, as well as the largest documented experiment of secret sharing based SMC in general, namely with 15 players involved in all the computations.
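The abstract does not spell out the protocol, so the following is only a toy sketch of additive secret sharing, the general building block behind secret sharing based SMC. It is not the paper's protocol. The modulus `PRIME`, the function names, and the restriction to non-negative integers smaller than the modulus are all assumptions made for illustration; a real linear regression protocol would also need fixed-point encoding and secure multiplication.

```python
import secrets

# Assumed field modulus (a Mersenne prime), chosen only for illustration.
PRIME = 2**61 - 1

def share(secret, n_players):
    """Split a non-negative integer < PRIME into n additive shares modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_players - 1)]
    # The last share is fixed so that all shares sum to the secret.
    shares.append((secret - sum(shares)) % PRIME)
    return shares

def reconstruct(shares):
    """Recover the secret by adding all shares modulo PRIME."""
    return sum(shares) % PRIME

def secure_sum(private_values, n_players):
    """Sum private values without any player seeing an individual value.

    Each data owner splits its value into one share per player; player j
    locally adds the j-th shares it receives, and only these partial sums
    are combined.
    """
    all_shares = [share(v, n_players) for v in private_values]
    partial_sums = [sum(column) % PRIME for column in zip(*all_shares)]
    return reconstruct(partial_sums)
```

For example, `secure_sum([3, 5, 7], 15)` splits each value across 15 players and recovers only the total. Any proper subset of a value's shares is uniformly random, which is what keeps the individual inputs hidden.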

Patch Learning

Jun 01, 2019

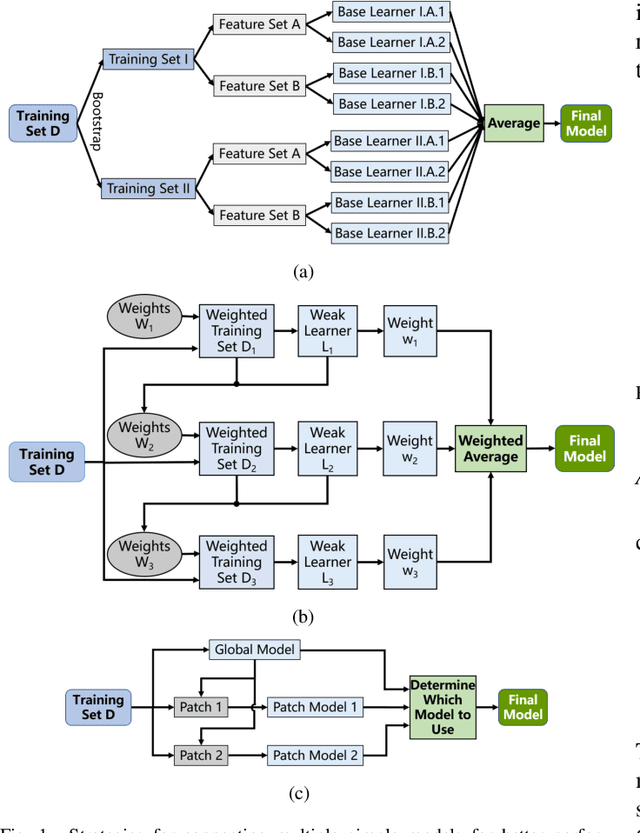

Abstract: There have been different strategies to improve the performance of a machine learning model, e.g., increasing the depth, width, and/or nonlinearity of the model, and using ensemble learning to aggregate multiple base/weak learners in parallel or in series. This paper proposes a novel strategy called patch learning (PL) for this problem. It consists of three steps: 1) train an initial global model using all training data; 2) identify from the initial global model the patches which contribute the most to the learning error, and train a (local) patch model for each such patch; and 3) update the global model using training data that do not fall into any patch. To use a PL model, we first determine if the input falls into any patch. If yes, then the corresponding patch model is used to compute the output. Otherwise, the global model is used. We explain in detail how PL can be implemented using fuzzy systems. Five regression problems, on 1D/2D/3D curve fitting, nonlinear system identification, and chaotic time-series prediction, verified its effectiveness. To our knowledge, the PL idea has not appeared in the literature before, and it opens up a promising new line of research in machine learning.
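The three steps and the inference rule above can be sketched for a 1D regression problem as follows. This is a simplified illustration, not the paper's implementation: the paper uses fuzzy systems, whereas this sketch substitutes polynomial fits (`np.polyfit`) for both the global and patch models, and it assumes patches are fixed-width bins ranked by total squared error. All function and parameter names are hypothetical.

```python
import numpy as np

def fit_patch_learning(x, y, n_bins=10, n_patches=1, deg_global=1, deg_patch=3):
    """Fit a global model plus local patch models on 1D data (illustrative)."""
    # Step 1: train an initial global model on all training data.
    g = np.polyfit(x, y, deg_global)
    err = (np.polyval(g, x) - y) ** 2

    # Step 2: find the bins contributing most to the error; fit a patch model in each.
    edges = np.linspace(x.min(), x.max(), n_bins + 1)
    idx = np.clip(np.digitize(x, edges) - 1, 0, n_bins - 1)
    bin_err = np.bincount(idx, weights=err, minlength=n_bins)
    worst = np.argsort(bin_err)[::-1][:n_patches]
    patches = []
    in_patch = np.zeros(len(x), dtype=bool)
    for b in worst:
        mask = idx == b
        patches.append((edges[b], edges[b + 1], np.polyfit(x[mask], y[mask], deg_patch)))
        in_patch |= mask

    # Step 3: update the global model using only data outside every patch.
    g = np.polyfit(x[~in_patch], y[~in_patch], deg_global)
    return g, patches

def predict_patch_learning(model, x):
    """Use the patch model if the input falls into a patch, else the global model."""
    g, patches = model
    x = np.asarray(x, dtype=float)
    y = np.polyval(g, x)
    for lo, hi, p in patches:
        mask = (x >= lo) & (x <= hi)
        y[mask] = np.polyval(p, x[mask])
    return y
```

On data that is linear except for a localized offset, the global model in this sketch ends up fitting the linear trend while the patch model absorbs the offset region.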

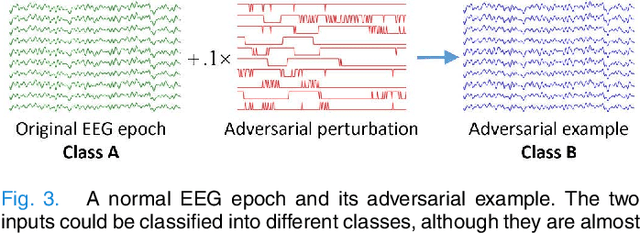

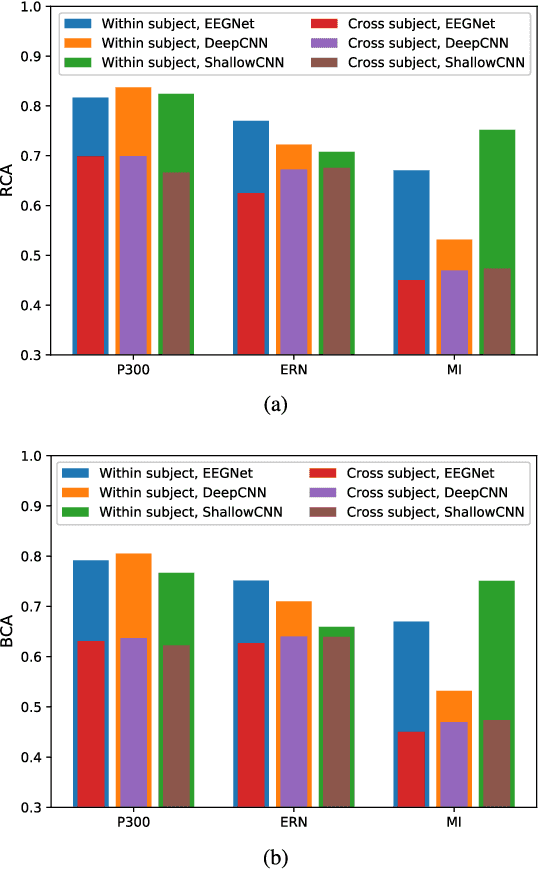

On the Vulnerability of CNN Classifiers in EEG-Based BCIs

Mar 31, 2019

Abstract: Deep learning has been successfully used in numerous applications because of its outstanding performance and the ability to avoid manual feature engineering. One such application is electroencephalogram (EEG) based brain-computer interface (BCI), where multiple convolutional neural network (CNN) models have been proposed for EEG classification. However, it has been found that deep learning models can be easily fooled with adversarial examples, which are normal examples with small deliberate perturbations. This paper proposes an unsupervised fast gradient sign method (UFGSM) to attack three popular CNN classifiers in BCIs, and demonstrates its effectiveness. We also verify the transferability of adversarial examples in BCIs, which means we can perform attacks even without knowing the architecture and parameters of the target models, or the datasets they were trained on. To our knowledge, this is the first study on the vulnerability of CNN classifiers in EEG-based BCIs, and we hope it will draw more attention to the security of BCI systems.
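To make "small deliberate perturbations" concrete, here is a minimal sketch of the fast gradient sign method on a logistic regression model instead of a CNN. The label-free branch, which substitutes the model's own prediction for the true label, is an assumption about what "unsupervised" could mean here and may differ from the paper's UFGSM. The names `fgsm_perturb`, `w`, `b`, and `eps` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(x, w, b, eps, y=None):
    """One-step sign-gradient attack on p = sigmoid(x @ w + b).

    x: (n, d) inputs, w: (d,) weights, b: scalar bias, eps: perturbation size.
    If y is None, the model's predicted label stands in for the true label
    (an assumed label-free variant).
    """
    p = sigmoid(x @ w + b)
    if y is None:
        y = (p >= 0.5).astype(float)
    # Gradient of the binary cross-entropy loss with respect to x is (p - y) * w.
    grad = (p - y)[:, None] * w[None, :]
    # Step in the direction that increases the loss, bounded by eps per feature.
    return x + eps * np.sign(grad)
```

Each feature moves by at most `eps`, so the adversarial example stays close to the original input while the loss with respect to the (predicted) label increases.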

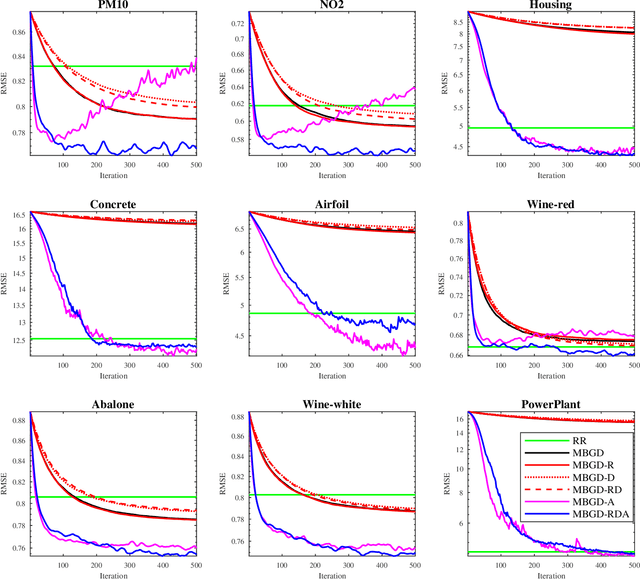

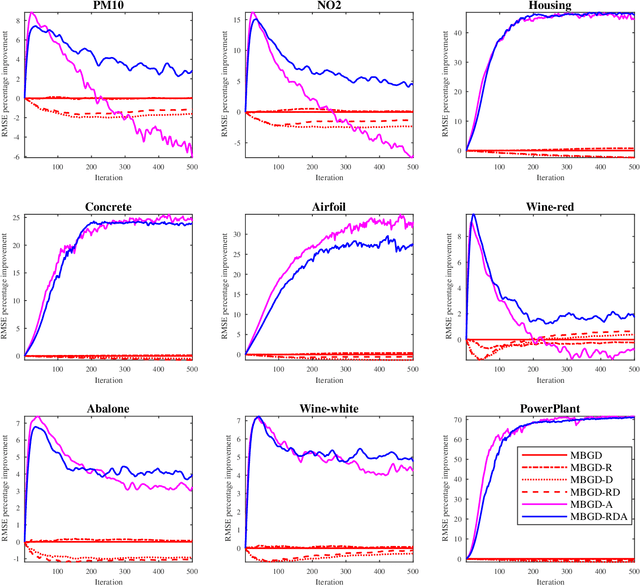

Optimize TSK Fuzzy Systems for Big Data Regression Problems: Mini-Batch Gradient Descent with Regularization, DropRule and AdaBound

Mar 26, 2019

Abstract: Takagi-Sugeno-Kang (TSK) fuzzy systems are very useful machine learning models for regression problems. However, to our knowledge, there has been no efficient and effective training algorithm that enables them to deal with big data. Inspired by the connections between TSK fuzzy systems and neural networks, we extend three powerful neural network optimization techniques, i.e., mini-batch gradient descent, regularization, and AdaBound, to TSK fuzzy systems, and also propose a novel DropRule technique specifically for training TSK fuzzy systems. Our final algorithm, mini-batch gradient descent with regularization, DropRule and AdaBound (MBGD-RDA), can achieve fast convergence in training TSK fuzzy systems, and also superior generalization performance in testing. It can be used for training TSK fuzzy systems on datasets of any size; however, it is particularly useful for big datasets, on which currently no other efficient training algorithms exist.
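The abstract names DropRule without defining it. The sketch below shows a first-order TSK forward pass and one plausible reading of DropRule, by analogy with dropout: during training, each rule's firing level is randomly zeroed before normalization. The Gaussian membership functions, product t-norm, per-sample dropping, and the safeguard that keeps the strongest rule are all assumptions, not details confirmed by the source.

```python
import numpy as np

def tsk_forward(X, centers, sigmas, conseq, drop_prob=0.0, rng=None):
    """First-order TSK output with Gaussian MFs and product t-norm (illustrative).

    X: (N, D) inputs; centers, sigmas: (R, D) membership function parameters;
    conseq: (R, D + 1) rule consequents with the bias in column 0.
    drop_prob > 0 enables the assumed DropRule behaviour (training only).
    """
    # Firing level of each rule: product over features of Gaussian memberships.
    z = (X[:, None, :] - centers[None]) / sigmas[None]
    f = np.exp(-0.5 * np.sum(z ** 2, axis=2))  # shape (N, R)

    if drop_prob > 0.0:
        rng = np.random.default_rng() if rng is None else rng
        keep = rng.random(f.shape) >= drop_prob
        # Assumed safeguard: always keep each sample's strongest rule.
        keep[np.arange(len(X)), np.argmax(f, axis=1)] = True
        f = f * keep

    # Normalize the firing levels and combine the linear rule outputs.
    f_norm = f / f.sum(axis=1, keepdims=True)
    rule_out = conseq[:, 0][None] + X @ conseq[:, 1:].T  # shape (N, R)
    return np.sum(f_norm * rule_out, axis=1)
```

With `drop_prob=0.0` this is an ordinary TSK forward pass, which is what one would use at test time under this reading. Because the output is a normalized weighted average, dropping rules needs no rescaling of the remaining firing levels.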

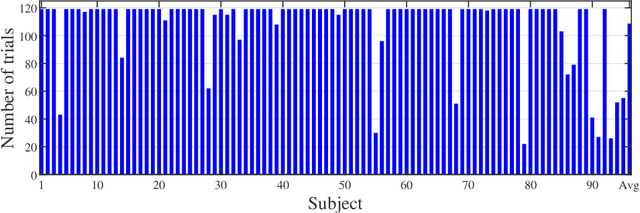

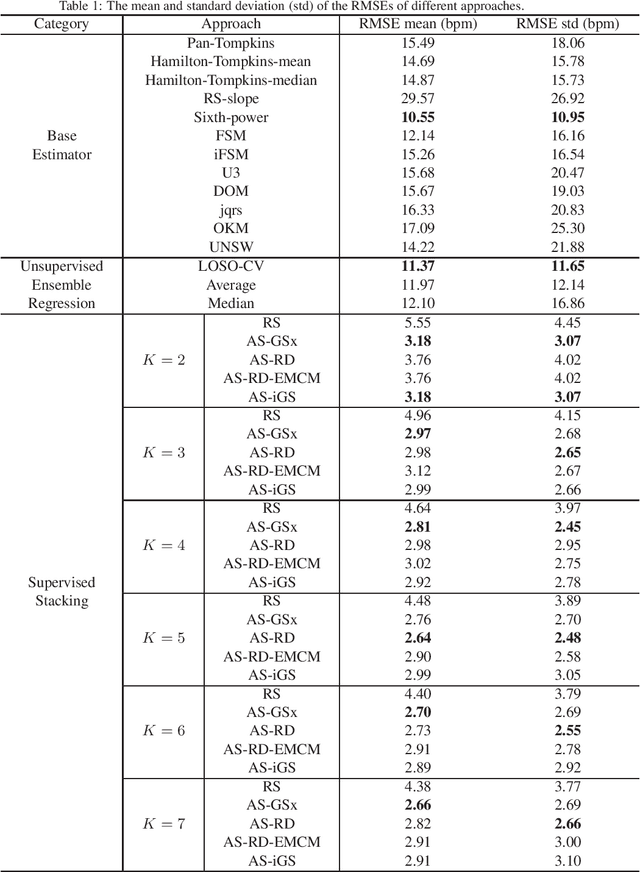

Active Stacking for Heart Rate Estimation

Mar 26, 2019

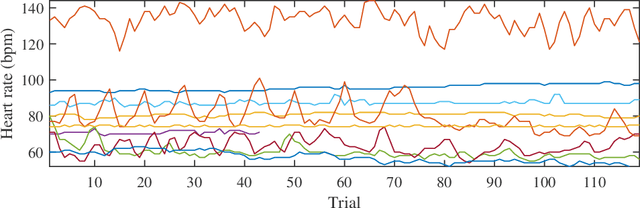

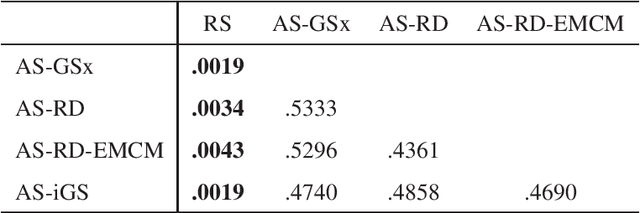

Abstract: Heart rate estimation from electrocardiogram signals is very important for the early detection of cardiovascular diseases. However, due to large individual differences and varying electrocardiogram signal quality, there does not exist a single reliable estimation algorithm that works well on all subjects. Every algorithm may break down on certain subjects, resulting in a significant estimation error. Ensemble regression, which aggregates the outputs of multiple base estimators for more reliable and stable estimates, can be used to remedy this problem. Moreover, active learning can be used to optimally select a few trials from a new subject to label, based on which a stacking ensemble regression model can be trained to aggregate the base estimators. This paper proposes four active stacking approaches, and demonstrates that they all significantly outperform three common unsupervised ensemble regression approaches, and a supervised stacking approach which randomly selects some trials to label. Remarkably, our active stacking approaches only need three or four labeled trials from each subject to achieve an average root mean squared estimation error below three beats per minute, making them very convenient for real-world applications. To our knowledge, this is the first research on active stacking, and its application to heart rate estimation.

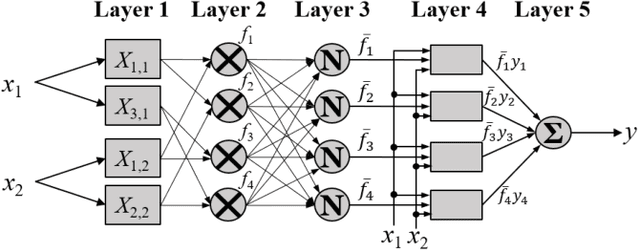

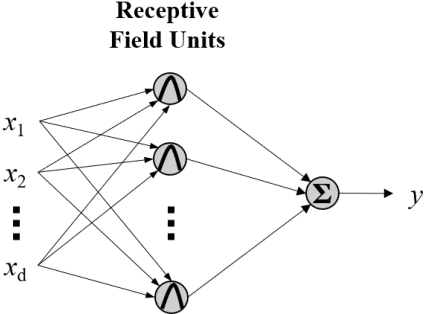

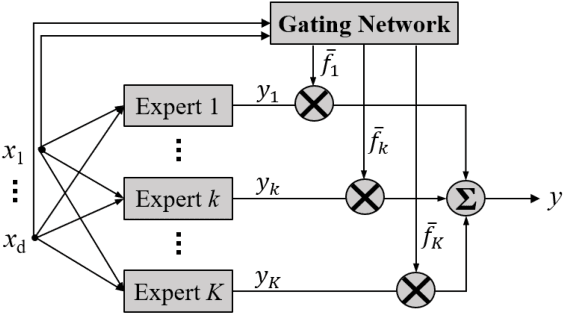

On the Functional Equivalence of TSK Fuzzy Systems to Neural Networks, Mixture of Experts, CART, and Stacking Ensemble Regression

Mar 25, 2019

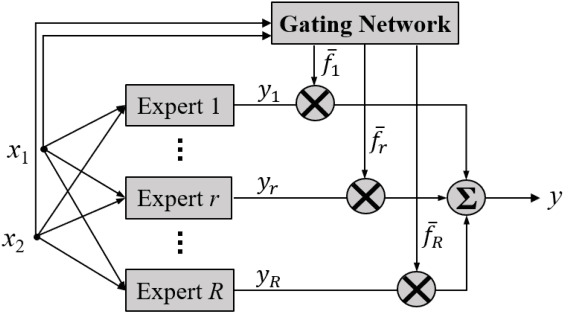

Abstract: Fuzzy systems have achieved great success in numerous applications. However, there are still many challenges in designing an optimal fuzzy system, e.g., how to efficiently train its parameters, how to improve its performance without adding too many parameters, how to balance the trade-off between cooperation and competition among the rules, how to overcome the curse of dimensionality, etc. The literature has shown that by making appropriate connections between fuzzy systems and other machine learning approaches, good practices from other domains may be used to improve fuzzy systems, and vice versa. This paper gives an overview of the functional equivalence between Takagi-Sugeno-Kang fuzzy systems and four classic machine learning approaches (neural networks, mixture of experts, classification and regression trees, and stacking ensemble regression) for regression problems. We also point out some promising new research directions, inspired by the functional equivalence, that could lead to solutions to the aforementioned problems. To our knowledge, this is so far the most comprehensive overview of the connections between fuzzy systems and other popular machine learning approaches, and hopefully will stimulate more hybridization between different machine learning algorithms.