Martine De Cock

FHAIM: Fully Homomorphic AIM For Private Synthetic Data Generation

Feb 05, 2026

Abstract:Data is the lifeblood of AI, yet much of the most valuable data remains locked in silos due to privacy and regulations. As a result, AI remains heavily underutilized in many of the most important domains, including healthcare, education, and finance. Synthetic data generation (SDG), i.e. the generation of artificial data with a synthesizer trained on real data, offers an appealing solution to make data available while mitigating privacy concerns; however, existing SDG-as-a-service workflows require data holders to trust providers with access to private data. We propose FHAIM, the first fully homomorphic encryption (FHE) framework for training a marginal-based synthetic data generator on encrypted tabular data. FHAIM adapts the widely used AIM algorithm to the FHE setting using novel FHE protocols, ensuring that the private data remains encrypted throughout and is released only with differential privacy guarantees. Our empirical analysis shows that FHAIM preserves the performance of AIM while maintaining feasible runtimes.
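To make "marginal-based" and "released only with differential privacy guarantees" concrete, here is a minimal plaintext sketch of a noisy one-way marginal. It is not the FHAIM protocol and involves no encryption; the function name `noisy_marginal`, the record layout, and the choice of Gaussian noise with scale `sigma` are assumptions made for illustration only.

```python
import random
from collections import Counter


def noisy_marginal(records, attribute, domain, sigma, rng=None):
    """Count each value of `attribute` and add Gaussian noise to every count.

    Hypothetical helper for illustration: operates on plaintext records,
    whereas the abstract describes doing this kind of work under encryption.
    """
    rng = rng or random.Random()
    counts = Counter(record[attribute] for record in records)
    # Report every value in the domain, including values with zero count,
    # so the output shape does not depend on the private data.
    return {value: counts.get(value, 0) + rng.gauss(0.0, sigma) for value in domain}


records = [{"dept": "a"}, {"dept": "a"}, {"dept": "b"}]
exact = noisy_marginal(records, "dept", ["a", "b", "c"], sigma=0.0)
noisy = noisy_marginal(records, "dept", ["a", "b", "c"], sigma=1.0)
```

With `sigma=0.0` the function returns the exact counts, which is useful for checking the counting logic; any real privacy guarantee depends on calibrating `sigma` to the query sensitivity and the privacy budget, which this sketch does not do.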

Large Language Model-Powered Conversational Agent Delivering Problem-Solving Therapy (PST) for Family Caregivers: Enhancing Empathy and Therapeutic Alliance Using In-Context Learning

Jun 13, 2025

Abstract:Family caregivers often face substantial mental health challenges due to their multifaceted roles and limited resources. This study explored the potential of a large language model (LLM)-powered conversational agent to deliver evidence-based mental health support for caregivers, specifically Problem-Solving Therapy (PST) integrated with Motivational Interviewing (MI) and Behavioral Chain Analysis (BCA). A within-subject experiment was conducted with 28 caregivers interacting with four LLM configurations to evaluate empathy and therapeutic alliance. The best-performing models incorporated Few-Shot and Retrieval-Augmented Generation (RAG) prompting techniques, alongside clinician-curated examples. The models showed improved contextual understanding and personalized support, as reflected by qualitative responses and quantitative ratings on perceived empathy and therapeutic alliances. Participants valued the model's ability to validate emotions, explore unexpressed feelings, and provide actionable strategies. However, balancing thorough assessment with efficient advice delivery remains a challenge. This work highlights the potential of LLMs in delivering empathetic and tailored support for family caregivers.

Transforming Tuberculosis Care: Optimizing Large Language Models For Enhanced Clinician-Patient Communication

Feb 28, 2025

Abstract:Tuberculosis (TB) is the leading cause of death from an infectious disease globally, with the highest burden in low- and middle-income countries. In these regions, limited healthcare access and high patient-to-provider ratios impede effective patient support, communication, and treatment completion. To bridge this gap, we propose integrating a specialized Large Language Model into an efficacious digital adherence technology to augment interactive communication with treatment supporters. This AI-powered approach, operating within a human-in-the-loop framework, aims to enhance patient engagement and improve TB treatment outcomes.

End to End Collaborative Synthetic Data Generation

Dec 04, 2024

Abstract:The success of AI is based on the availability of data to train models. While in some cases a single data custodian may have sufficient data to enable AI, often multiple custodians need to collaborate to reach a cumulative size required for meaningful AI research. The latter is, for example, often the case for rare diseases, with each clinical site having data for only a small number of patients. Recent algorithms for federated synthetic data generation are an important step towards collaborative, privacy-preserving data sharing. Existing techniques, however, focus exclusively on synthesizer training, assuming that the training data is already preprocessed and that the desired synthetic data can be delivered in one shot, without any hyperparameter tuning. In this paper, we propose an end-to-end collaborative framework for publishing of synthetic data that accounts for privacy-preserving preprocessing as well as evaluation. We instantiate this framework with Secure Multiparty Computation (MPC) protocols and evaluate it in a use case for privacy-preserving publishing of synthetic genomic data for leukemia.

Privacy Vulnerabilities in Marginals-based Synthetic Data

Oct 07, 2024

Abstract:When acting as a privacy-enhancing technology, synthetic data generation (SDG) aims to maintain a resemblance to the real data while excluding personally identifiable information. Many SDG algorithms provide robust differential privacy (DP) guarantees to this end. However, we show that the strongest class of SDG algorithms--those that preserve marginal probabilities, or similar statistics, from the underlying data--leak information about individuals that can be recovered more efficiently than previously understood. We demonstrate this by presenting a novel membership inference attack, MAMA-MIA, and evaluate it against three seminal DP SDG algorithms: MST, PrivBayes, and Private-GSD. MAMA-MIA leverages knowledge of which SDG algorithm was used, allowing it to learn information about the hidden data more accurately, and orders of magnitude faster, than other leading attacks. We use MAMA-MIA to lend insight into existing SDG vulnerabilities. Our approach went on to win the first SNAKE (SaNitization Algorithm under attacK ... $\varepsilon$) competition.

Toward Large Language Models as a Therapeutic Tool: Comparing Prompting Techniques to Improve GPT-Delivered Problem-Solving Therapy

Aug 27, 2024

Abstract:While Large Language Models (LLMs) are being quickly adapted to many domains, including healthcare, their strengths and pitfalls remain under-explored. In our study, we examine the effects of prompt engineering to guide LLMs in delivering parts of a Problem-Solving Therapy (PST) session via text, particularly during the symptom identification and assessment phase for personalized goal setting. We present evaluation results of the models' performances by automatic metrics and experienced medical professionals. We demonstrate that the models' capability to deliver protocolized therapy can be improved with the proper use of prompt engineering methods, albeit with limitations. To our knowledge, this study is among the first to assess the effects of various prompting techniques in enhancing a generalist model's ability to deliver psychotherapy, focusing on overall quality, consistency, and empathy. Exploring LLMs' potential in delivering psychotherapy holds promise with the current shortage of mental health professionals amid significant needs, enhancing the potential utility of AI-based and AI-enhanced care services.

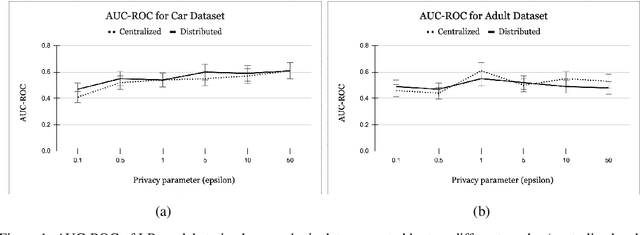

Privacy-Preserving Fair Item Ranking

Mar 06, 2023

Abstract:Users worldwide access massive amounts of curated data in the form of rankings on a daily basis. The societal impact of this ease of access has been studied and work has been done to propose and enforce various notions of fairness in rankings. Current computational methods for fair item ranking rely on disclosing user data to a centralized server, which gives rise to privacy concerns for the users. This work is the first to advance research at the conjunction of producer (item) fairness and consumer (user) privacy in rankings by exploring the incorporation of privacy-preserving techniques; specifically, differential privacy and secure multi-party computation. Our work extends the equity of amortized attention ranking mechanism to be privacy-preserving, and we evaluate its effects with respect to privacy, fairness, and ranking quality. Our results using real-world datasets show that we are able to effectively preserve the privacy of users and mitigate unfairness of items without making additional sacrifices to the quality of rankings in comparison to the ranking mechanism in the clear.

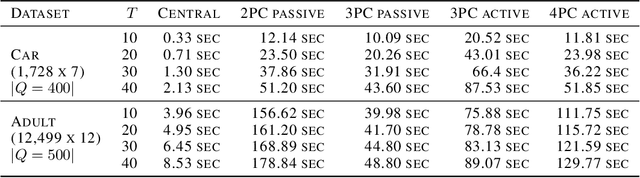

Secure Multiparty Computation for Synthetic Data Generation from Distributed Data

Oct 13, 2022

Abstract:Legal and ethical restrictions on accessing relevant data inhibit data science research in critical domains such as health, finance, and education. Synthetic data generation algorithms with privacy guarantees are emerging as a paradigm to break this data logjam. Existing approaches, however, assume that the data holders supply their raw data to a trusted curator, who uses it as fuel for synthetic data generation. This severely limits the applicability, as much of the valuable data in the world is locked up in silos, controlled by entities who cannot show their data to each other or a central aggregator without raising privacy concerns. To overcome this roadblock, we propose the first solution in which data holders only share encrypted data for differentially private synthetic data generation. Data holders send shares to servers who perform Secure Multiparty Computation (MPC) computations while the original data stays encrypted. We instantiate this idea in an MPC protocol for the Multiplicative Weights with Exponential Mechanism (MWEM) algorithm to generate synthetic data based on real data originating from many data holders without reliance on a single point of failure.
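The idea of data holders sending shares to servers can be illustrated with additive secret sharing, one common building block of MPC. The sketch below is an assumption-laden toy, not the protocol from the paper: the modulus, the function names `share`, `reconstruct`, and `secure_sum`, and the three-server setup are all hypothetical, and it covers only a sum, not the MWEM algorithm.

```python
import secrets

# Hypothetical modulus for this sketch; all arithmetic is done modulo this value.
MODULUS = 2**61 - 1


def share(value, n_servers):
    """Split `value` into n additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_servers - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def reconstruct(shares):
    """Recombine additive shares into the original value."""
    return sum(shares) % MODULUS


def secure_sum(values, n_servers=3):
    """Each data holder shares its value; each server adds the shares it holds."""
    per_holder = [share(v, n_servers) for v in values]
    # Server i only ever sees the i-th share from every holder.
    server_totals = [sum(column) % MODULUS for column in zip(*per_holder)]
    # Only the aggregate is reconstructed, never an individual input.
    return reconstruct(server_totals)


total = secure_sum([3, 5, 10])
```

Any single share is a uniformly random number, so a server learns nothing about an input unless all servers pool their shares. Real MPC frameworks add multiplication protocols and protections against misbehaving servers, which this sketch omits.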

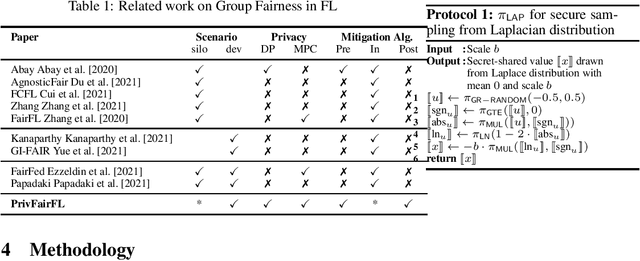

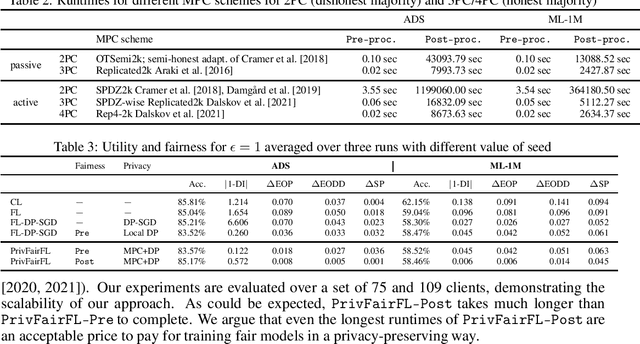

PrivFairFL: Privacy-Preserving Group Fairness in Federated Learning

May 23, 2022

Abstract:Group fairness ensures that the outcomes of machine learning (ML) based decision-making systems are not biased towards a certain group of people defined by a sensitive attribute such as gender or ethnicity. Achieving group fairness in Federated Learning (FL) is challenging because mitigating bias inherently requires using the sensitive attribute values of all clients, while FL is aimed precisely at protecting privacy by not giving access to the clients' data. As we show in this paper, this conflict between fairness and privacy in FL can be resolved by combining FL with Secure Multiparty Computation (MPC) and Differential Privacy (DP). In doing so, we propose a method for training group-fair ML models in cross-device FL under complete and formal privacy guarantees, without requiring the clients to disclose their sensitive attribute values.

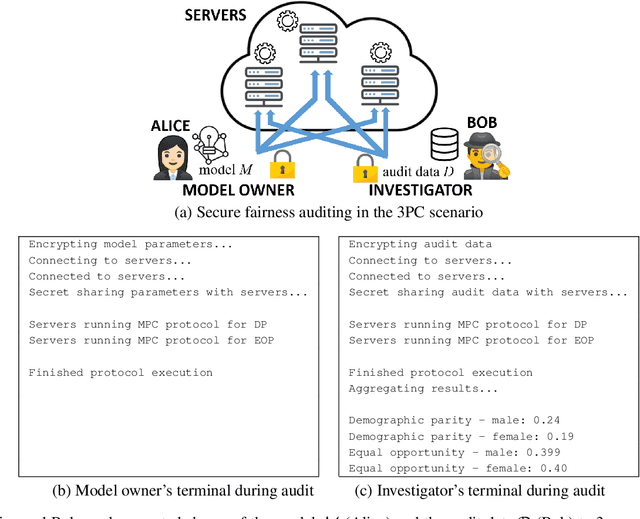

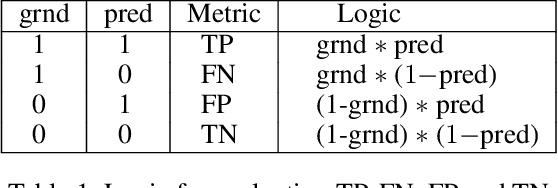

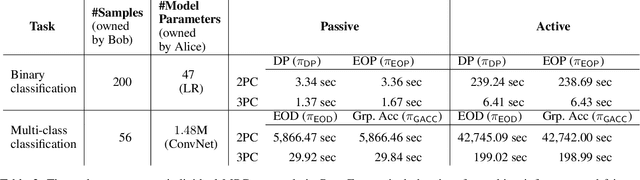

PrivFair: a Library for Privacy-Preserving Fairness Auditing

Feb 09, 2022

Abstract:Machine learning (ML) has become prominent in applications that directly affect people's quality of life, including in healthcare, justice, and finance. ML models have been found to exhibit discrimination based on sensitive attributes such as gender, race, or disability. Assessing if an ML model is free of bias remains challenging to date, and by definition has to be done with sensitive user characteristics that are subject to anti-discrimination and data protection law. Existing libraries for fairness auditing of ML models offer no mechanism to protect the privacy of the audit data. We present PrivFair, a library for privacy-preserving fairness audits of ML models. Through the use of Secure Multiparty Computation (MPC), PrivFair protects the confidentiality of the model under audit and the sensitive data used for the audit; hence it supports scenarios in which a proprietary classifier owned by a company is audited using sensitive audit data from an external investigator. We demonstrate the use of PrivFair for group fairness auditing with tabular data or image data, without requiring the investigator to disclose their data to anyone in an unencrypted manner, or the model owner to reveal their model parameters to anyone in plaintext.
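As a rough picture of what a group fairness audit computes, the sketch below measures the demographic parity gap, one widely used group fairness statistic. The abstract does not say which metrics PrivFair supports, so the metric choice and the function name `demographic_parity_gap` are assumptions; the computation is also done in plaintext here, whereas the library is described as performing its audits under MPC.

```python
def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups.

    `predictions` holds 0/1 model outputs and `groups` holds the sensitive
    attribute value for the same individuals. Hypothetical helper, plaintext only.
    """
    totals = {}
    positives = {}
    for prediction, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + prediction
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


# Group 0 receives a positive outcome 2 times out of 3, group 1 only 1 time out of 3.
gap = demographic_parity_gap([1, 0, 1, 1, 0, 0], [0, 0, 0, 1, 1, 1])
```

A gap of zero means every group receives positive predictions at the same rate; how large a gap counts as unfair is a policy decision outside the scope of this sketch.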
