Differentiable Bandit Exploration

Feb 17, 2020 · Craig Boutilier, Chih-Wei Hsu, Branislav Kveton, Martin Mladenov, Csaba Szepesvari, Manzil Zaheer

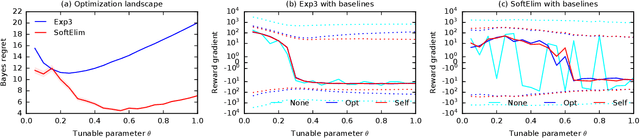

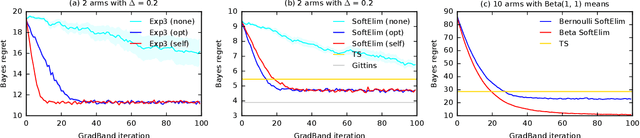

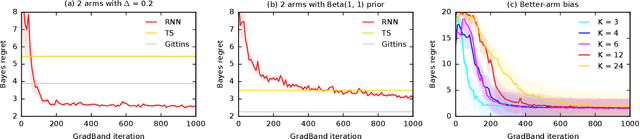

We learn bandit policies that maximize the average reward over bandit instances drawn from an unknown distribution $\mathcal{P}$, given only a sample of instances from $\mathcal{P}$. Our approach is an instance of meta-learning and its appeal is that the properties of $\mathcal{P}$ can be exploited without restricting it. We parameterize our policies in a differentiable way and optimize them by policy gradients, an approach that is easy to implement and pleasantly general. The challenge is then to design effective gradient estimators and good policy classes. To make policy gradients practical, we introduce novel variance reduction techniques. We experiment with various bandit policy classes, including neural networks and a novel soft-elimination policy. The latter has regret guarantees and is a natural starting point for our optimization. Our experiments highlight the versatility of our approach. We also observe that neural network policies can learn implicit biases, which are only expressed through sampled bandit instances during training.

Old Dog Learns New Tricks: Randomized UCB for Bandit Problems

Oct 11, 2019 · Sharan Vaswani, Abbas Mehrabian, Audrey Durand, Branislav Kveton

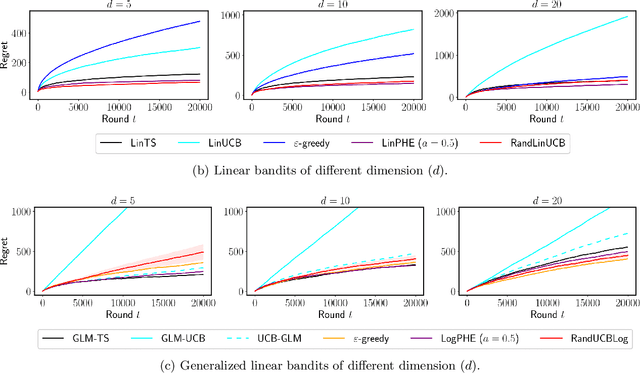

We propose $\tt RandUCB$, a bandit strategy that uses theoretically derived confidence intervals similar to upper confidence bound (UCB) algorithms, but akin to Thompson sampling (TS), uses randomization to trade off exploration and exploitation. In the $K$-armed bandit setting, we show that there are infinitely many variants of $\tt RandUCB$, all of which achieve the minimax-optimal $\widetilde{O}(\sqrt{K T})$ regret after $T$ rounds. Moreover, in a specific multi-armed bandit setting, we show that both UCB and TS can be recovered as special cases of $\tt RandUCB$. For structured bandits, where each arm is associated with a $d$-dimensional feature vector and rewards are distributed according to a linear or generalized linear model, we prove that $\tt RandUCB$ achieves the minimax-optimal $\widetilde{O}(d \sqrt{T})$ regret even in the case of infinite arms. We demonstrate the practical effectiveness of $\tt RandUCB$ with experiments in both the multi-armed and structured bandit settings. Our results illustrate that $\tt RandUCB$ matches the empirical performance of TS while obtaining the theoretically optimal regret bounds of UCB algorithms, thus achieving the best of both worlds.
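The idea of a randomized confidence width can be sketched for a $K$-armed Bernoulli bandit as follows. This is a minimal illustration, not the authors' implementation: the function name `randucb`, the half-Gaussian weights on a discretized interval, and the parameters `upper`, `n_points`, and `sigma` are all assumptions made for the example.

```python
import math
import random


def randucb(means, n_rounds, upper=2.0, n_points=20, sigma=0.5, seed=0):
    """Sketch of a UCB-style index with a randomly sampled width multiplier.

    `means` are the (hidden) Bernoulli means, used only to simulate rewards.
    The sampling distribution of z is an illustrative assumption.
    """
    rng = random.Random(seed)
    n_arms = len(means)
    # Support of the multiplier z: equally spaced points in [0, upper].
    support = [upper * j / (n_points - 1) for j in range(n_points)]
    # Unnormalized half-Gaussian weights; rng.choices normalizes them.
    weights = [math.exp(-z * z / (2.0 * sigma * sigma)) for z in support]

    pulls = [0] * n_arms
    reward_sum = [0.0] * n_arms
    total_reward = 0.0
    for t in range(n_rounds):
        if t < n_arms:
            arm = t  # pull every arm once to initialize its estimate
        else:
            # One z per round, shared by all arms.
            z = rng.choices(support, weights=weights)[0]
            arm = max(
                range(n_arms),
                key=lambda i: reward_sum[i] / pulls[i] + z / math.sqrt(pulls[i]),
            )
        reward = 1.0 if rng.random() < means[arm] else 0.0
        pulls[arm] += 1
        reward_sum[arm] += reward
        total_reward += reward
    return pulls, total_reward
```

In this sketch, putting all weight on the largest support point gives a deterministic width, which is the UCB-like end of the spectrum; spreading the weight makes the index random, as in TS.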

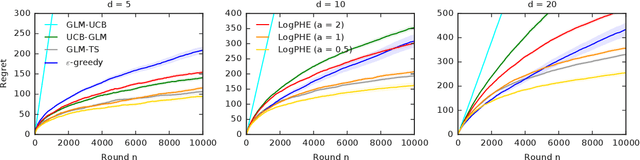

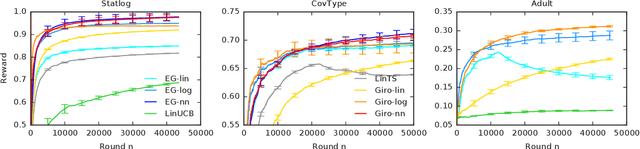

Randomized Exploration in Generalized Linear Bandits

Jun 21, 2019 · Branislav Kveton, Manzil Zaheer, Csaba Szepesvari, Lihong Li, Mohammad Ghavamzadeh, Craig Boutilier

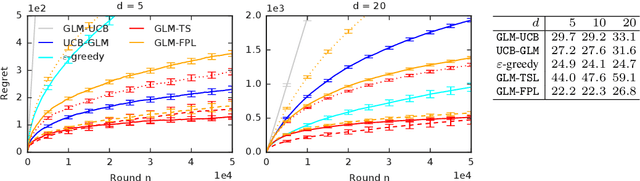

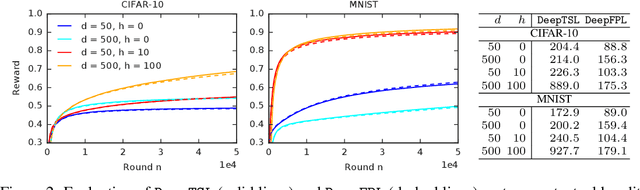

We study two randomized algorithms for generalized linear bandits, GLM-TSL and GLM-FPL. GLM-TSL samples a generalized linear model (GLM) from the Laplace approximation to the posterior distribution. GLM-FPL, a new algorithm proposed in this work, fits a GLM to a randomly perturbed history of past rewards. We prove a $\tilde{O}(d \sqrt{n} + d^2)$ upper bound on the $n$-round regret of GLM-TSL, where $d$ is the number of features. This is the first regret bound of a Thompson sampling-like algorithm in GLM bandits where the leading term is $\tilde{O}(d \sqrt{n})$. We apply both GLM-TSL and GLM-FPL to logistic and neural network bandits, and show that they perform well empirically. In more complex models, GLM-FPL is significantly faster. Our results showcase the role of randomization, beyond posterior sampling, in exploration.

Waterfall Bandits: Learning to Sell Ads Online

Apr 20, 2019 · Branislav Kveton, Saied Mahdian, S. Muthukrishnan, Zheng Wen, Yikun Xian

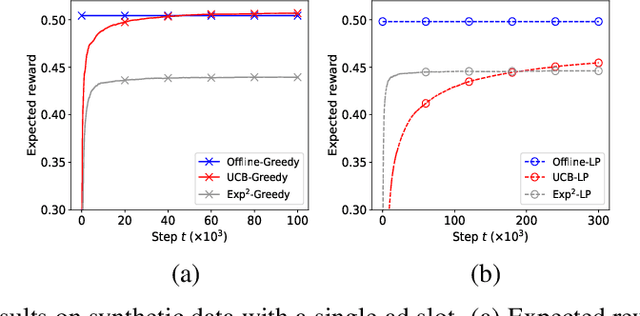

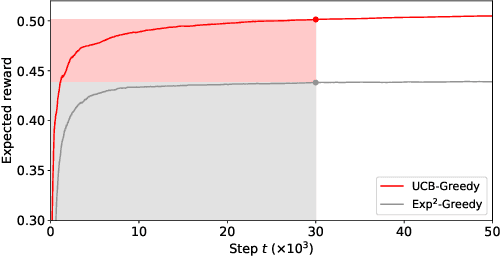

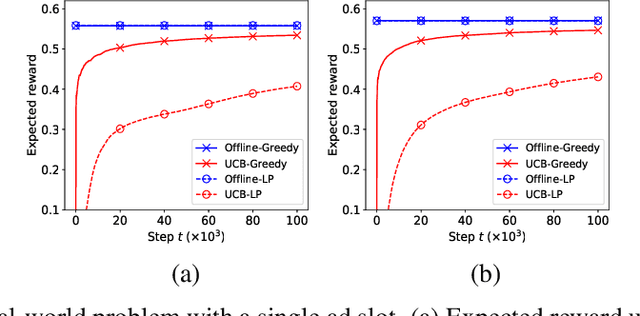

A popular approach to selling online advertising is by a waterfall, where a publisher makes sequential price offers to ad networks for an inventory, and chooses the winner in that order. The publisher picks the order and prices to maximize her revenue. A traditional solution is to first learn the demand model and then solve the optimization problem for the learned model. This approach incurs linear regret. We design an online learning algorithm for solving this problem, which interleaves learning and optimization, and prove that this algorithm has sublinear regret. We evaluate the algorithm on both synthetic and real-world data, and show that it quickly learns high-quality pricing strategies. This is the first principled study of learning a waterfall design online by sequential experimentation.
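To see why the order of offers matters, the expected revenue of a fixed waterfall can be computed directly. The sketch below assumes a simple demand model that is not stated in the abstract: each ad network accepts its offered price independently with a known probability, and the first network to accept wins. The function names are hypothetical.

```python
from itertools import permutations


def expected_waterfall_revenue(offers):
    """Expected revenue of a waterfall.

    `offers` is a list of (price, accept_prob) pairs in the order in which
    the networks are asked. Independent acceptance is an assumption.
    """
    revenue = 0.0
    reach_prob = 1.0  # probability that all earlier networks declined
    for price, accept_prob in offers:
        revenue += reach_prob * accept_prob * price
        reach_prob *= 1.0 - accept_prob
    return revenue


def best_order(offers):
    """Brute-force search over orderings of fixed (price, accept_prob) pairs."""
    return list(max(permutations(offers), key=expected_waterfall_revenue))
```

The brute-force search is only practical for a handful of networks and keeps prices fixed, whereas the publisher in the abstract chooses both the order and the prices, and the acceptance probabilities must be learned.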

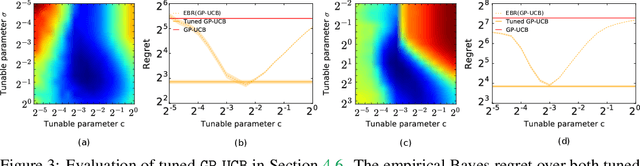

Empirical Bayes Regret Minimization

Apr 04, 2019 · Chih-Wei Hsu, Branislav Kveton, Ofer Meshi, Martin Mladenov, Csaba Szepesvari

The prevalent approach to bandit algorithm design is to have a low-regret algorithm by design. While celebrated, this approach is often conservative because it ignores many intricate properties of actual problem instances. In this work, we pioneer the idea of minimizing an empirical approximation to the Bayes regret, the expected regret with respect to a distribution over problems. This approach can be viewed as an instance of learning-to-learn; it is conceptually straightforward and easy to implement. We conduct a comprehensive empirical study of empirical Bayes regret minimization in a wide range of bandit problems, from Bernoulli bandits to structured problems, such as generalized linear and Gaussian process bandits. We report significant improvements over state-of-the-art bandit algorithms, often by an order of magnitude, by simply optimizing over a sample from the distribution.
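The empirical Bayes regret is simply the regret of a policy averaged over sampled problem instances, and a tunable policy can be chosen to minimize it. The sketch below is an illustration under assumptions of our own: the tuned quantity is the confidence-width multiplier of a UCB-style policy, and the optimization is a plain grid search rather than whatever optimizer the paper uses. All names are hypothetical.

```python
import math
import random


def ucb_regret(means, n_rounds, width, rng):
    """Pseudo-regret of a UCB-style policy with a scaled confidence width."""
    n_arms = len(means)
    pulls = [0] * n_arms
    sums = [0.0] * n_arms
    best = max(means)
    regret = 0.0
    for t in range(n_rounds):
        if t < n_arms:
            arm = t  # initialization: pull each arm once
        else:
            arm = max(
                range(n_arms),
                key=lambda i: sums[i] / pulls[i]
                + width * math.sqrt(math.log(t + 1) / pulls[i]),
            )
        reward = 1.0 if rng.random() < means[arm] else 0.0
        pulls[arm] += 1
        sums[arm] += reward
        regret += best - means[arm]
    return regret


def empirical_bayes_regret(width, instances, n_rounds, seed=0):
    """Average regret over a sample of instances (lists of Bernoulli means)."""
    rng = random.Random(seed)
    return sum(ucb_regret(m, n_rounds, width, rng) for m in instances) / len(instances)


def tune_width(instances, n_rounds, grid):
    """Pick the width in `grid` with the lowest empirical Bayes regret."""
    return min(grid, key=lambda w: empirical_bayes_regret(w, instances, n_rounds))
```

Fixing the seed inside `empirical_bayes_regret` means every candidate width is evaluated with the same random stream, which makes the comparison across the grid reproducible.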

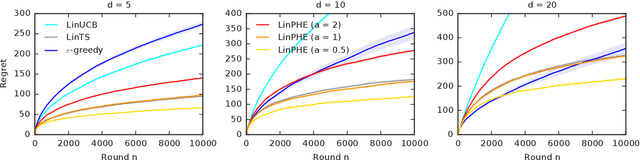

Perturbed-History Exploration in Stochastic Linear Bandits

Mar 21, 2019 · Branislav Kveton, Csaba Szepesvari, Mohammad Ghavamzadeh, Craig Boutilier

We propose a new online algorithm for minimizing the cumulative regret in stochastic linear bandits. The key idea is to build a perturbed history, which mixes the history of observed rewards with a pseudo-history of randomly generated i.i.d. pseudo-rewards. Our algorithm, perturbed-history exploration in a linear bandit (LinPHE), estimates a linear model from its perturbed history and pulls the arm with the highest value under that model. We prove a $\tilde{O}(d \sqrt{n})$ gap-free bound on the expected $n$-round regret of LinPHE, where $d$ is the number of features. Our analysis relies on novel concentration and anti-concentration bounds on the weighted sum of Bernoulli random variables. To show the generality of our design, we extend LinPHE to a logistic reward model. We evaluate both algorithms empirically and show that they are practical.

Perturbed-History Exploration in Stochastic Multi-Armed Bandits

Feb 26, 2019 · Branislav Kveton, Csaba Szepesvari, Mohammad Ghavamzadeh, Craig Boutilier

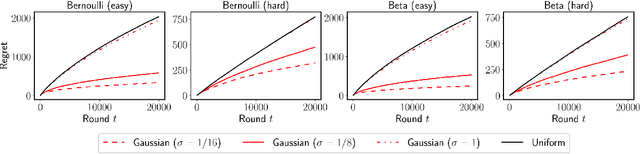

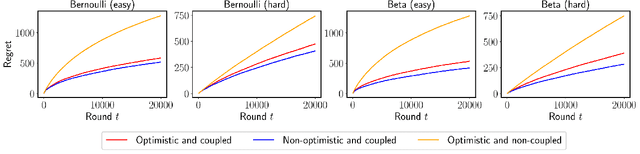

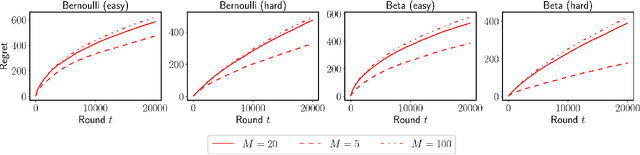

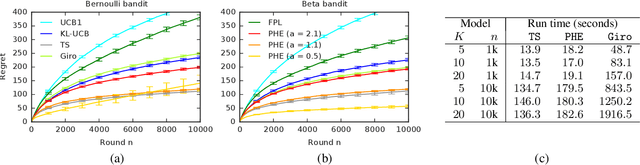

We propose an online algorithm for cumulative regret minimization in a stochastic multi-armed bandit. The algorithm adds $O(t)$ i.i.d. pseudo-rewards to its history in round $t$ and then pulls the arm with the highest estimated value in its perturbed history. Therefore, we call it perturbed-history exploration (PHE). The pseudo-rewards are designed to offset the underestimated values of arms in round $t$ with a sufficiently high probability. We analyze PHE in a $K$-armed bandit and prove a $O(K \Delta^{-1} \log n)$ bound on its $n$-round regret, where $\Delta$ is the minimum gap between the expected rewards of the optimal and suboptimal arms. The key to our analysis is a novel argument that shows that randomized Bernoulli rewards lead to optimism. We compare PHE empirically to several baselines and show that it is competitive with the best of them.
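The perturbed-history idea can be sketched for a Bernoulli bandit as follows. This is a minimal sketch rather than the authors' code: the perturbation scale `a`, the choice of Bernoulli(1/2) pseudo-rewards, and the handling of unpulled arms are assumptions made for the example.

```python
import math
import random


def phe(means, n_rounds, a=2.0, seed=0):
    """Sketch of perturbed-history exploration in a Bernoulli bandit.

    `means` are the hidden arm means, used only to simulate rewards.
    """
    rng = random.Random(seed)
    n_arms = len(means)
    pulls = [0] * n_arms
    reward_sum = [0.0] * n_arms
    total_reward = 0.0
    for _ in range(n_rounds):
        scores = []
        for i in range(n_arms):
            if pulls[i] == 0:
                scores.append(float("inf"))  # force one initial pull
                continue
            # Fresh pseudo-rewards in every round, proportional to the pulls.
            n_pseudo = math.ceil(a * pulls[i])
            pseudo_sum = sum(rng.random() < 0.5 for _ in range(n_pseudo))
            # Mean reward in the perturbed history (real plus pseudo rewards).
            scores.append((reward_sum[i] + pseudo_sum) / (pulls[i] + n_pseudo))
        arm = max(range(n_arms), key=lambda i: scores[i])
        reward = 1.0 if rng.random() < means[arm] else 0.0
        pulls[arm] += 1
        reward_sum[arm] += reward
        total_reward += reward
    return pulls, total_reward
```

Because the pseudo-rewards are redrawn in each round, an arm whose real rewards happened to be low still has a chance of receiving a high perturbed estimate and being pulled again.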

Garbage In, Reward Out: Bootstrapping Exploration in Multi-Armed Bandits

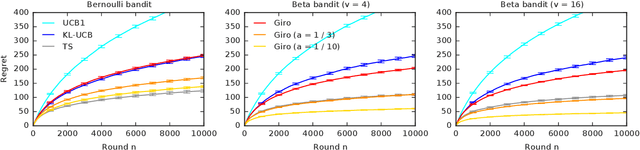

Nov 13, 2018 · Branislav Kveton, Csaba Szepesvari, Zheng Wen, Mohammad Ghavamzadeh, Tor Lattimore

We propose a multi-armed bandit algorithm that explores based on randomizing its history. The key idea is to estimate the value of the arm from the bootstrap sample of its history, where we add pseudo observations after each pull of the arm. The pseudo observations may seem harmful. On the contrary, they guarantee that the bootstrap sample is optimistic with a high probability. Because of this, we call our algorithm Giro, which is an abbreviation for garbage in, reward out. We analyze Giro in a $K$-armed Bernoulli bandit and prove a $O(K \Delta^{-1} \log n)$ bound on its $n$-round regret, where $\Delta$ denotes the difference in the expected rewards of the optimal and best suboptimal arms. The main advantage of our exploration strategy is that it can be applied to any reward function generalization, such as neural networks. We evaluate Giro and its contextual variant on multiple synthetic and real-world problems, and observe that Giro is comparable to or better than state-of-the-art algorithms.
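The bootstrapping step described above can be sketched in a few lines. This is an illustrative sketch under assumptions: each pull adds `a` pseudo observations of 0 and `a` of 1 to the arm's history, and unpulled arms are given an infinite score so that they are tried first. The function name is hypothetical.

```python
import random


def giro(means, n_rounds, a=1, seed=0):
    """Sketch of bootstrapping exploration with pseudo observations.

    `means` are the hidden Bernoulli means, used only to simulate rewards.
    """
    rng = random.Random(seed)
    n_arms = len(means)
    history = [[] for _ in range(n_arms)]  # real rewards plus pseudo observations
    pulls = [0] * n_arms
    for _ in range(n_rounds):
        scores = []
        for h in history:
            if not h:
                scores.append(float("inf"))  # try every arm at least once
            else:
                # Bootstrap sample: resample the history with replacement.
                boot = rng.choices(h, k=len(h))
                scores.append(sum(boot) / len(boot))
        arm = max(range(n_arms), key=lambda i: scores[i])
        reward = 1.0 if rng.random() < means[arm] else 0.0
        history[arm].append(reward)
        # The "garbage": pseudo observations of both extreme rewards.
        history[arm].extend([0.0] * a + [1.0] * a)
        pulls[arm] += 1
    return pulls
```

The pseudo observations keep both 0 and 1 in every history, so the bootstrap mean of any pulled arm always has nonzero variance and can exceed its empirical mean.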

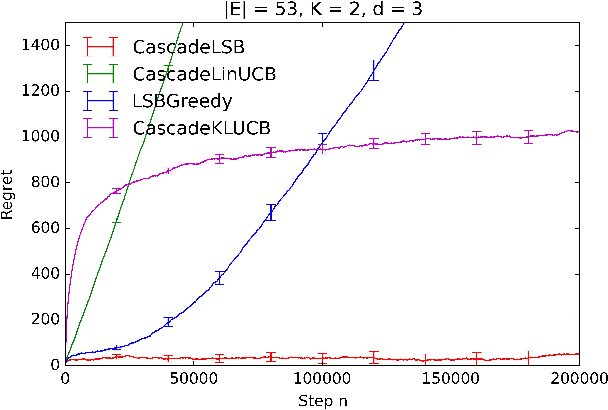

Online Diverse Learning to Rank from Partial-Click Feedback

Nov 01, 2018 · Prakhar Gupta, Gaurush Hiranandani, Harvineet Singh, Branislav Kveton, Zheng Wen, Iftikhar Ahamath Burhanuddin

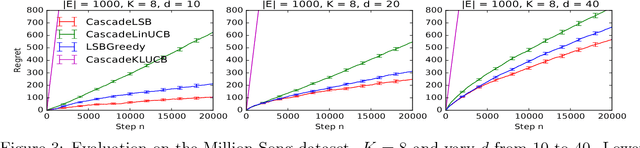

Learning to rank is an important problem in machine learning and recommender systems. In a recommender system, a user is typically recommended a list of items. Since the user is unlikely to examine the entire recommended list, partial feedback arises naturally. At the same time, diverse recommendations are important because it is challenging to model all tastes of the user in practice. In this paper, we propose the first algorithm for online learning to rank diverse items from partial-click feedback. We assume that the user examines the list of recommended items until the user is attracted by an item, which is clicked, and does not examine the rest of the items. This model of user behavior is known as the cascade model. We propose an online learning algorithm, CascadeLSB, for solving our problem. The algorithm actively explores the tastes of the user with the objective of learning to recommend the optimal diverse list. We analyze the algorithm and prove a gap-free upper bound on its $n$-step regret. We evaluate CascadeLSB on both synthetic and real-world datasets, compare it to various baselines, and show that it learns even when our modeling assumptions do not hold exactly.
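The cascade model of user behavior, and the partial feedback it produces, can be simulated as follows. This sketch covers only the click model, not the learning algorithm, and it assumes that each item has its own independent attraction probability, which is a simplification of the diverse setting in the paper. The function names are hypothetical.

```python
def cascade_click(ranked_items, attraction_prob, rng):
    """Return the position of the first attractive item, or None if no click.

    The user scans the list top-down and stops at the first click.
    """
    for position, item in enumerate(ranked_items):
        if rng.random() < attraction_prob[item]:
            return position
    return None


def observed_labels(ranked_items, click_position):
    """Partial feedback: only items up to and including the click are observed."""
    if click_position is None:
        # No click: the user examined every item and none was attractive.
        return {item: 0 for item in ranked_items}
    labels = {item: 0 for item in ranked_items[:click_position]}
    labels[ranked_items[click_position]] = 1
    return labels
```

Items ranked below the click receive no label at all, which is what makes the feedback partial: the learner cannot tell whether they would have attracted the user.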

Online Influence Maximization under Independent Cascade Model with Semi-Bandit Feedback

Jun 19, 2018 · Zheng Wen, Branislav Kveton, Michal Valko, Sharan Vaswani

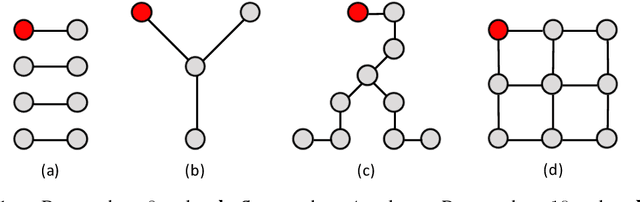

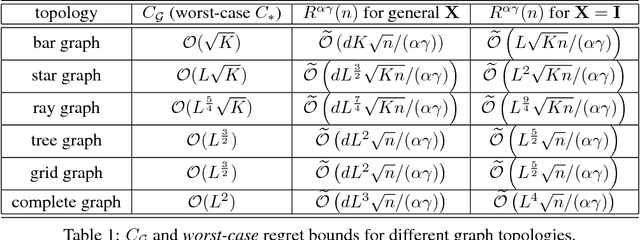

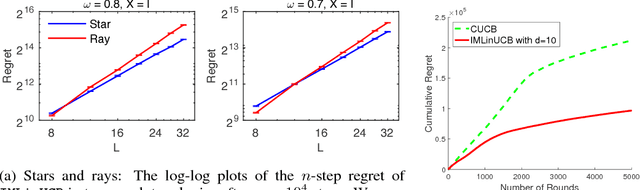

We study the online influence maximization problem in social networks under the independent cascade model. Specifically, we aim to learn the set of "best influencers" in a social network online while repeatedly interacting with it. We address the challenges of (i) combinatorial action space, since the number of feasible influencer sets grows exponentially with the maximum number of influencers, and (ii) limited feedback, since only the influenced portion of the network is observed. Under a stochastic semi-bandit feedback, we propose and analyze IMLinUCB, a computationally efficient UCB-based algorithm. Our bounds on the cumulative regret are polynomial in all quantities of interest, achieve near-optimal dependence on the number of interactions and reflect the topology of the network and the activation probabilities of its edges, thereby giving insights on the problem complexity. To the best of our knowledge, these are the first such results. Our experiments show that in several representative graph topologies, the regret of IMLinUCB scales as suggested by our upper bounds. IMLinUCB permits linear generalization and thus is both statistically and computationally suitable for large-scale problems. Our experiments also show that IMLinUCB with linear generalization can lead to low regret in real-world online influence maximization.
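The diffusion process and the limited feedback mentioned in (ii) can be simulated with a short breadth-first procedure. This is a sketch of the independent cascade model only, not of IMLinUCB; the function name, the edge-dictionary representation, and the reading of semi-bandit feedback as "outcomes of edges leaving influenced nodes" are assumptions made for the example.

```python
from collections import deque


def independent_cascade(edge_prob, seeds, rng):
    """Simulate one diffusion from `seeds`.

    `edge_prob` maps directed edges (src, dst) to activation probabilities.
    Returns the set of influenced nodes and the observed edge outcomes.
    """
    start = list(dict.fromkeys(seeds))  # drop duplicate seeds, keep order
    active = set(start)
    queue = deque(start)
    observed = {}
    while queue:
        u = queue.popleft()
        for (src, dst), p in edge_prob.items():
            if src != u:
                continue
            # Each influenced node gets one attempt on each outgoing edge.
            success = rng.random() < p
            observed[(src, dst)] = success
            if success and dst not in active:
                active.add(dst)
                queue.append(dst)
    return active, observed
```

Edges that leave nodes the cascade never reached do not appear in `observed`, so the learner receives no information about them in that round.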

* Compared with the previous version, this version fixes a mistake. It is also consistent with the NIPS camera-ready version.
