Bernardo Magnini

Converting Annotated Clinical Cases into Structured Case Report Forms

Jun 13, 2025

Abstract:Case Report Forms (CRFs) are widely used in medical research as they ensure accuracy, reliability, and validity of results in clinical studies. However, publicly available, well-annotated CRF datasets are scarce, limiting the development of CRF slot filling systems able to fill in a CRF from clinical notes. To mitigate the scarcity of CRF datasets, we propose to take advantage of available datasets annotated for information extraction tasks and to convert them into structured CRFs. We present a semi-automatic conversion methodology, which has been applied to the E3C dataset in two languages (English and Italian), resulting in a new, high-quality dataset for CRF slot filling. Through several experiments on the created dataset, we report that slot filling achieves 59.7% for Italian and 67.3% for English with a closed Large Language Model (zero-shot) and worse performance with three families of open-source models, showing that filling CRFs is challenging even for recent state-of-the-art LLMs. We release the dataset at https://huggingface.co/collections/NLP-FBK/e3c-to-crf-67b9844065460cbe42f80166

ViPlan: A Benchmark for Visual Planning with Symbolic Predicates and Vision-Language Models

May 19, 2025

Abstract:Integrating Large Language Models with symbolic planners is a promising direction for obtaining verifiable and grounded plans compared to planning in natural language, with recent works extending this idea to visual domains using Vision-Language Models (VLMs). However, rigorous comparison between VLM-grounded symbolic approaches and methods that plan directly with a VLM has been hindered by a lack of common environments, evaluation protocols and model coverage. We introduce ViPlan, the first open-source benchmark for Visual Planning with symbolic predicates and VLMs. ViPlan features a series of increasingly challenging tasks in two domains: a visual variant of the classic Blocksworld planning problem and a simulated household robotics environment. We benchmark nine open-source VLM families across multiple sizes, along with selected closed models, evaluating both VLM-grounded symbolic planning and using the models directly to propose actions. We find symbolic planning to outperform direct VLM planning in Blocksworld, where accurate image grounding is crucial, whereas the opposite is true in the household robotics tasks, where commonsense knowledge and the ability to recover from errors are beneficial. Finally, we show that across most models and methods, there is no significant benefit to using Chain-of-Thought prompting, suggesting that current VLMs still struggle with visual reasoning.

Low-resource Information Extraction with the European Clinical Case Corpus

Mar 26, 2025

Abstract:We present E3C-3.0, a multilingual dataset in the medical domain, comprising clinical cases annotated with diseases and test-result relations. The dataset includes both native texts in five languages (English, French, Italian, Spanish and Basque) and texts translated and projected from the English source into five target languages (Greek, Italian, Polish, Slovak, and Slovenian). A semi-automatic approach has been implemented, including automatic annotation projection based on Large Language Models (LLMs) and human revision. We present several experiments showing that current state-of-the-art LLMs can benefit from being fine-tuned on the E3C-3.0 dataset. We also show that transfer learning in different languages is very effective, mitigating the scarcity of data. Finally, we compare performance both on native data and on projected data. We release the data at https://huggingface.co/collections/NLP-FBK/e3c-projected-676a7d6221608d60e4e9fd89 .

All-in-one: Understanding and Generation in Multimodal Reasoning with the MAIA Benchmark

Feb 24, 2025

Abstract:We introduce MAIA (Multimodal AI Assessment), a native-Italian benchmark designed for fine-grained investigation of the reasoning abilities of visual language models on videos. MAIA differs from other available video benchmarks in its design, its reasoning categories, the metric it uses and the language and culture of the videos. It evaluates Vision Language Models (VLMs) on two aligned tasks: a visual statement verification task, and an open-ended visual question-answering task, both on the same set of video-related questions. It considers twelve reasoning categories that aim to disentangle language and vision relations by highlighting when one of the two alone encodes sufficient information to solve the tasks, when they are both needed and when the full richness of the short video is essential instead of just a part of it. Thanks to its carefully thought-out design, it evaluates VLMs' consistency and visually grounded natural language comprehension and generation simultaneously through an aggregated metric. Last but not least, the video collection has been carefully selected to reflect Italian culture, and the language data are produced by native speakers.

Evalita-LLM: Benchmarking Large Language Models on Italian

Feb 04, 2025

Abstract:We describe Evalita-LLM, a new benchmark designed to evaluate Large Language Models (LLMs) on Italian tasks. The distinguishing and innovative features of Evalita-LLM are the following: (i) all tasks are native Italian, avoiding translation issues and potential cultural biases; (ii) in addition to well-established multiple-choice tasks, the benchmark includes generative tasks, enabling more natural interaction with LLMs; (iii) all tasks are evaluated against multiple prompts, thereby mitigating model sensitivity to specific prompts and allowing a fairer and more objective evaluation. We propose an iterative methodology, where candidate tasks and candidate prompts are validated against a set of LLMs used for development. We report experimental results from the benchmark's development phase, and provide performance statistics for several state-of-the-art LLMs.

Evaluating Task-Oriented Dialogue Consistency through Constraint Satisfaction

Jul 16, 2024

Abstract:Task-oriented dialogues must maintain consistency both within the dialogue itself, ensuring logical coherence across turns, and with the conversational domain, accurately reflecting external knowledge. We propose to conceptualize dialogue consistency as a Constraint Satisfaction Problem (CSP), wherein variables represent segments of the dialogue referencing the conversational domain, and constraints among variables reflect dialogue properties, including linguistic, conversational, and domain-based aspects. To demonstrate the feasibility of the approach, we utilize a CSP solver to detect inconsistencies in dialogues re-lexicalized by an LLM. Our findings indicate that: (i) CSP is effective at detecting dialogue inconsistencies; and (ii) consistent dialogue re-lexicalization is challenging for state-of-the-art LLMs, which achieve only a 0.15 accuracy rate when compared to a CSP solver. Furthermore, through an ablation study, we reveal that constraints derived from domain knowledge are the most difficult to respect. We argue that CSP captures core properties of dialogue consistency that have been poorly considered by approaches based on component pipelines.

Medical mT5: An Open-Source Multilingual Text-to-Text LLM for The Medical Domain

Apr 11, 2024

Abstract:Research on language technology for the development of medical applications is currently a hot topic in Natural Language Understanding and Generation. Thus, a number of large language models (LLMs) have recently been adapted to the medical domain, so that they can be used as a tool for mediating in human-AI interaction. While these LLMs display competitive performance on automated medical text benchmarks, they have been pre-trained and evaluated with a focus on a single language (mostly English). This is particularly true of text-to-text models, which typically require large amounts of domain-specific pre-training data, often not easily accessible for many languages. In this paper, we address these shortcomings by compiling, to the best of our knowledge, the largest multilingual corpus for the medical domain in four languages, namely English, French, Italian and Spanish. This new corpus has been used to train Medical mT5, the first open-source text-to-text multilingual model for the medical domain. Additionally, we present two new evaluation benchmarks for all four languages with the aim of facilitating multilingual research in this domain. A comprehensive evaluation shows that Medical mT5 outperforms both encoders and similarly sized text-to-text models for the Spanish, French, and Italian benchmarks, while being competitive with current state-of-the-art LLMs in English.

Unraveling ChatGPT: A Critical Analysis of AI-Generated Goal-Oriented Dialogues and Annotations

May 23, 2023

Abstract:Large pre-trained language models have exhibited unprecedented capabilities in producing high-quality text via prompting techniques. This fact introduces new possibilities for data collection and annotation, particularly in situations where such data is scarce, complex to gather, expensive, or even sensitive. In this paper, we explore the potential of these models to generate and annotate goal-oriented dialogues, and conduct an in-depth analysis to evaluate their quality. Our experiments employ ChatGPT, and encompass three categories of goal-oriented dialogues (task-oriented, collaborative, and explanatory), two generation modes (interactive and one-shot), and two languages (English and Italian). Based on extensive human-based evaluations, we demonstrate that the quality of generated dialogues and annotations is on par with those generated by humans.

Recent Neural Methods on Slot Filling and Intent Classification for Task-Oriented Dialogue Systems: A Survey

Nov 01, 2020

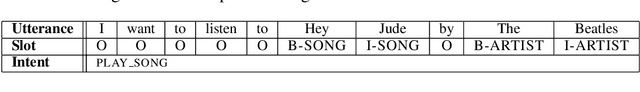

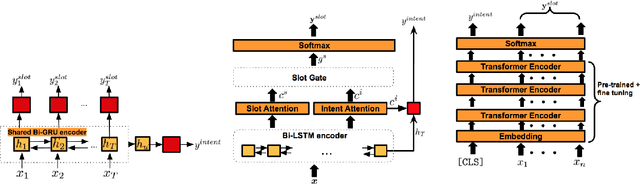

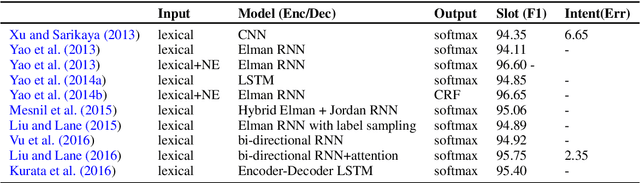

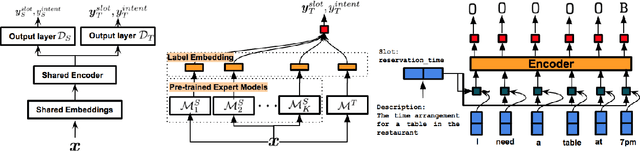

Abstract:In recent years, fostered by deep learning technologies and by the high demand for conversational AI, various approaches have been proposed that address the capacity to elicit and understand users' needs in task-oriented dialogue systems. We focus on two core tasks, slot filling (SF) and intent classification (IC), and survey how neural-based models have rapidly evolved to address natural language understanding in dialogue systems. We introduce three neural architectures: independent models, which model SF and IC separately; joint models, which exploit the mutual benefit of the two tasks simultaneously; and transfer learning models, which scale the model to new domains. We discuss the current state of the research in SF and IC and highlight challenges that still require attention.

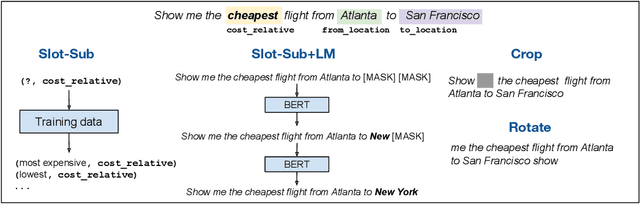

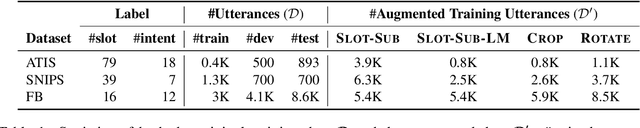

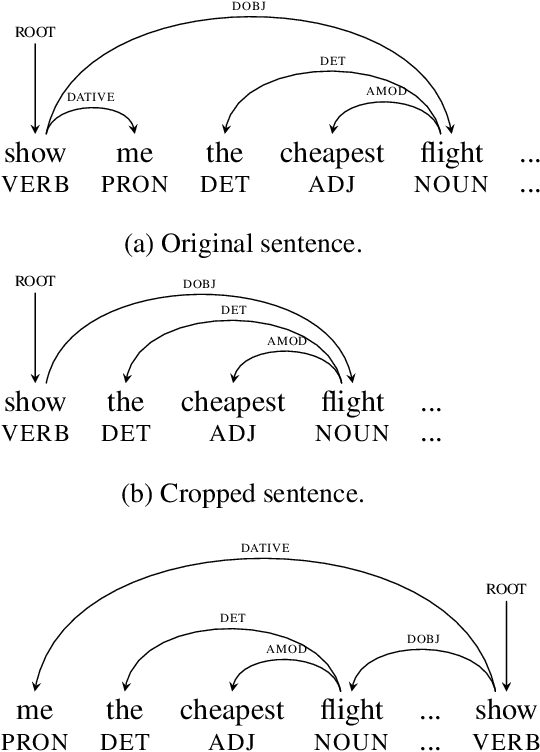

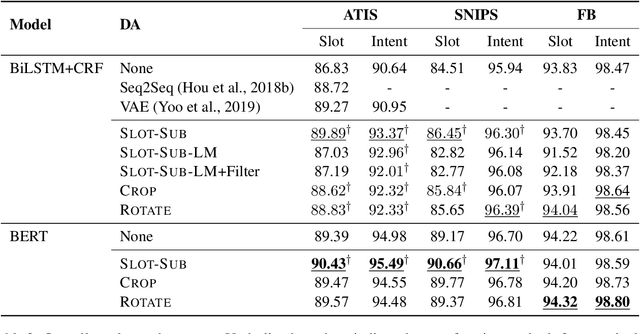

Simple is Better! Lightweight Data Augmentation for Low Resource Slot Filling and Intent Classification

Sep 08, 2020

Abstract:Neural-based models have achieved outstanding performance on slot filling and intent classification when fairly large in-domain training data are available. However, as new domains are frequently added, creating sizeable data is expensive. We show that lightweight augmentation, a set of augmentation methods involving word-span and sentence-level operations, alleviates data scarcity problems. Our experiments on limited data settings show that lightweight augmentation yields significant performance improvement on slot filling on the ATIS and SNIPS datasets, and achieves competitive performance with respect to more complex, state-of-the-art augmentation approaches. Furthermore, lightweight augmentation is also beneficial when combined with pre-trained LM-based models, as it improves BERT-based joint intent and slot filling models.
