Alessandro Lazaric

INRIA Lille - Nord Europe

Active Exploration in Markov Decision Processes

Feb 28, 2019

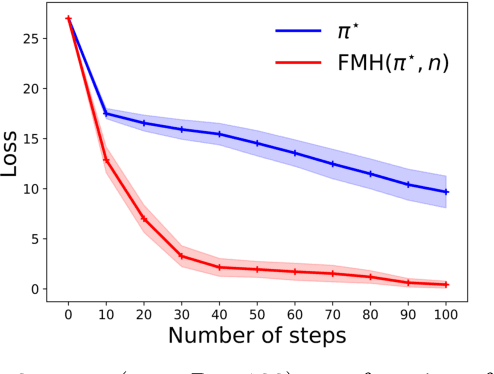

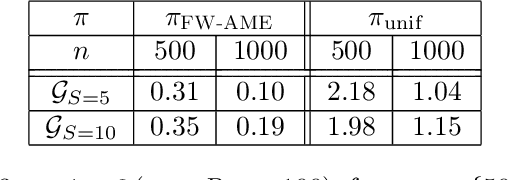

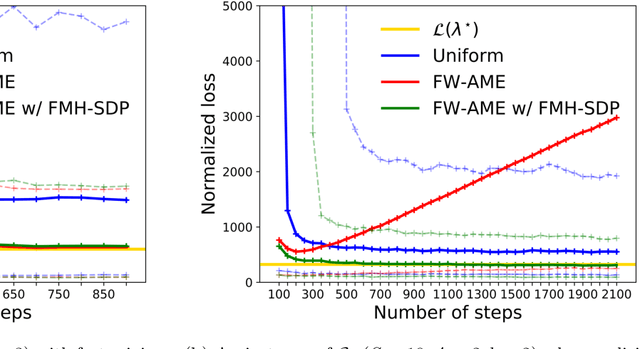

Abstract: We introduce the active exploration problem in Markov decision processes (MDPs). Each state of the MDP is characterized by a random value and the learner should gather samples to estimate the mean value of each state as accurately as possible. Similarly to active exploration in multi-armed bandits (MAB), states may have different levels of noise, so that the higher the noise, the more samples are needed. As the noise level is initially unknown, we need to trade off the exploration of the environment to estimate the noise and the exploitation of these estimates to compute a policy maximizing the accuracy of the mean predictions. We introduce a novel learning algorithm to solve this problem, showing that active exploration in MDPs may be significantly more difficult than in MAB. We also derive a heuristic procedure to mitigate the negative effect of slowly mixing policies. Finally, we validate our findings on simple numerical simulations.
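To make the bandit analogy concrete, here is a minimal sketch of the simpler multi-armed bandit case with known noise levels. It is an illustration only, not the MDP algorithm introduced in the paper. It assumes each mean is estimated by an empirical average, so its variance after $n_i$ samples is $\sigma_i^2 / n_i$. The hypothetical helper `variance_proportional_allocation` splits a sample budget in proportion to $\sigma_i^2$, which equalizes these variances across arms.

```python
def variance_proportional_allocation(variances, budget):
    """Split `budget` samples across arms in proportion to their noise variances.

    Illustrative assumption: variances are known and strictly positive.
    Returns real-valued (unrounded) sample counts.
    """
    total = sum(variances)
    return [budget * v / total for v in variances]


def estimation_variances(variances, allocation):
    """Variance of each empirical mean, sigma_i^2 / n_i."""
    return [v / n for v, n in zip(variances, allocation)]


allocation = variance_proportional_allocation([1.0, 4.0], budget=100)
print(allocation)                                    # the noisier arm gets more samples
print(estimation_variances([1.0, 4.0], allocation))  # per-arm variances are equalized
```

In the setting of the abstract the variances are unknown and samples are collected along MDP trajectories, so an allocation like this can only be approximated by a policy.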

Exploration Bonus for Regret Minimization in Undiscounted Discrete and Continuous Markov Decision Processes

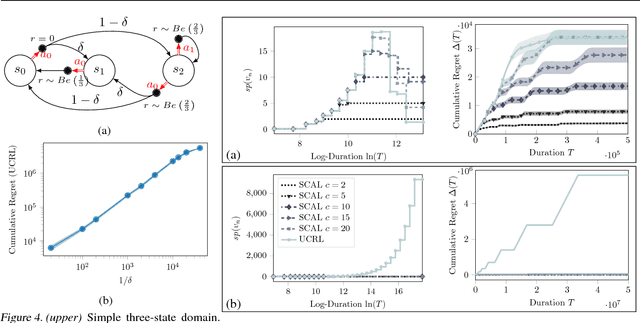

Dec 11, 2018

Abstract: We introduce and analyse two algorithms for exploration-exploitation in discrete and continuous Markov Decision Processes (MDPs) based on exploration bonuses. SCAL$^+$ is a variant of SCAL (Fruit et al., 2018) that performs efficient exploration-exploitation in any unknown weakly-communicating MDP for which an upper bound $C$ on the span of the optimal bias function is known. For an MDP with $S$ states, $A$ actions and $\Gamma \leq S$ possible next states, we prove that SCAL$^+$ achieves the same theoretical guarantees as SCAL (i.e., a high probability regret bound of $\widetilde{O}(C\sqrt{\Gamma SAT})$), with a much smaller computational complexity. Similarly, C-SCAL$^+$ exploits an exploration bonus to achieve sublinear regret in any undiscounted MDP with continuous state space. We show that C-SCAL$^+$ achieves the same regret bound as UCCRL (Ortner and Ryabko, 2012) while being the first implementable algorithm with regret guarantees in this setting. While optimistic algorithms such as UCRL, SCAL or UCCRL maintain a high-confidence set of plausible MDPs around the true unknown MDP, SCAL$^+$ and C-SCAL$^+$ leverage an exploration bonus to directly plan on the empirically estimated MDP, thus being more computationally efficient.
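The idea of planning on the empirical MDP with an exploration bonus can be sketched as follows. This is a generic illustration, not the SCAL$^+$ bonus itself: the function name, the `scale` parameter, and the Hoeffding-style form `scale * sqrt(log(t + 1) / N)` are all assumptions chosen for simplicity. The point is only that rarely visited state-action pairs receive a larger bonus, so a planner run on the augmented rewards is drawn toward them.

```python
import math


def bonus_augmented_rewards(reward_sums, counts, scale, t):
    """Return empirical mean reward plus a count-based bonus per (state, action).

    reward_sums[s][a]: sum of rewards observed for (s, a)
    counts[s][a]:      number of visits to (s, a)
    scale:             hypothetical bonus multiplier (e.g. tied to a span bound)
    t:                 current time step, used inside the log term
    """
    augmented = []
    for sums_s, counts_s in zip(reward_sums, counts):
        row = []
        for total, n in zip(sums_s, counts_s):
            n_eff = max(1, n)  # avoid division by zero for unvisited pairs
            mean = total / n_eff
            bonus = scale * math.sqrt(math.log(t + 1) / n_eff)
            row.append(mean + bonus)
        augmented.append(row)
    return augmented
```

For example, two actions with the same empirical mean but 10 versus 100 visits receive different augmented rewards, with the less visited action ranked higher.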

Rotting bandits are no harder than stochastic ones

Nov 27, 2018

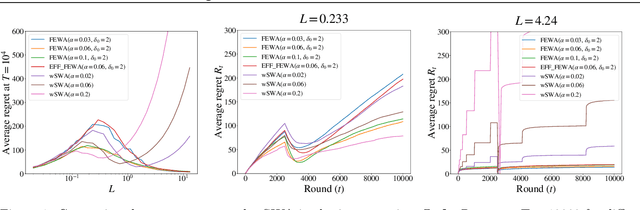

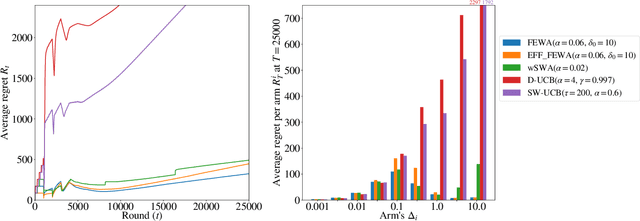

Abstract: In standard bandits, the arms' reward distributions are assumed to be stationary. This assumption is often violated in practice, where rewards change over time. In applications such as recommendation systems, online advertising, and crowdsourcing, the changes may be triggered by the pulls, so that the arms' rewards change as a function of the number of pulls. In this paper, we consider the specific case of non-parametric rotting bandits, where the expected reward of an arm may decrease every time it is pulled. We introduce the filtering on expanding window average (FEWA) algorithm that at each round constructs moving averages of increasing windows to identify arms that are more likely to return high rewards when pulled once more. We prove that, without any knowledge of the decreasing behavior of the arms, FEWA achieves anytime problem-dependent, $\widetilde{\mathcal{O}}(\log{(KT)})$, and problem-independent, $\widetilde{\mathcal{O}}(\sqrt{KT})$, regret bounds similar to those of near-optimal stochastic algorithms such as UCB1 of Auer et al. (2002a). This result substantially improves the prior result of Levine et al. (2017), which needed knowledge of the horizon and decaying parameters to achieve a problem-independent bound of only $\widetilde{\mathcal{O}}(K^{1/3}T^{2/3})$. Finally, we report simulations confirming the theoretical improvements of FEWA.
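The filtering step described above can be sketched roughly as follows. This is a simplified reading of the abstract, not the authors' implementation: the function name `fewa_select`, the confidence width `sqrt(2 * sigma**2 * log(1 / delta) / h)`, the choice `delta = 1 / (K * t**alpha)`, and the tie-breaking rule are assumptions made for illustration. For each window size `h`, the sketch averages the last `h` rewards of every surviving arm, discards arms that fall too far below the best average, and pulls an arm as soon as it has fewer than `h` samples.

```python
import math


def fewa_select(history, t, sigma=1.0, alpha=4.0):
    """Pick the next arm to pull.

    history[i]: list of rewards observed from arm i, most recent last
    t:          current round (1-based)
    sigma:      assumed noise scale of the rewards
    alpha:      assumed exponent controlling the confidence level
    """
    n_arms = len(history)
    # Pull every arm once before filtering.
    for arm, rewards in enumerate(history):
        if not rewards:
            return arm

    delta = 1.0 / (n_arms * t ** alpha)
    active = list(range(n_arms))
    h = 1
    while True:
        # An active arm without h samples cannot be compared further: pull it.
        short = [arm for arm in active if len(history[arm]) < h]
        if short:
            return short[0]
        width = math.sqrt(2.0 * sigma ** 2 * math.log(1.0 / delta) / h)
        means = {arm: sum(history[arm][-h:]) / h for arm in active}
        best = max(means.values())
        # Keep only arms whose recent average is close to the best one.
        active = [arm for arm in active if best - means[arm] <= 2.0 * width]
        h += 1
```

The loop always terminates: the arm with the best window average survives each filter, and the window eventually exceeds its number of samples.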

Efficient Bias-Span-Constrained Exploration-Exploitation in Reinforcement Learning

Jul 06, 2018

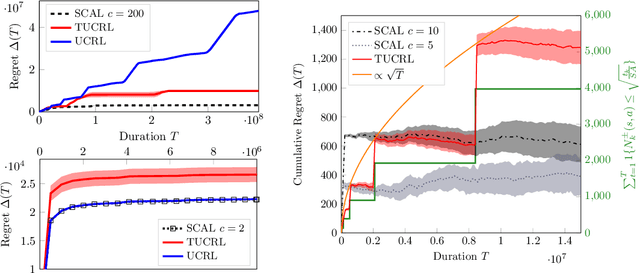

Abstract: We introduce SCAL, an algorithm designed to perform efficient exploration-exploitation in any unknown weakly-communicating Markov decision process (MDP) for which an upper bound $c$ on the span of the optimal bias function is known. For an MDP with $S$ states, $A$ actions and $\Gamma \leq S$ possible next states, we prove a regret bound of $\widetilde{O}(c\sqrt{\Gamma SAT})$, which significantly improves over existing algorithms (e.g., UCRL and PSRL), whose regret scales linearly with the MDP diameter $D$. In fact, the optimal bias span is finite and often much smaller than $D$ (e.g., $D=\infty$ in non-communicating MDPs). A similar result was originally derived by Bartlett and Tewari (2009) for REGAL.C, for which no tractable algorithm is available. In this paper, we relax the optimization problem at the core of REGAL.C, we carefully analyze its properties, and we provide the first computationally efficient algorithm to solve it. Finally, we report numerical simulations supporting our theoretical findings and showing how SCAL significantly outperforms UCRL in MDPs with large diameter and small span.
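For readers unfamiliar with the span, the quantity bounded by $c$ is $sp(h) = \max_s h(s) - \min_s h(s)$ for a bias (value) vector $h$. The sketch below computes it and shows one simple way to force a vector to satisfy a span constraint, by clipping entries above $\min_s h(s) + c$. Both helper names are hypothetical, and the clipping rule is an illustrative assumption rather than a description of the exact procedure used inside SCAL.

```python
def span(values):
    """Span of a value vector: max minus min."""
    return max(values) - min(values)


def truncate_span(values, c):
    """Clip entries so that the returned vector has span at most c.

    Entries above min(values) + c are lowered to that threshold;
    all other entries are left unchanged.
    """
    floor = min(values)
    return [min(v, floor + c) for v in values]


h = [0.0, 3.0, 10.0]
print(span(h))                     # span of the original vector
print(span(truncate_span(h, 4.0)))  # span after clipping is at most 4.0
```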

Near Optimal Exploration-Exploitation in Non-Communicating Markov Decision Processes

Jul 06, 2018

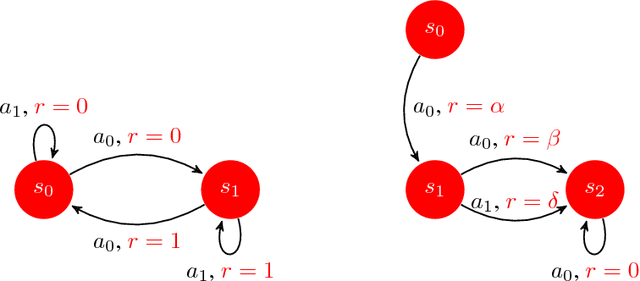

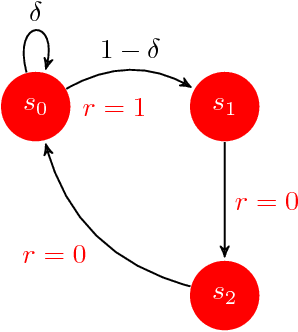

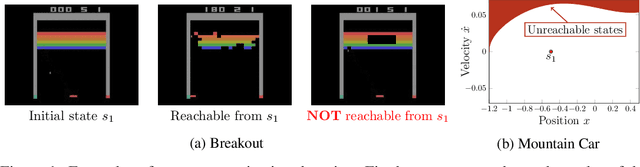

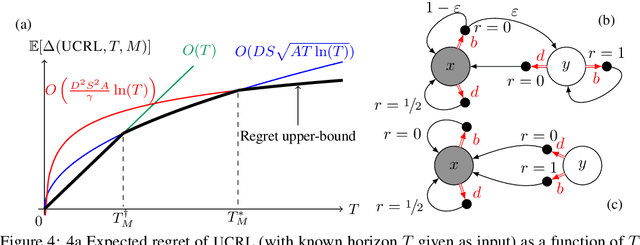

Abstract: While designing the state space of an MDP, it is common to include states that are transient or not reachable by any policy (e.g., in mountain car, the product space of speed and position contains configurations that are not physically reachable). This leads to defining weakly-communicating or multi-chain MDPs. In this paper, we introduce TUCRL, the first algorithm able to perform efficient exploration-exploitation in any finite Markov Decision Process (MDP) without requiring any form of prior knowledge. In particular, for any MDP with $S^{\texttt{C}}$ communicating states, $A$ actions and $\Gamma^{\texttt{C}} \leq S^{\texttt{C}}$ possible communicating next states, we derive a $\widetilde{O}(D^{\texttt{C}} \sqrt{\Gamma^{\texttt{C}} S^{\texttt{C}} AT})$ regret bound, where $D^{\texttt{C}}$ is the diameter (i.e., the longest shortest path) of the communicating part of the MDP. This is in contrast with optimistic algorithms (e.g., UCRL, Optimistic PSRL) that suffer linear regret in weakly-communicating MDPs, as well as posterior sampling or regularised algorithms (e.g., REGAL), which require prior knowledge of the bias span of the optimal policy to bias the exploration to achieve sub-linear regret. We also prove that in weakly-communicating MDPs, no algorithm can ever achieve a logarithmic growth of the regret without first suffering a linear regret for a number of steps that is exponential in the parameters of the MDP. Finally, we report numerical simulations supporting our theoretical findings and showing how TUCRL overcomes the limitations of the state-of-the-art.

Reinforcement Learning in Rich-Observation MDPs using Spectral Methods

Jun 19, 2018

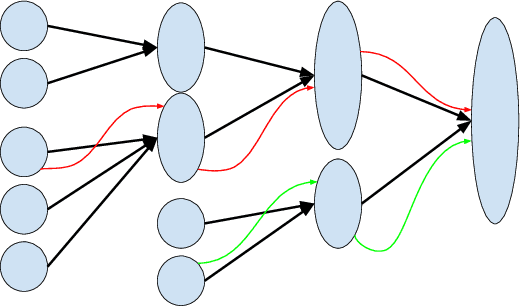

Abstract: Reinforcement learning (RL) in Markov decision processes (MDPs) with large state spaces is a challenging problem. The performance of standard RL algorithms degrades drastically with the dimensionality of state space. However, in practice, these large MDPs typically incorporate a latent or hidden low-dimensional structure. In this paper, we study the setting of rich-observation Markov decision processes (ROMDP), where there are a small number of hidden states which possess an injective mapping to the observation states. In other words, every observation state is generated through a single hidden state, and this mapping is unknown a priori. We introduce a spectral decomposition method that consistently learns this mapping, and more importantly, achieves it with low regret. The estimated mapping is integrated into an optimistic RL algorithm (UCRL), which operates on the estimated hidden space. We derive finite-time regret bounds for our algorithm with a weak dependence on the dimensionality of the observed space. In fact, our algorithm asymptotically achieves the same average regret as the oracle UCRL algorithm, which has the knowledge of the mapping from hidden to observed spaces. Thus, we derive an efficient spectral RL algorithm for ROMDPs.

Distributed Adaptive Sampling for Kernel Matrix Approximation

Mar 27, 2018

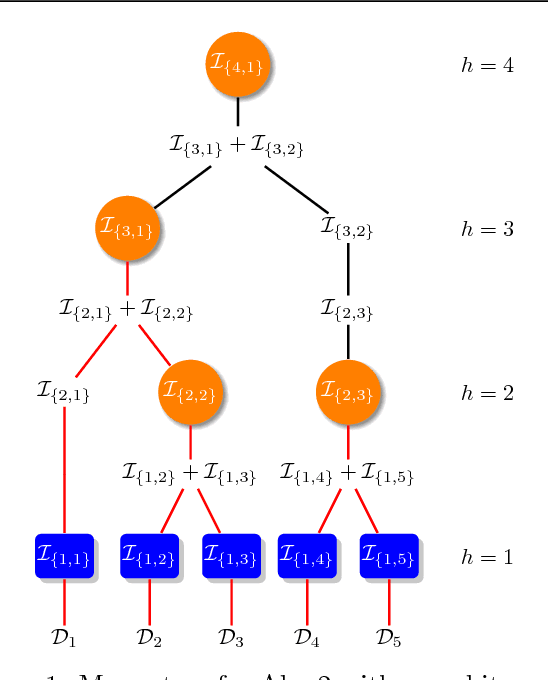

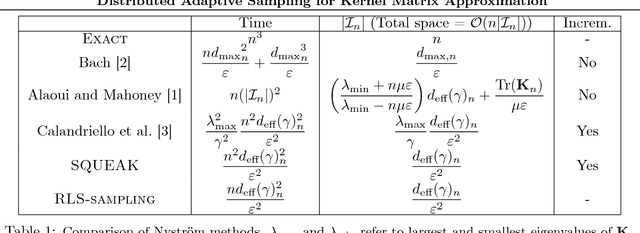

Abstract: Most kernel-based methods, such as kernel or Gaussian process regression, kernel PCA, ICA, or $k$-means clustering, do not scale to large datasets, because constructing and storing the kernel matrix $\mathbf{K}_n$ requires at least $\mathcal{O}(n^2)$ time and space for $n$ samples. Recent works show that sampling points with replacement according to their ridge leverage scores (RLS) generates small dictionaries of relevant points with strong spectral approximation guarantees for $\mathbf{K}_n$. The drawback of RLS-based methods is that computing exact RLS requires constructing and storing the whole kernel matrix. In this paper, we introduce SQUEAK, a new algorithm for kernel approximation based on RLS sampling that sequentially processes the dataset, storing a dictionary which creates accurate kernel matrix approximations with a number of points that only depends on the effective dimension $d_{eff}(\gamma)$ of the dataset. Moreover, since all the RLS estimations are efficiently performed using only the small dictionary, SQUEAK is the first RLS sampling algorithm that never constructs the whole matrix $\mathbf{K}_n$, runs in linear time $\widetilde{\mathcal{O}}(nd_{eff}(\gamma)^3)$ w.r.t. $n$, and requires only a single pass over the dataset. We also propose a parallel and distributed version of SQUEAK that linearly scales across multiple machines, achieving similar accuracy in as little as $\widetilde{\mathcal{O}}(\log(n)d_{eff}(\gamma)^3)$ time.
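As a point of reference, the exact quantities mentioned above can be computed directly when $n$ is small. The sketch below uses the standard definitions $\tau_i(\gamma) = [\mathbf{K}_n(\mathbf{K}_n + \gamma \mathbf{I})^{-1}]_{ii}$ and $d_{eff}(\gamma) = \sum_i \tau_i(\gamma)$. It is the naive computation that builds the full kernel matrix, which is precisely the cost SQUEAK is designed to avoid, and it is not an implementation of SQUEAK. The function names are hypothetical and NumPy is assumed to be available.

```python
import numpy as np


def ridge_leverage_scores(K, gamma):
    """Exact ridge leverage scores: the diagonal of K (K + gamma I)^{-1}.

    K is a symmetric positive semi-definite kernel matrix and gamma > 0.
    """
    n = K.shape[0]
    # Solve (K + gamma I) X = K rather than forming an explicit inverse.
    # K and (K + gamma I)^{-1} commute, so X has the required diagonal.
    X = np.linalg.solve(K + gamma * np.eye(n), K)
    return np.diag(X).copy()


def effective_dimension(K, gamma):
    """Effective dimension: the sum of the ridge leverage scores."""
    return float(ridge_leverage_scores(K, gamma).sum())


# Small example with a Gaussian (RBF) kernel on random points.
rng = np.random.default_rng(0)
points = rng.normal(size=(50, 2))
sq_dists = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
K = np.exp(-0.5 * sq_dists)
print(effective_dimension(K, gamma=1.0))
```

Each score lies between 0 and 1, so the effective dimension never exceeds $n$ and is typically much smaller for smooth kernels.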

Active Learning for Accurate Estimation of Linear Models

Jul 29, 2017

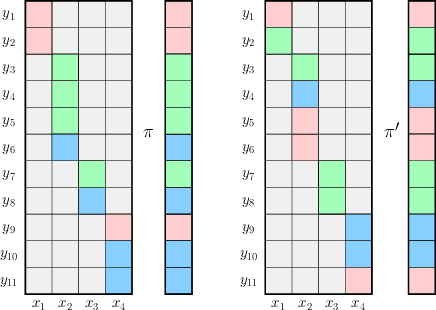

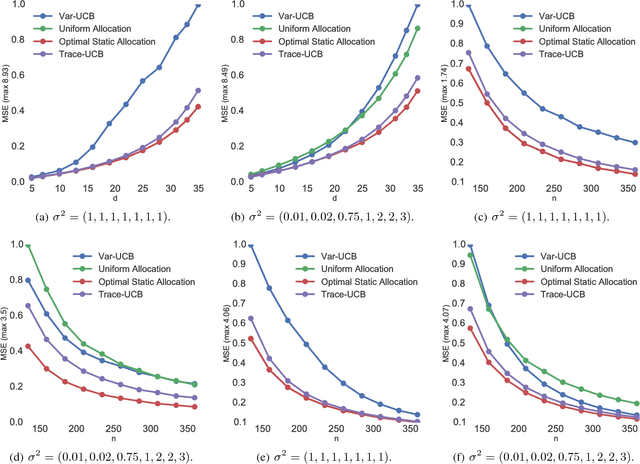

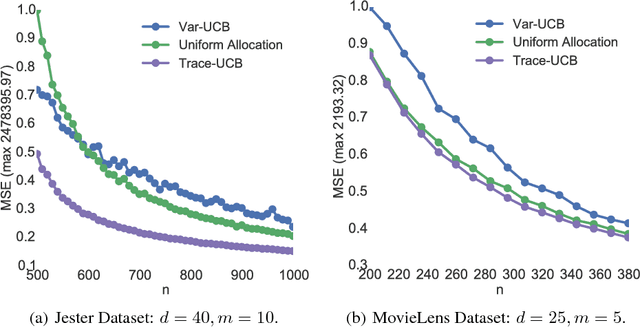

Abstract: We explore the sequential decision making problem where the goal is to estimate uniformly well a number of linear models, given a shared budget of random contexts independently sampled from a known distribution. The decision maker must query one of the linear models for each incoming context, and receives an observation corrupted by noise levels that are unknown, and depend on the model instance. We present Trace-UCB, an adaptive allocation algorithm that learns the noise levels while balancing contexts accordingly across the different linear functions, and derive guarantees for simple regret in both expectation and high-probability. Finally, we extend the algorithm and its guarantees to high dimensional settings, where the number of linear models times the dimension of the contextual space is higher than the total budget of samples. Simulations with real data suggest that Trace-UCB is remarkably robust, outperforming a number of baselines even when its assumptions are violated.

Second-Order Kernel Online Convex Optimization with Adaptive Sketching

Jun 15, 2017

Abstract: Kernel online convex optimization (KOCO) is a framework combining the expressiveness of non-parametric kernel models with the regret guarantees of online learning. First-order KOCO methods such as functional gradient descent require only $\mathcal{O}(t)$ time and space per iteration, and, when the only information on the losses is their convexity, achieve a minimax optimal $\mathcal{O}(\sqrt{T})$ regret. Nonetheless, many common losses in kernel problems, such as squared loss, logistic loss, and squared hinge loss, possess stronger curvature that can be exploited. In this case, second-order KOCO methods achieve $\mathcal{O}(\log(\text{Det}(\boldsymbol{K})))$ regret, which we show scales as $\mathcal{O}(d_{\text{eff}}\log T)$, where $d_{\text{eff}}$ is the effective dimension of the problem and is usually much smaller than $\mathcal{O}(\sqrt{T})$. The main drawback of second-order methods is their much higher $\mathcal{O}(t^2)$ space and time complexity. In this paper, we introduce kernel online Newton step (KONS), a new second-order KOCO method that also achieves $\mathcal{O}(d_{\text{eff}}\log T)$ regret. To address the computational complexity of second-order methods, we introduce a new matrix sketching algorithm for the kernel matrix $\boldsymbol{K}_t$, and show that for a chosen parameter $\gamma \leq 1$ our Sketched-KONS reduces the space and time complexity by a factor of $\gamma^2$ to $\mathcal{O}(t^2\gamma^2)$ space and time per iteration, while incurring only $1/\gamma$ times more regret.

Experimental results: Reinforcement Learning of POMDPs using Spectral Methods

May 07, 2017

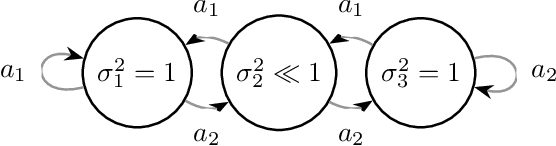

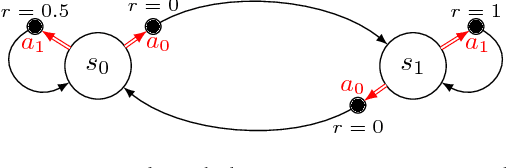

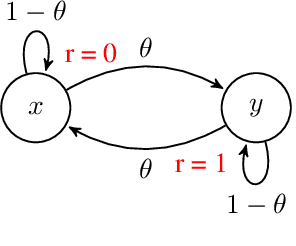

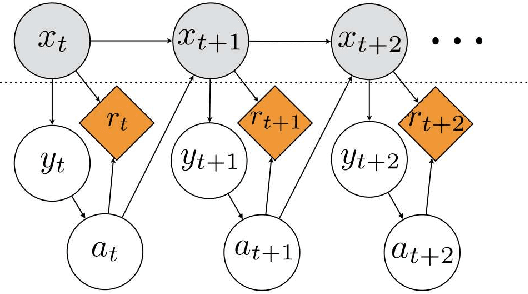

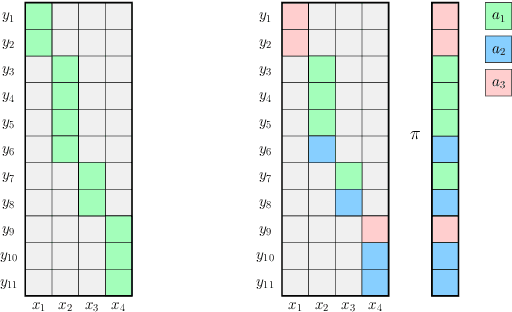

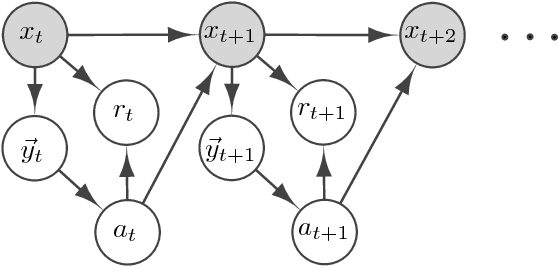

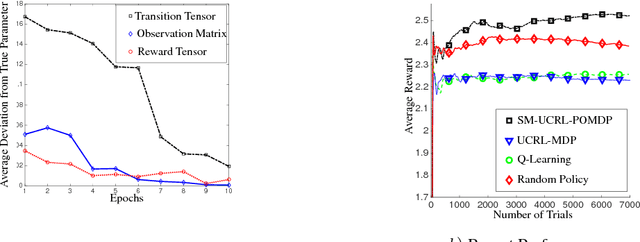

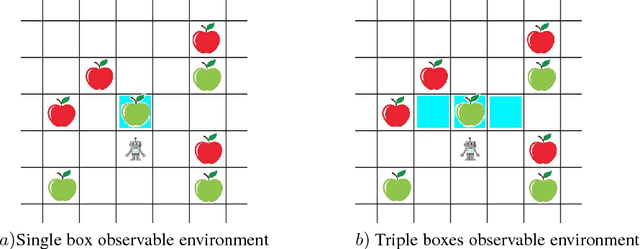

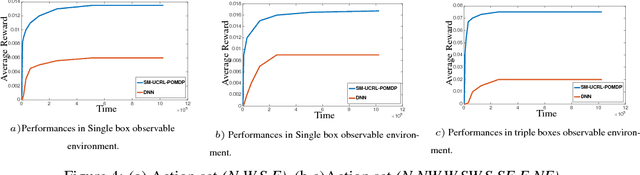

Abstract: We propose a new reinforcement learning algorithm for partially observable Markov decision processes (POMDP) based on spectral decomposition methods. While spectral methods have been previously employed for consistent learning of (passive) latent variable models such as hidden Markov models, POMDPs are more challenging since the learner interacts with the environment and possibly changes the future observations in the process. We devise a learning algorithm running through epochs; in each epoch, we employ spectral techniques to learn the POMDP parameters from a trajectory generated by a fixed policy. At the end of the epoch, an optimization oracle returns the optimal memoryless planning policy which maximizes the expected reward based on the estimated POMDP model. We prove an order-optimal regret bound with respect to the optimal memoryless policy and efficient scaling with respect to the dimensionality of observation and action spaces.

* 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain
