Abhishek Singh

Microsoft

SplitNN-driven Vertical Partitioning

Aug 07, 2020

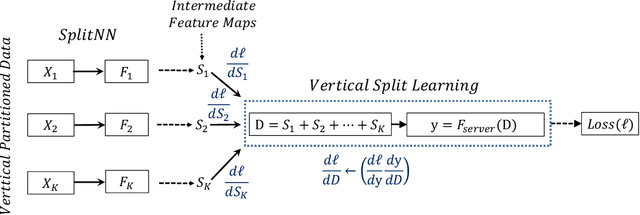

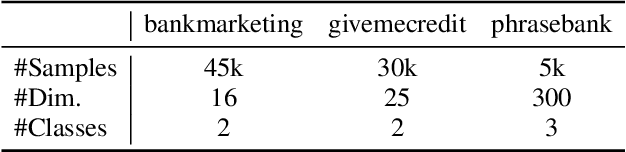

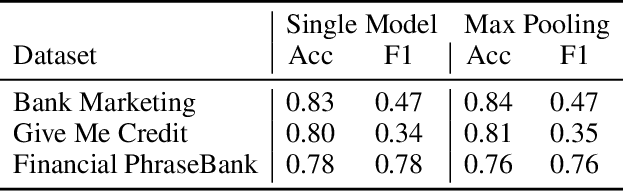

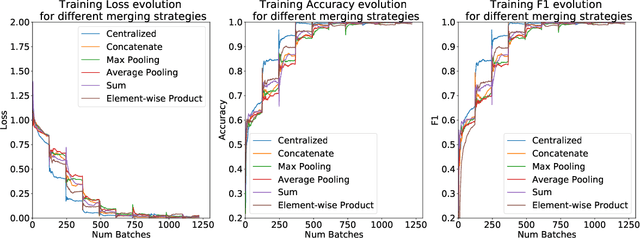

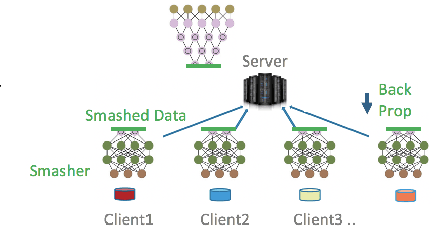

Abstract: In this work, we introduce SplitNN-driven Vertical Partitioning, a configuration of a distributed deep learning method called SplitNN to facilitate learning from vertically distributed features. SplitNN does not share raw data or model details with collaborating institutions. The proposed configuration allows training among institutions holding diverse sources of data without the need for complex encryption algorithms or secure computation protocols. We evaluate several configurations to merge the outputs of the split models, and compare performance and resource efficiency. The method is flexible and allows many different configurations to tackle the specific challenges posed by vertically split datasets.
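To make the idea of merging the outputs of split models concrete, here is a minimal sketch in plain Python. It assumes each institution runs a small partial model (here a single linear layer with ReLU) on its own feature columns and sends only the resulting activations to a server, which merges them. The function names, the layer shapes, and the three merge strategies (concatenation, element-wise average, element-wise max) are illustrative assumptions, not the configurations evaluated in the paper.

```python
from typing import Callable, Dict, List

Vector = List[float]


def client_forward(features: Vector, weights: List[Vector]) -> Vector:
    """Hypothetical client-side partial model: one linear layer followed by ReLU.

    Only the returned activations leave the institution; the raw features
    and the weights stay local.
    """
    return [max(0.0, sum(w * x for w, x in zip(row, features))) for row in weights]


def merge_concat(outputs: List[Vector]) -> Vector:
    """Concatenate client activations; output sizes may differ per client."""
    return [value for out in outputs for value in out]


def merge_average(outputs: List[Vector]) -> Vector:
    """Element-wise average; assumes all clients emit vectors of equal length."""
    return [sum(values) / len(outputs) for values in zip(*outputs)]


def merge_max(outputs: List[Vector]) -> Vector:
    """Element-wise max; assumes all clients emit vectors of equal length."""
    return [max(values) for values in zip(*outputs)]


# Assumed set of merge strategies, keyed by name.
MERGE_STRATEGIES: Dict[str, Callable[[List[Vector]], Vector]] = {
    "concat": merge_concat,
    "average": merge_average,
    "max": merge_max,
}


def server_merge(client_outputs: List[Vector], strategy: str) -> Vector:
    """Server-side step: combine client activations before the remaining layers."""
    return MERGE_STRATEGIES[strategy](client_outputs)


# Two institutions hold different feature columns for the same record.
out_a = client_forward([1.0, 2.0], [[1.0, 0.0], [0.0, 1.0]])
out_b = client_forward([3.0, -1.0, 0.5], [[1.0, 1.0, 0.0], [0.0, 0.0, 2.0]])

for name in MERGE_STRATEGIES:
    print(name, server_merge([out_a, out_b], name))
```

In this sketch, concatenation keeps every client's activations but grows the server input with the number of clients, while the element-wise strategies keep the server input size fixed at the cost of requiring equal-length client outputs.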

FedML: A Research Library and Benchmark for Federated Machine Learning

Jul 27, 2020

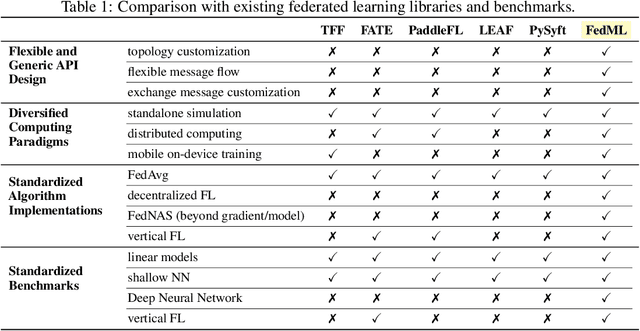

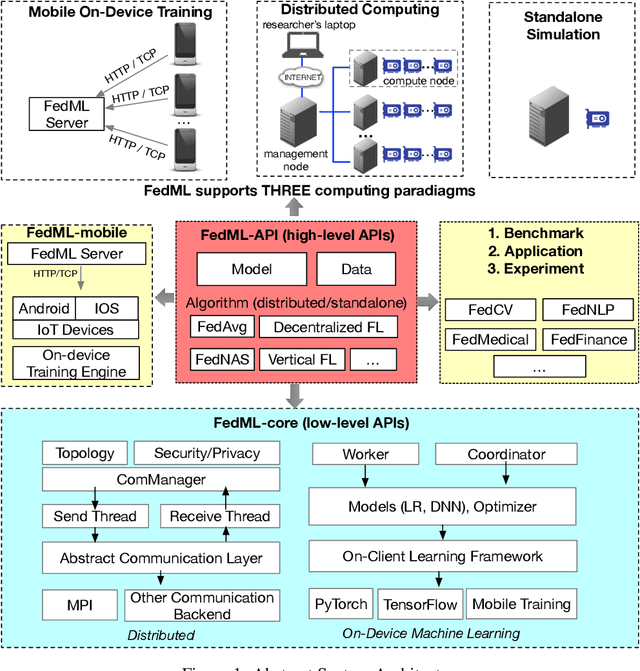

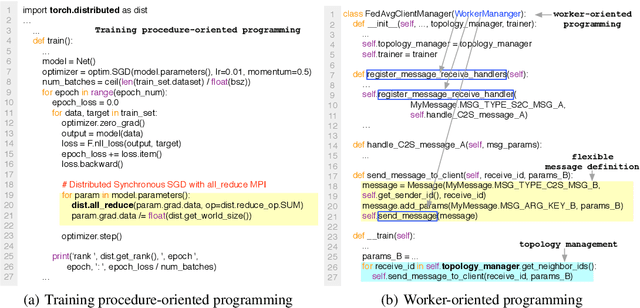

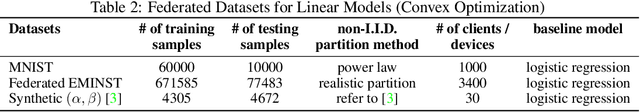

Abstract: Federated learning is a rapidly growing research field in the machine learning domain. Although considerable research efforts have been made, existing libraries cannot adequately support diverse algorithmic development (e.g., diverse topology and flexible message exchange), and inconsistent dataset and model usage in experiments make fair comparisons difficult. In this work, we introduce FedML, an open research library and benchmark that facilitates the development of new federated learning algorithms and fair performance comparisons. FedML supports three computing paradigms (distributed training, mobile on-device training, and standalone simulation) for users to conduct experiments in different system environments. FedML also promotes diverse algorithmic research with flexible and generic API design and reference baseline implementations. A curated and comprehensive benchmark dataset for the non-I.I.D. setting aims at making a fair comparison. We believe FedML can provide an efficient and reproducible means of developing and evaluating algorithms for the federated learning research community. We maintain the source code, documents, and user community at https://FedML.ai.

Voice@SRIB at SemEval-2020 Task : Sentiment and Offensiveness detection in Social Media

Jul 20, 2020

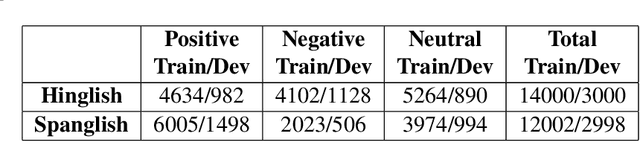

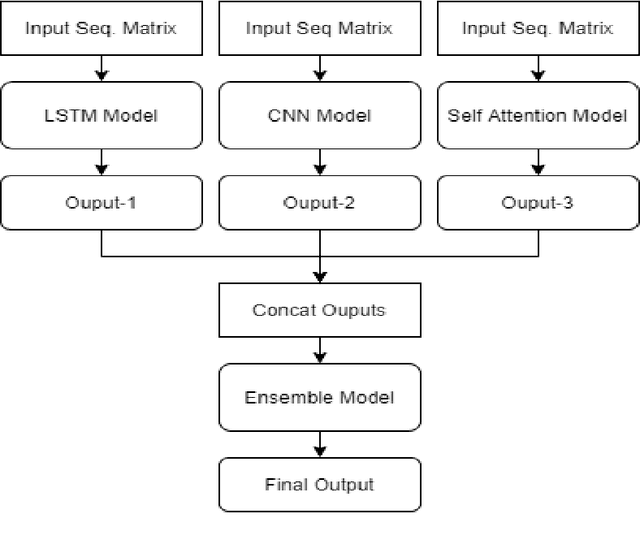

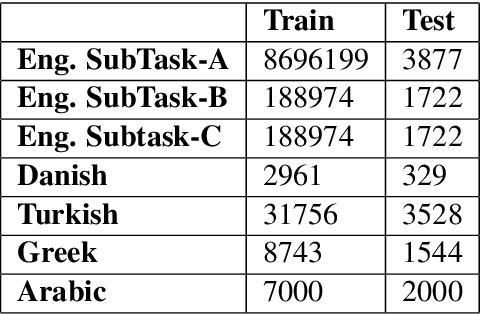

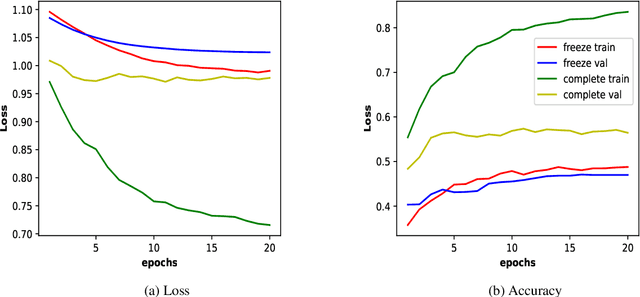

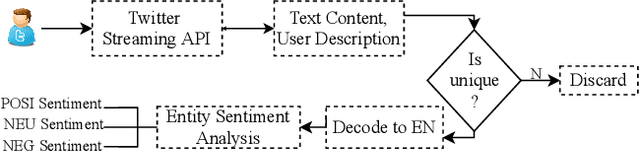

Abstract: On social-media platforms such as Twitter, Facebook, and Reddit, people prefer to use code-mixed language such as Spanish-English or Hindi-English to express their opinions. In this paper, we describe the models we used, the external dataset used to train embeddings, and the ensembling methods for the Sentimix and OffensEval tasks. The use of pre-trained embeddings usually helps in multiple tasks such as sentence classification and machine translation. In this experiment, we applied our trained code-mixed embeddings and Twitter pre-trained embeddings to the SemEval tasks. We evaluate our models on macro F1-score, precision, accuracy, and recall on the datasets. We intend to show that hyper-parameter tuning and data pre-processing steps substantially improve the scores. In our experiments, we achieve 0.886 F1-Macro on the OffensEval Greek language subtask post-evaluation, whereas the highest score during the Evaluation Period was 0.852. We placed third in the Spanglish competition with our best F1-score of 0.756. Our Codalab username is asking28.
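For readers unfamiliar with the macro F1-score reported above, the following sketch computes it from scratch: F1 is calculated per class and then averaged with equal weight per class, regardless of class frequency. The label names and the toy predictions are made up for illustration and are not data from the paper.

```python
from typing import Hashable, Sequence


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of per-class F1 over every label seen in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred), key=str)
    per_class_f1 = []
    for label in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        if precision + recall:
            f1 = 2 * precision * recall / (precision + recall)
        else:
            f1 = 0.0  # class never predicted correctly
        per_class_f1.append(f1)
    return sum(per_class_f1) / len(per_class_f1)


# Toy example with hypothetical labels: "off" (offensive) and "not".
truth = ["off", "off", "not", "not"]
preds = ["off", "not", "not", "not"]
print(round(macro_f1(truth, preds), 4))
```

Because every class contributes equally, macro F1 penalizes a model that ignores a rare class, which matters for imbalanced tasks such as offensive-language detection.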

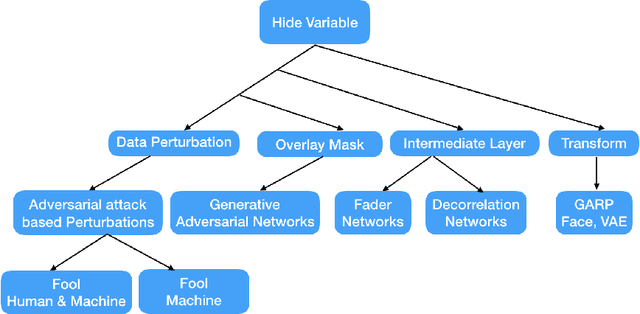

Privacy in Deep Learning: A Survey

May 09, 2020

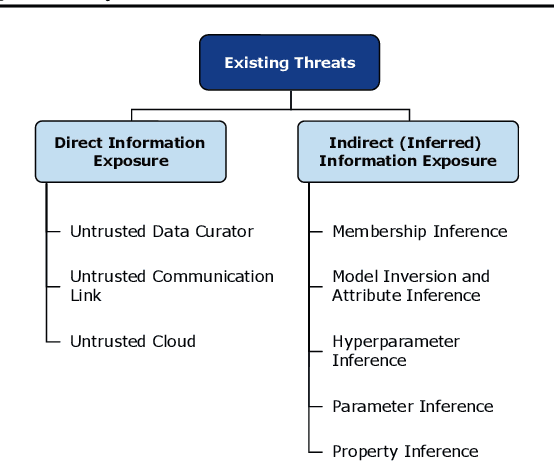

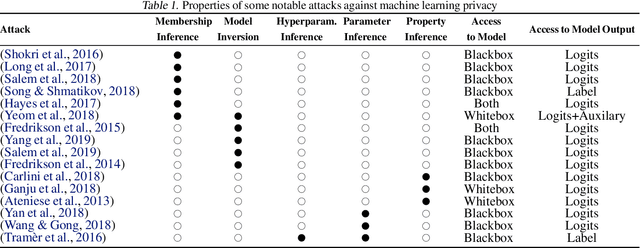

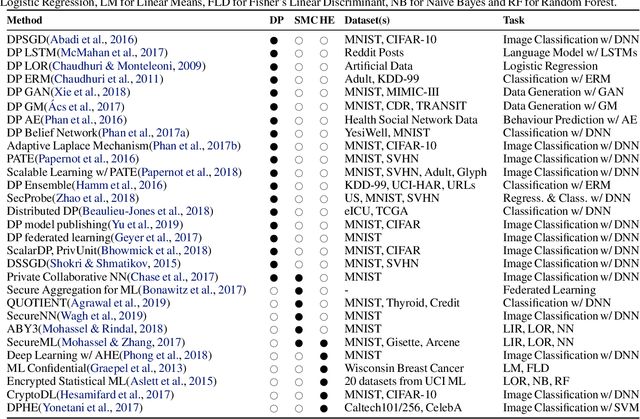

Abstract: The ever-growing advances of deep learning in many areas, including vision, recommendation systems, and natural language processing, have led to the adoption of Deep Neural Networks (DNNs) in production systems. The availability of large datasets and high computational power are the main contributors to these advances. The datasets are usually crowdsourced and may contain sensitive information. This poses serious privacy concerns, as this data can be misused or leaked through various vulnerabilities. Even if the cloud provider and the communication link are trusted, there are still threats of inference attacks in which an attacker could speculate about properties of the data used for training, or find the underlying model architecture and parameters. In this survey, we review the privacy concerns brought by deep learning and the mitigating techniques introduced to tackle these issues. We also show that there is a gap in the literature regarding test-time inference privacy, and propose possible future research directions.

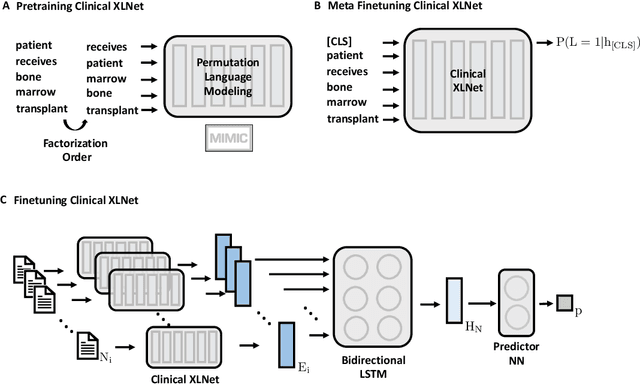

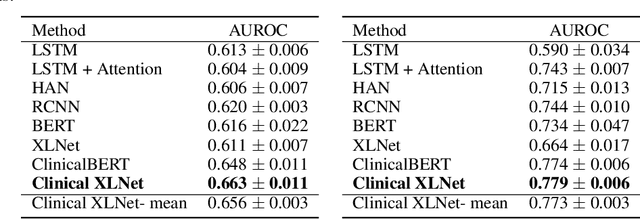

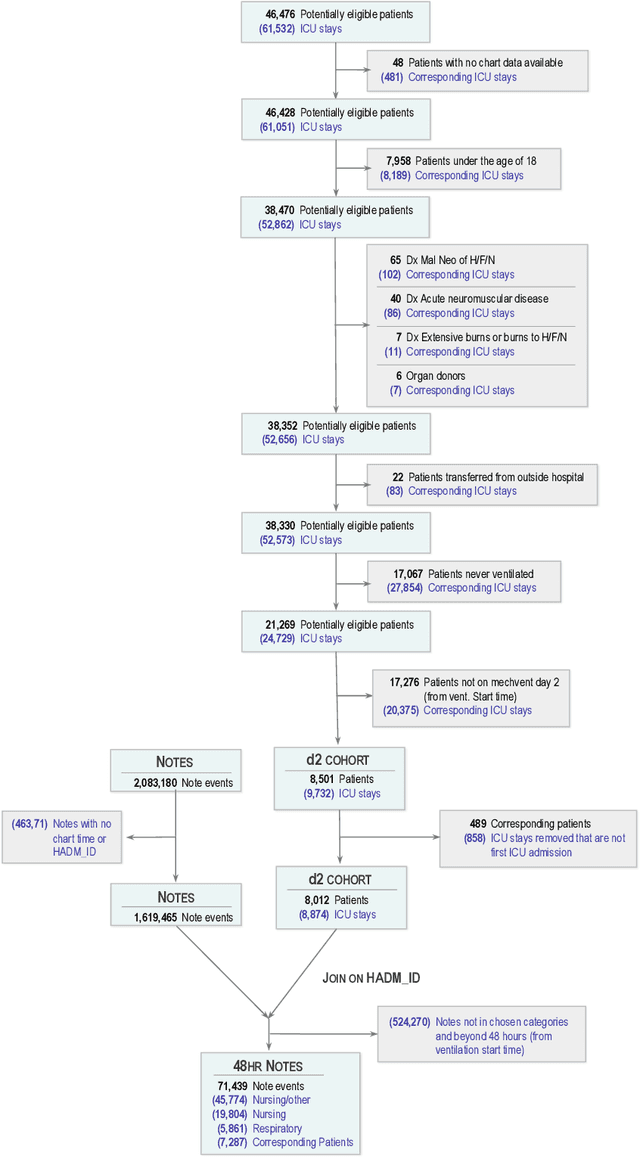

Clinical XLNet: Modeling Sequential Clinical Notes and Predicting Prolonged Mechanical Ventilation

Dec 27, 2019

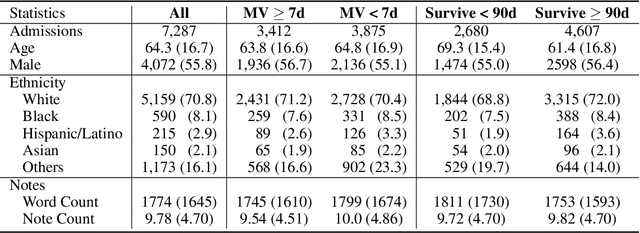

Abstract: Clinical notes contain rich data, which is unexploited in predictive modeling compared to structured data. In this work, we developed a new text representation, Clinical XLNet, for clinical notes, which also leverages the temporal information of the sequence of the notes. We evaluated our models on the prolonged mechanical ventilation prediction problem, and our experiments demonstrated that Clinical XLNet consistently outperforms the best baselines.

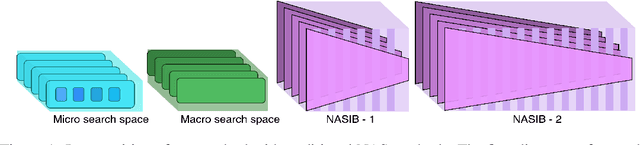

NASIB: Neural Architecture Search withIn Budget

Oct 19, 2019

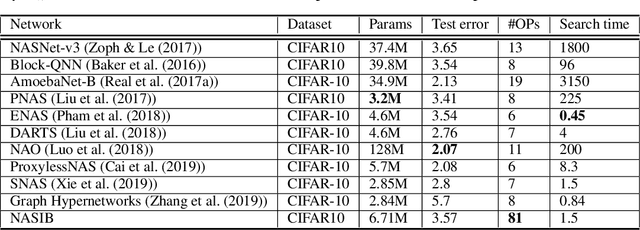

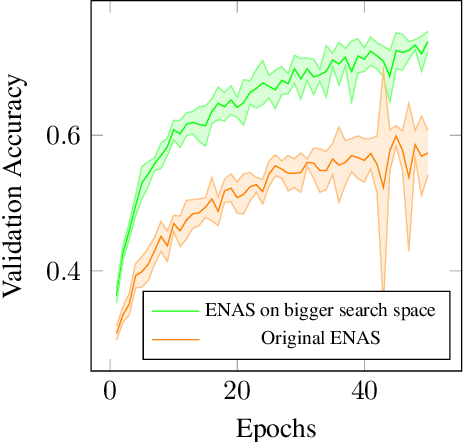

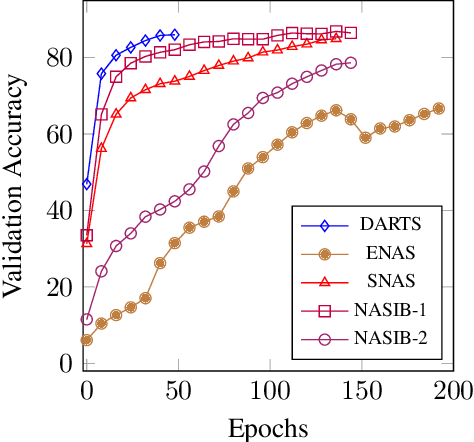

Abstract: Neural Architecture Search (NAS) represents a class of methods that generate the optimal neural network architecture and typically iterate over candidate architectures until convergence on some particular metric such as validation loss. They are constrained by the available computation resources, especially in enterprise environments. In this paper, we propose a new approach for NAS, called NASIB, which adapts and attunes to the computation resources (budget) available by varying the exploration vs. exploitation trade-off. We reduce the expert bias by searching over an augmented search space induced by Superkernels. The proposed method can provide architecture search that is useful for different computation resources and for domains beyond image classification of natural images, where we lack bespoke architecture motifs and domain expertise. We show, on CIFAR10, that it is possible to search over a space that comprises 12x more candidate operations than the traditional prior art in just 1.5 GPU days, while reaching close to state-of-the-art accuracy. While our method searches over an exponentially larger search space, it could lead to novel architectures that require less domain expertise compared to the majority of the existing methods.

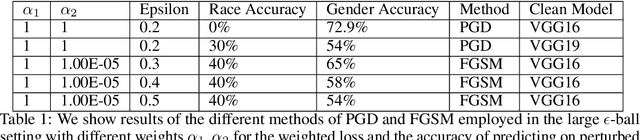

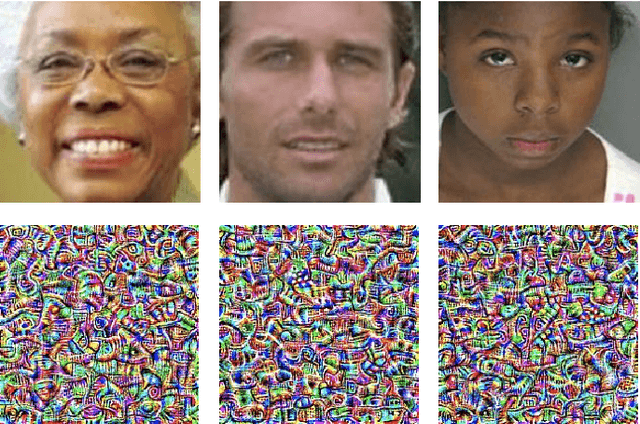

Maximal adversarial perturbations for obfuscation: Hiding certain attributes while preserving rest

Sep 27, 2019

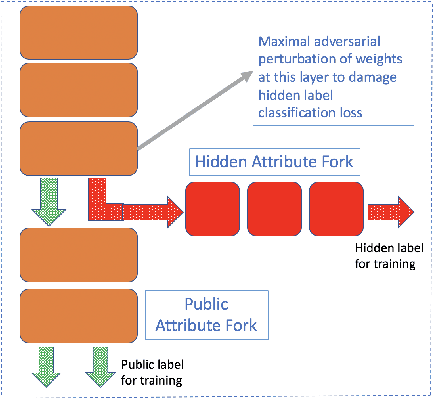

Abstract: In this paper, we investigate the use of adversarial perturbations for the purpose of privacy from human perception and model (machine) based detection. We employ adversarial perturbations to obfuscate certain variables in raw data while preserving the rest. Current adversarial perturbation methods are used for data poisoning with minimal perturbations of the raw data, such that the machine learning model's performance is adversely impacted while human vision cannot perceive the difference in the poisoned dataset due to the minimal nature of the perturbations. We instead apply relatively maximal perturbations of raw data to conditionally damage the model's classification of one attribute while preserving the model's performance on another attribute. In addition, the maximal nature of the perturbation adversely impacts human perception in classifying the hidden attribute, apart from impacting model performance. We validate our result qualitatively by showing the obfuscated dataset, and quantitatively by showing the inability of models trained on clean data to predict the hidden attribute from the perturbed dataset while being able to predict the rest of the attributes.

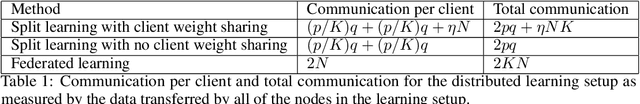

Detailed comparison of communication efficiency of split learning and federated learning

Sep 18, 2019

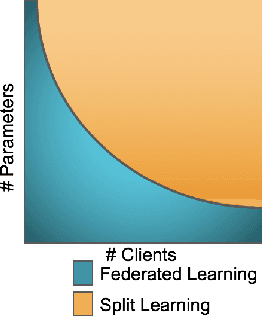

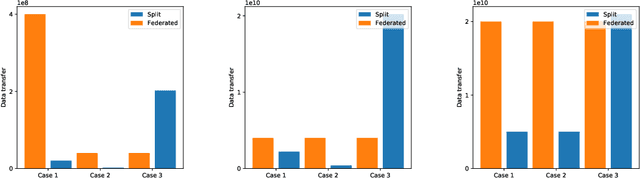

Abstract: We compare the communication efficiencies of two compelling distributed machine learning approaches: split learning and federated learning. We show useful settings under which each method outperforms the other in terms of communication efficiency. We consider various practical scenarios of distributed learning setups and juxtapose the two methods under various real-life scenarios. We consider settings with small and large numbers of clients, as well as small models (1M - 6M parameters), large models (10M - 200M parameters), and very large models (1 Billion - 100 Billion parameters). We show that increasing the number of clients or the model size favors the split learning setup over federated learning, while increasing the number of data samples while keeping the number of clients or model size low makes federated learning more communication efficient.
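The trade-off described above can be illustrated with a deliberately simplified cost model. It is an assumption made for this sketch, not the accounting used in the paper: a federated client uploads and downloads the full model once per epoch, while a split learning client sends cut-layer activations and receives their gradients once per local sample, with a fixed total dataset divided evenly among clients. All function and parameter names are hypothetical.

```python
def federated_cost(model_params: int) -> float:
    """Assumed per-client cost per epoch: upload plus download of the full model."""
    return 2.0 * model_params


def split_cost(total_samples: int, num_clients: int, cut_layer_size: int) -> float:
    """Assumed per-client cost per epoch: activations out and gradients back
    for every local sample, with the dataset split evenly across clients."""
    samples_per_client = total_samples / num_clients
    return 2.0 * samples_per_client * cut_layer_size


def cheaper_method(model_params: int, total_samples: int,
                   num_clients: int, cut_layer_size: int) -> str:
    """Return which method moves fewer values per client under this toy model."""
    fed = federated_cost(model_params)
    split = split_cost(total_samples, num_clients, cut_layer_size)
    return "federated" if fed < split else "split"


# Small model, few clients, many samples per client.
print(cheaper_method(1_000_000, 1_000_000, 10, 1_000))
# Same data, but a very large model.
print(cheaper_method(1_000_000_000, 1_000_000, 10, 1_000))
# Same small model, but many more clients sharing the same data.
print(cheaper_method(1_000_000, 1_000_000, 10_000, 1_000))
```

Under these assumptions the federated cost scales with model size only, while the split learning cost scales with samples per client, which is why growing the model or the client count tips the balance toward split learning and growing the per-client data tips it toward federated learning.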

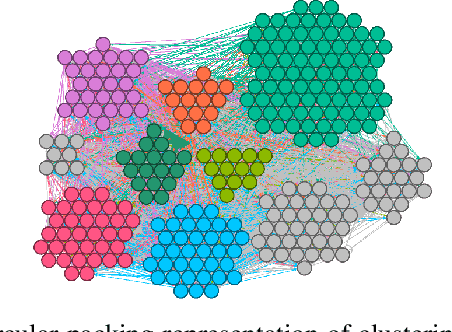

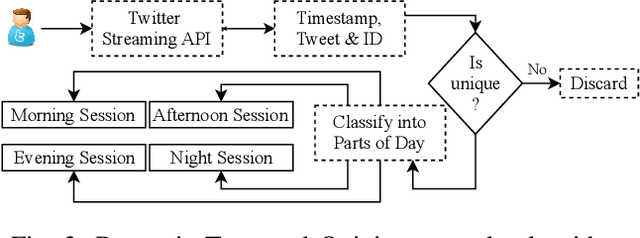

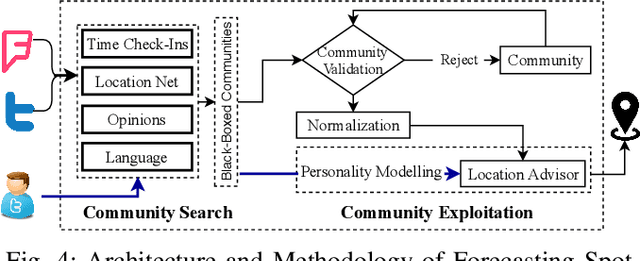

"When and Where?": Behavior Dominant Location Forecasting with Micro-blog Streams

Dec 16, 2018

Abstract: The proliferation of smartphones and wearable devices has increased the availability of large amounts of geospatial streams that enable significant automated discovery of knowledge in pervasive environments, but the most prominent information related to changing interests has not yet been adequately capitalized on. In this paper, we provide a novel algorithm to exploit the dynamic fluctuations in a user's point-of-interest while forecasting the future place of visit with fine granularity. Our proposed algorithm is based on the dynamic formation of collective personality communities using different languages, opinions, and geographical and temporal distributions for finding optimized equivalent content. We performed extensive empirical experiments involving real-time streams derived from 0.6 million stream tuples of micro-blog comprising 1945 social person fusion with a graph algorithm and a feed-forward neural network model as a predictive classification model. Lastly, the framework achieves 62.10% mean average precision on 120,000 embeddings on unlabeled users and, surprisingly, an 85.92% increment over the state-of-the-art approach.

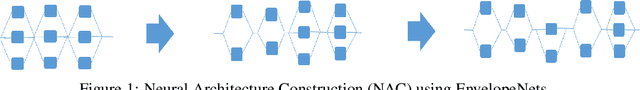

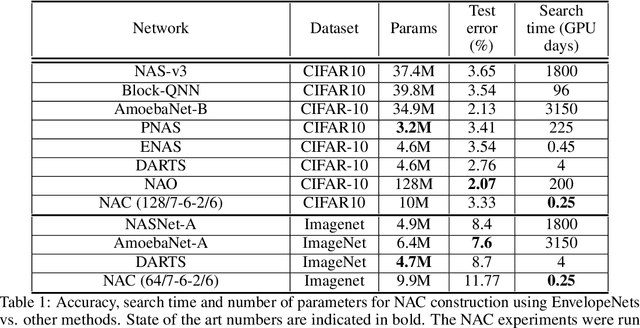

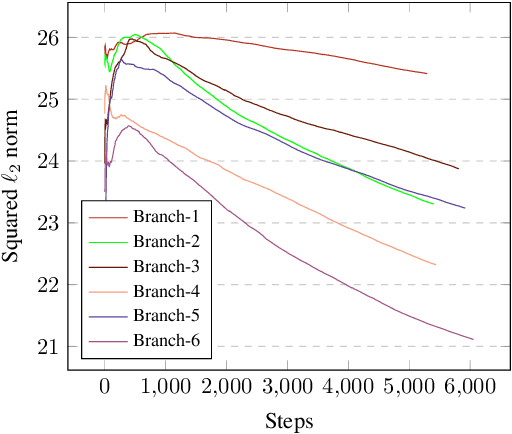

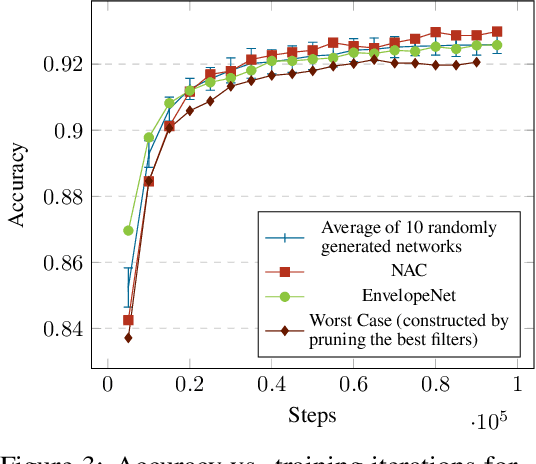

Neural Architecture Construction using EnvelopeNets

May 22, 2018

Abstract: In recent years, several automated search methods for neural network architectures have been proposed using methods such as evolutionary algorithms and reinforcement learning. These methods use an objective function (usually accuracy) that is evaluated after a full training and evaluation cycle. We show that statistics derived from filter featuremaps reach a state where the utility of different filters within a network can be compared, and hence can be used to construct networks. The number of training epochs needed for filters within a network to reach this state is much smaller than the number needed for the accuracy of a network to stabilize. EnvelopeNets is a construction method that exploits this finding to design convolutional neural nets (CNNs) in a fraction of the time needed by conventional search methods. The constructed networks show close to state-of-the-art performance on the image classification problem on well-known datasets (CIFAR-10, ImageNet) and consistently show better performance than hand-constructed and randomly generated networks of the same depth, operators, and approximately the same number of parameters.
