Floorplancad

Papers and Code

CADSpotting: Robust Panoptic Symbol Spotting on Large-Scale CAD Drawings

Dec 10, 2024

We introduce CADSpotting, an efficient method for panoptic symbol spotting in large-scale architectural CAD drawings. Existing approaches struggle with the diversity of symbols, scale variations, and overlapping elements in CAD designs. CADSpotting overcomes these challenges by representing each primitive with dense points instead of a single primitive point, described by essential attributes like coordinates and color. Building upon a unified 3D point cloud model for joint semantic, instance, and panoptic segmentation, CADSpotting learns robust feature representations. To enable accurate segmentation in large, complex drawings, we further propose a novel Sliding Window Aggregation (SWA) technique, combining weighted voting and Non-Maximum Suppression (NMS). Moreover, we introduce a large-scale CAD dataset named LS-CAD to support our experiments. Each floorplan in LS-CAD has an average coverage of 1,000 square meters (versus 100 square meters in the existing dataset), providing a valuable benchmark for symbol spotting research. Experimental results on the FloorPlanCAD and LS-CAD datasets demonstrate that CADSpotting outperforms existing methods, showcasing its robustness and scalability for real-world CAD applications.
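To make the "dense points instead of a single primitive point" idea concrete, here is a minimal sketch for one kind of primitive, a straight line segment. The function names, the fixed `spacing` parameter, and the `(x, y, r, g, b)` point layout are assumptions for illustration; the abstract does not specify how CADSpotting samples primitives.

```python
import math


def sample_segment(p0, p1, spacing=1.0):
    """Return evenly spaced points along the segment p0 -> p1, endpoints included.

    `spacing` is a hypothetical upper bound on the gap between samples.
    """
    (x0, y0), (x1, y1) = p0, p1
    length = math.hypot(x1 - x0, y1 - y0)
    # Use at least one interval so very short segments keep both endpoints.
    n = max(1, math.ceil(length / spacing))
    return [
        (x0 + (x1 - x0) * i / n, y0 + (y1 - y0) * i / n)
        for i in range(n + 1)
    ]


def primitive_to_points(segment, color, spacing=1.0):
    """Attach the primitive's color to every sampled point: (x, y, r, g, b)."""
    return [(x, y, *color) for x, y in sample_segment(*segment, spacing=spacing)]


# A red horizontal line of length 4 becomes 5 points instead of 1.
points = primitive_to_points(((0.0, 0.0), (4.0, 0.0)), color=(255, 0, 0))
```

Compared with a single point per primitive, a long wall and a short door jamb now contribute different numbers of points, so scale is visible to a point cloud model.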

VectorGraphNET: Graph Attention Networks for Accurate Segmentation of Complex Technical Drawings

Oct 02, 2024

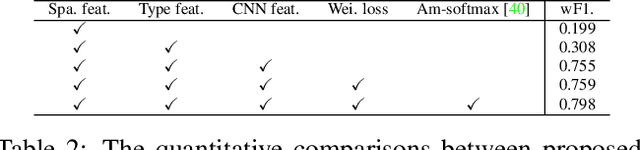

This paper introduces a new approach to extract and analyze vector data from technical drawings in PDF format. Our method involves converting PDF files into SVG format and creating a feature-rich graph representation, which captures the relationships between vector entities using geometrical information. We then apply a graph attention transformer with hierarchical label definition to achieve accurate line-level segmentation. We evaluate our approach on two datasets, including the public FloorPlanCAD dataset, on which it achieves state-of-the-art weighted F1 (wF1) scores on the semantic segmentation task, surpassing existing methods. The proposed vector-based method offers a more scalable solution for large-scale technical drawing analysis compared to vision-based approaches, while also requiring significantly less GPU power than current state-of-the-art vector-based techniques. Our results demonstrate the effectiveness of our approach in extracting meaningful information from technical drawings, enabling new applications, and improving existing workflows in the AEC industry. Potential applications of our approach include automated building information modeling (BIM) and construction planning, which could significantly impact the efficiency and productivity of the industry.
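As a rough illustration of a graph built from vector entities, the sketch below turns line segments into nodes with simple geometric features and connects two nodes when their segments share an endpoint. The feature set (`length`, `angle`), the shared-endpoint rule, and the `tol` parameter are all assumptions; the abstract does not state which features or edge criteria VectorGraphNET uses.

```python
import math
from itertools import combinations


def build_graph(segments, tol=1e-6):
    """Build (nodes, edges) from a list of ((x0, y0), (x1, y1)) segments.

    Nodes carry hypothetical geometric features; an edge (i, j) is added
    when segments i and j have endpoints closer than `tol`.
    """
    def touches(a, b):
        return any(math.dist(p, q) <= tol for p in a for q in b)

    nodes = [
        {
            "length": math.dist(p, q),
            "angle": math.atan2(q[1] - p[1], q[0] - p[0]),
        }
        for p, q in segments
    ]
    edges = [
        (i, j)
        for i, j in combinations(range(len(segments)), 2)
        if touches(segments[i], segments[j])
    ]
    return nodes, edges


# Two segments forming a corner, plus one isolated segment.
segments = [((0, 0), (1, 0)), ((1, 0), (1, 1)), ((5, 5), (6, 5))]
nodes, edges = build_graph(segments)
```

The pairwise check is quadratic in the number of segments; a spatial index would be the obvious replacement for drawings with many entities.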

Symbol as Points: Panoptic Symbol Spotting via Point-based Representation

Jan 19, 2024

This work studies the problem of panoptic symbol spotting, which is to spot and parse both countable object instances (windows, doors, tables, etc.) and uncountable stuff (wall, railing, etc.) from computer-aided design (CAD) drawings. Existing methods typically involve either rasterizing the vector graphics into images and using image-based methods for symbol spotting, or directly building graphs and using graph neural networks for symbol recognition. In this paper, we take a different approach, which treats graphic primitives as a set of 2D points that are locally connected and uses point cloud segmentation methods to tackle it. Specifically, we utilize a point transformer to extract the primitive features and append a mask2former-like spotting head to predict the final output. To better use the local connection information of primitives and enhance their discriminability, we further propose the attention with connection module (ACM) and contrastive connection learning scheme (CCL). Finally, we propose a KNN interpolation mechanism for the mask attention module of the spotting head to better handle primitive mask downsampling, which is primitive-level in contrast to pixel-level for the image. Our approach, named SymPoint, is simple yet effective, outperforming the recent state-of-the-art method GAT-CADNet by an absolute increase of 9.6% PQ and 10.4% RQ on the FloorPlanCAD dataset. The source code and models will be available at https://github.com/nicehuster/SymPoint.
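The PQ and RQ figures quoted above follow the usual panoptic quality decomposition, PQ = SQ × RQ. The sketch below computes it over sets of primitive IDs, matching a prediction to a ground-truth symbol of the same class when their IoU exceeds 0.5. Counting every primitive equally is a simplifying assumption made here; the benchmark's own evaluation may weight primitives differently, and the function name and data layout are hypothetical.

```python
def panoptic_quality(predictions, ground_truths, iou_threshold=0.5):
    """Return (PQ, SQ, RQ) for lists of (class_label, set_of_primitive_ids).

    Assumes symbols within each list do not share primitives, so a match
    with IoU > 0.5 is unique.
    """
    matched_gt = set()
    iou_sum, tp = 0.0, 0
    for p_label, p_ids in predictions:
        for g_idx, (g_label, g_ids) in enumerate(ground_truths):
            if g_idx in matched_gt or p_label != g_label:
                continue
            union = p_ids | g_ids
            if not union:
                continue
            iou = len(p_ids & g_ids) / len(union)
            if iou > iou_threshold:
                matched_gt.add(g_idx)
                iou_sum += iou
                tp += 1
                break
    fp = len(predictions) - tp    # unmatched predictions
    fn = len(ground_truths) - tp  # unmatched ground-truth symbols
    denom = tp + 0.5 * fp + 0.5 * fn
    rq = tp / denom if denom else 0.0  # recognition quality
    sq = iou_sum / tp if tp else 0.0   # segmentation quality
    return sq * rq, sq, rq


ground_truths = [("door", {1, 2, 3, 4}), ("window", {5, 6})]
predictions = [("door", {1, 2, 3}), ("window", {7, 8})]
pq, sq, rq = panoptic_quality(predictions, ground_truths)
```

In this toy case the door is matched with IoU 0.75 and the window is missed, giving one true positive, one false positive, and one false negative.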

FloorPlanCAD: A Large-Scale CAD Drawing Dataset for Panoptic Symbol Spotting

May 15, 2021

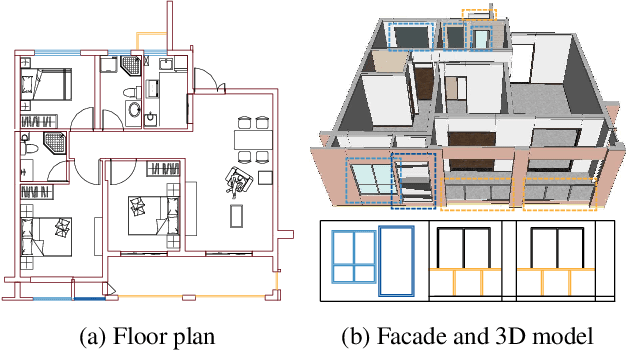

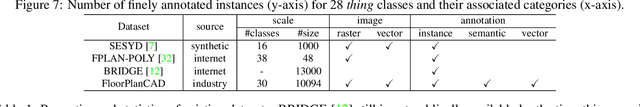

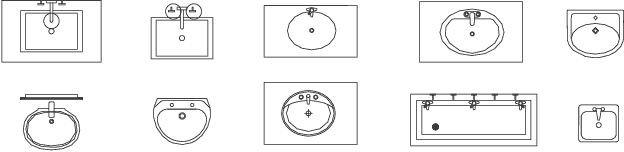

Access to large and diverse computer-aided design (CAD) drawings is critical for developing symbol spotting algorithms. In this paper, we present FloorPlanCAD, a large-scale real-world CAD drawing dataset containing over 10,000 floor plans, ranging from residential to commercial buildings. CAD drawings in the dataset are all represented as vector graphics, which enables us to provide line-grained annotations of 30 object categories. Equipped with such annotations, we introduce the task of panoptic symbol spotting, which requires spotting not only instances of countable things but also the semantics of uncountable stuff. To solve this task, we propose a novel method that combines Graph Convolutional Networks (GCNs) with Convolutional Neural Networks (CNNs), captures both non-Euclidean and Euclidean features, and can be trained end-to-end. The proposed CNN-GCN method achieved state-of-the-art (SOTA) performance on the task of semantic symbol spotting and helped us build a baseline network for the panoptic symbol spotting task. Our contributions are three-fold: 1) to the best of our knowledge, the presented CAD drawing dataset is the first of its kind; 2) the panoptic symbol spotting task considers the spotting of both thing instances and stuff semantics as one recognition problem; and 3) we present a baseline solution to the panoptic symbol spotting task based on a novel CNN-GCN method, which achieved SOTA performance on semantic symbol spotting. We believe that these contributions will boost research in related areas.