Yuhong Guo

Carleton University, DiDi Chuxing

Ensemble Model with Batch Spectral Regularization and Data Blending for Cross-Domain Few-Shot Learning with Unlabeled Data

Jun 09, 2020

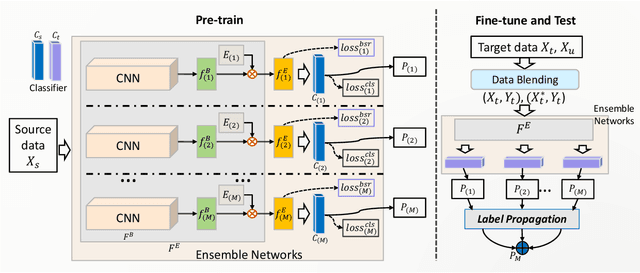

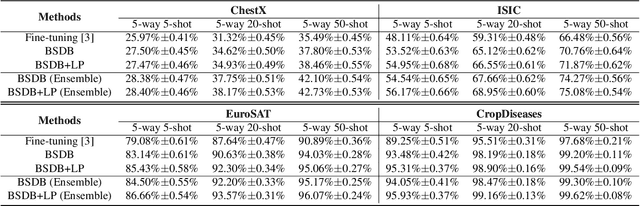

Abstract: In this paper, we present an ensemble model with batch spectral regularization and data blending mechanisms for the Track 2 problem of the cross-domain few-shot learning (CD-FSL) challenge. We build a multi-branch ensemble framework by using diverse feature transformation matrices, while deploying batch spectral feature regularization on each branch to improve the model's transferability. Moreover, we propose a data blending method to exploit the unlabeled data and augment the sparse support set in the target domain. Our proposed model demonstrates effective performance on the CD-FSL benchmark tasks.

A Transductive Multi-Head Model for Cross-Domain Few-Shot Learning

Jun 08, 2020

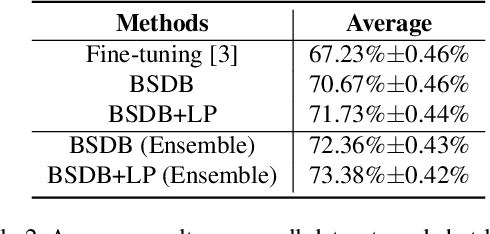

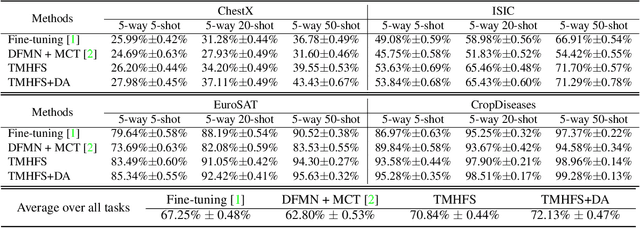

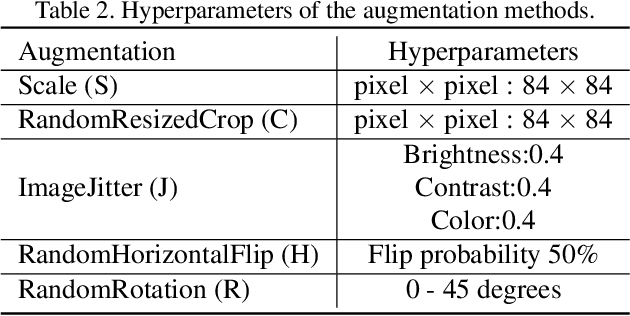

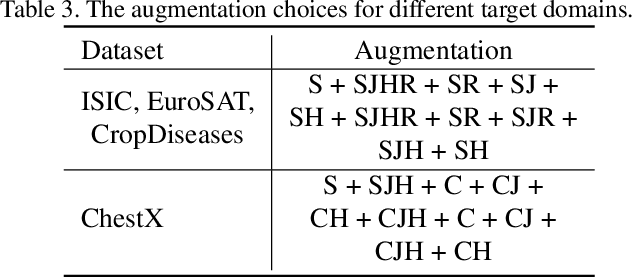

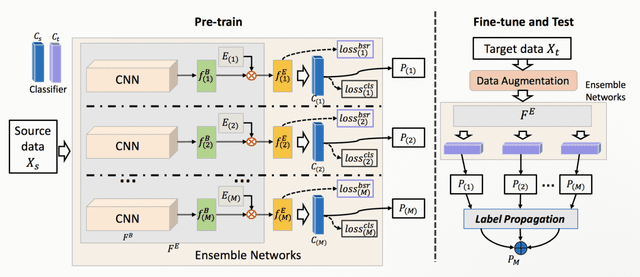

Abstract: In this paper, we present a new method, Transductive Multi-Head Few-Shot learning (TMHFS), to address the Cross-Domain Few-Shot Learning (CD-FSL) challenge. The TMHFS method extends the Meta-Confidence Transduction (MCT) and Dense Feature-Matching Networks (DFMN) method [2] by introducing a new prediction head, i.e., an instance-wise global classification network based on semantic information, after the common feature embedding network. We train the embedding network with the multiple heads, i.e., the MCT loss, the DFMN loss and the semantic classifier loss, simultaneously in the source domain. For few-shot learning in the target domain, we first fine-tune the embedding network with only the semantic global classifier and the support instances, and then use the MCT part to predict labels of the query set with the fine-tuned embedding network. Moreover, we exploit data augmentation techniques during the fine-tuning and test stages to improve the prediction performance. The experimental results demonstrate that the proposed methods greatly outperform the strong baseline, fine-tuning, on four different target domains.

Feature Transformation Ensemble Model with Batch Spectral Regularization for Cross-Domain Few-Shot Classification

May 21, 2020

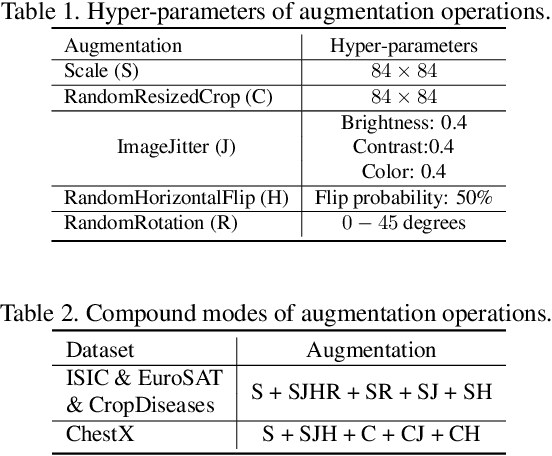

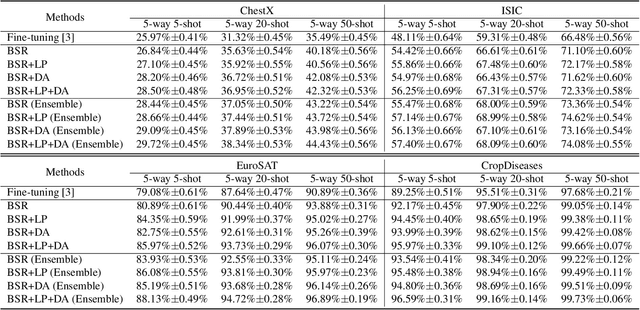

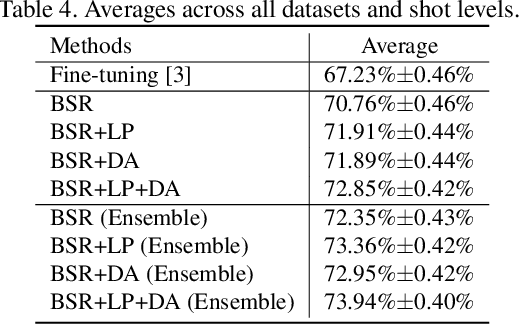

Abstract: In this paper, we propose a feature transformation ensemble model with batch spectral regularization for the cross-domain few-shot learning (CD-FSL) challenge. Specifically, we propose to construct an ensemble prediction model by performing diverse feature transformations after a feature extraction network. On each branch prediction network of the model, we use a batch spectral regularization term to suppress the singular values of the feature matrix during pre-training, which improves the generalization ability of the model. The proposed model can then be fine-tuned in the target domain to address few-shot classification. We further apply label propagation, entropy minimization and data augmentation to mitigate the shortage of labeled data in target domains. Experiments are conducted on a number of CD-FSL benchmark tasks with four target domains, and the results demonstrate the superiority of our proposed model.
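As a rough illustration of a regularizer that suppresses the singular values of a batch feature matrix, the penalty could be written as below. This is a sketch under assumptions the abstract does not state: the symbols $F$ (the $b \times d$ feature matrix of one batch on one branch), $\sigma_i$ (its singular values), $\lambda$ (a trade-off weight), and $\mathcal{L}_{\mathrm{cls}}$ (the classification loss) are illustrative, as is the choice to penalize every squared singular value.

$$
F = U \,\mathrm{diag}(\sigma_1, \dots, \sigma_r)\, V^{\top}, \qquad
\mathcal{L} = \mathcal{L}_{\mathrm{cls}} + \lambda \sum_{i=1}^{r} \sigma_i^{2}
$$

Under this assumed form, $\sum_{i} \sigma_i^{2} = \lVert F \rVert_F^{2}$, so the penalty would reduce to the squared Frobenius norm of the batch features. A variant that penalizes only a subset of the singular values would need an explicit singular value decomposition.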

Multi-Level Generative Models for Partial Label Learning with Non-random Label Noise

May 11, 2020

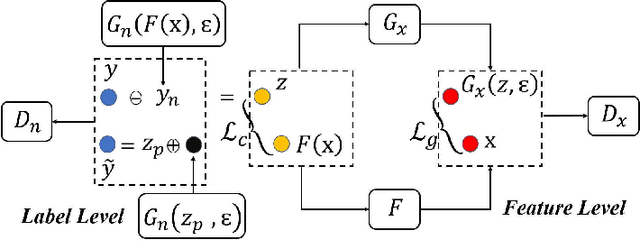

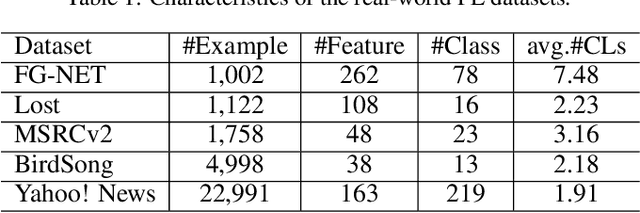

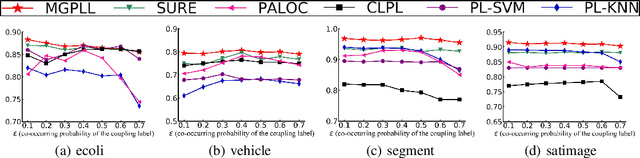

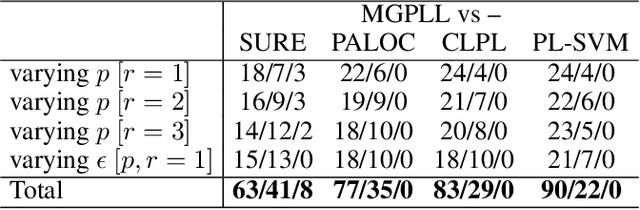

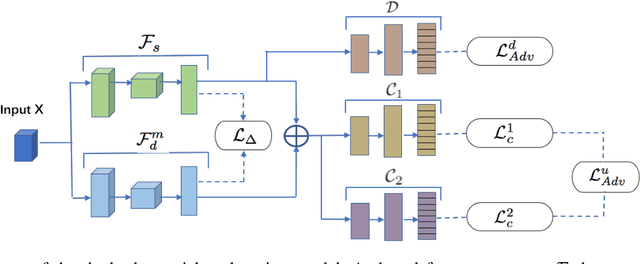

Abstract: Partial label (PL) learning tackles the problem where each training instance is associated with a set of candidate labels that include both the true label and irrelevant noise labels. In this paper, we propose a novel multi-level generative model for partial label learning (MGPLL), which tackles the problem by learning both a label-level adversarial generator and a feature-level adversarial generator under a bi-directional mapping framework between the label vectors and the data samples. Specifically, MGPLL uses a conditional noise label generation network to model the non-random noise labels and perform label denoising, and uses a multi-class predictor to map the training instances to the denoised label vectors, while a conditional data feature generator is used to form an inverse mapping from the denoised label vectors to data samples. Both the noise label generator and the data feature generator are learned in an adversarial manner to match the observed candidate labels and data features respectively. Extensive experiments are conducted on synthesized and real-world partial label datasets. The proposed approach demonstrates state-of-the-art performance for partial label learning.

Unsupervised Domain Adaptation with Progressive Domain Augmentation

Apr 24, 2020

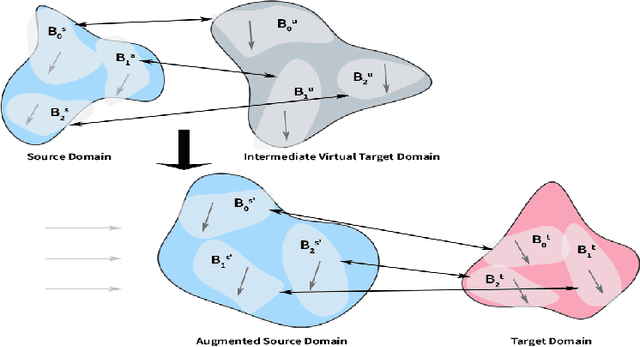

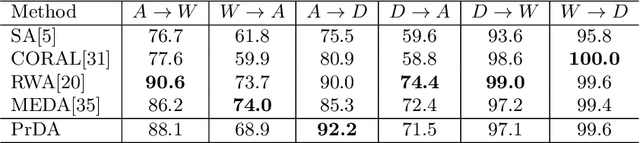

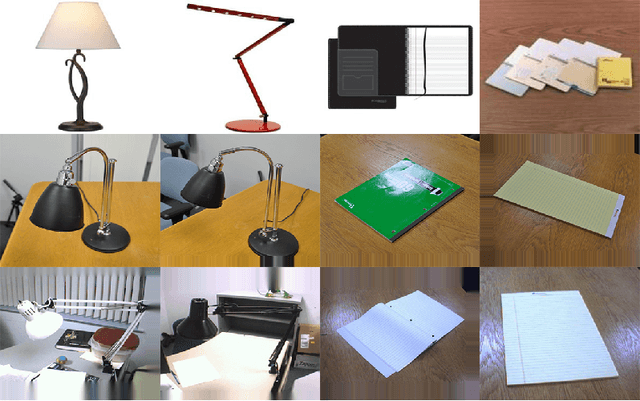

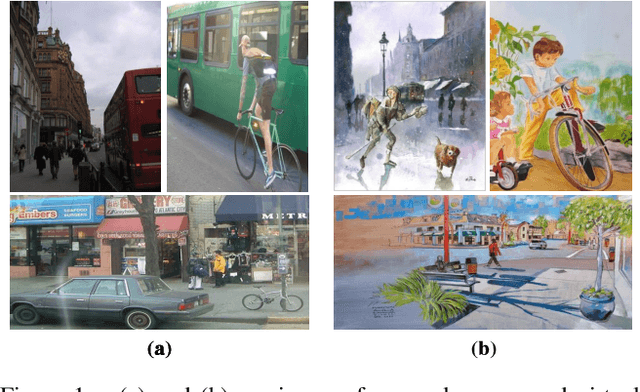

Abstract: Domain adaptation aims to exploit a label-rich source domain for learning classifiers in a different label-scarce target domain. It is particularly challenging when there are significant divergences between the two domains. In this paper, we propose a novel unsupervised domain adaptation method based on progressive domain augmentation. The proposed method generates virtual intermediate domains via domain interpolation, progressively augments the source domain, and bridges the source-target domain divergence by conducting multiple subspace alignment on the Grassmann manifold. We conduct experiments on multiple domain adaptation tasks, and the results show that the proposed method achieves state-of-the-art performance.

Mutual Learning Network for Multi-Source Domain Adaptation

Mar 29, 2020

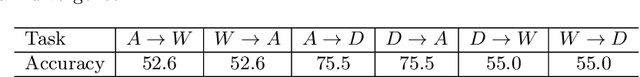

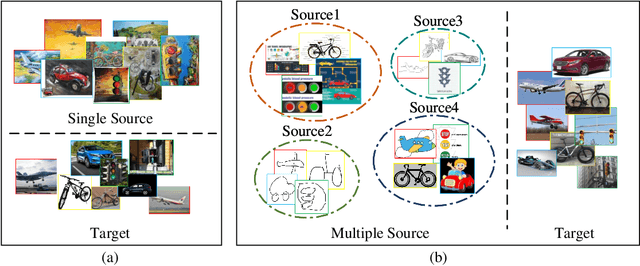

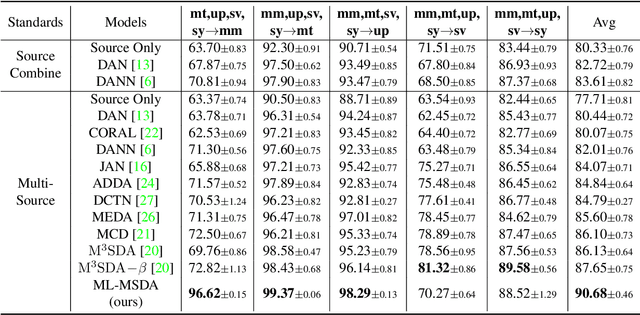

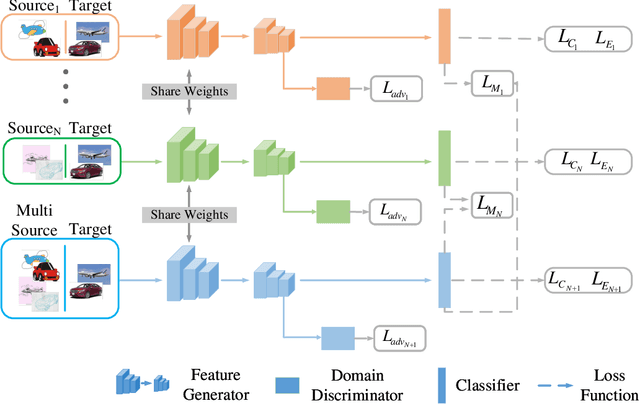

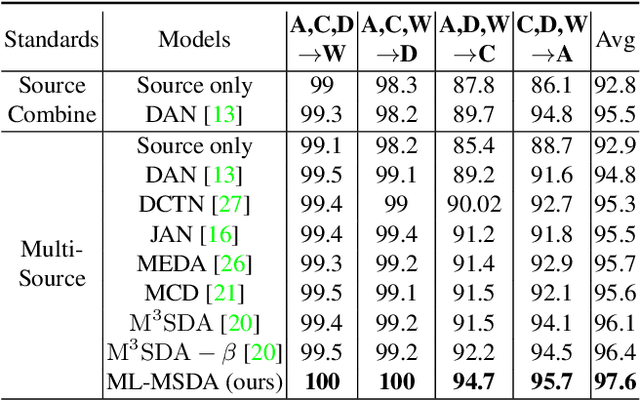

Abstract: Early Unsupervised Domain Adaptation (UDA) methods have mostly assumed the setting of a single source domain, where all the labeled source data come from the same distribution. However, in practice the labeled data can come from multiple source domains with different distributions. In such scenarios, single-source domain adaptation methods can fail due to domain shifts across the different source domains, and multi-source domain adaptation methods need to be designed. In this paper, we propose a novel multi-source domain adaptation method, Mutual Learning Network for Multiple Source Domain Adaptation (ML-MSDA). Under the framework of mutual learning, the proposed method pairs the target domain with each single source domain to train a conditional adversarial domain adaptation network as a branch network, while taking the pair of the combined multi-source domain and target domain to train a conditional adversarial adaptive network as the guidance network. The multiple branch networks are aligned with the guidance network to achieve mutual learning by enforcing JS-divergence regularization over their prediction probability distributions on the corresponding target data. We conduct extensive experiments on multiple multi-source domain adaptation benchmark datasets. The results show that the proposed ML-MSDA method outperforms the comparison methods and achieves state-of-the-art performance.
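The JS-divergence regularization mentioned in the abstract above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: the function names, the averaging over branches, and the representation of each prediction as a list of class probabilities are all assumptions made for the example.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions given as lists of probabilities.

    Terms with p_i == 0 contribute nothing, by the usual convention.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: the average KL of p and q to their mixture m."""
    m = [0.5 * (pi + qi) for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def mutual_learning_penalty(branch_preds, guidance_pred):
    """Hypothetical regularizer: mean JS divergence between each branch
    network's prediction and the guidance network's prediction for one
    target instance. Averaging over branches is an assumption.
    """
    total = sum(js_divergence(b, guidance_pred) for b in branch_preds)
    return total / len(branch_preds)
```

The JS divergence is symmetric and bounded by $\log 2$, which may be one reason to prefer it over a plain KL term when aligning predictions from several networks.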

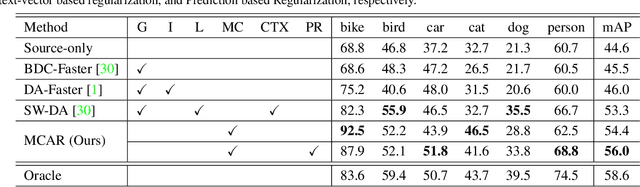

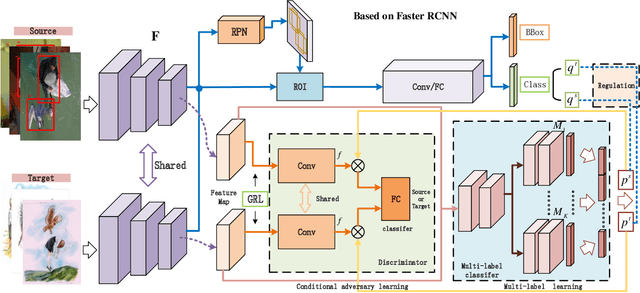

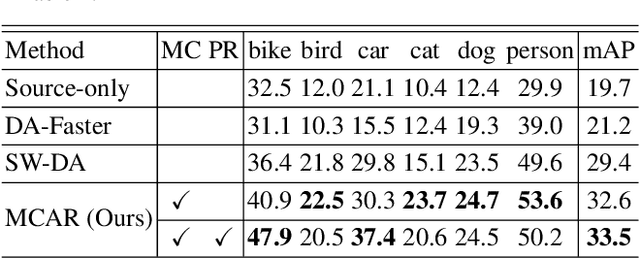

Adaptive Object Detection with Dual Multi-Label Prediction

Mar 29, 2020

Abstract: In this paper, we propose a novel end-to-end unsupervised deep domain adaptation model for adaptive object detection by exploiting multi-label object recognition as a dual auxiliary task. The model exploits multi-label prediction to reveal the object category information in each image and then uses the prediction results to perform conditional adversarial global feature alignment, such that the multi-modal structure of image features can be tackled to bridge the domain divergence at the global feature level while preserving the discriminability of the features. Moreover, we introduce a prediction consistency regularization mechanism to assist object detection, which uses the multi-label prediction results as auxiliary regularization information to ensure consistent object category discoveries between the object recognition task and the object detection task. Experiments are conducted on a few benchmark datasets, and the results show that the proposed model outperforms the state-of-the-art comparison methods.

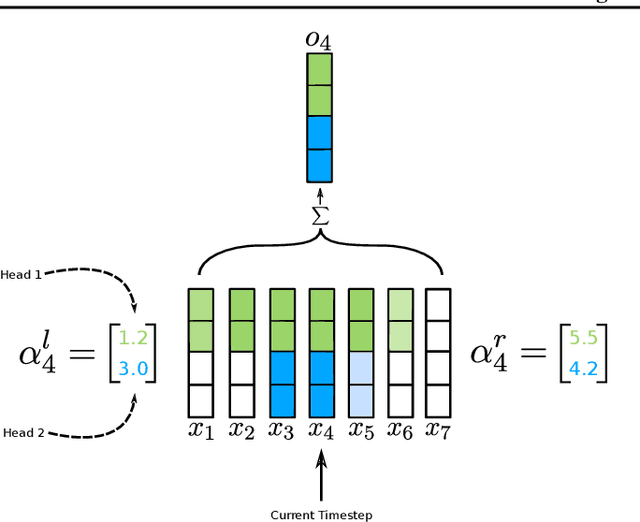

Time-aware Large Kernel Convolutions

Feb 08, 2020

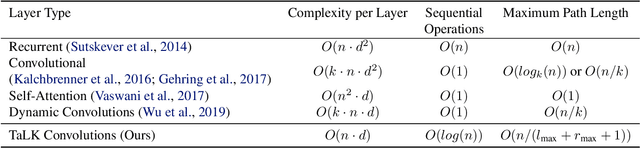

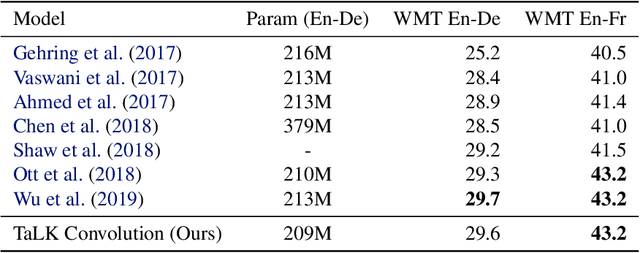

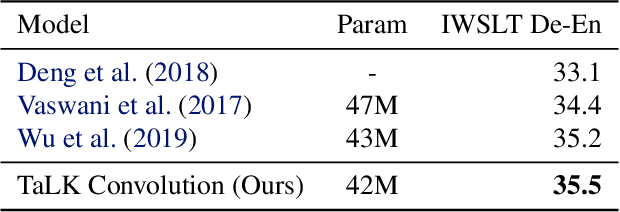

Abstract: To date, most state-of-the-art sequence modelling architectures use attention to build generative models for language-based tasks. Some of these models use all the available sequence tokens to generate an attention distribution, which results in a time complexity of $O(n^2)$. Alternatively, they utilize depthwise convolutions with softmax-normalized kernels of size $k$ acting as a limited-window self-attention, resulting in a time complexity of $O(k{\cdot}n)$. In this paper, we introduce Time-aware Large Kernel (TaLK) Convolutions, a novel adaptive convolution operation that learns to predict the size of a summation kernel instead of using a fixed-size kernel matrix. This method yields a time complexity of $O(n)$, effectively making the sequence encoding process linear in the number of tokens. We evaluate the proposed method on large-scale standard machine translation and language modelling datasets and show that TaLK Convolutions constitute an efficient improvement over other attention/convolution-based approaches.
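To see why a summation kernel with a per-token size can run in $O(n)$, consider the following sketch based on prefix sums. It is an illustration under simplifying assumptions, not the TaLK operation itself: the window offsets `left` and `right` are given here as fixed integers for a one-dimensional sequence, whereas the abstract describes a model that learns to predict the kernel size, and the function name is hypothetical.

```python
def adaptive_window_sum(values, left, right):
    """Sum each position's neighbourhood using one prefix-sum pass.

    values: list of numbers, one per token.
    left, right: per-token integer offsets (assumed inputs), so token i
    sums values[i - left[i] .. i + right[i]], clipped to the sequence.
    Each output costs O(1) regardless of window size, so the total is O(n).
    """
    n = len(values)
    # prefix[j] holds the sum of values[0:j].
    prefix = [0.0] * (n + 1)
    for i, v in enumerate(values):
        prefix[i + 1] = prefix[i] + v

    out = []
    for i in range(n):
        lo = max(0, i - left[i])
        hi = min(n - 1, i + right[i])
        out.append(prefix[hi + 1] - prefix[lo])
    return out


sums = adaptive_window_sum([1, 2, 3, 4], left=[0, 1, 1, 3], right=[0, 1, 0, 0])
print(sums)  # [1.0, 6.0, 5.0, 10.0]
```

A fixed kernel of size $k$ would touch $k$ entries per token, while the difference of two prefix sums touches two, however wide the window is.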

Dual Adversarial Co-Learning for Multi-Domain Text Classification

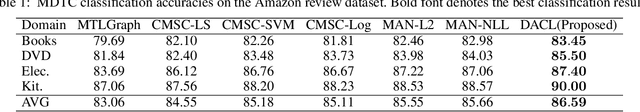

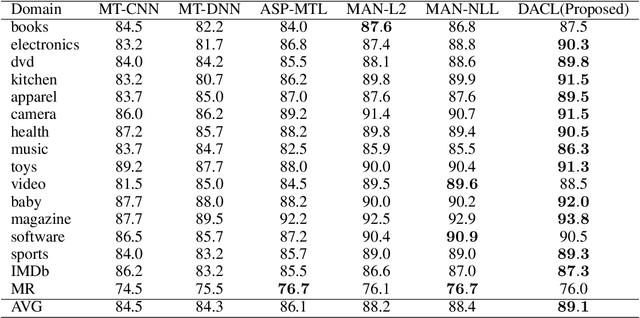

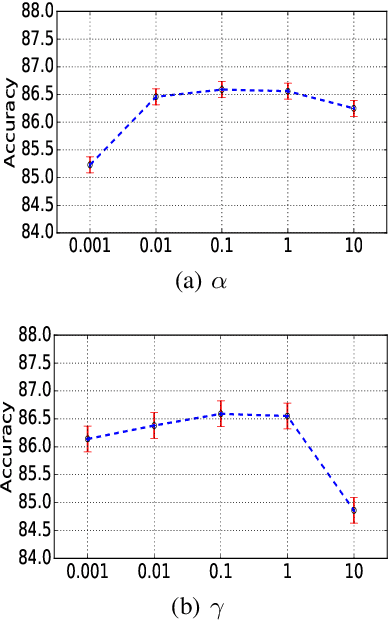

Sep 18, 2019

Abstract: In this paper, we propose a novel dual adversarial co-learning approach for multi-domain text classification (MDTC). The approach learns shared-private networks for feature extraction and deploys dual adversarial regularizations to align features across different domains and between labeled and unlabeled data simultaneously under a discrepancy-based co-learning framework, aiming to improve the classifiers' generalization capacity with the learned features. We conduct experiments on multi-domain sentiment classification datasets. The results show that the proposed approach achieves state-of-the-art MDTC performance.

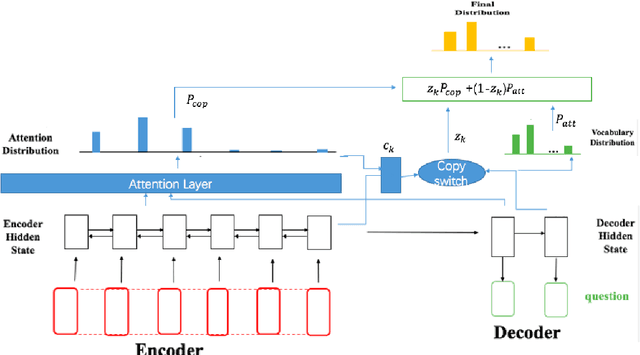

Learning to Generate Questions with Adaptive Copying Neural Networks

Sep 17, 2019

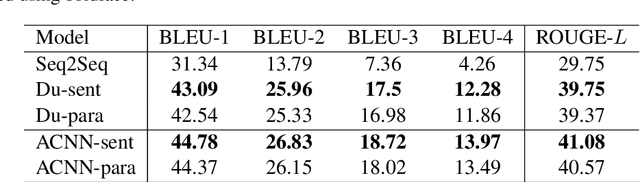

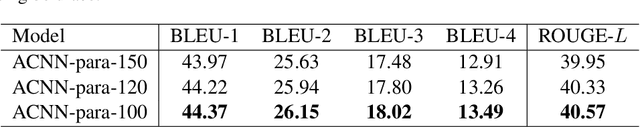

Abstract: Automatic question generation is an important problem in natural language processing. In this paper, we propose a novel adaptive copying recurrent neural network model to tackle the problem of question generation from sentences and paragraphs. The proposed model adds a copying mechanism component onto a bidirectional LSTM architecture to generate more suitable questions adaptively from the input data. Our experimental results show that the proposed model can outperform state-of-the-art question generation methods in terms of BLEU and ROUGE evaluation scores.
