Yizhuo Ma

GraphCogent: Overcoming LLMs' Working Memory Constraints via Multi-Agent Collaboration in Complex Graph Understanding

Aug 17, 2025

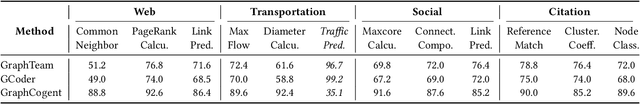

Abstract: Large language models (LLMs) show promising performance on small-scale graph reasoning tasks but fail when handling real-world graphs with complex queries. This phenomenon stems from LLMs' inability to effectively process complex graph topology and perform multi-step reasoning simultaneously. To address these limitations, we propose GraphCogent, a collaborative agent framework inspired by the human Working Memory Model that decomposes graph reasoning into specialized cognitive processes: sense, buffer, and execute. The framework consists of three modules: the Sensory Module standardizes diverse graph text representations via subgraph sampling, the Buffer Module integrates and indexes graph data across multiple formats, and the Execution Module combines tool calling and model generation for efficient reasoning. We also introduce Graph4real, a comprehensive benchmark spanning four domains of real-world graphs (Web, Social, Transportation, and Citation) to evaluate LLMs' graph reasoning capabilities. Graph4real covers 21 different graph reasoning tasks, categorized into three types (Structural Querying, Algorithmic Reasoning, and Predictive Modeling), with graph scales that are 10 times larger than those of existing benchmarks. Experiments show that Llama3.1-8B based GraphCogent achieves a 50% improvement over massive-scale LLMs like DeepSeek-R1 (671B). Compared to the state-of-the-art agent-based baseline, our framework improves accuracy by 20% while reducing token usage by 80% for in-toolset tasks and 30% for out-toolset tasks. Code will be available after review.

EmoAttack: Emotion-to-Image Diffusion Models for Emotional Backdoor Generation

Jun 22, 2024

Abstract: Text-to-image diffusion models can create realistic images based on input texts. Users can describe an object to convey their opinions visually. In this work, we unveil a previously unrecognized, latent risk of using diffusion models to generate images: emotion in the input texts can be exploited to introduce negative content, potentially eliciting unfavorable emotions in users. Emotions play a crucial role in expressing personal opinions in our daily interactions, and the inclusion of maliciously negative content can lead users astray, exacerbating negative emotions. Specifically, we identify the emotion-aware backdoor attack (EmoAttack), which can incorporate malicious negative content triggered by emotional texts during image generation. We formulate such an attack as a diffusion personalization problem to avoid extensive model retraining and propose EmoBooth. Unlike existing personalization methods, our approach fine-tunes a pre-trained diffusion model by establishing a mapping between a cluster of emotional words and a given reference image containing malicious negative content. To validate our method, we built a dataset and conducted extensive analysis and discussion of its effectiveness. Given consumers' widespread use of diffusion models, uncovering this threat is critical for society.
