Yijie Zhong

Scene-Aware Memory Discrimination: Deciding Which Personal Knowledge Stays

Feb 12, 2026

Abstract:Intelligent devices have become deeply integrated into everyday life, generating vast amounts of user interactions that form valuable personal knowledge. Efficient organization of this knowledge in user memory is essential for enabling personalized applications. However, current research on memory writing, management, and reading using large language models (LLMs) faces challenges in filtering irrelevant information and in dealing with rising computational costs. Inspired by the concept of selective attention in the human brain, we introduce a memory discrimination task. To address large-scale interactions and diverse memory standards in this task, we propose a Scene-Aware Memory Discrimination method (SAMD), which comprises two key components: the Gating Unit Module (GUM) and the Cluster Prompting Module (CPM). GUM enhances processing efficiency by filtering out non-memorable interactions and focusing on the salient content most relevant to application demands. CPM establishes adaptive memory standards, guiding LLMs to discern what information should be remembered or discarded. It also analyzes the relationship between user intents and memory contexts to build effective clustering prompts. Comprehensive direct and indirect evaluations demonstrate the effectiveness and generalization of our approach. We independently assess the performance of memory discrimination, showing that SAMD successfully recalls the majority of memorable data and remains robust in dynamic scenarios. Furthermore, when integrated into personalized applications, SAMD significantly enhances both the efficiency and quality of memory construction, leading to better organization of personal knowledge.
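
The gate-then-discriminate idea in the abstract above can be illustrated with a toy sketch. This is not the authors' GUM or CPM: the keyword list, the function names `cheap_gate` and `discriminate`, and the stub judge are all hypothetical stand-ins, chosen only to show how a cheap filter can keep an expensive judge (an LLM in the paper's setting) from seeing non-memorable interactions.

```python
from typing import Callable, Iterable, List

# Hypothetical keyword hints standing in for a learned gate; not from the paper.
SALIENT_HINTS = ("my ", "i am", "i'm", "remember", "birthday", "allergic")


def cheap_gate(utterance: str) -> bool:
    """Return True when the utterance might hold personal knowledge."""
    text = utterance.lower()
    return any(hint in text for hint in SALIENT_HINTS)


def discriminate(
    utterances: Iterable[str],
    expensive_judge: Callable[[str], bool],
) -> List[str]:
    """Keep utterances that pass the cheap gate and then the expensive judge."""
    kept = []
    for utterance in utterances:
        if not cheap_gate(utterance):
            continue  # filtered early, so the judge is never called
        if expensive_judge(utterance):
            kept.append(utterance)
    return kept


calls: List[str] = []


def stub_judge(utterance: str) -> bool:
    """Stand-in for an LLM call; records every utterance it is asked about."""
    calls.append(utterance)
    return "allergic" in utterance.lower()


log = [
    "What's the weather today?",
    "I'm allergic to peanuts.",
    "Set a timer for ten minutes.",
    "Remember to play jazz later.",
]
memories = discriminate(log, stub_judge)
print(memories, len(calls))
```

In this toy run the judge is consulted for only two of the four utterances, which is the efficiency argument in miniature; a real gate would presumably be learned rather than keyword-based.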

Synergizing RAG and Reasoning: A Systematic Review

Apr 22, 2025

Abstract:Recent breakthroughs in large language models (LLMs), particularly in reasoning capabilities, have propelled Retrieval-Augmented Generation (RAG) to unprecedented levels. By synergizing retrieval mechanisms with advanced reasoning, LLMs can now tackle increasingly complex problems. This paper presents a systematic review of the collaborative interplay between RAG and reasoning, clearly defining "reasoning" within the RAG context. We construct a comprehensive taxonomy encompassing multi-dimensional collaborative objectives, representative paradigms, and technical implementations, and analyze the bidirectional synergy methods. Additionally, we critically evaluate current limitations in RAG assessment, including the absence of intermediate supervision for multi-step reasoning and practical challenges related to cost-risk trade-offs. To bridge theory and practice, we provide practical guidelines tailored to diverse real-world applications. Finally, we identify promising research directions, such as graph-based knowledge integration, hybrid model collaboration, and RL-driven optimization. Overall, this work presents a theoretical framework and practical foundation to advance RAG systems in academia and industry, fostering the next generation of RAG solutions.

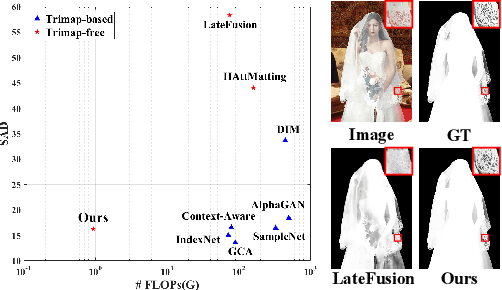

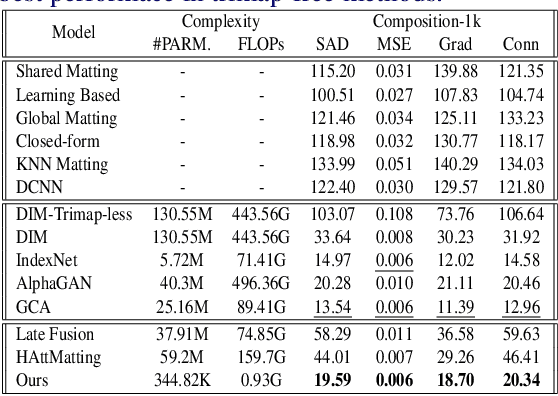

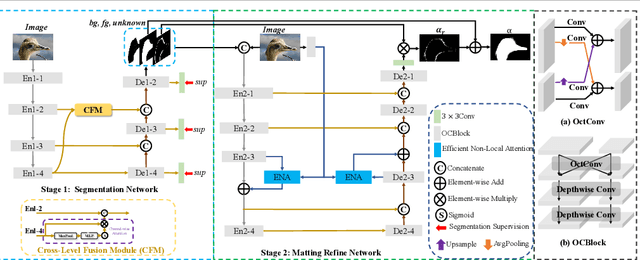

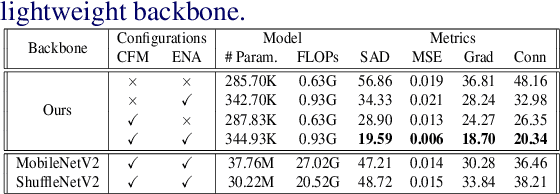

Highly Efficient Natural Image Matting

Oct 25, 2021

Abstract:Over the last few years, deep learning based approaches have achieved outstanding improvements in natural image matting. However, there are still two drawbacks that impede the widespread application of image matting: the reliance on user-provided trimaps and the heavy model sizes. In this paper, we propose a trimap-free natural image matting method with a lightweight model. With a lightweight basic convolution block, we build a two-stage framework: the Segmentation Network (SN) is designed to capture sufficient semantics and classify the pixels into unknown, foreground and background regions; the Matting Refine Network (MRN) aims at capturing detailed texture information and regressing accurate alpha values. With the proposed Cross-level Fusion Module (CFM), SN can efficiently utilize multi-scale features with less computational cost. The Efficient Non-local Attention module (ENA) in MRN can efficiently model the relevance between different pixels and help regress high-quality alpha values. Utilizing these techniques, we construct an extremely lightweight model, which achieves performance comparable to large models with only ~1% of their parameters (344k) on popular natural image matting benchmarks.
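
For context on the "alpha values" mentioned above: the abstract does not state it, but natural image matting is conventionally posed through the standard compositing equation, in which each observed pixel is a blend of an unknown foreground and background colour. The symbols below are the usual textbook ones, not notation taken from the paper.

```latex
% Standard compositing model for pixel i (background knowledge, not from the abstract):
%   I_i      observed colour
%   F_i, B_i foreground and background colours
%   alpha_i  opacity to be estimated
I_i = \alpha_i F_i + (1 - \alpha_i)\, B_i, \qquad \alpha_i \in [0, 1]
```

Under this model, pixels classified as foreground or background correspond to $\alpha_i = 1$ or $\alpha_i = 0$, so only the unknown region needs a regressed fractional value.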
