Yaxin Wang

AMSbench: A Comprehensive Benchmark for Evaluating MLLM Capabilities in AMS Circuits

May 30, 2025Abstract:Analog/Mixed-Signal (AMS) circuits play a critical role in the integrated circuit (IC) industry. However, automating Analog/Mixed-Signal (AMS) circuit design has remained a longstanding challenge due to its difficulty and complexity. Recent advances in Multi-modal Large Language Models (MLLMs) offer promising potential for supporting AMS circuit analysis and design. However, current research typically evaluates MLLMs on isolated tasks within the domain, lacking a comprehensive benchmark that systematically assesses model capabilities across diverse AMS-related challenges. To address this gap, we introduce AMSbench, a benchmark suite designed to evaluate MLLM performance across critical tasks including circuit schematic perception, circuit analysis, and circuit design. AMSbench comprises approximately 8000 test questions spanning multiple difficulty levels and assesses eight prominent models, encompassing both open-source and proprietary solutions such as Qwen 2.5-VL and Gemini 2.5 Pro. Our evaluation highlights significant limitations in current MLLMs, particularly in complex multi-modal reasoning and sophisticated circuit design tasks. These results underscore the necessity of advancing MLLMs' understanding and effective application of circuit-specific knowledge, thereby narrowing the existing performance gap relative to human expertise and moving toward fully automated AMS circuit design workflows. Our data is released at https://huggingface.co/datasets/wwhhyy/AMSBench

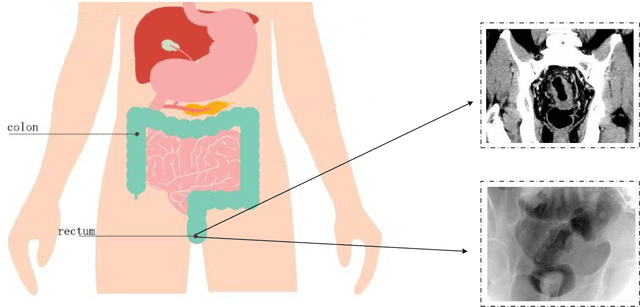

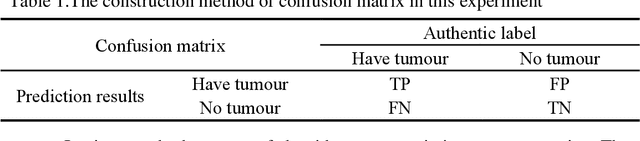

Attention Augmented ConvNeXt UNet For Rectal Tumour Segmentation

Oct 01, 2022

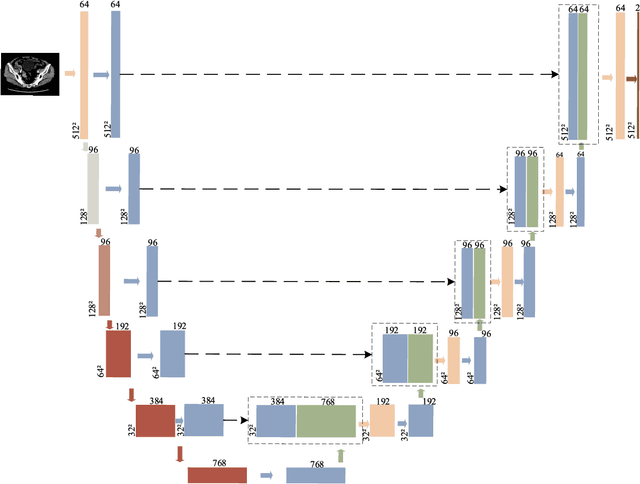

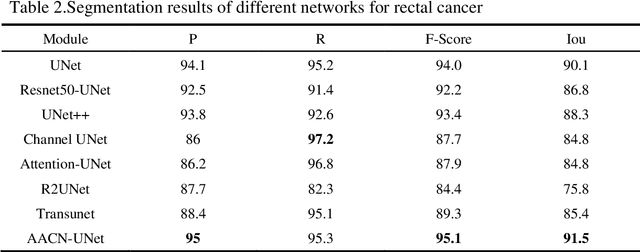

Abstract:It is a challenge to segment the location and size of rectal cancer tumours through deep learning. In this paper, in order to improve the ability of extracting suffi-cient feature information in rectal tumour segmentation, attention enlarged ConvNeXt UNet (AACN-UNet), is proposed. The network mainly includes two improvements: 1) the encoder stage of UNet is changed to ConvNeXt structure for encoding operation, which can not only integrate multi-scale semantic information on a large scale, but al-so reduce information loss and extract more feature information from CT images; 2) CBAM attention mechanism is added to improve the connection of each feature in channel and space, which is conducive to extracting the effective feature of the target and improving the segmentation accuracy.The experiment with UNet and its variant network shows that AACN-UNet is 0.9% ,1.1% and 1.4% higher than the current best results in P, F1 and Miou.Compared with the training time, the number of parameters in UNet network is less. This shows that our proposed AACN-UNet has achieved ex-cellent results in CT image segmentation of rectal cancer.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge