Yaolong Wang

Infinite-Instruct: Synthesizing Scaling Code instruction Data with Bidirectional Synthesis and Static Verification

May 29, 2025

Abstract: Traditional code instruction data synthesis methods suffer from limited diversity and poor logic. We introduce Infinite-Instruct, an automated framework for synthesizing high-quality question-answer pairs, designed to enhance the code generation capabilities of large language models (LLMs). The framework focuses on improving the internal logic of synthesized problems and the quality of synthesized code. First, "Reverse Construction" transforms code snippets into diverse programming problems. Then, through "Backfeeding Construction," keywords in programming problems are structured into a knowledge graph and used to reconstruct them into programming problems with stronger internal logic. Finally, a cross-lingual static code analysis pipeline filters invalid samples to ensure data quality. Experiments show that on mainstream code generation benchmarks, our fine-tuned models achieve an average performance improvement of 21.70% on 7B-parameter models and 36.95% on 32B-parameter models. Using less than one-tenth of the instruction fine-tuning data, we achieve performance comparable to Qwen-2.5-Coder-Instruct. Infinite-Instruct provides a scalable solution for LLM training in programming. We open-source the datasets used in the experiments, including both the unfiltered versions and the versions filtered via static analysis. The data are available at https://github.com/xingwenjing417/Infinite-Instruct-dataset
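The static-verification step described in the abstract can be illustrated with a minimal sketch. This is not the authors' pipeline: it assumes Python-only samples, uses the standard-library `ast` parser as a stand-in for a cross-lingual static analyzer, and the `question`/`answer` field names and helper names are hypothetical.

```python
import ast


def is_valid_python(code: str) -> bool:
    """Return True if `code` parses as Python source (syntax check only)."""
    try:
        ast.parse(code)
    except (SyntaxError, ValueError):
        return False
    return True


def filter_samples(samples):
    """Keep only the samples whose answer code passes the syntax check.

    The dict layout (`question`, `answer`) is an assumption made for this sketch.
    """
    return [s for s in samples if is_valid_python(s["answer"])]


samples = [
    {"question": "Add two numbers.",
     "answer": "def add(a, b):\n    return a + b\n"},
    {"question": "Broken sample.",
     "answer": "def add(a, b:\n    return a + b\n"},  # unbalanced parenthesis
]
kept = filter_samples(samples)
```

A syntax check is only the weakest form of static verification; a fuller pipeline would presumably add per-language linters or type checkers.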

Modeling Lost Information in Lossy Image Compression

Jul 08, 2020

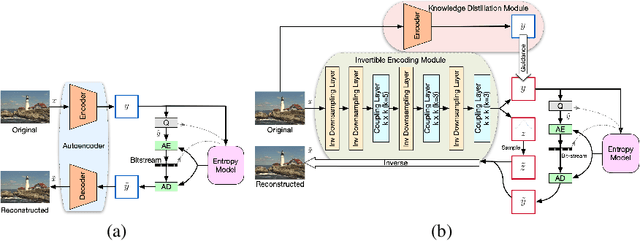

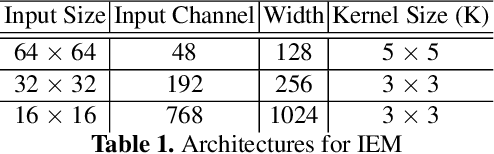

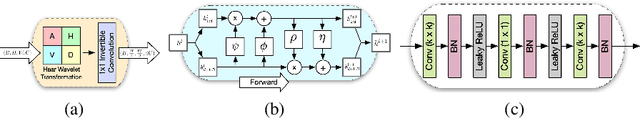

Abstract: Lossy image compression is one of the most commonly used operators for digital images. Most recently proposed deep-learning-based image compression methods leverage the auto-encoder structure and have achieved promising results in this field. The images are first encoded into low-dimensional latent features, which are then entropy coded by exploiting their statistical redundancy. However, information loss during encoding is inevitable, which makes it challenging for the decoder to reconstruct the original images. In this work, we propose a novel invertible framework called Invertible Lossy Compression (ILC) to largely mitigate the information loss problem. Specifically, ILC introduces an invertible encoding module that replaces the encoder-decoder structure to produce a low-dimensional, informative latent representation, while transforming the lost information into an auxiliary latent variable that is not further coded or stored. The latent representation is quantized and encoded into a bit-stream, and the latent variable is forced to follow a specified distribution, i.e., an isotropic Gaussian distribution. In this way, recovering the original image is made tractable by drawing a surrogate latent variable and applying the inverse pass of the module to the sampled variable and the decoded latent features. Experimental results demonstrate that, with this new component replacing the auto-encoder in image compression methods, ILC combined with existing compression algorithms significantly outperforms the baseline method on extensive benchmark datasets.

Invertible Image Rescaling

May 12, 2020

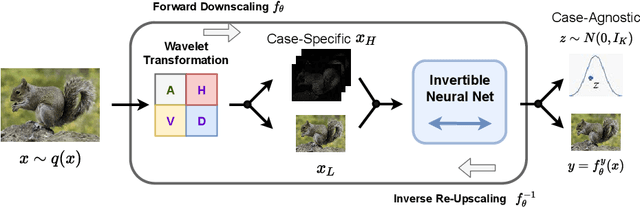

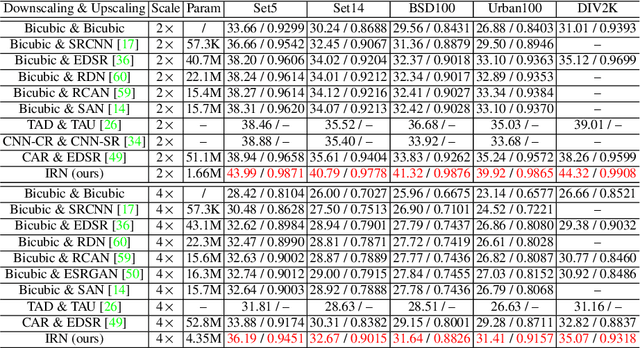

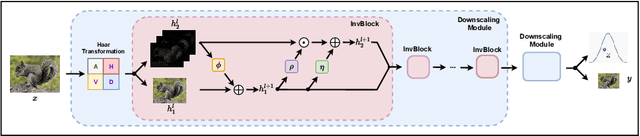

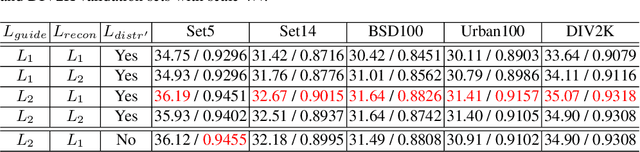

Abstract: High-resolution digital images are usually downscaled to fit various display screens or to save the cost of storage and bandwidth, while post-upscaling is adopted to recover the original resolution or the details in zoomed-in images. However, typical image downscaling is a non-injective mapping due to the loss of high-frequency information, which makes the inverse upscaling procedure ill-posed and poses great challenges for recovering details from the downscaled low-resolution images. Simply upscaling with image super-resolution methods results in unsatisfactory recovery performance. In this work, we propose to solve this problem by modeling the downscaling and upscaling processes from a new perspective, i.e., as an invertible bijective transformation, which can largely mitigate the ill-posed nature of image upscaling. We develop an Invertible Rescaling Net (IRN) with a deliberately designed framework and objectives to produce visually pleasing low-resolution images while capturing the distribution of the information lost in the downscaling process using a latent variable that follows a specified distribution. In this way, upscaling is made tractable by inversely passing a randomly drawn latent variable together with the low-resolution image through the network. Experimental results demonstrate the significant improvement of our model over existing methods in terms of both quantitative and qualitative evaluations of image upscaling reconstruction from downscaled images.
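The idea of treating downscaling as a bijection can be sketched with a fixed Haar-style transform in NumPy. This is only an illustration under stated assumptions, not the IRN architecture: the transform here is hand-written rather than learned, and the function names `haar_forward` and `haar_inverse` are hypothetical. The forward pass splits an image into a half-size low-frequency image plus three detail bands, and the inverse pass accepts either the true details or a randomly drawn latent variable in their place.

```python
import numpy as np


def haar_forward(x):
    """Split an (H, W) image (even H, W) into a half-size low-frequency
    image and three half-size detail bands. The mapping is a bijection."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    low = (a + b + c + d) / 4.0
    details = np.stack([
        (a - b + c - d) / 4.0,  # horizontal differences
        (a + b - c - d) / 4.0,  # vertical differences
        (a - b - c + d) / 4.0,  # diagonal differences
    ])
    return low, details


def haar_inverse(low, details):
    """Exact inverse of haar_forward."""
    h, v, g = details
    x = np.empty((low.shape[0] * 2, low.shape[1] * 2), dtype=low.dtype)
    x[0::2, 0::2] = low + h + v + g
    x[0::2, 1::2] = low - h + v - g
    x[1::2, 0::2] = low + h - v - g
    x[1::2, 1::2] = low - h - v + g
    return x


rng = np.random.default_rng(0)
x = rng.random((4, 4))

# With the true details, the inverse pass recovers the image exactly.
low, details = haar_forward(x)
x_rec = haar_inverse(low, details)

# Upscaling without stored details: draw a latent z in their place.
z = rng.standard_normal(details.shape)
x_up = haar_inverse(low, z)
```

Because the transform is fixed, the sampled `x_up` is not a good reconstruction; in the abstract's setting it is the training objectives that make the lost information follow the specified distribution. The sketch does show one structural property of the bijection: downscaling `x_up` again returns the same low-resolution image.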
