Yangfan Zhou

SwipeGen: Bridging the Execution Gap in GUI Agents via Human-like Swipe Synthesis

Jan 26, 2026

Abstract:With the widespread adoption of Graphical User Interface (GUI) agents for automating GUI interaction tasks, substantial research has focused on improving GUI perception to ground task instructions into concrete action steps. However, the step execution capability of these agents has gradually emerged as a new bottleneck for task completion. In particular, existing GUI agents often adopt overly simplified strategies for handling swipe interactions, preventing them from accurately replicating human-like behavior. To address this limitation, we decompose human swipe gestures into multiple quantifiable dimensions and propose an automated pipeline, SwipeGen, to synthesize human-like swipe interactions through GUI exploration. Based on this pipeline, we construct and release the first benchmark for evaluating the swipe execution capability of GUI agents. Furthermore, leveraging the synthesized data, we propose GUISwiper, a GUI agent with enhanced interaction execution capabilities. Experimental results demonstrate that GUISwiper achieves a swipe execution accuracy of 69.07%, representing a 214% improvement over existing VLM baselines.

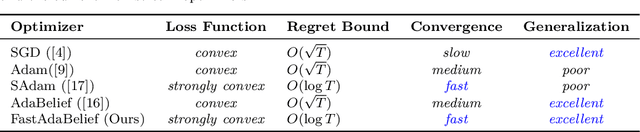

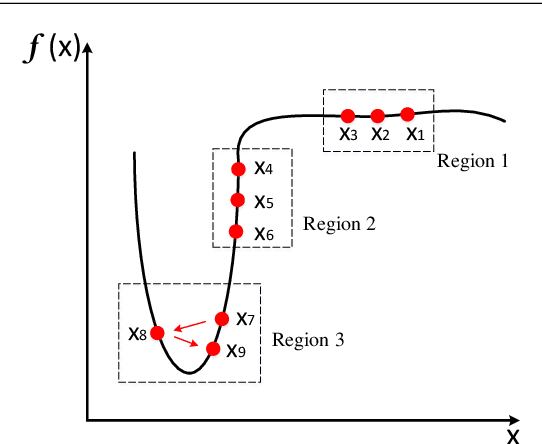

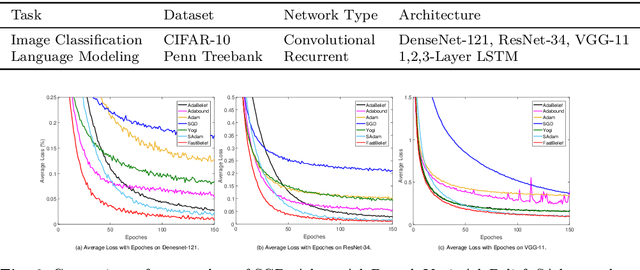

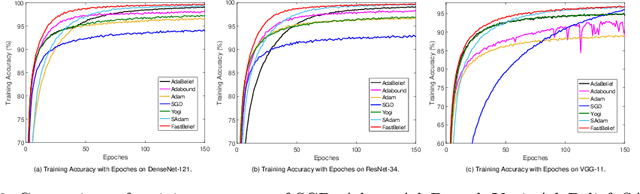

FastAdaBelief: Improving Convergence Rate for Belief-based Adaptive Optimizer by Strong Convexity

Apr 28, 2021

Abstract:The AdaBelief algorithm demonstrates superior generalization ability to the Adam algorithm by viewing the exponential moving average of observed gradients. AdaBelief is proved to have a data-dependent $O(\sqrt{T})$ regret bound when objective functions are convex, where $T$ is a time horizon. However, it remains an open problem how to exploit strong convexity to further improve the convergence rate of AdaBelief. To tackle this problem, we present a novel optimization algorithm under strong convexity, called FastAdaBelief. We prove that FastAdaBelief attains a data-dependent $O(\log T)$ regret bound, which is substantially lower than that of AdaBelief. In addition, the theoretical analysis is validated by extensive experiments performed on open datasets (i.e., CIFAR-10 and Penn Treebank) for image classification and language modeling.

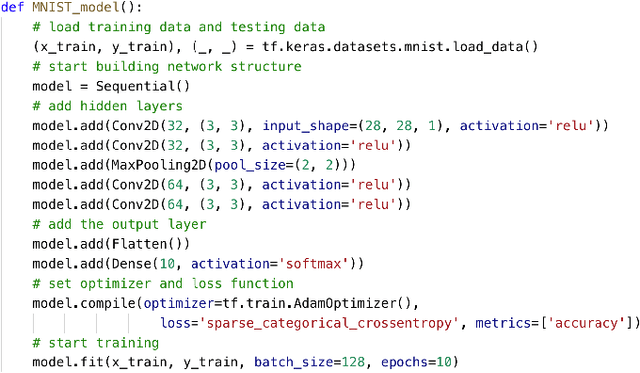

Detecting Deep Neural Network Defects with Data Flow Analysis

Sep 30, 2019

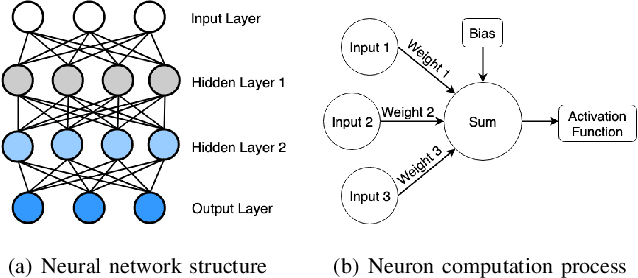

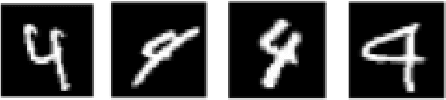

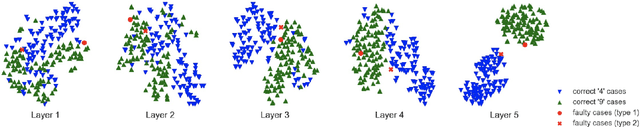

Abstract:Deep neural networks (DNNs) are shown to be promising solutions in many challenging artificial intelligence tasks. However, it is very hard to figure out whether the low precision of a DNN model is an inevitable result, or caused by defects. This paper aims at addressing this challenging problem. We find that the internal data flow footprints of a DNN model can provide insights to locate the root cause effectively. We develop DeepMorph (DNN Tomography) to analyze the root cause, which can guide a DNN developer to improve the model.

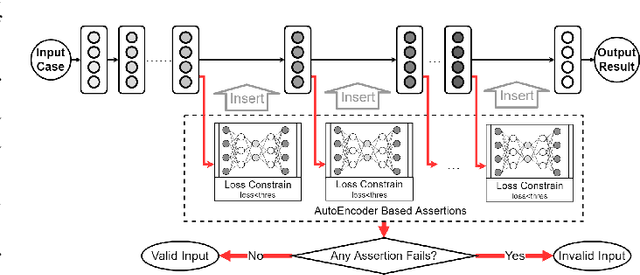

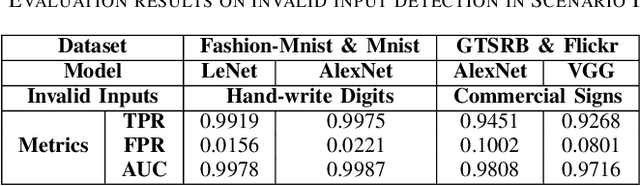

Data Sanity Check for Deep Learning Systems via Learnt Assertions

Sep 28, 2019

Abstract:Reliability is a critical consideration for DL-based systems. But the statistical nature of DL makes it quite vulnerable to invalid inputs, i.e., those cases that are not considered in the training phase of a DL model. This paper proposes to perform a data sanity check to identify invalid inputs, so as to enhance the reliability of DL-based systems. We design and implement a tool to detect behavior deviation of a DL model when processing an input case. This tool extracts the data flow footprints and conducts an assertion-based validation mechanism. The assertions are built automatically and are specifically tailored for DL model data flow analysis. Our experiments conducted with real-world scenarios demonstrate that such an assertion-based data sanity check mechanism is effective in identifying invalid input cases.
