Ya-Chuan Hsu

Timing the Message: Language-Based Notifications for Time-Critical Assistive Settings

Sep 09, 2025

Abstract:In time-critical settings such as assistive driving, assistants often rely on alerts or haptic signals to prompt rapid human attention, but these cues usually leave humans to interpret situations and decide responses independently, introducing potential delays or ambiguity in meaning. Language-based assistive systems can instead provide instructions backed by context, offering more informative guidance. However, current approaches (e.g., social assistive robots) largely prioritize content generation while overlooking critical timing factors such as verbal conveyance duration, human comprehension delays, and subsequent follow-through duration. These timing considerations are crucial in time-critical settings, where even minor delays can substantially affect outcomes. We aim to study this inherent trade-off between timeliness and informativeness by framing the challenge as a sequential decision-making problem using an augmented-state Markov Decision Process. We design a framework combining reinforcement learning and a generated offline taxonomy dataset, where we balance the trade-off while enabling a scalable taxonomy dataset generation pipeline. Empirical evaluation with synthetic humans shows our framework improves success rates by over 40% compared to methods that ignore time delays, while effectively balancing timeliness and informativeness. It also exposes an often-overlooked trade-off between these two factors, opening new directions for optimizing communication in time-critical human-AI assistance.

Integrating Field of View in Human-Aware Collaborative Planning

May 20, 2025

Abstract:In human-robot collaboration (HRC), it is crucial for robot agents to consider humans' knowledge of their surroundings. In reality, humans possess a narrow field of view (FOV), limiting their perception. However, research on HRC often overlooks this aspect and presumes an omniscient human collaborator. Our study addresses the challenge of adapting to the evolving subtask intent of humans while accounting for their limited FOV. We integrate FOV within the human-aware probabilistic planning framework. To account for large state spaces due to considering FOV, we propose a hierarchical online planner that efficiently finds approximate solutions while enabling the robot to explore low-level action trajectories that enter the human FOV, influencing their intended subtask. Through a user study with our adapted cooking domain, we demonstrate that our FOV-aware planner reduces the human's interruptions and redundant actions during collaboration by adapting to human perception limitations. We extend these findings to a virtual reality kitchen environment, where we observe similar collaborative behaviors.

Surrogate Assisted Generation of Human-Robot Interaction Scenarios

May 11, 2023

Abstract:As human-robot interaction (HRI) systems advance, so does the difficulty of evaluating and understanding the strengths and limitations of these systems in different environments and with different users. To this end, previous methods have algorithmically generated diverse scenarios that reveal system failures in a shared control teleoperation task. However, these methods require directly evaluating generated scenarios by simulating robot policies and human actions. The computational cost of these evaluations limits their applicability in more complex domains. Thus, we propose augmenting scenario generation systems with surrogate models that predict both human and robot behaviors. In the shared control teleoperation domain and a more complex shared workspace collaboration task, we show that surrogate assisted scenario generation efficiently synthesizes diverse datasets of challenging scenarios. We demonstrate that these failures are reproducible in real-world interactions.

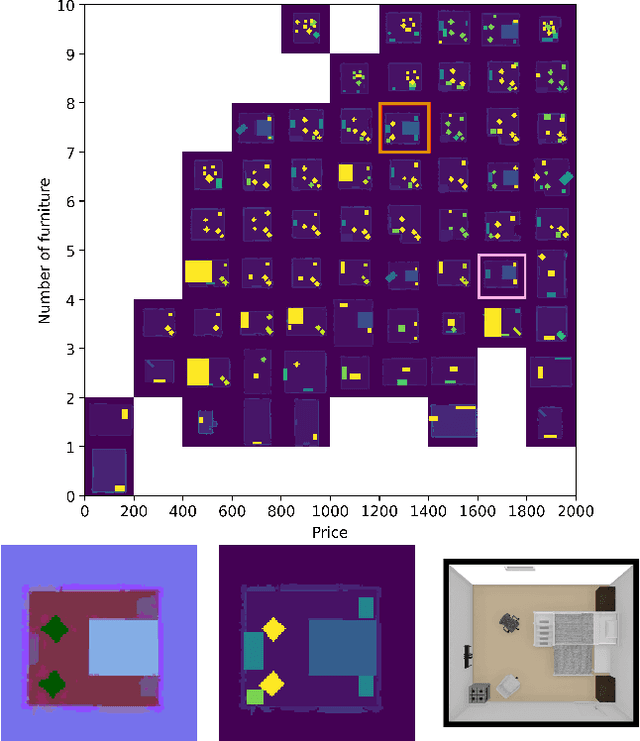

Generating Diverse Indoor Furniture Arrangements

Jun 20, 2022

Abstract:We present a method for generating arrangements of indoor furniture from human-designed furniture layout data. Our method creates arrangements that target specified diversity, such as the total price of all furniture in the room and the number of pieces placed. To generate realistic furniture arrangements, we train a generative adversarial network (GAN) on human-designed layouts. To target specific diversity in the arrangements, we optimize the latent space of the GAN via a quality diversity algorithm to generate a diverse arrangement collection. Experiments show our approach discovers a set of arrangements that are similar to human-designed layouts but vary in price and number of furniture pieces.

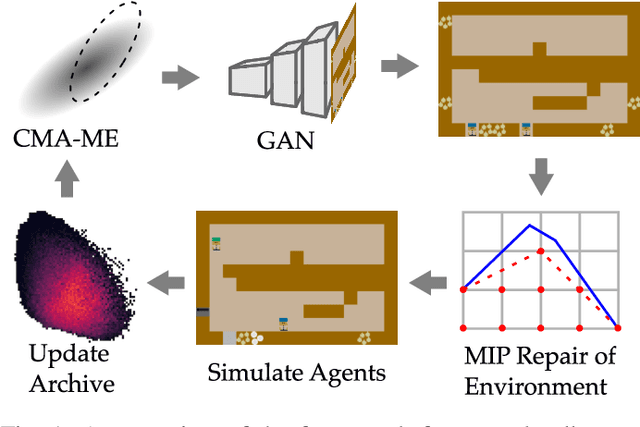

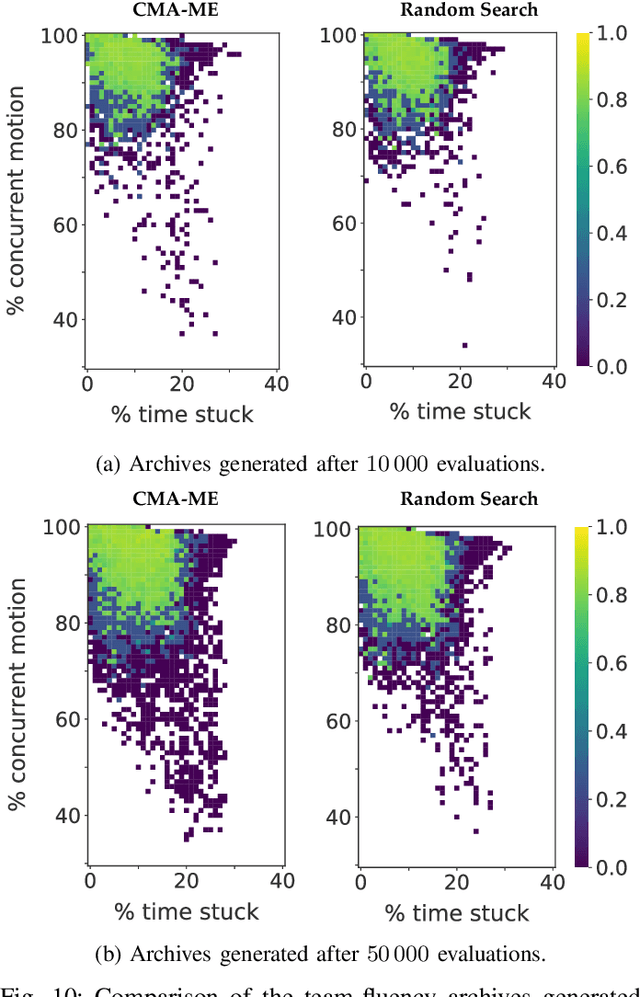

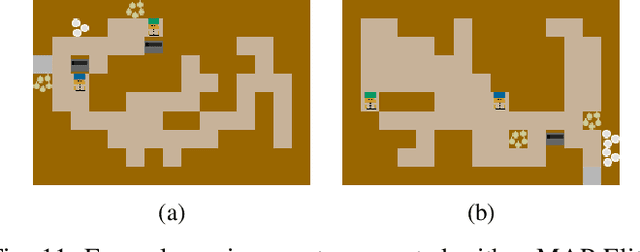

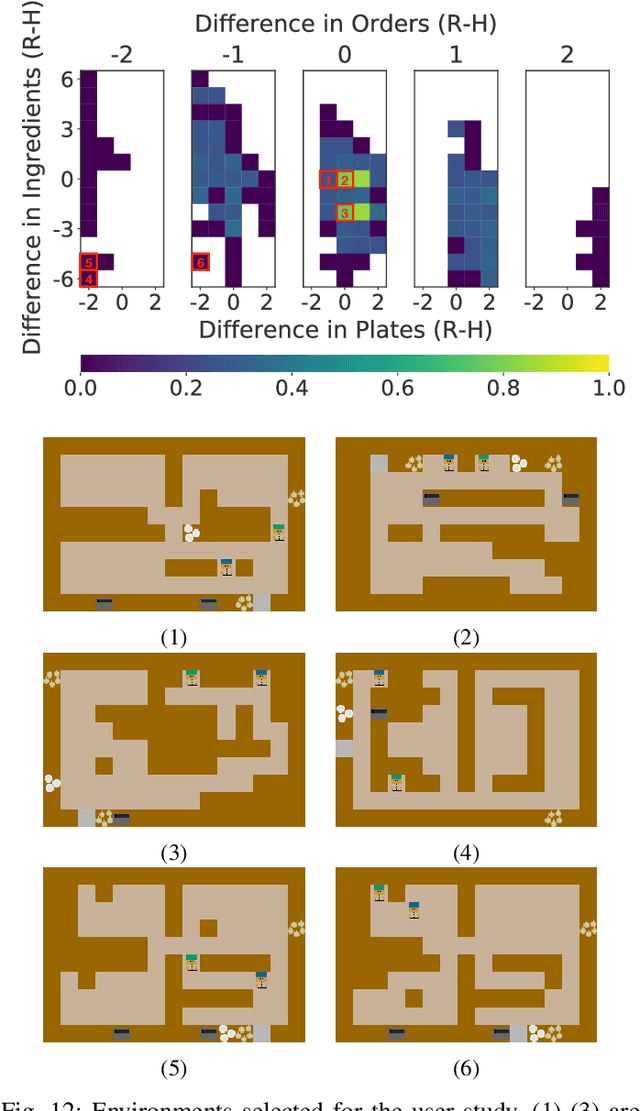

On the Importance of Environments in Human-Robot Coordination

Jun 28, 2021

Abstract:When studying robots collaborating with humans, much of the focus has been on robot policies that coordinate fluently with human teammates in collaborative tasks. However, less emphasis has been placed on the effect of the environment on coordination behaviors. To thoroughly explore environments that result in diverse behaviors, we propose a framework for procedural generation of environments that are (1) stylistically similar to human-authored environments, (2) guaranteed to be solvable by the human-robot team, and (3) diverse with respect to coordination measures. We analyze the procedurally generated environments in the Overcooked benchmark domain via simulation and an online user study. Results show that the environments result in qualitatively different emerging behaviors and statistically significant differences in collaborative fluency metrics, even when the robot runs the same planning algorithm.
