Xiulian Peng

Real-time speech enhancement with dynamic attention span

Feb 21, 2023Abstract:For real-time speech enhancement (SE) including noise suppression, dereverberation and acoustic echo cancellation, the time-variance of the audio signals becomes a severe challenge. The causality and memory usage limit that only the historical information can be used for the system to capture the time-variant characteristics. We propose to adaptively change the receptive field according to the input signal in deep neural network based SE model. Specifically, in an encoder-decoder framework, a dynamic attention span mechanism is introduced to all the attention modules for controlling the size of historical content used for processing the current frame. Experimental results verify that this dynamic mechanism can better track time-variant factors and capture speech-related characteristics, benefiting to both interference removing and speech quality retaining.

Disentangled Feature Learning for Real-Time Neural Speech Coding

Nov 22, 2022Abstract:Recently end-to-end neural audio/speech coding has shown its great potential to outperform traditional signal analysis based audio codecs. This is mostly achieved by following the VQ-VAE paradigm where blind features are learned, vector-quantized and coded. In this paper, instead of blind end-to-end learning, we propose to learn disentangled features for real-time neural speech coding. Specifically, more global-like speaker identity and local content features are learned with disentanglement to represent speech. Such a compact feature decomposition not only achieves better coding efficiency by exploiting bit allocation among different features but also provides the flexibility to do audio editing in embedding space, such as voice conversion in real-time communications. Both subjective and objective results demonstrate its coding efficiency and we find that the learned disentangled features show comparable performance on any-to-any voice conversion with modern self-supervised speech representation learning models with far less parameters and low latency, showing the potential of our neural coding framework.

Predictive Neural Speech Coding

Jul 18, 2022

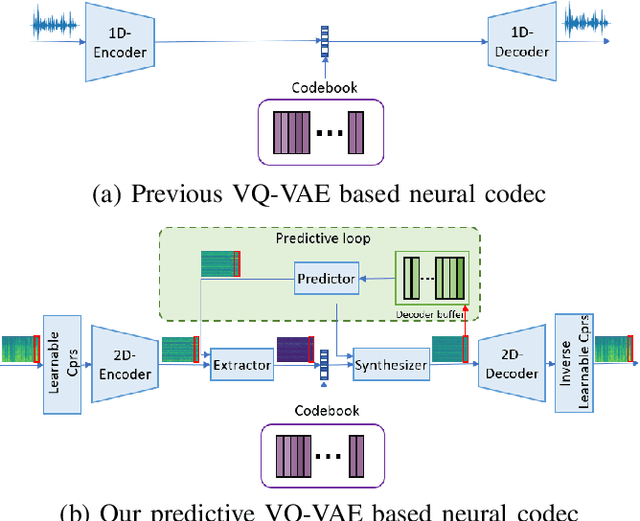

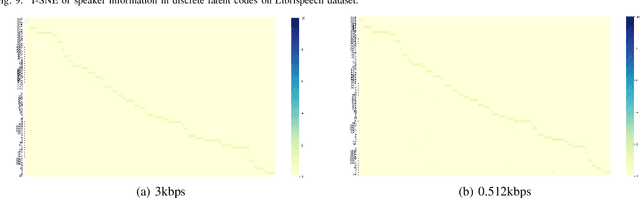

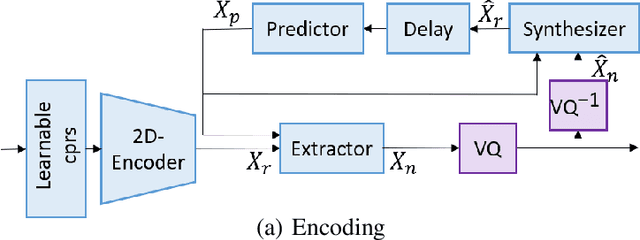

Abstract:Neural audio/speech coding has shown its capability to deliver a high quality at much lower bitrates than traditional methods recently. However, existing neural audio/speech codecs employ either acoustic features or learned blind features with a convolutional neural network for encoding, by which there are still temporal redundancies inside encoded features. This paper introduces latent-domain predictive coding into the VQ-VAE framework to fully remove such redundancies and proposes the TF-Codec for low-latency neural speech coding in an end-to-end way. Specifically, the extracted features are encoded conditioned on a prediction from past quantized latent frames so that temporal correlations are further removed. What's more, we introduce a learnable compression on the time-frequency input to adaptively adjust the attention paid on main frequencies and details at different bitrates. A differentiable vector quantization scheme based on distance-to-soft mapping and Gumbel-Softmax is proposed to better model the latent distributions with rate constraint. Subjective results on multilingual speech datasets show that with a latency of 40ms, the proposed TF-Codec at 1kbps can achieve a much better quality than Opus 9kbps and TF-Codec at 3kbps outperforms both EVS 9.6kbps and Opus 12kbps. Numerous studies are conducted to show the effectiveness of these techniques.

Cross-Scale Vector Quantization for Scalable Neural Speech Coding

Jul 07, 2022

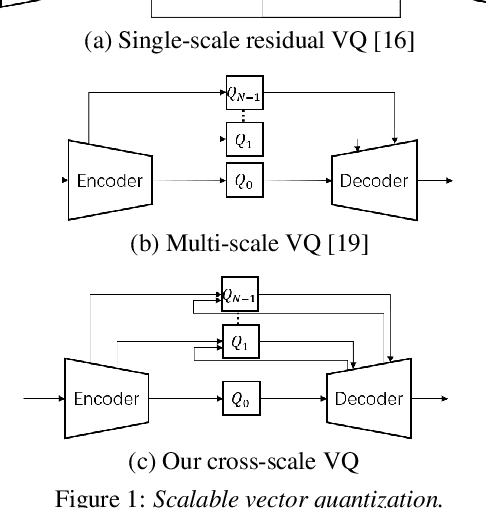

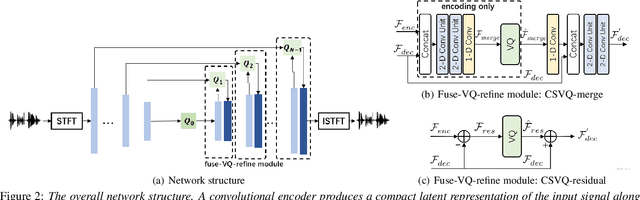

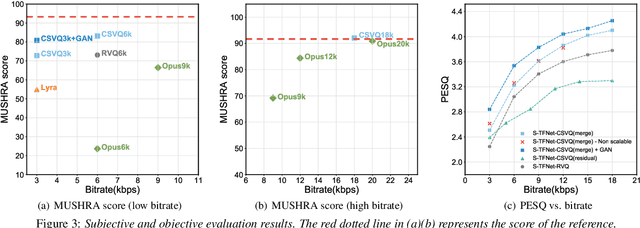

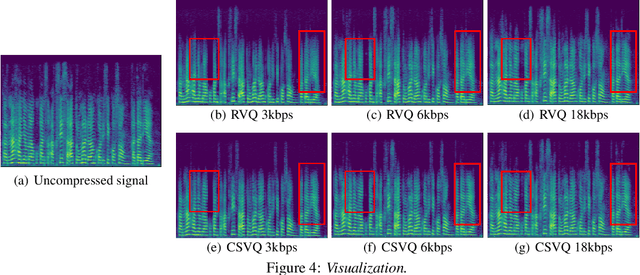

Abstract:Bitrate scalability is a desirable feature for audio coding in real-time communications. Existing neural audio codecs usually enforce a specific bitrate during training, so different models need to be trained for each target bitrate, which increases the memory footprint at the sender and the receiver side and transcoding is often needed to support multiple receivers. In this paper, we introduce a cross-scale scalable vector quantization scheme (CSVQ), in which multi-scale features are encoded progressively with stepwise feature fusion and refinement. In this way, a coarse-level signal is reconstructed if only a portion of the bitstream is received, and progressively improves the quality as more bits are available. The proposed CSVQ scheme can be flexibly applied to any neural audio coding network with a mirrored auto-encoder structure to achieve bitrate scalability. Subjective results show that the proposed scheme outperforms the classical residual VQ (RVQ) with scalability. Moreover, the proposed CSVQ at 3 kbps outperforms Opus at 9 kbps and Lyra at 3kbps and it could provide a graceful quality boost with bitrate increase.

Multi-Modal Multi-Correlation Learning for Audio-Visual Speech Separation

Jul 04, 2022

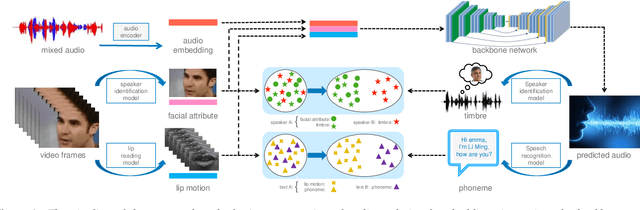

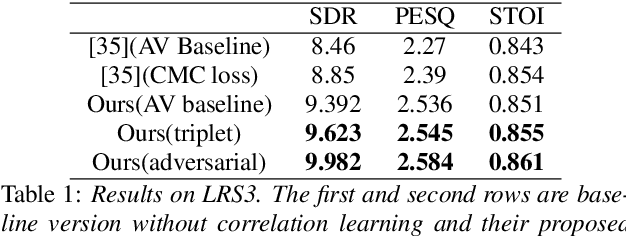

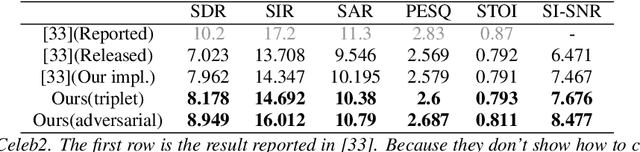

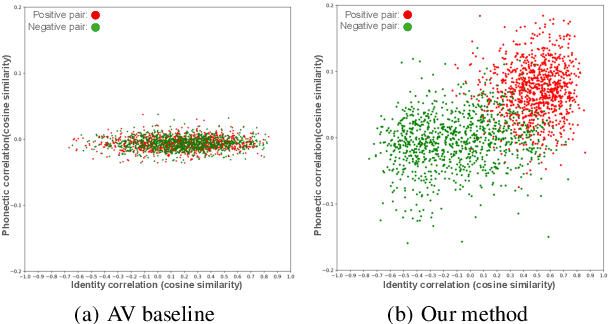

Abstract:In this paper we propose a multi-modal multi-correlation learning framework targeting at the task of audio-visual speech separation. Although previous efforts have been extensively put on combining audio and visual modalities, most of them solely adopt a straightforward concatenation of audio and visual features. To exploit the real useful information behind these two modalities, we define two key correlations which are: (1) identity correlation (between timbre and facial attributes); (2) phonetic correlation (between phoneme and lip motion). These two correlations together comprise the complete information, which shows a certain superiority in separating target speaker's voice especially in some hard cases, such as the same gender or similar content. For implementation, contrastive learning or adversarial training approach is applied to maximize these two correlations. Both of them work well, while adversarial training shows its advantage by avoiding some limitations of contrastive learning. Compared with previous research, our solution demonstrates clear improvement on experimental metrics without additional complexity. Further analysis reveals the validity of the proposed architecture and its good potential for future extension.

Towards Error-Resilient Neural Speech Coding

Jul 03, 2022

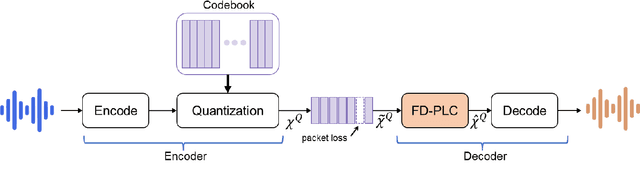

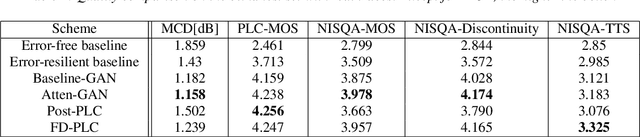

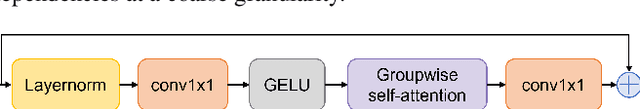

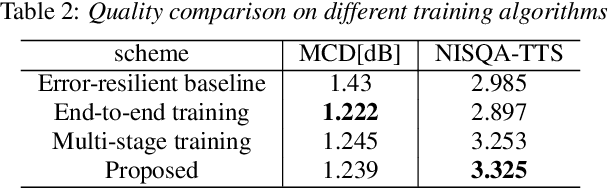

Abstract:Neural audio coding has shown very promising results recently in the literature to largely outperform traditional codecs but limited attention has been paid on its error resilience. Neural codecs trained considering only source coding tend to be extremely sensitive to channel noises, especially in wireless channels with high error rate. In this paper, we investigate how to elevate the error resilience of neural audio codecs for packet losses that often occur during real-time communications. We propose a feature-domain packet loss concealment algorithm (FD-PLC) for real-time neural speech coding. Specifically, we introduce a self-attention-based module on the received latent features to recover lost frames in the feature domain before the decoder. A hybrid segment-level and frame-level frequency-domain discriminator is employed to guide the network to focus on both the generative quality of lost frames and the continuity with neighbouring frames. Experimental results on several error patterns show that the proposed scheme can achieve better robustness compared with the corresponding error-free and error-resilient baselines. We also show that feature-domain concealment is superior to waveform-domain counterpart as post-processing.

End-to-End Neural Audio Coding for Real-Time Communications

Jan 25, 2022

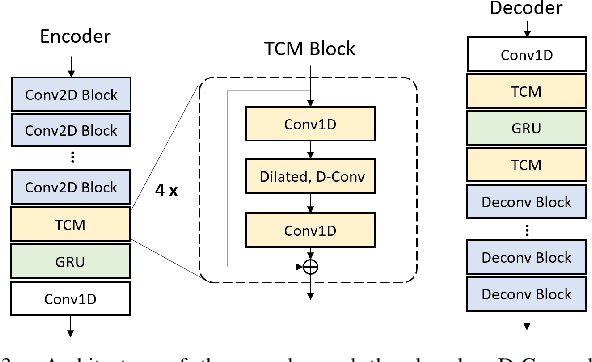

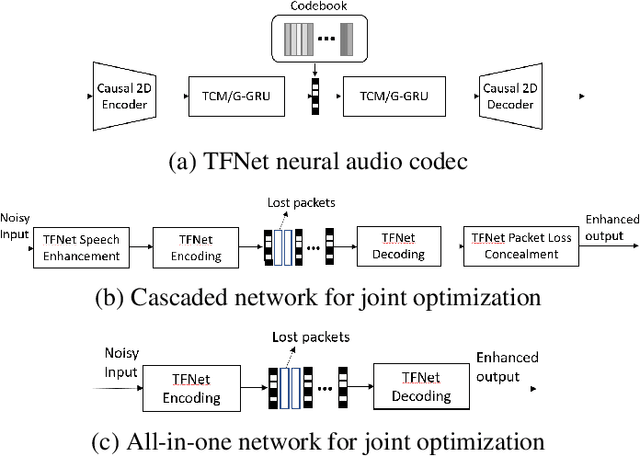

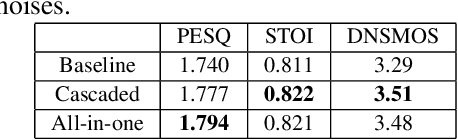

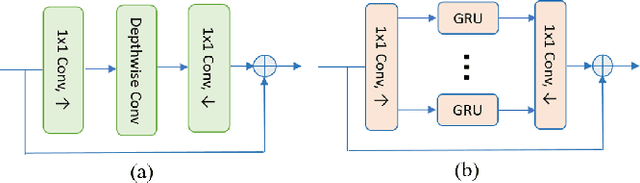

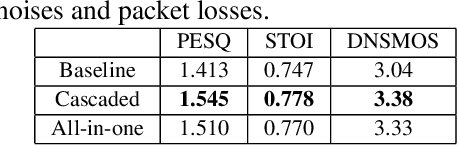

Abstract:Deep-learning based methods have shown their advantages in audio coding over traditional ones but limited attention has been paid on real-time communications (RTC). This paper proposes the TFNet, an end-to-end neural audio codec with low latency for RTC. It takes an encoder-temporal filtering-decoder paradigm that seldom being investigated in audio coding. An interleaved structure is proposed for temporal filtering to capture both short-term and long-term temporal dependencies. Furthermore, with end-to-end optimization, the TFNet is jointly optimized with speech enhancement and packet loss concealment, yielding a one-for-all network for three tasks. Both subjective and objective results demonstrate the efficiency of the proposed TFNet.

Phoneme-based Distribution Regularization for Speech Enhancement

Apr 08, 2021

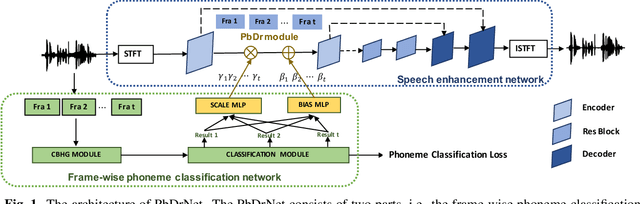

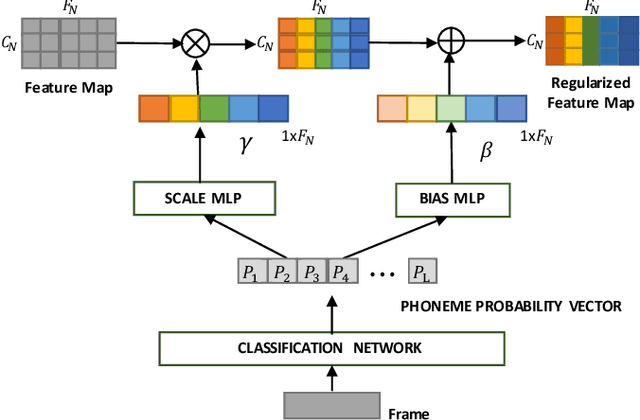

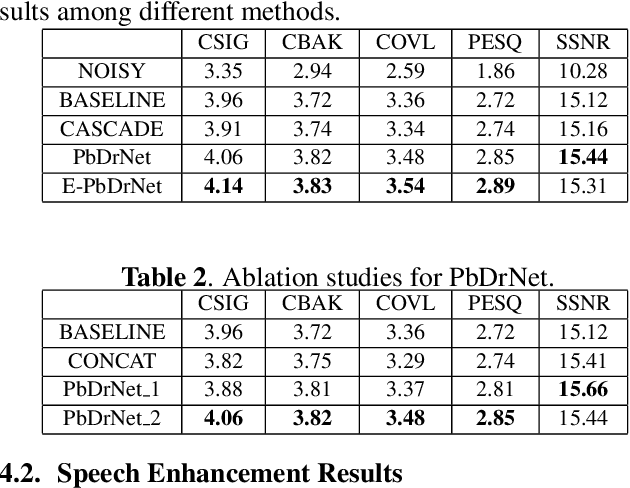

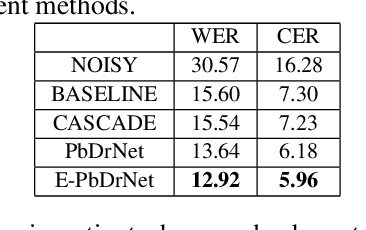

Abstract:Existing speech enhancement methods mainly separate speech from noises at the signal level or in the time-frequency domain. They seldom pay attention to the semantic information of a corrupted signal. In this paper, we aim to bridge this gap by extracting phoneme identities to help speech enhancement. Specifically, we propose a phoneme-based distribution regularization (PbDr) for speech enhancement, which incorporates frame-wise phoneme information into speech enhancement network in a conditional manner. As different phonemes always lead to different feature distributions in frequency, we propose to learn a parameter pair, i.e. scale and bias, through a phoneme classification vector to modulate the speech enhancement network. The modulation parameter pair includes not only frame-wise but also frequency-wise conditions, which effectively map features to phoneme-related distributions. In this way, we explicitly regularize speech enhancement features by recognition vectors. Experiments on public datasets demonstrate that the proposed PbDr module can not only boost the perceptual quality for speech enhancement but also the recognition accuracy of an ASR system on the enhanced speech. This PbDr module could be readily incorporated into other speech enhancement networks as well.

End-to-End United Video Dehazing and Detection

Sep 12, 2017

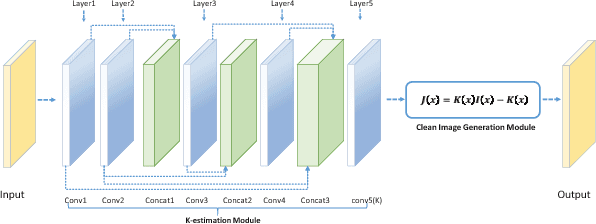

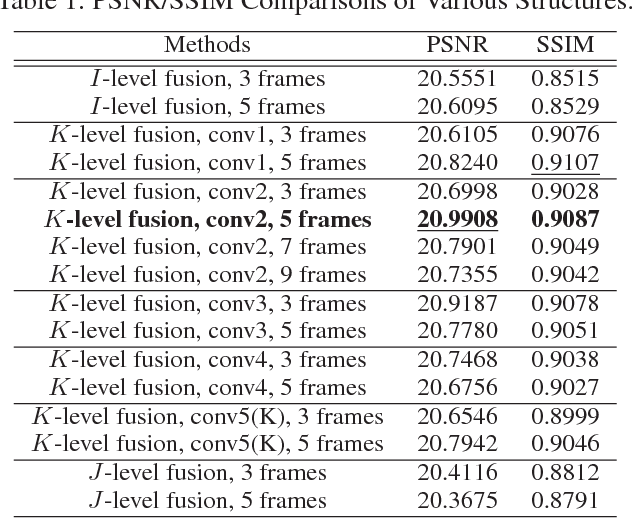

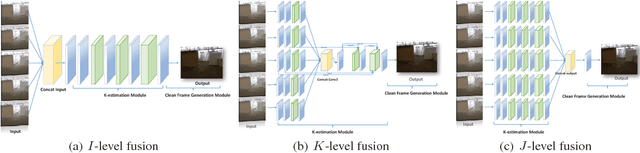

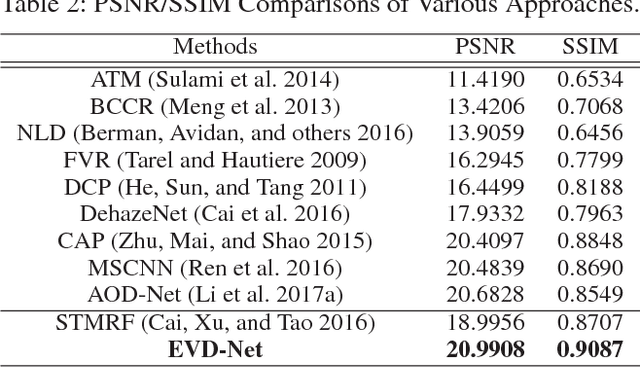

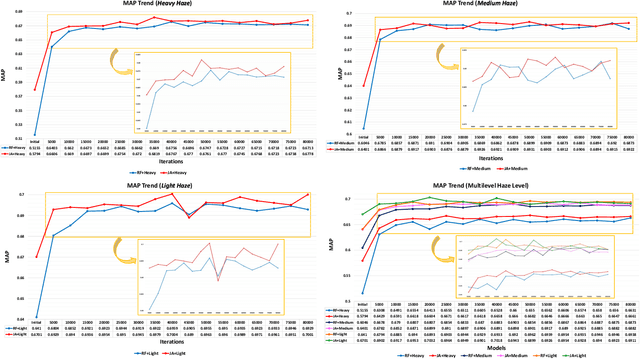

Abstract:The recent development of CNN-based image dehazing has revealed the effectiveness of end-to-end modeling. However, extending the idea to end-to-end video dehazing has not been explored yet. In this paper, we propose an End-to-End Video Dehazing Network (EVD-Net), to exploit the temporal consistency between consecutive video frames. A thorough study has been conducted over a number of structure options, to identify the best temporal fusion strategy. Furthermore, we build an End-to-End United Video Dehazing and Detection Network(EVDD-Net), which concatenates and jointly trains EVD-Net with a video object detection model. The resulting augmented end-to-end pipeline has demonstrated much more stable and accurate detection results in hazy video.

An All-in-One Network for Dehazing and Beyond

Jul 20, 2017

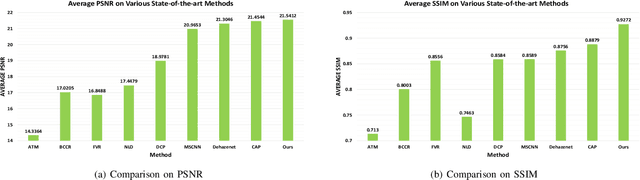

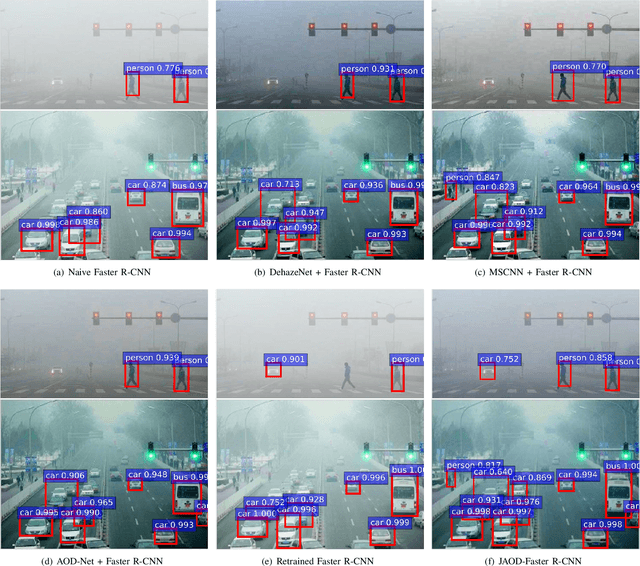

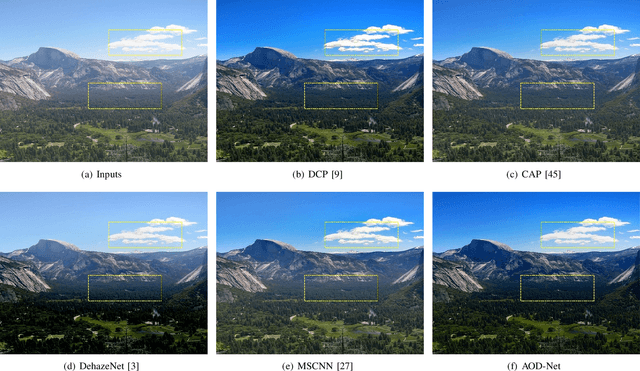

Abstract:This paper proposes an image dehazing model built with a convolutional neural network (CNN), called All-in-One Dehazing Network (AOD-Net). It is designed based on a re-formulated atmospheric scattering model. Instead of estimating the transmission matrix and the atmospheric light separately as most previous models did, AOD-Net directly generates the clean image through a light-weight CNN. Such a novel end-to-end design makes it easy to embed AOD-Net into other deep models, e.g., Faster R-CNN, for improving high-level task performance on hazy images. Experimental results on both synthesized and natural hazy image datasets demonstrate our superior performance than the state-of-the-art in terms of PSNR, SSIM and the subjective visual quality. Furthermore, when concatenating AOD-Net with Faster R-CNN and training the joint pipeline from end to end, we witness a large improvement of the object detection performance on hazy images.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge