Xiaoyong Yuan

Membership Inference Attacks and Defenses in Neural Network Pruning

Feb 07, 2022

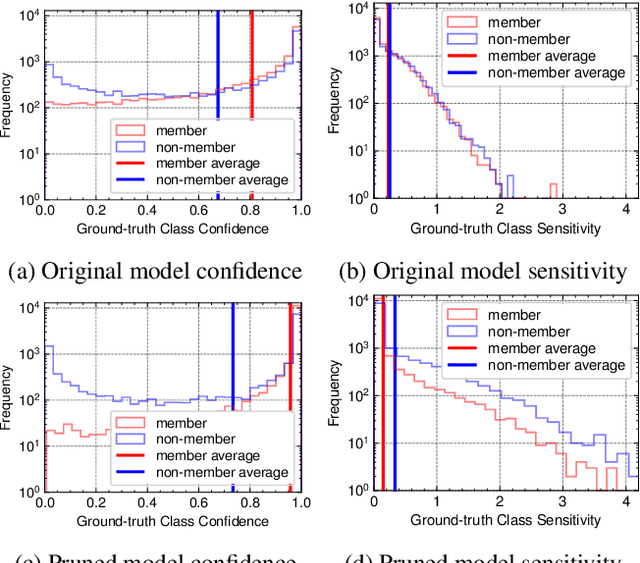

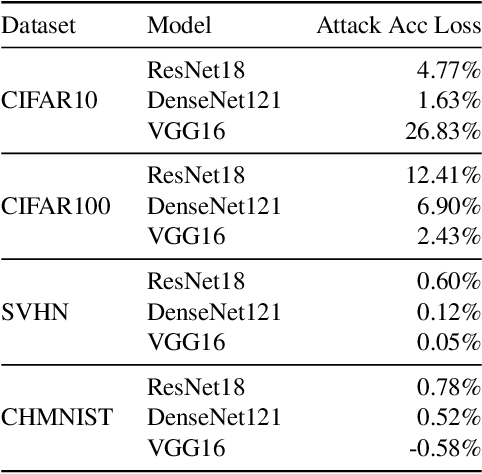

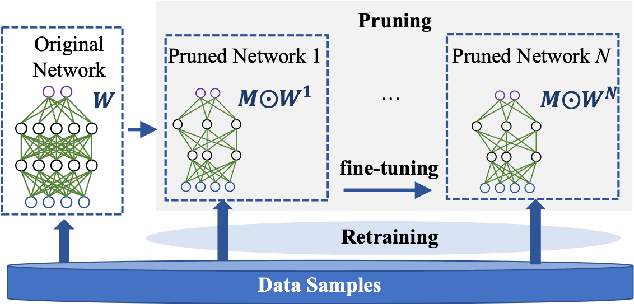

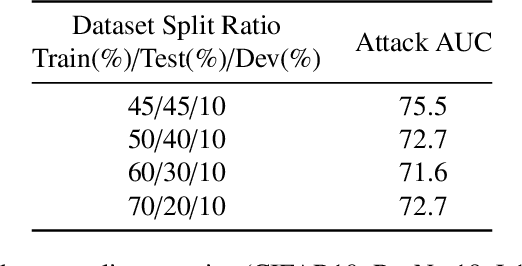

Abstract: Neural network pruning has been an essential technique to reduce the computation and memory requirements of deep neural networks on resource-constrained devices. Most existing research focuses primarily on balancing the sparsity and accuracy of a pruned neural network by strategically removing insignificant parameters and retraining the pruned model. Such reuse of training samples poses serious privacy risks due to increased memorization, which has not yet been investigated. In this paper, we conduct the first analysis of privacy risks in neural network pruning. Specifically, we investigate the impact of neural network pruning on training data privacy, i.e., membership inference attacks. We first explore the impact of neural network pruning on prediction divergence, where the pruning process disproportionately affects the pruned model's behavior for members and non-members. Moreover, the influence of divergence varies among different classes in a fine-grained manner. Enlightened by such divergence, we propose a self-attention membership inference attack against pruned neural networks. Extensive experiments are conducted to rigorously evaluate the privacy impacts of different pruning approaches, sparsity levels, and adversary knowledge. The proposed attack shows higher attack performance on the pruned models than eight existing membership inference attacks. In addition, we propose a new defense mechanism that protects the pruning process by mitigating the prediction divergence based on KL-divergence distance, and we demonstrate experimentally that it mitigates the privacy risks while maintaining the sparsity and accuracy of the pruned models.
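The abstract states only that the defense mitigates prediction divergence "based on KL-divergence distance"; it does not give the formulation. As a rough illustration of the quantity involved, the sketch below measures the KL divergence between a model's average prediction for members and for non-members of one class. The logits are made-up numbers, and the function names `softmax` and `kl_divergence` are chosen here for illustration; this is not the paper's defense.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )

# Hypothetical averaged logits for one class (illustrative numbers only):
# the model is more confident on samples it was trained on.
member_probs = softmax([6.0, 1.0, 0.5])
non_member_probs = softmax([3.0, 1.5, 1.0])

# A larger gap means members and non-members are easier to tell apart.
gap = kl_divergence(member_probs, non_member_probs)
print(f"KL(member || non-member) = {gap:.4f}")
```

A defense in this spirit would try to keep such a gap small during pruning and retraining, so that an attacker learns less from the prediction vector.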

FedZKT: Zero-Shot Knowledge Transfer towards Heterogeneous On-Device Models in Federated Learning

Sep 08, 2021

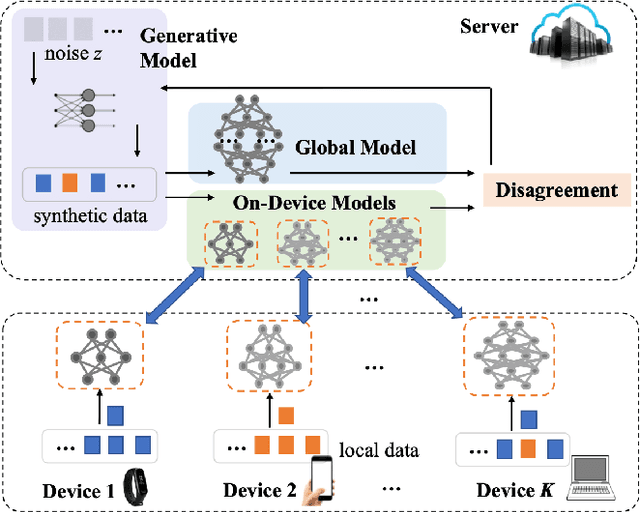

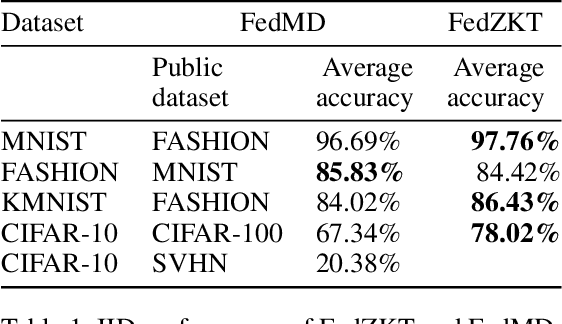

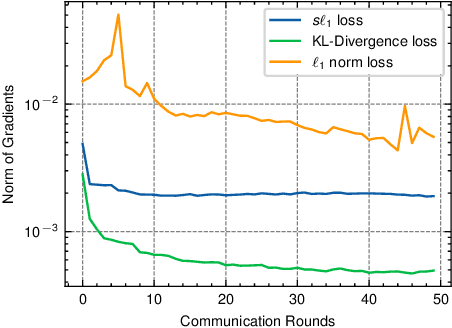

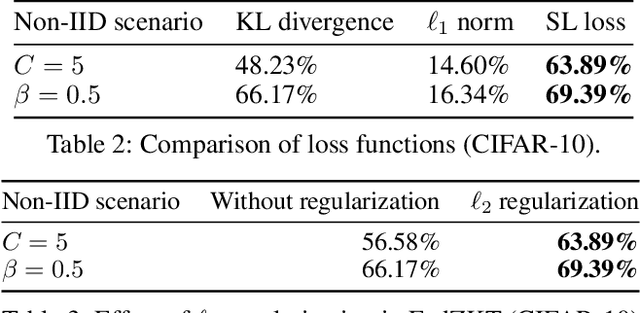

Abstract: Federated learning enables distributed devices to collaboratively learn a shared prediction model without centralizing on-device training data. Most current algorithms require comparable individual efforts to train on-device models with the same structure and size, impeding participation from resource-constrained devices. Given the widespread yet heterogeneous devices in use today, this paper proposes a new framework supporting federated learning across heterogeneous on-device models via Zero-shot Knowledge Transfer, named FedZKT. Specifically, FedZKT allows participating devices to independently determine their on-device models. To transfer knowledge across on-device models, FedZKT develops a zero-shot distillation approach, in contrast to prior research that relies on a public dataset or a pre-trained data generator. To minimize the on-device workload, the resource-intensive distillation task is assigned to the server, which constructs a generator to adversarially train with the ensemble of the received heterogeneous on-device models. The distilled central knowledge is then sent back in the form of the corresponding on-device model parameters, which can be easily absorbed on the device side. Experimental studies demonstrate the effectiveness and robustness of FedZKT towards heterogeneous on-device models and challenging federated learning scenarios, such as non-iid data distribution and straggler effects.

A Vertical Federated Learning Framework for Horizontally Partitioned Labels

Jun 18, 2021

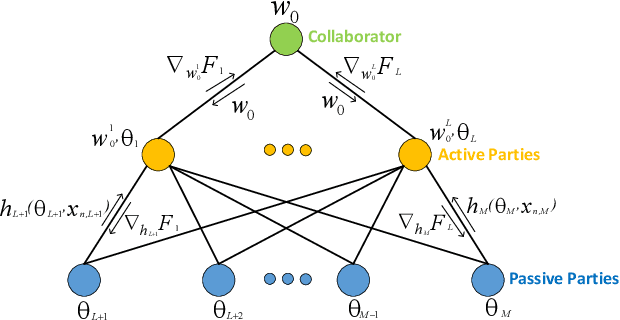

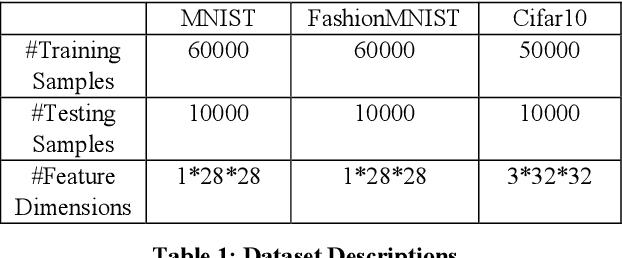

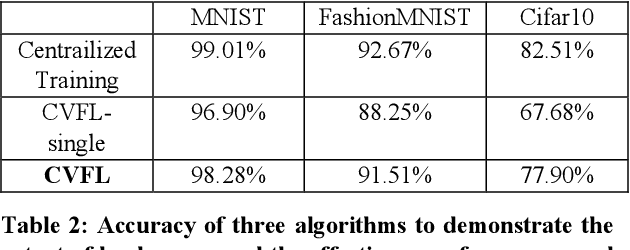

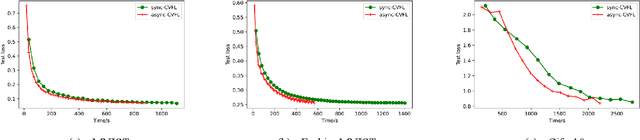

Abstract: Vertical federated learning is a collaborative machine learning framework for training deep learning models on vertically partitioned data with privacy preservation. It attracts much attention from both academia and industry. Unfortunately, applying most existing vertical federated learning methods in real-world applications still faces two daunting challenges. First, most existing vertical federated learning methods make the strong assumption that at least one party holds the complete set of labels for all data samples. This assumption is not satisfied in many practical scenarios, where labels are horizontally partitioned and each party holds only partial labels. Existing vertical federated learning methods can only utilize partial labels, which may lead to inadequate model updates in end-to-end backpropagation. Second, computational and communication resources vary across parties. Parties with limited computational and communication resources become stragglers and slow down the convergence of training. This straggler problem is exacerbated when labels are horizontally partitioned in vertical federated learning. To address these challenges, we propose a novel vertical federated learning framework named Cascade Vertical Federated Learning (CVFL) to fully utilize all horizontally partitioned labels to train neural networks with privacy preservation. To mitigate the straggler problem, we design a novel optimization objective that increases the stragglers' contribution to the trained models. We conduct a series of qualitative experiments to rigorously verify the effectiveness of CVFL. We demonstrate that CVFL achieves performance (e.g., accuracy for classification tasks) comparable with centralized training. The new optimization objective further mitigates the straggler problem compared with using only the asynchronous aggregation mechanism during training.

ES Attack: Model Stealing against Deep Neural Networks without Data Hurdles

Sep 21, 2020

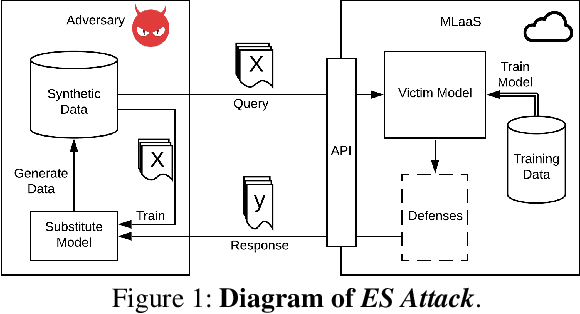

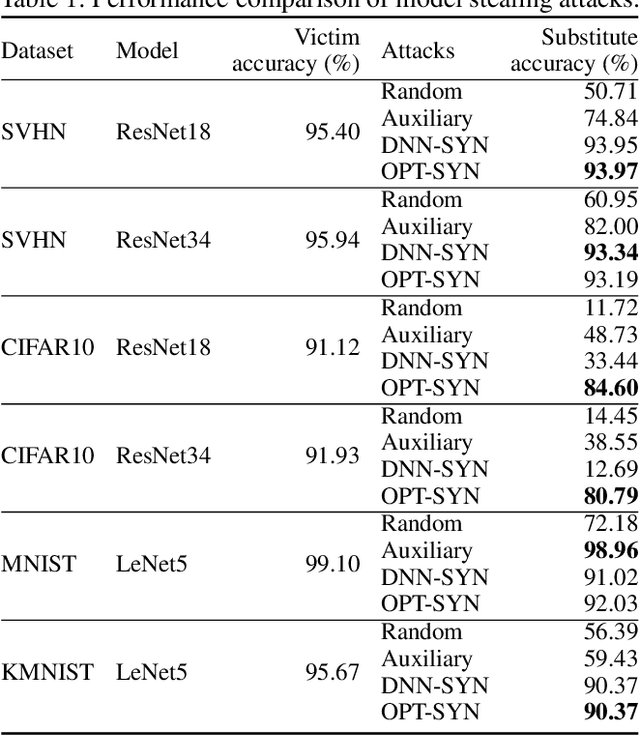

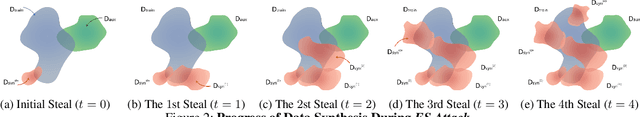

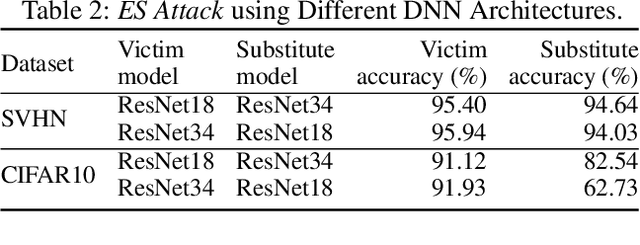

Abstract: Deep neural networks (DNNs) have become essential components of various commercialized machine learning services, such as Machine Learning as a Service (MLaaS). Recent studies show that machine learning services face severe privacy threats - well-trained DNNs owned by MLaaS providers can be stolen through public APIs, namely model stealing attacks. However, most existing works undervalue the impact of such attacks, assuming that a successful attack has to acquire confidential training data or auxiliary data regarding the victim DNN. In this paper, we propose ES Attack, a novel model stealing attack without any data hurdles. By using heuristically generated synthetic data, ES Attack iteratively trains a substitute model and eventually achieves a functionally equivalent copy of the victim DNN. The experimental results reveal the severity of ES Attack: i) ES Attack successfully steals the victim model without data hurdles, and even outperforms most existing model stealing attacks that use auxiliary data in terms of model accuracy; ii) most countermeasures are ineffective in defending against ES Attack; iii) ES Attack facilitates further attacks relying on the stolen model.

Connecting Web Event Forecasting with Anomaly Detection: A Case Study on Enterprise Web Applications Using Self-Supervised Neural Networks

Sep 07, 2020

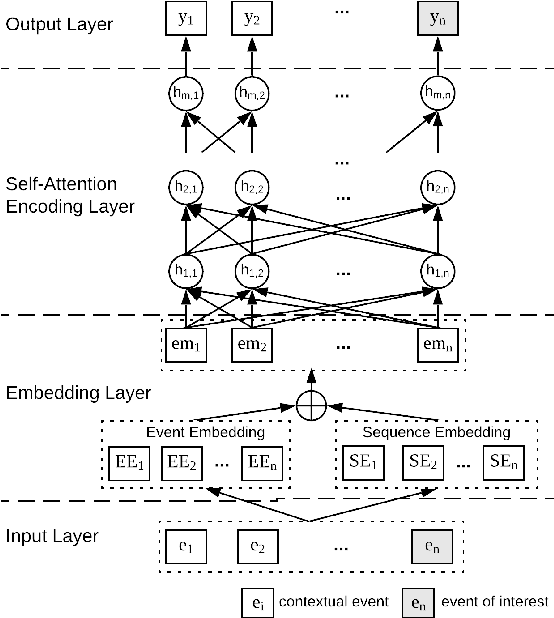

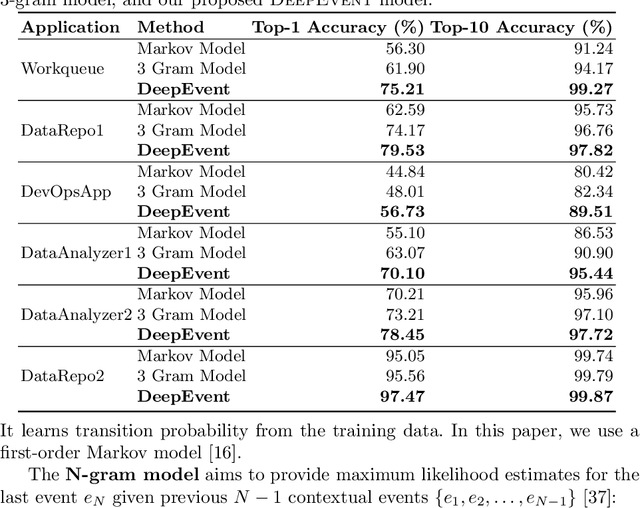

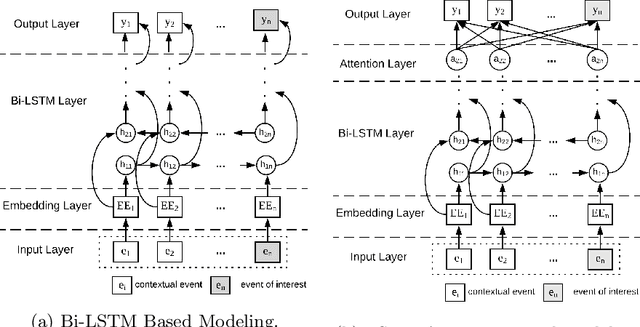

Abstract: Recently, web applications have been widely used in enterprises to assist employees in providing effective and efficient business processes. Forecasting upcoming web events in enterprise web applications can be beneficial in many ways, such as efficient caching and recommendation. In this paper, we present a web event forecasting approach, DeepEvent, in enterprise web applications for better anomaly detection. DeepEvent includes three key features: web-specific neural networks that take into account the characteristics of sequential web events, self-supervised learning techniques to overcome the scarcity of labeled data, and sequence embedding techniques to integrate contextual events and capture dependencies among web events. We evaluate DeepEvent on web events collected from six real-world enterprise web applications. Our experimental results demonstrate that DeepEvent is effective in forecasting sequential web events and detecting web-based anomalies. DeepEvent provides a context-based system for researchers and practitioners to better forecast web events with situational awareness.

Generalized Batch Normalization: Towards Accelerating Deep Neural Networks

Dec 08, 2018

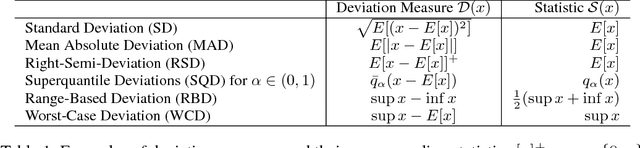

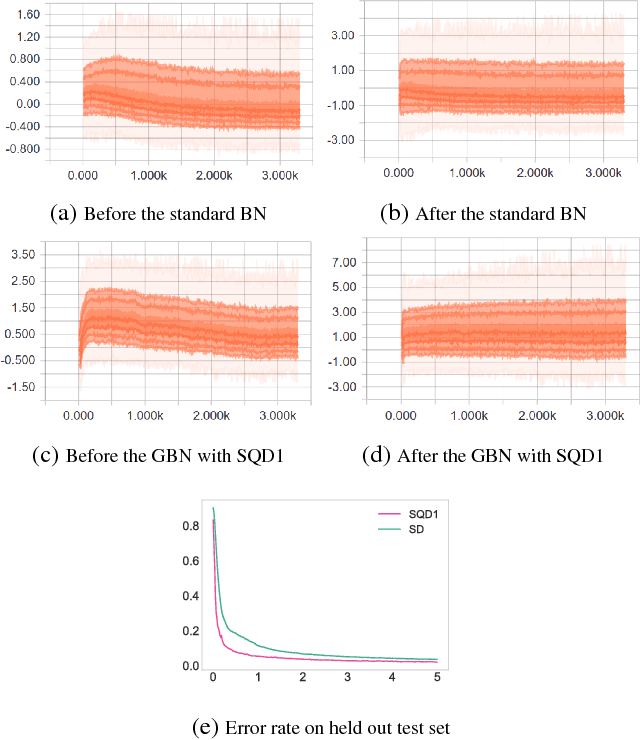

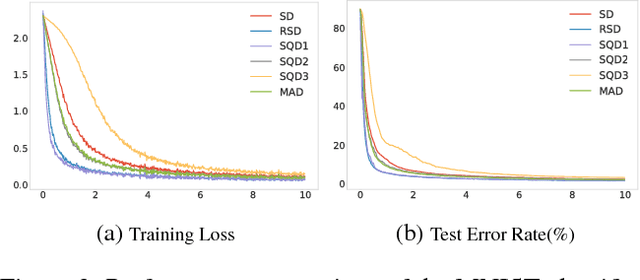

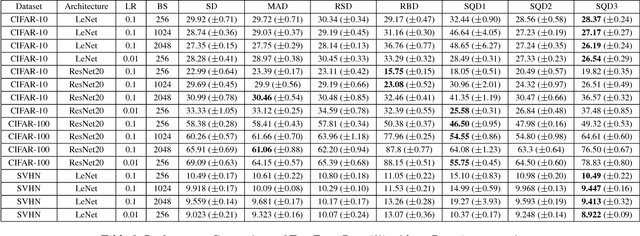

Abstract: Utilizing recently introduced concepts from statistics and quantitative risk management, we present a general variant of Batch Normalization (BN) that offers accelerated convergence of neural network training compared to conventional BN. In general, we show that mean and standard deviation are not always the most appropriate choice for the centering and scaling procedure within the BN transformation, particularly if ReLU follows the normalization step. We present a Generalized Batch Normalization (GBN) transformation, which can utilize a variety of alternative deviation measures for scaling and statistics for centering, choices which naturally arise from the theory of generalized deviation measures and risk theory in general. When used in conjunction with the ReLU non-linearity, the underlying risk theory suggests natural, arguably optimal choices for the deviation measure and statistic. Utilizing the suggested deviation measure and statistic, we show experimentally that training is accelerated more than with conventional BN, often with an improved error rate as well. Overall, we propose a more flexible BN transformation supported by a complementary theoretical framework that can potentially guide design choices.
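The idea of swapping the centering statistic and the deviation measure can be sketched in a few lines. The abstract does not say which alternatives the paper recommends, so the median and the mean absolute deviation from the median are used below purely as illustrative stand-ins. The helper names (`normalize`, `mean_abs_deviation_from_median`) are hypothetical, and learnable scale and shift parameters are omitted.

```python
import statistics

def normalize(batch, center, deviation, eps=1e-5):
    """Center and scale one feature across a batch.

    `center` and `deviation` are pluggable callables, so the same routine
    covers conventional BN (mean, standard deviation) and alternatives.
    """
    c = center(batch)
    s = deviation(batch)
    return [(x - c) / (s + eps) for x in batch]

def mean_abs_deviation_from_median(batch):
    """Illustrative alternative deviation measure (an assumption, not the paper's choice)."""
    med = statistics.median(batch)
    return statistics.fmean([abs(x - med) for x in batch])

# Activations of one feature over a small batch, with one outlier.
batch = [0.5, 1.0, 1.5, 2.0, 10.0]

# Conventional BN: mean for centering, standard deviation for scaling.
conventional = normalize(batch, statistics.fmean, statistics.pstdev)

# Generalized variant: median for centering, mean absolute deviation for scaling.
alternative = normalize(batch, statistics.median, mean_abs_deviation_from_median)

print([round(v, 3) for v in conventional])
print([round(v, 3) for v in alternative])
```

With the outlier present, the two choices place the zero point differently, which changes how many activations a following ReLU would pass through.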

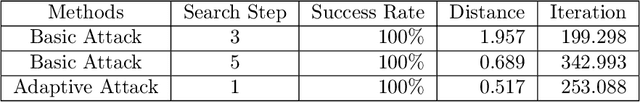

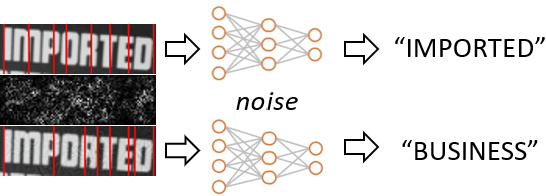

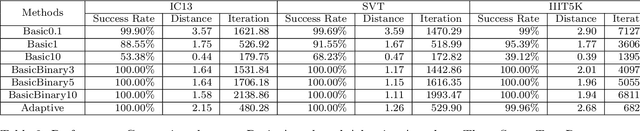

Adaptive Adversarial Attack on Scene Text Recognition

Jul 09, 2018

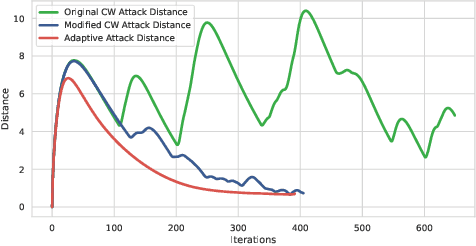

Abstract: Recent studies have shown that state-of-the-art deep learning models are vulnerable to inputs with small perturbations (adversarial examples). We observe two critical obstacles in adversarial examples: (i) strong adversarial attacks require manual tuning of hyper-parameters, which makes constructing a single adversarial example slow and makes it impractical to attack real-time systems; (ii) most studies focus on non-sequential tasks, such as image classification and object detection, and only a few consider sequential tasks. Despite extensive research, the cause of adversarial examples remains an open problem, especially on sequential tasks. We propose an adaptive adversarial attack, called AdaptiveAttack, to speed up the process of generating adversarial examples. To validate its effectiveness, we leverage the scene text detection task as a case study of sequential adversarial examples. We further visualize the generated adversarial examples to analyze the cause of sequential adversarial examples. AdaptiveAttack achieved over 99.9% success rate with a 3-6 times speedup compared to state-of-the-art adversarial attacks.

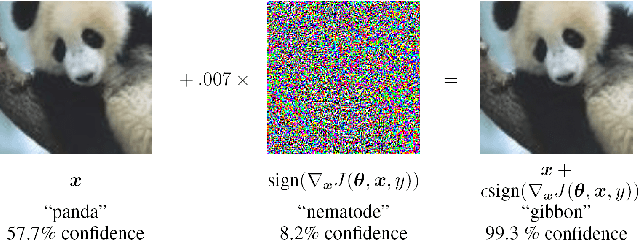

Adversarial Examples: Attacks and Defenses for Deep Learning

Jul 07, 2018

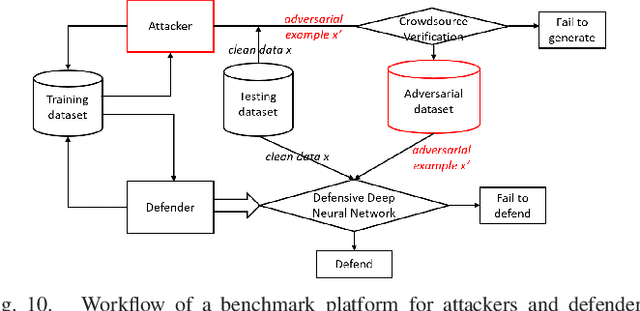

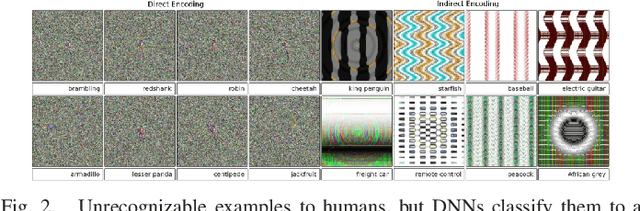

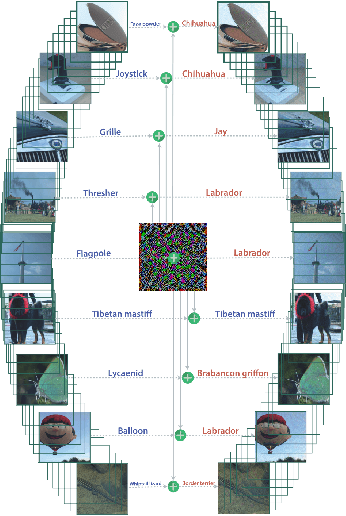

Abstract: With rapid progress and significant successes in a wide spectrum of applications, deep learning is being applied in many safety-critical environments. However, deep neural networks have recently been found vulnerable to well-designed input samples, called adversarial examples. Adversarial examples are imperceptible to humans but can easily fool deep neural networks in the testing/deploying stage. The vulnerability to adversarial examples has become one of the major risks of applying deep neural networks in safety-critical environments. Therefore, attacks and defenses on adversarial examples draw great attention. In this paper, we review recent findings on adversarial examples for deep neural networks, summarize the methods for generating adversarial examples, and propose a taxonomy of these methods. Under the taxonomy, applications for adversarial examples are investigated. We further elaborate on countermeasures for adversarial examples and explore the challenges and the potential solutions.

Learning Fast and Slow: PROPEDEUTICA for Real-time Malware Detection

Dec 04, 2017

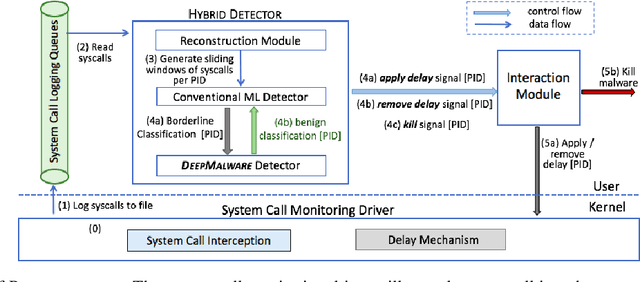

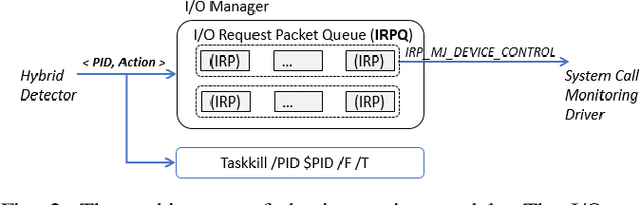

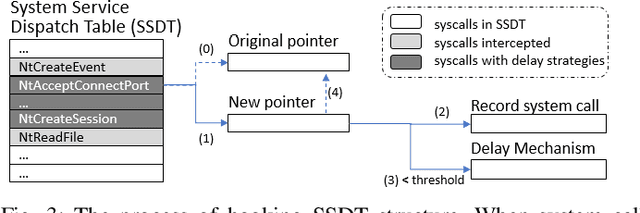

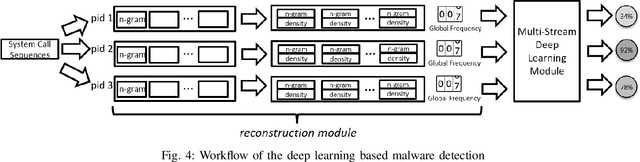

Abstract: In this paper, we introduce and evaluate PROPEDEUTICA, a novel methodology and framework for efficient and effective real-time malware detection, leveraging the best of conventional machine learning (ML) and deep learning (DL) algorithms. In PROPEDEUTICA, all software processes in the system start execution subjected to a conventional ML detector for fast classification. If a piece of software receives a borderline classification, it is subjected to further analysis by our newly proposed DL algorithm, DEEPMALWARE, which is more computationally expensive but more accurate. Further, we introduce delays to the execution of software subjected to deep learning analysis as a way to "buy time" for DL analysis and to rate-limit the impact of possible malware in the system. We evaluated PROPEDEUTICA with a set of 9,115 malware samples and 877 commonly used benign software samples from various categories for the Windows OS. Our results show that the false positive rate for conventional ML methods can reach 20%, and for modern DL methods it is usually below 6%. However, the classification time for DL can be 100X longer than conventional ML methods. PROPEDEUTICA improved the detection F1-score from 77.54% (conventional ML method) to 90.25%, and reduced the detection time by 54.86%. Further, the percentage of software subjected to DL analysis was approximately 40% on average. Further, the application of delays in software subjected to ML reduced the detection time by approximately 10%. Finally, we found and discussed a discrepancy between the detection accuracy offline (analysis after all traces are collected) and on-the-fly (analysis in tandem with trace collection). Our insights show that conventional ML and modern DL-based malware detectors in isolation cannot meet the needs of efficient and effective malware detection: high accuracy, low false positive rate, and short classification time.
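The two-stage flow described in the abstract (a fast detector first, a slower and more accurate detector only for borderline cases) can be sketched as a simple cascade. Everything concrete below is an assumption made for illustration: the `low` and `high` thresholds, the 0.5 cutoff, the score functions, and the sample dictionaries are hypothetical, and the execution-delay mechanism is left out.

```python
def cascade_detect(sample, fast_score, deep_score, low=0.3, high=0.7):
    """Classify a sample with a cheap detector, escalating borderline cases.

    fast_score / deep_score return a malware probability in [0, 1].
    Returns (verdict, stage), where stage records which detector decided.
    """
    score = fast_score(sample)
    if score < low:
        return "benign", "fast"
    if score > high:
        return "malware", "fast"
    # Borderline: pay for the slower, more accurate analysis.
    verdict = "malware" if deep_score(sample) >= 0.5 else "benign"
    return verdict, "deep"

# Stand-in detectors that read precomputed scores from the sample itself.
def fast(sample):
    return sample["fast"]

def deep(sample):
    return sample["deep"]

samples = [
    {"name": "editor.exe", "fast": 0.05, "deep": 0.02},
    {"name": "dropper.exe", "fast": 0.95, "deep": 0.99},
    {"name": "updater.exe", "fast": 0.50, "deep": 0.10},
    {"name": "packed.exe", "fast": 0.60, "deep": 0.90},
]

results = [cascade_detect(s, fast, deep) for s in samples]
for s, (verdict, stage) in zip(samples, results):
    print(f"{s['name']}: {verdict} (decided by {stage} detector)")
```

The width of the borderline band controls the trade-off: a wider band sends more software to the expensive stage, which costs time but relies less on the fast detector's less certain calls.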
