Takayuki Nishio

SC-MII: Infrastructure LiDAR-based 3D Object Detection on Edge Devices for Split Computing with Multiple Intermediate Outputs Integration

Jan 12, 2026

Abstract:3D object detection using LiDAR-based point cloud data and deep neural networks is essential in autonomous driving technology. However, deploying state-of-the-art models on edge devices presents challenges due to high computational demands and energy consumption. Additionally, single LiDAR setups suffer from blind spots. This paper proposes SC-MII, multiple infrastructure LiDAR-based 3D object detection on edge devices for Split Computing with Multiple Intermediate outputs Integration. In SC-MII, edge devices process local point clouds through the initial DNN layers and send intermediate outputs to an edge server. The server integrates these features and completes inference, reducing both latency and device load while improving privacy. Experimental results on a real-world dataset show a 2.19x speed-up and a 71.6% reduction in edge device processing time, with at most a 1.09% drop in accuracy.
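As a reading aid, the two headline figures can be put on a common scale. This assumes that the reported speed-up is the ratio of baseline inference time to SC-MII inference time, which the abstract does not define; the symbols $T$ and $T^{\text{edge}}$ are illustrative and not taken from the paper.

$$
\frac{T_{\text{SC-MII}}}{T_{\text{base}}} = \frac{1}{2.19} \approx 0.457,
\qquad
\frac{T^{\text{edge}}_{\text{base}}}{T^{\text{edge}}_{\text{SC-MII}}} = \frac{1}{1 - 0.716} \approx 3.52
$$

Under that assumption, a 2.19x speed-up corresponds to roughly a 54.3% reduction in inference time, and a 71.6% reduction in edge device processing time corresponds to the edge-side work running about 3.52 times faster.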

Load-Aware Training Scheduling for Model Circulation-based Decentralized Federated Learning

Jun 11, 2025

Abstract:This paper proposes Load-aware Tram-FL, an extension of Tram-FL that introduces a training scheduling mechanism to minimize total training time in decentralized federated learning by accounting for both computational and communication loads. The scheduling problem is formulated as a global optimization task, which, though intractable in its original form, is made solvable by decomposing it into node-wise subproblems. To promote balanced data utilization under non-IID distributions, a variance constraint is introduced, while the overall training latency, including both computation and communication costs, is minimized through the objective function. Simulation results on MNIST and CIFAR-10 demonstrate that Load-aware Tram-FL significantly reduces training time and accelerates convergence compared to baseline methods.

SALT: A Lightweight Model Adaptation Method for Closed Split Computing Environments

Jun 09, 2025

Abstract:We propose SALT (Split-Adaptive Lightweight Tuning), a lightweight model adaptation framework for Split Computing under closed constraints, where the head and tail networks are proprietary and inaccessible to users. In such closed environments, conventional adaptation methods are infeasible since they require access to model parameters or architectures. SALT addresses this challenge by introducing a compact, trainable adapter on the client side to refine latent features from the head network, enabling user-specific adaptation without modifying the original models or increasing communication overhead. We evaluate SALT on user-specific classification tasks with CIFAR-10 and CIFAR-100, demonstrating improved accuracy with lower training latency compared to fine-tuning methods. Furthermore, SALT facilitates model adaptation for robust inference over lossy networks, a common challenge in edge-cloud environments. With minimal deployment overhead, SALT offers a practical solution for personalized inference in edge AI systems under strict system constraints.

Soft-Label Caching and Sharpening for Communication-Efficient Federated Distillation

May 01, 2025

Abstract:Federated Learning (FL) enables collaborative model training across decentralized clients, enhancing privacy by keeping data local. Yet conventional FL, relying on frequent parameter-sharing, suffers from high communication overhead and limited model heterogeneity. Distillation-based FL approaches address these issues by sharing predictions (soft-labels) instead, but they often involve redundant transmissions across communication rounds, reducing efficiency. We propose SCARLET, a novel framework integrating synchronized soft-label caching and an enhanced Entropy Reduction Aggregation (Enhanced ERA) mechanism. SCARLET minimizes redundant communication by reusing cached soft-labels, achieving up to 50% reduction in communication costs compared to existing methods while maintaining accuracy. Enhanced ERA can be tuned to adapt to non-IID data variations, ensuring robust aggregation and performance in diverse client scenarios. Experimental evaluations demonstrate that SCARLET consistently outperforms state-of-the-art distillation-based FL methods in terms of accuracy and communication efficiency. The implementation of SCARLET is publicly available at https://github.com/kitsuyaazuma/SCARLET.
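The two ideas named in the abstract, reusing cached soft-labels and sharpening the aggregate, can be illustrated with a minimal sketch. It is not SCARLET's protocol: the fixed cache lifetime, the `SoftLabelCache` class, and the power-based `sharpen` function are assumptions chosen for illustration, and Enhanced ERA itself is not reproduced here.

```python
import math
from typing import Dict, List, Optional, Tuple


def entropy(p: List[float]) -> float:
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(q * math.log(q) for q in p if q > 0)


def average(dists: List[List[float]]) -> List[float]:
    """Element-wise mean of the clients' soft-labels for one sample."""
    return [sum(col) / len(dists) for col in zip(*dists)]


def sharpen(p: List[float], temperature: float) -> List[float]:
    """Generic temperature sharpening (an assumption, not Enhanced ERA):
    raise each probability to 1/T and renormalise. T < 1 lowers entropy."""
    powered = [q ** (1.0 / temperature) for q in p]
    total = sum(powered)
    return [q / total for q in powered]


class SoftLabelCache:
    """Hypothetical cache: an entry is reusable for `lifetime` rounds."""

    def __init__(self, lifetime: int) -> None:
        self.lifetime = lifetime
        self._store: Dict[int, Tuple[int, List[float]]] = {}

    def get(self, sample_id: int, round_no: int) -> Optional[List[float]]:
        entry = self._store.get(sample_id)
        if entry is None or round_no - entry[0] >= self.lifetime:
            return None  # missing or expired: must be transmitted again
        return entry[1]

    def put(self, sample_id: int, round_no: int, label: List[float]) -> None:
        self._store[sample_id] = (round_no, label)


# Two clients' soft-labels for one public sample (made-up numbers).
client_labels = [[0.6, 0.3, 0.1], [0.5, 0.4, 0.1]]
aggregated = average(client_labels)
sharpened = sharpen(aggregated, temperature=0.5)

# Count how many soft-labels need to be sent over three rounds.
cache = SoftLabelCache(lifetime=2)
transmitted = 0
for round_no in range(3):
    for sample_id in range(4):
        if cache.get(sample_id, round_no) is None:
            transmitted += 1
            cache.put(sample_id, round_no, sharpened)
```

In this toy run, only cache misses cost a transmission, so the count depends entirely on the assumed lifetime and is not comparable to the reduction reported in the abstract.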

Adapting CSI-Guided Imaging Across Diverse Environments: An Experimental Study Leveraging Continuous Learning

Apr 01, 2024

Abstract:This study explores the feasibility of adapting CSI-guided imaging across varied environments. Focusing on continuous model updates, we investigate CSI-Imager's adaptability in dynamically changing settings, specifically transitioning from an office to an industrial environment. Unlike traditional approaches that may require retraining for new environments, our experimental study aims to validate the potential of CSI-guided imaging to maintain accurate imaging performance through Continuous Learning (CL). By conducting experiments across different scenarios and settings, this work contributes to understanding the limitations and capabilities of existing CSI-guided imaging systems in adapting to new environmental contexts.

$\Lambda$-Split: A Privacy-Preserving Split Computing Framework for Cloud-Powered Generative AI

Oct 23, 2023

Abstract:In the wake of the burgeoning expansion of generative artificial intelligence (AI) services, the computational demands inherent to these technologies frequently necessitate cloud-powered computational offloading, particularly for resource-constrained mobile devices. These services commonly employ prompts to steer the generative process, and both the prompts and the resultant content, such as text and images, may harbor privacy-sensitive or confidential information, thereby elevating security and privacy risks. To mitigate these concerns, we introduce $\Lambda$-Split, a split computing framework to facilitate computational offloading while simultaneously fortifying data privacy against risks such as eavesdropping and unauthorized access. In $\Lambda$-Split, a generative model, usually a deep neural network (DNN), is partitioned into three sub-models and distributed across the user's local device and a cloud server: the input-side and output-side sub-models are allocated to the local device, while the intermediate, computationally-intensive sub-model resides on the cloud server. This architecture ensures that only the hidden layer outputs are transmitted, thereby preventing the external transmission of privacy-sensitive raw input and output data. Given the black-box nature of DNNs, estimating the original input or output from intercepted hidden layer outputs poses a significant challenge for malicious eavesdroppers. Moreover, $\Lambda$-Split is orthogonal to traditional encryption-based security mechanisms, offering enhanced security when deployed in conjunction. We empirically validate the efficacy of the $\Lambda$-Split framework using Llama 2 and Stable Diffusion XL, representative large language and diffusion models developed by Meta and Stability AI, respectively. Our $\Lambda$-Split implementation is publicly accessible at https://github.com/nishio-laboratory/lambda_split.
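The three-way partition described in the abstract can be sketched with a toy layer stack. This is only an illustration under stated assumptions: the six affine-plus-ReLU layers, the split indices, and the function names (`local_head`, `cloud_middle`, `local_tail`) are hypothetical and are not taken from the linked implementation.

```python
from functools import reduce
from typing import Callable, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def run(layers: Sequence[Layer], x: Vector) -> Vector:
    """Apply the given layers in order to the input vector."""
    return reduce(lambda h, layer: layer(h), layers, x)


def make_layer(scale: float, shift: float) -> Layer:
    """Toy stand-in for a DNN layer: element-wise affine map, then ReLU."""
    return lambda h: [max(0.0, scale * v + shift) for v in h]


# Hypothetical 6-layer model standing in for a large generative model.
model: List[Layer] = [make_layer(1.0 + 0.1 * i, 0.05 * i) for i in range(6)]

# Assumed split points: the first and last layer stay on the local device,
# the four layers in between are offloaded.
head, middle, tail = model[:1], model[1:5], model[5:]


def local_head(raw_input: Vector) -> Vector:
    """Runs on the device; only its hidden output leaves the device."""
    return run(head, raw_input)


def cloud_middle(hidden: Vector) -> Vector:
    """Runs on the cloud server; sees hidden activations only."""
    return run(middle, hidden)


def local_tail(hidden: Vector) -> Vector:
    """Runs on the device; produces the final output locally."""
    return run(tail, hidden)


def split_inference(raw_input: Vector) -> Vector:
    uplink = local_head(raw_input)   # device -> cloud
    downlink = cloud_middle(uplink)  # cloud -> device
    return local_tail(downlink)
```

Because the three segments are applied in the original layer order, `split_inference` returns exactly what running the whole `model` would, while the raw input and the final output never cross the device boundary in this sketch.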

Tram-FL: Routing-based Model Training for Decentralized Federated Learning

Aug 09, 2023

Abstract:In decentralized federated learning (DFL), substantial traffic from frequent inter-node communication and non-independent and identically distributed (non-IID) data challenges high-accuracy model acquisition. We propose Tram-FL, a novel DFL method, which progressively refines a global model by transferring it sequentially amongst nodes, rather than by exchanging and aggregating local models. We also introduce a dynamic model routing algorithm for optimal route selection, aimed at enhancing model precision with minimal forwarding. Our experiments using MNIST, CIFAR-10, and IMDb datasets demonstrate that Tram-FL with the proposed routing delivers high model accuracy under non-IID conditions, outperforming baselines while reducing communication costs.
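The core idea of passing a single model from node to node, rather than averaging local models, can be sketched on a one-parameter regression problem. The fixed round-robin `route`, the toy data, and the helper names are assumptions for illustration; the paper's dynamic routing algorithm is not reproduced here.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair


def local_sgd(w: float, data: List[Sample], lr: float = 0.05,
              epochs: int = 5) -> float:
    """Train the scalar model y = w * x on one node's data with plain SGD."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2.0 * (w * x - y) * x  # derivative of squared error
            w -= lr * grad
    return w


def mse(w: float, data: List[Sample]) -> float:
    """Mean squared error of the scalar model on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


# Toy non-IID setup: every node only sees a different range of x (y = 3x).
nodes: List[List[Sample]] = [
    [(0.1, 0.3), (0.2, 0.6)],
    [(0.5, 1.5), (0.6, 1.8)],
    [(0.9, 2.7), (1.0, 3.0)],
]

# Assumed fixed route (node indices visited in order), two full laps.
route = [0, 1, 2, 0, 1, 2]


def circulate(w0: float, node_data: List[List[Sample]],
              visit_order: List[int]) -> float:
    """Forward one model along the route, training it at each visited node."""
    w = w0
    for node_id in visit_order:
        w = local_sgd(w, node_data[node_id])
    return w


w_final = circulate(0.0, nodes, route)
all_data = [sample for node in nodes for sample in node]
```

Only one model travels per hop, so the traffic in this sketch is one model transfer per route entry; how the route should be chosen to balance accuracy against forwarding cost is the question the abstract's routing algorithm addresses.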

Trans-Inpainter: A Transformer Model for High Accuracy Image Inpainting from Channel State Information

May 09, 2023

Abstract:Radio Frequency (RF) signal-based multimodal image inpainting has recently emerged as a promising paradigm for distortion-free image restoration. It integrates wireless and visual information from the same physical environment and has potential applications in fields such as security and surveillance systems. In this paper, we aim to implement an RF-based image inpainting system that enables image restoration in a complex environment while maintaining high robustness and accuracy. This requires accurately converting RF signals into meaningful visual information and overcoming the challenges of RF signals in complex environments, such as multipath interference, signal attenuation, and noise. To tackle this problem, we propose Trans-Inpainter, a novel image inpainting method that utilizes the Channel State Information (CSI) of WiFi signals in combination with transformer networks to generate high-quality reconstructed images. This approach is the first to use CSI for image inpainting, which allows for extracting visual information from WiFi signals to fill in missing regions in images. To further improve Trans-Inpainter's performance, we investigate the impact of variations in CSI data on RF-based imaging ability, i.e., we analyze how the location of the CSI sensors, the combination of CSI from different sensors, and changes in the temporal or frequency dimensions of the CSI matrix affect the imaging quality. We compare the performance of Trans-Inpainter with RF-Inpainter, the state-of-the-art technology for RF-based multimodal image inpainting, under more realistic experimental scenarios, and with single-modality image inpainting models when only RF or image data is available, respectively. The results show that Trans-Inpainter outperforms other baseline methods in all cases.

Point Cloud-based Proactive Link Quality Prediction for Millimeter-wave Communications

Jan 02, 2023

Abstract:This study demonstrates the feasibility of point cloud-based proactive link quality prediction for millimeter-wave (mmWave) communications. Previous work has proposed image-based methods that apply machine learning to time series of depth images to quantitatively and deterministically predict future received signal strength, mitigating human-body blockage of the line-of-sight (LOS) path in mmWave communications. However, image-based methods have been limited in applicable environments because camera images may contain private information. Thus, this study demonstrates the feasibility of using point clouds obtained from light detection and ranging (LiDAR) for the mmWave link quality prediction. Point clouds represent three-dimensional (3D) spaces as a set of points and are sparser and less likely to contain sensitive information than camera images. Additionally, point clouds provide 3D position and motion information, which is necessary for understanding the radio propagation environment involving pedestrians. This study designs the mmWave link quality prediction method and conducts two experimental evaluations using different types of point clouds obtained from LiDAR and depth cameras, as well as different numerical indicators of link quality, received signal strength and throughput. These experiments show that our proposed method can predict future large attenuation of mmWave link quality due to LOS blockage by human bodies; therefore, our point cloud-based method can be an alternative to image-based methods.

Neural Architecture Search for Improving Latency-Accuracy Trade-off in Split Computing

Aug 30, 2022

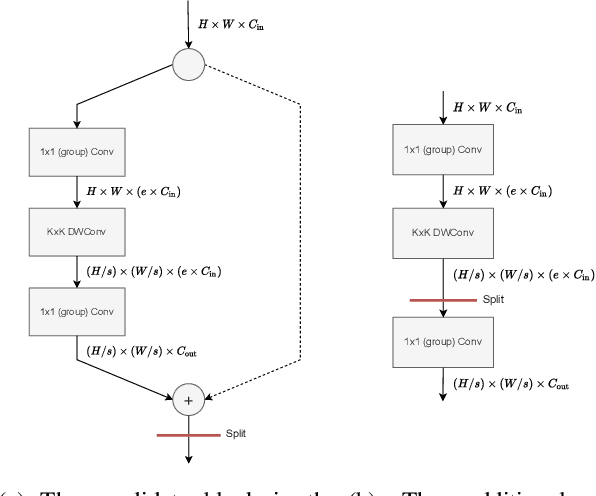

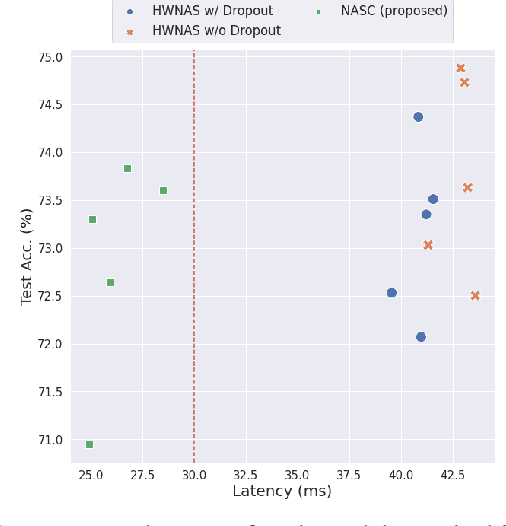

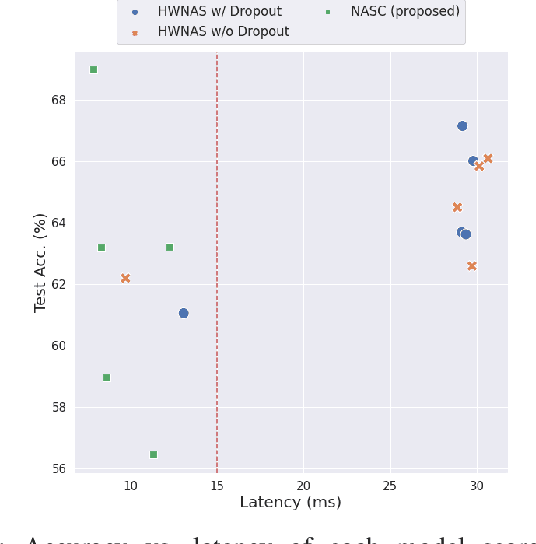

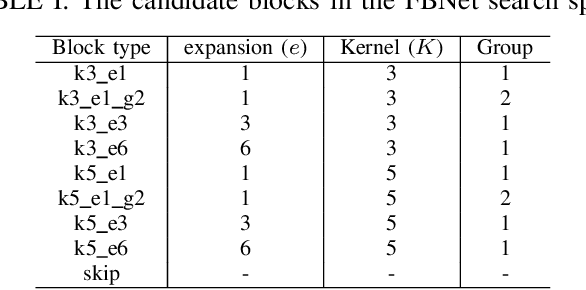

Abstract:This paper proposes a neural architecture search (NAS) method for split computing. Split computing is an emerging machine-learning inference technique that addresses the privacy and latency challenges of deploying deep learning in IoT systems. In split computing, neural network models are separated and cooperatively processed using edge servers and IoT devices via networks. Thus, the architecture of the neural network model significantly impacts the communication payload size, model accuracy, and computational load. In this paper, we address the challenge of optimizing neural network architecture for split computing. To this end, we propose NASC, which jointly explores optimal model architecture and a split point to achieve higher accuracy while meeting latency requirements (i.e., smaller total latency of computation and communication than a certain threshold). NASC employs a one-shot NAS that does not require repeating model training for a computationally efficient architecture search. Our performance evaluation using the hardware (HW)-NAS-Bench benchmark data demonstrates that the proposed NASC can improve the trade-off between communication latency and model accuracy, i.e., reduce the latency by approximately 40-60% from the baseline, with slight accuracy degradation.
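The latency requirement stated in the abstract, total computation plus communication latency below a threshold, can be made concrete with a small selection sketch. It uses exhaustive filtering over a hand-written candidate list rather than one-shot NAS, and every number, field name, and the `select_candidate` helper is a hypothetical placeholder rather than a value from the paper or from HW-NAS-Bench.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    """One (architecture, split point) pair with made-up measurements."""
    name: str
    split_point: int       # index of the layer after which the model is cut
    accuracy: float        # validation accuracy in [0, 1]
    device_ms: float       # computation latency on the IoT device
    server_ms: float       # computation latency on the edge server
    payload_bytes: int     # size of the intermediate output to transmit


def total_latency_ms(c: Candidate, bandwidth_bytes_per_ms: float) -> float:
    """Computation latency on both sides plus communication latency."""
    communication_ms = c.payload_bytes / bandwidth_bytes_per_ms
    return c.device_ms + communication_ms + c.server_ms


def select_candidate(candidates: List[Candidate], threshold_ms: float,
                     bandwidth_bytes_per_ms: float) -> Optional[Candidate]:
    """Return the most accurate candidate that meets the latency threshold,
    or None when no candidate is feasible."""
    feasible = [c for c in candidates
                if total_latency_ms(c, bandwidth_bytes_per_ms) <= threshold_ms]
    return max(feasible, key=lambda c: c.accuracy) if feasible else None


# Hypothetical candidates; a real search space would be far larger.
candidates = [
    Candidate("arch-A", 6, 0.93, 12.0, 5.0, 40_000),
    Candidate("arch-B", 4, 0.91, 8.0, 6.0, 16_000),
    Candidate("arch-C", 2, 0.89, 4.0, 7.0, 8_000),
]

best = select_candidate(candidates, threshold_ms=35.0,
                        bandwidth_bytes_per_ms=1000.0)
```

With these placeholder numbers, the most accurate candidate is excluded because its payload makes the communication term too large, which is the kind of interaction between architecture and split point that motivates searching for both jointly.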
