Cross-Attention End-to-End ASR for Two-Party Conversations

Jul 24, 2019 · Suyoun Kim, Siddharth Dalmia, Florian Metze

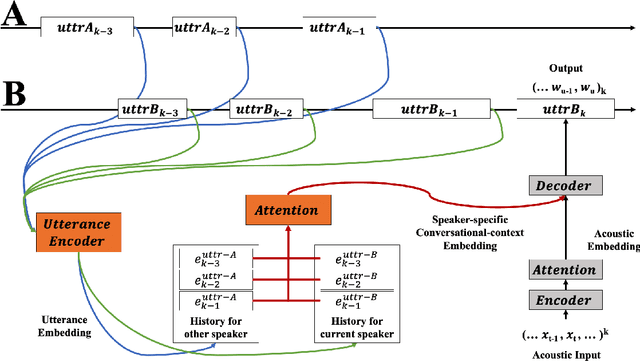

We present an end-to-end speech recognition model that learns the interaction between two speakers based on turn-change information. Unlike conventional speech recognition models, our model exploits both speakers' conversational-context history across multiple turns within an end-to-end framework. Specifically, we propose a speaker-specific cross-attention mechanism that attends to the outputs of the other speaker as well as those of the current speaker, in order to better recognize long conversations. We evaluate the models on the Switchboard conversational speech corpus and show that our model outperforms standard end-to-end speech recognition models.
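To make the cross-attention idea concrete, here is a minimal sketch, assuming plain dot-product attention and assuming each speaker's history is a list of fixed-size vectors. The abstract does not specify the scoring function, the shapes, or how the two context vectors are merged; concatenation and all function names here are hypothetical choices for illustration, not the authors' implementation.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, history):
    # Dot-product attention: score each history vector against the query,
    # then return the attention-weighted sum of the history vectors.
    scores = [sum(q * h for q, h in zip(query, vec)) for vec in history]
    weights = softmax(scores)
    dim = len(history[0])
    return [sum(w * vec[i] for w, vec in zip(weights, history)) for i in range(dim)]


def cross_attention_context(query, own_history, other_history):
    # Hypothetical speaker-specific cross-attention: one attention pass over the
    # current speaker's previous outputs and one over the other speaker's,
    # concatenated into a single conversational-context vector.
    return attend(query, own_history) + attend(query, other_history)


# Usage: a 2-dimensional decoder query and toy histories for each speaker.
query = [1.0, 0.0]
own_history = [[1.0, 0.0], [0.0, 1.0]]
other_history = [[0.5, 0.5]]
context = cross_attention_context(query, own_history, other_history)
print(context)  # own-speaker context (2 values) followed by other-speaker context (2 values)
```

Keeping the two attention passes separate lets the decoder weigh the other speaker's turns independently of its own, which is the distinction the speaker-specific design draws.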

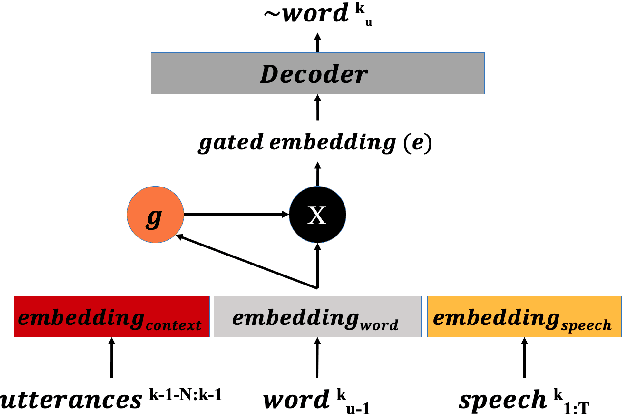

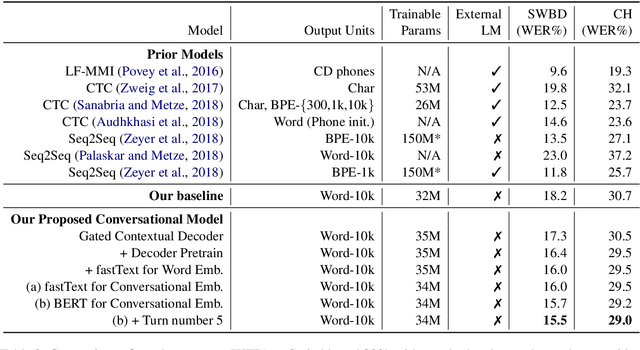

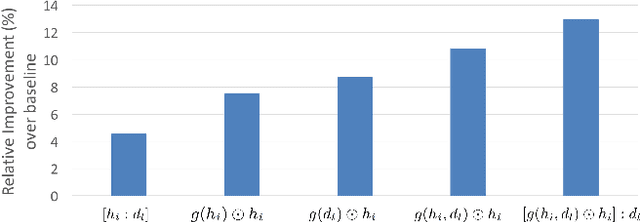

Gated Embeddings in End-to-End Speech Recognition for Conversational-Context Fusion

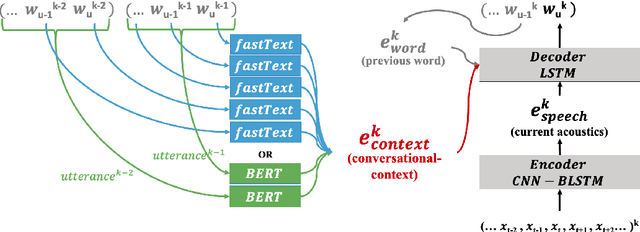

Jun 27, 2019 · Suyoun Kim, Siddharth Dalmia, Florian Metze

We present a novel conversational-context aware end-to-end speech recognizer based on a gated neural network that incorporates conversational-context, word, and speech embeddings. Unlike conventional speech recognition models, our model learns longer conversational-context information that spans sentences and is consequently better at recognizing long conversations. Specifically, we propose to use text-based external word and/or sentence embeddings (e.g., fastText, BERT) within an end-to-end framework, yielding a significant improvement in word error rate through a better conversational-context representation. We evaluate the models on the Switchboard conversational speech corpus and show that our model outperforms standard end-to-end speech recognition models.
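One way to picture a gated fusion of a speech-side state with an external context embedding is sketched below. It assumes a sigmoid gate computed from the concatenation of the two vectors and applied element-wise to the context embedding; the gate form, weights, and function names are hypothetical and are not taken from the paper.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vec):
    # Plain matrix-vector product; matrix is a list of rows.
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def gated_fusion(speech_state, context_emb, gate_weights, gate_bias):
    # Hypothetical gate: g = sigmoid(W [speech_state; context_emb] + b),
    # producing one gate value per context-embedding dimension.
    joint = speech_state + context_emb  # list concatenation
    gate = [sigmoid(z + b) for z, b in zip(matvec(gate_weights, joint), gate_bias)]
    # Element-wise gating controls how much of the context embedding passes through.
    gated_context = [g * c for g, c in zip(gate, context_emb)]
    # The fused vector (speech state plus gated context) would feed the decoder.
    return speech_state + gated_context


# Usage: zero gate weights give g = 0.5 everywhere, halving the context embedding.
speech_state = [1.0, -1.0]
context_emb = [2.0, 4.0]  # e.g. a hypothetical sentence embedding of previous turns
gate_weights = [[0.0] * 4, [0.0] * 4]
gate_bias = [0.0, 0.0]
fused = gated_fusion(speech_state, context_emb, gate_weights, gate_bias)
print(fused)  # [1.0, -1.0, 1.0, 2.0]
```

A learned gate lets the model suppress the context embedding when it is uninformative instead of always adding it at full strength.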

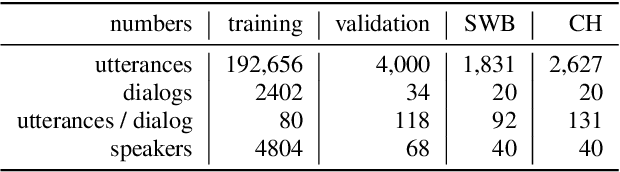

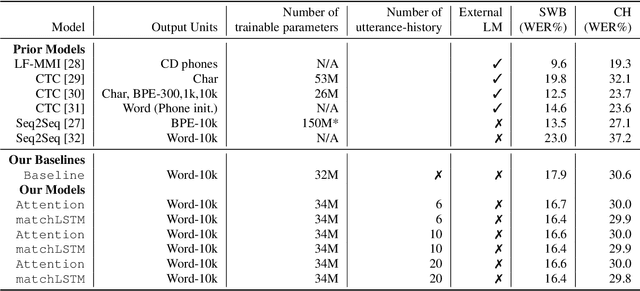

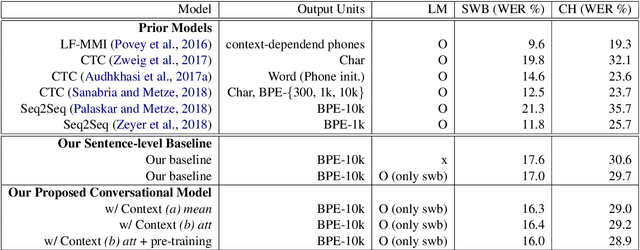

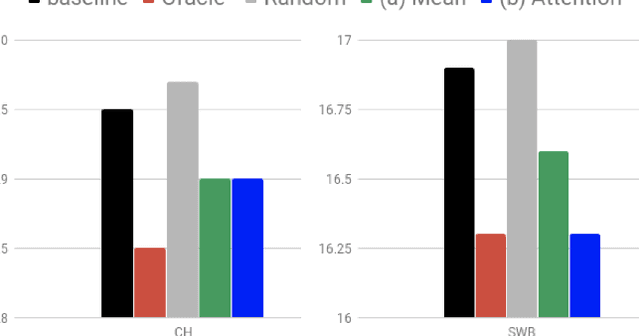

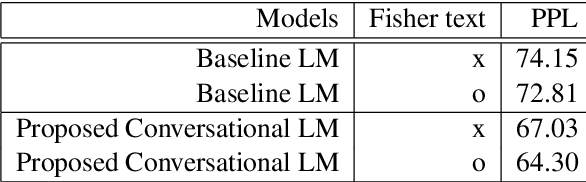

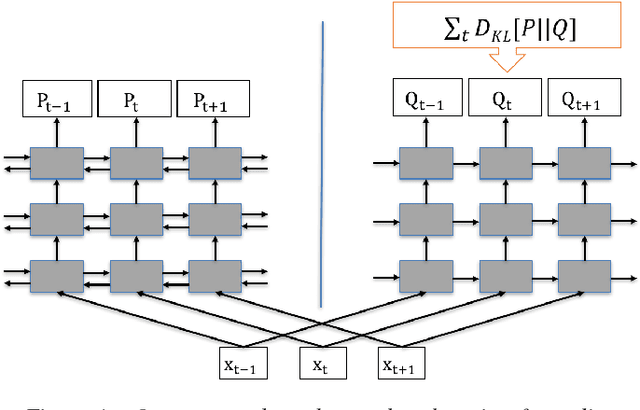

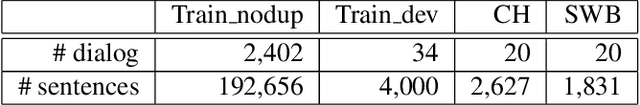

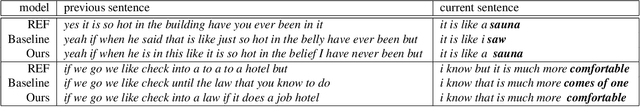

Acoustic-to-Word Models with Conversational Context Information

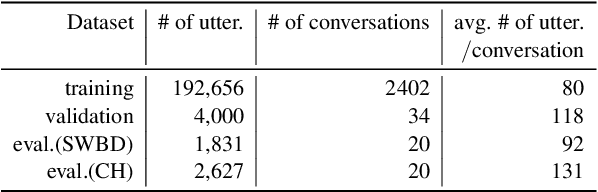

May 21, 2019 · Suyoun Kim, Florian Metze

Conversational context information, i.e., higher-level knowledge that spans sentences, can help to recognize a long conversation. However, existing speech recognition models are typically built at the sentence level and thus may fail to capture important conversational context information. Recent progress in end-to-end speech recognition enables integrating context with other available information (e.g., acoustic and linguistic resources) and directly recognizing words from speech. In this work, we present a direct acoustic-to-word, end-to-end speech recognition model capable of utilizing the conversational context to better process long conversations. We evaluate our proposed approach on the Switchboard conversational speech corpus and show that our system outperforms a standard end-to-end speech recognition system.

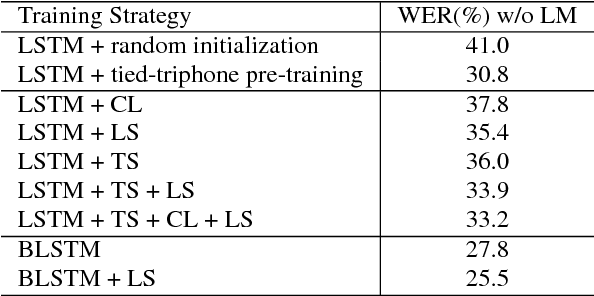

Improved training for online end-to-end speech recognition systems

Aug 30, 2018 · Suyoun Kim, Michael L. Seltzer, Jinyu Li, Rui Zhao

Achieving high accuracy with end-to-end speech recognizers requires careful parameter initialization prior to training; otherwise, the networks may fail to find a good local optimum. This is particularly true for online networks, such as unidirectional LSTMs. Currently, the best strategy to train such systems is to bootstrap the training from a tied-triphone system. However, this is time-consuming and, more importantly, impossible for languages without a high-quality pronunciation lexicon. In this work, we propose an initialization strategy that uses teacher-student learning to transfer knowledge from a large, well-trained, offline end-to-end speech recognition model to an online end-to-end model, eliminating the need for a lexicon or any other linguistic resources. We also explore curriculum learning and label smoothing and show how they can be combined with the proposed teacher-student learning for further improvements. We evaluate our methods on a Microsoft Cortana personal assistant task and show that the proposed method results in a 19% relative improvement in word error rate compared to a randomly initialized baseline system.
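The two training signals named above can be sketched at the level of a single output distribution. This assumes teacher-student learning is expressed as cross-entropy against the teacher's soft posteriors and label smoothing as mixing a one-hot target with a uniform distribution; the abstract does not say how the paper combines them, and the function names and `epsilon` default are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, student_logits):
    # -sum_k p_target(k) * log p_student(k), using a stable log-softmax.
    m = max(student_logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in student_logits))
    return -sum(p * (x - log_z) for p, x in zip(target_probs, student_logits))


def teacher_student_loss(teacher_logits, student_logits):
    # The offline teacher's posteriors serve as soft targets for the online student.
    return cross_entropy(softmax(teacher_logits), student_logits)


def smoothed_targets(label, num_classes, epsilon=0.1):
    # Label smoothing: mix the one-hot target with a uniform distribution.
    uniform = epsilon / num_classes
    return [(1.0 - epsilon) + uniform if k == label else uniform
            for k in range(num_classes)]


# Usage: a student that matches the teacher incurs a lower loss than one that does not.
teacher = [2.0, 0.0, -1.0]
matched = teacher_student_loss(teacher, [2.0, 0.0, -1.0])
mismatched = teacher_student_loss(teacher, [-1.0, 0.0, 2.0])
print(matched < mismatched)  # True
print(smoothed_targets(1, 4))  # close to [0.025, 0.925, 0.025, 0.025]
```

Because cross-entropy against a fixed target is minimized when the student reproduces that target, the student is pulled toward the teacher's full posterior rather than only its top label.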

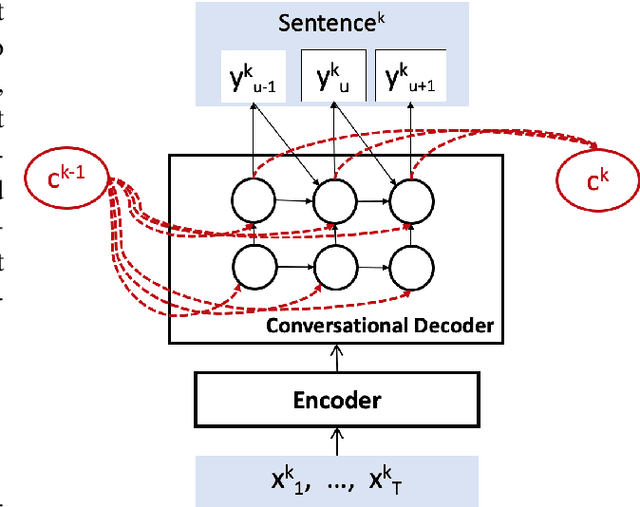

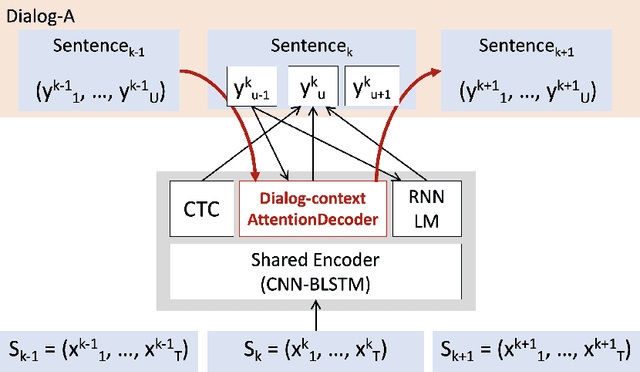

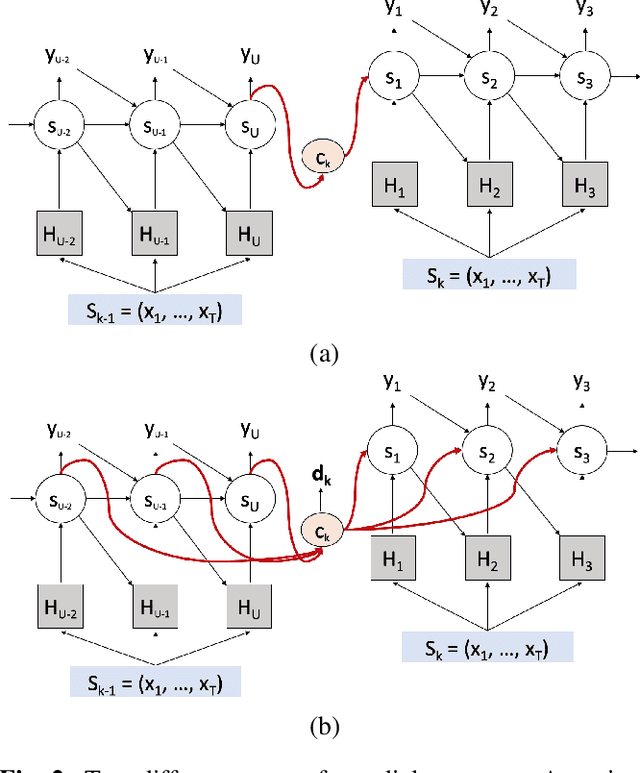

Dialog-context aware end-to-end speech recognition

Aug 07, 2018 · Suyoun Kim, Florian Metze

Existing speech recognition systems are typically built at the sentence level, although it is known that dialog context, i.e., higher-level knowledge that spans sentences or speakers, can help the processing of long conversations. Recent progress in end-to-end speech recognition systems promises to integrate all available information (e.g., acoustic and language resources) into a single model, which is then jointly optimized. It seems natural that such dialog context information should also be integrated into end-to-end models to further improve recognition accuracy. In this work, we present a dialog-context aware speech recognition model, which explicitly uses context information beyond the sentence level, in an end-to-end fashion. Our dialog-context model captures a history of sentence-level context so that the whole system can be trained with dialog-context information in an end-to-end manner. We evaluate our proposed approach on the Switchboard conversational speech corpus and show that our system outperforms a comparable sentence-level end-to-end speech recognition system.

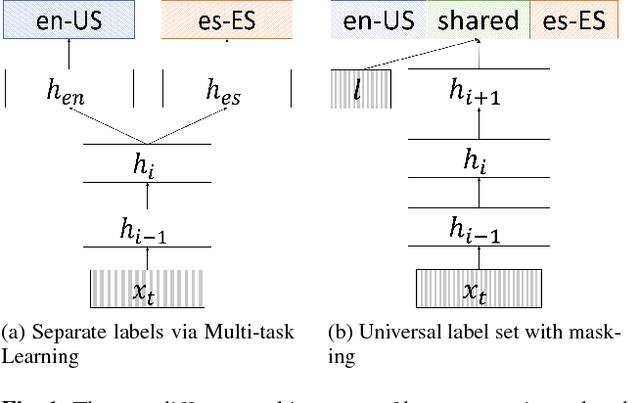

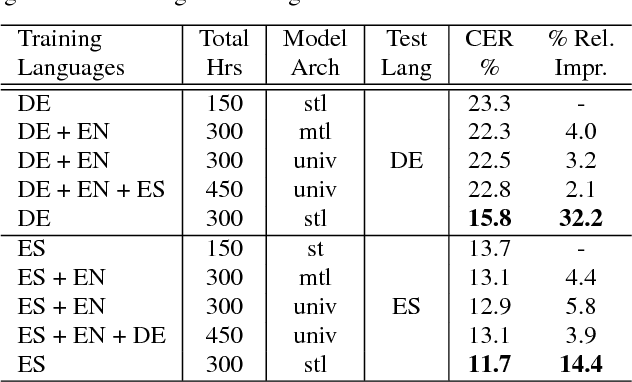

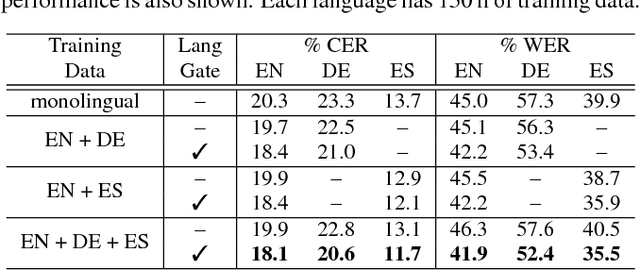

Towards Language-Universal End-to-End Speech Recognition

Nov 06, 2017 · Suyoun Kim, Michael L. Seltzer

Building speech recognizers in multiple languages typically involves replicating a monolingual training recipe for each language, or utilizing a multi-task learning approach where models for different languages have separate output labels but share some internal parameters. In this work, we exploit recent progress in end-to-end speech recognition to create a single multilingual speech recognition system capable of recognizing any of the languages seen in training. To do so, we propose the use of a universal character set that is shared among all languages. We also create a language-specific gating mechanism within the network that can modulate the network's internal representations in a language-specific way. We evaluate our proposed approach on the Microsoft Cortana task across three languages and show that our system outperforms both the individual monolingual systems and systems built with a multi-task learning approach. We also show that this model can be used to initialize a monolingual speech recognizer, and can be used to create a bilingual model for use in code-switching scenarios.
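A language-specific gate can be sketched as a sigmoid that depends on both the hidden vector and a one-hot language ID, multiplied element-wise into the hidden vector. The gate form below, `g = sigmoid(U h + V v + b)`, is an assumption for illustration; the parameter names `U`, `V`, `b` and the toy values are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]


def language_gate(hidden, lang_id, num_langs, U, V, b):
    # Hypothetical gate: g = sigmoid(U h + V v + b), with v a one-hot language vector.
    v = one_hot(lang_id, num_langs)
    gated = []
    for h_i, row_u, row_v, b_i in zip(hidden, U, V, b):
        z = sum(u * h for u, h in zip(row_u, hidden))
        z += sum(w * x for w, x in zip(row_v, v)) + b_i
        # Element-wise modulation of the shared hidden representation.
        gated.append(sigmoid(z) * h_i)
    return gated


# Usage: V routes hidden unit 0 to language 0 and unit 1 to language 1.
hidden = [1.0, 1.0]
U = [[0.0, 0.0], [0.0, 0.0]]
V = [[20.0, -20.0], [-20.0, 20.0]]
b = [0.0, 0.0]
print(language_gate(hidden, 0, 2, U, V, b))  # roughly [1.0, 0.0]
print(language_gate(hidden, 1, 2, U, V, b))  # roughly [0.0, 1.0]
```

The shared network weights stay the same for every language; only the gate values change with the language vector, which is what lets one model modulate its internal representations per language.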

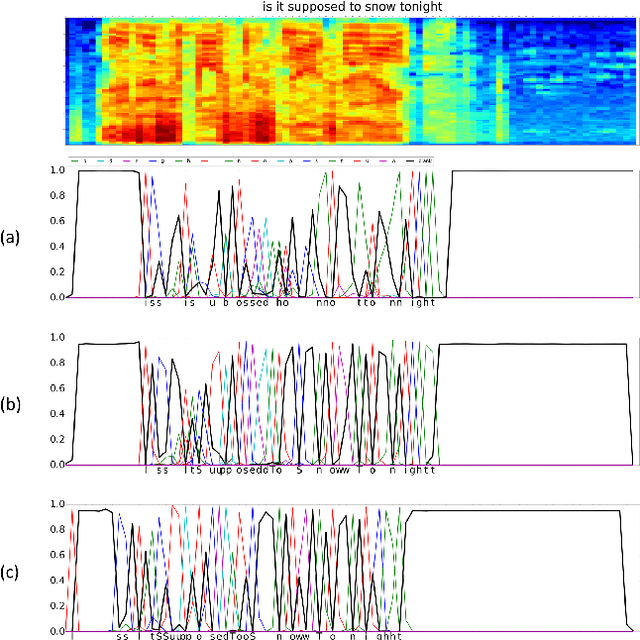

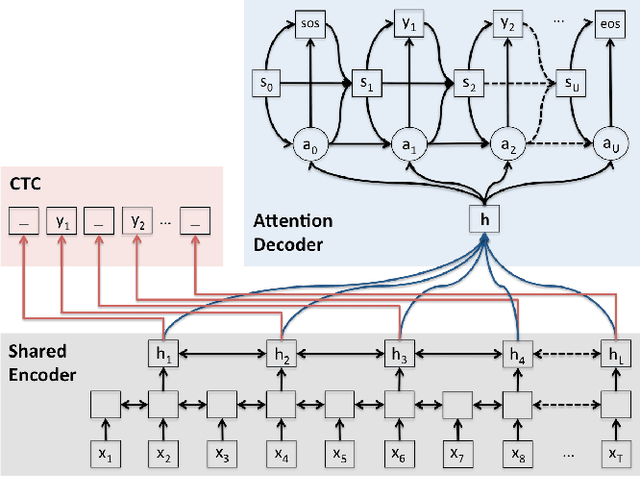

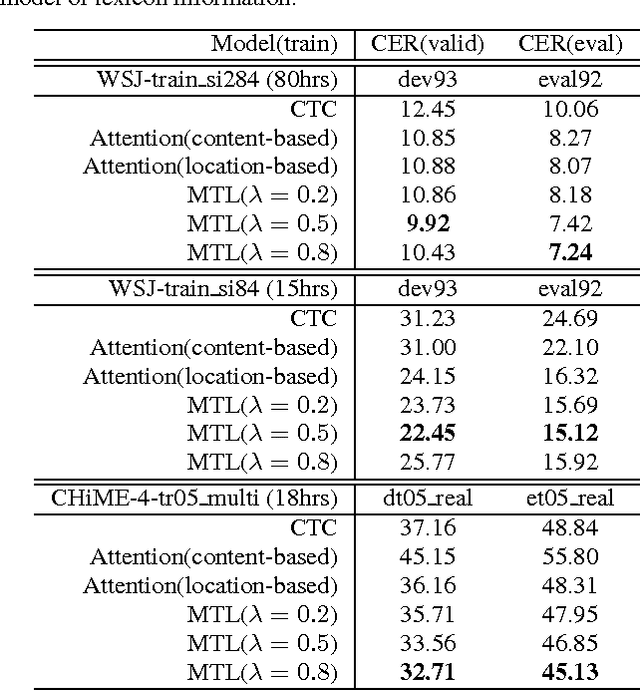

Joint CTC-Attention based End-to-End Speech Recognition using Multi-task Learning

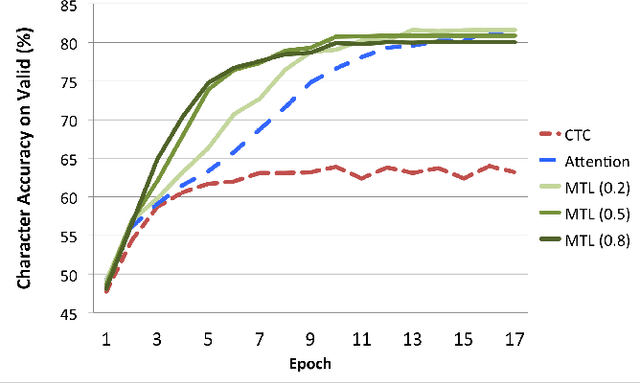

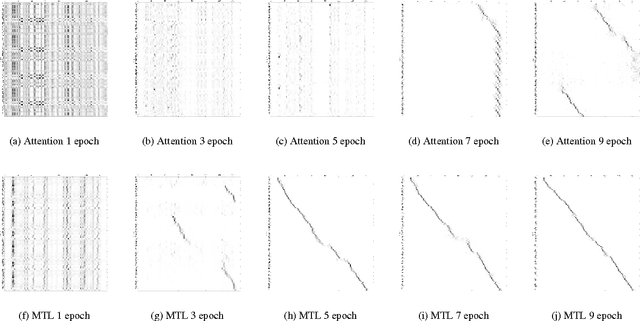

Jan 31, 2017 · Suyoun Kim, Takaaki Hori, Shinji Watanabe

Recently, there has been increasing interest in end-to-end speech recognition that directly transcribes speech to text without any predefined alignments. One approach is the attention-based encoder-decoder framework, which learns a mapping between variable-length input and output sequences in one step using a purely data-driven method. The attention model has often been shown to outperform another end-to-end approach, Connectionist Temporal Classification (CTC), mainly because it explicitly uses the history of the target characters without any conditional independence assumptions. However, we observed that the attention model performs poorly in noisy conditions and is hard to train in the initial stage with long input sequences. This is because the attention model is too flexible to predict proper alignments in such cases, lacking the left-to-right constraints used in CTC. This paper presents a novel method for end-to-end speech recognition that improves robustness and achieves fast convergence by using a joint CTC-attention model within the multi-task learning framework, thereby mitigating the alignment issue. Experiments on the WSJ and CHiME-4 tasks demonstrate its advantages over both the CTC and attention-based encoder-decoder baselines, showing 5.4-14.6% relative improvements in Character Error Rate (CER).
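The multi-task idea can be summarized as an interpolation of the two losses computed on a shared encoder. The notation below is ours: X denotes the input features, C the target character sequence, and λ a tunable weight.

```latex
\mathcal{L}_{\mathrm{MTL}}
  = \lambda \, \mathcal{L}_{\mathrm{CTC}} + (1 - \lambda) \, \mathcal{L}_{\mathrm{Attention}},
  \qquad 0 \le \lambda \le 1,
\\[4pt]
\mathcal{L}_{\mathrm{CTC}} = -\log p_{\mathrm{CTC}}(C \mid X),
\qquad
\mathcal{L}_{\mathrm{Attention}} = -\log p_{\mathrm{Attention}}(C \mid X)
```

Setting λ = 0 recovers the attention-only model and λ = 1 the CTC-only model; intermediate values let the CTC branch's monotonic, left-to-right alignment constrain the shared encoder during training.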

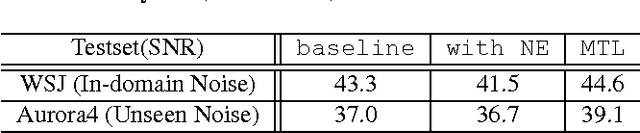

Environmental Noise Embeddings for Robust Speech Recognition

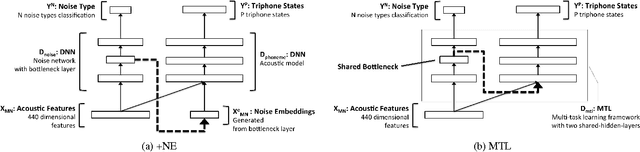

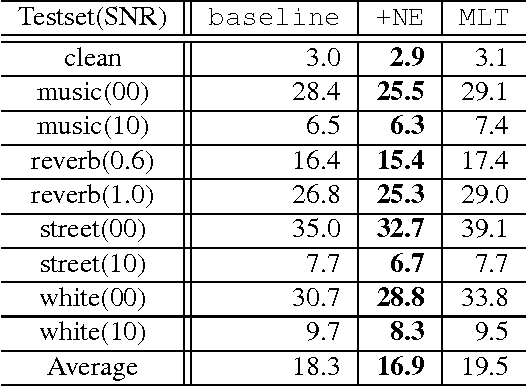

Sep 29, 2016 · Suyoun Kim, Bhiksha Raj, Ian Lane

We propose a novel deep neural network architecture for speech recognition that explicitly employs knowledge of the background environmental noise within a deep neural network acoustic model. A deep neural network is used to predict the acoustic environment in which the system is being used. The discriminative embedding generated at the bottleneck layer of this network is then concatenated with traditional acoustic features as input to a deep neural network acoustic model. Through a series of experiments on Resource Management, the CHiME-3 task, and Aurora4, we show that the proposed approach significantly improves speech recognition accuracy in noisy and highly reverberant environments, outperforming multi-condition training, noise-aware training, the i-vector framework, and multi-task learning on both in-domain and unseen noise.
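The input construction can be sketched per frame: a bottleneck layer produces a noise embedding, which is appended to the acoustic features before they reach the acoustic model. Here a single hypothetical tanh layer stands in for the bottleneck of the (deeper) environment-classification network, and its weights are toy values rather than trained parameters.

```python
import math


def dense(weights, bias, x):
    # One fully connected tanh layer: tanh(W x + b).
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def noise_embedding(features, bottleneck_w, bottleneck_b):
    # Hypothetical stand-in for the bottleneck layer of a network trained to
    # classify the acoustic environment; only this layer's output is kept.
    return dense(bottleneck_w, bottleneck_b, features)


def acoustic_model_input(features, bottleneck_w, bottleneck_b):
    # Append the noise embedding to the frame's acoustic features.
    return features + noise_embedding(features, bottleneck_w, bottleneck_b)


# Usage: a 3-dimensional feature frame and a 2-unit bottleneck.
frame = [0.5, -0.5, 1.0]
bottleneck_w = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
bottleneck_b = [0.0, 0.0]
augmented = acoustic_model_input(frame, bottleneck_w, bottleneck_b)
print(len(augmented))  # 5
```

Since the acoustic model only sees a longer input vector, this kind of augmentation leaves its architecture unchanged apart from the input dimension.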

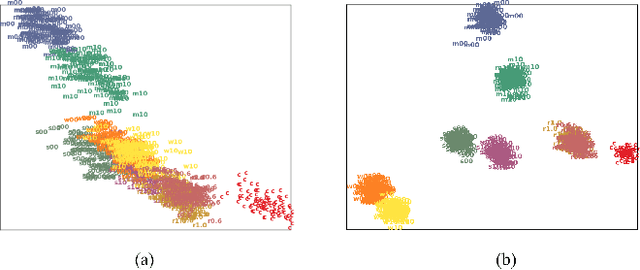

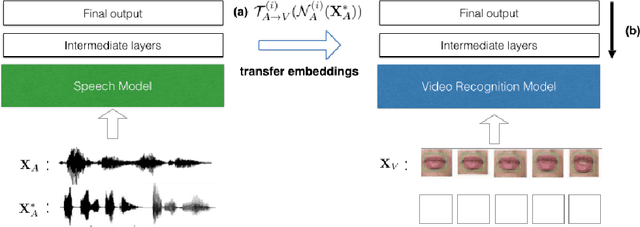

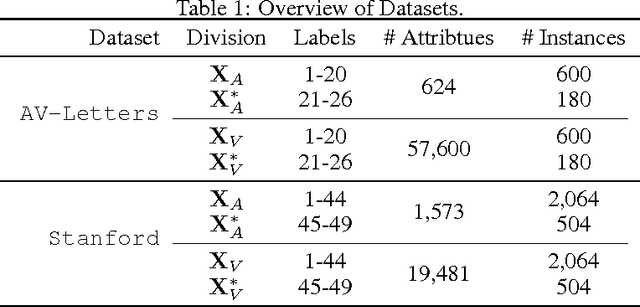

Multimodal Transfer Deep Learning with Applications in Audio-Visual Recognition

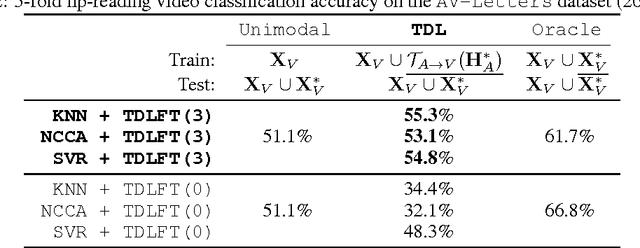

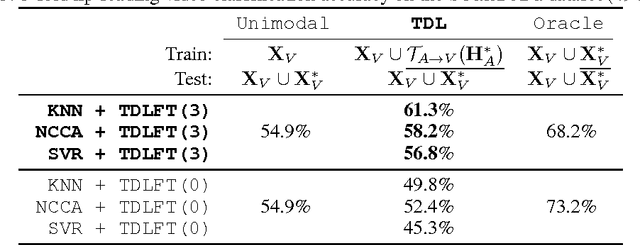

Feb 18, 2016 · Seungwhan Moon, Suyoun Kim, Haohan Wang

We propose a transfer deep learning (TDL) framework that can transfer the knowledge obtained from a single-modal neural network to a network with a different modality. Specifically, we show that we can leverage speech data to fine-tune the network trained for video recognition, given an initial audio-video parallel dataset that shares the same semantics. Our approach first learns analogy-preserving embeddings between the abstract representations learned from intermediate layers of each network, allowing for semantics-level transfer between the source and target modalities. We then apply our neural network operation, which fine-tunes the target network with the additional knowledge transferred from the source network while keeping the topology of the target network unchanged. While we present an audio-visual recognition task as an application of our approach, our framework is flexible and can work with any multimodal dataset, or with any existing deep networks that share common underlying semantics. In this work-in-progress report, we aim to provide comprehensive results of different configurations of the proposed approach on two widely used audio-visual datasets, and we discuss potential applications of the proposed approach.

Recurrent Models for Auditory Attention in Multi-Microphone Distance Speech Recognition

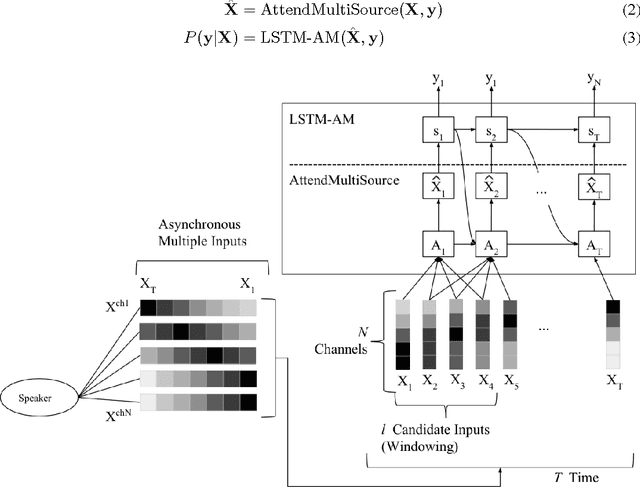

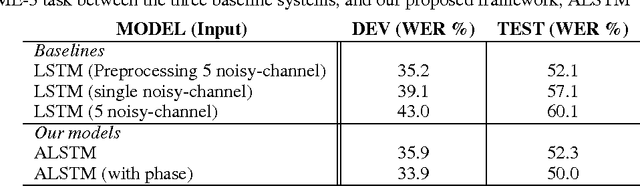

Jan 07, 2016 · Suyoun Kim, Ian Lane

Integration of multiple microphone data is one of the key ways to achieve robust speech recognition in noisy environments or when the speaker is located at some distance from the input device. Signal processing techniques such as beamforming are widely used to extract a speech signal of interest from background noise. These techniques, however, are highly dependent on prior spatial information about the microphones and the environment in which the system is being used. In this work, we present a neural attention network that directly combines multi-channel audio to generate phonetic states without requiring any prior knowledge of the microphone layout or any explicit signal preprocessing for speech enhancement. We embed an attention mechanism within a Recurrent Neural Network (RNN) based acoustic model to automatically tune its attention to a more reliable input source. Unlike traditional multi-channel preprocessing, our system can be optimized towards the desired output in one step. Although attention-based models have recently achieved impressive results on sequence-to-sequence learning, no attention mechanism has previously been applied to learning from multiple, potentially asynchronous and non-stationary inputs. We evaluate our neural attention model on the CHiME-3 challenge task and show that the model achieves performance comparable to beamforming using a purely data-driven method.
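The channel-selection idea can be sketched as a softmax over microphones at each frame, with the weighted sum of channel features passed on and fed back as state for the next frame. The scorer here, a dot product of a fixed `score_vector` plus the previous output with each channel's features, is a hypothetical simplification standing in for the learned RNN-based attention; it is not the paper's model.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def combine_channels(channel_frames, score_vector, state):
    # channel_frames: one feature vector per microphone for the current frame.
    # Hypothetical scorer: dot(score_vector + state, features), a stand-in for
    # the learned recurrent attention scorer.
    scores = [sum((s + h) * f for s, h, f in zip(score_vector, state, feats))
              for feats in channel_frames]
    weights = softmax(scores)
    dim = len(channel_frames[0])
    combined = [sum(w * feats[i] for w, feats in zip(weights, channel_frames))
                for i in range(dim)]
    return combined, weights


def attend_over_time(frames, score_vector):
    # frames[t] holds the per-channel feature vectors at time t.
    state = [0.0] * len(score_vector)
    outputs = []
    for channel_frames in frames:
        combined, _ = combine_channels(channel_frames, score_vector, state)
        outputs.append(combined)
        state = combined  # feed back so later weights depend on earlier frames
    return outputs


# Usage: two microphones, two frames; the scorer favors large values in dimension 0.
frames = [
    [[1.0, 0.0], [0.0, 0.0]],  # t=0: channel 0 carries the signal
    [[0.0, 1.0], [1.0, 0.0]],  # t=1: channel 1 carries the signal
]
outputs = attend_over_time(frames, [10.0, 0.0])
print(outputs)
```

Because the weights are recomputed at every frame, the combination can shift between microphones over time without any knowledge of their physical layout.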
