Stephan Sigg

Low-Power On-Device Gesture Recognition with Einsum Networks

Jan 23, 2026

Abstract: We design a gesture-recognition pipeline for networks of distributed, resource-constrained devices using Einsum Networks. Einsum Networks are probabilistic circuits that offer tractable inference, explainability, and energy efficiency. The system is validated in a scenario of low-power, body-worn, passive Radio Frequency Identification (RFID)-based gesture recognition. Each constrained device includes task-specific processing units responsible for Received Signal Strength (RSS) and phase processing or Angle of Arrival (AoA) estimation, along with feature extraction, as well as dedicated Einsum hardware that processes the extracted features. The outputs of all constrained devices are then fused in a decision aggregation module to predict gestures. Experimental results demonstrate that the method outperforms the benchmark models.
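The abstract does not say how the decision aggregation module combines the per-device outputs. As a minimal sketch, assuming each device emits a class-probability vector and that devices are treated as conditionally independent, the outputs could be fused by summing log-probabilities. The function name `fuse_decisions` and the fusion rule are illustrative assumptions, not the authors' method.

```python
import math


def fuse_decisions(device_probs):
    """Fuse per-device class probabilities by summing log-probabilities.

    device_probs: list of probability vectors, one per device (hypothetical input).
    Returns (predicted_class_index, fused_probabilities).
    """
    n_classes = len(device_probs[0])
    eps = 1e-12  # guards against log(0)
    log_scores = [0.0] * n_classes
    for probs in device_probs:
        for k, p in enumerate(probs):
            log_scores[k] += math.log(max(p, eps))
    # Normalise with the log-sum-exp trick for numerical stability.
    m = max(log_scores)
    exp_scores = [math.exp(s - m) for s in log_scores]
    total = sum(exp_scores)
    fused = [e / total for e in exp_scores]
    predicted = max(range(n_classes), key=fused.__getitem__)
    return predicted, fused


# Three devices, three gesture classes (made-up numbers for illustration).
pred, fused = fuse_decisions([[0.6, 0.3, 0.1], [0.5, 0.4, 0.1], [0.2, 0.7, 0.1]])
```

Summing log-probabilities lets one confident device outweigh several uncertain ones. A majority vote would be a simpler alternative if devices only report hard labels.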

Angle of Arrival Estimation for Gesture Recognition from reflective body-worn tags

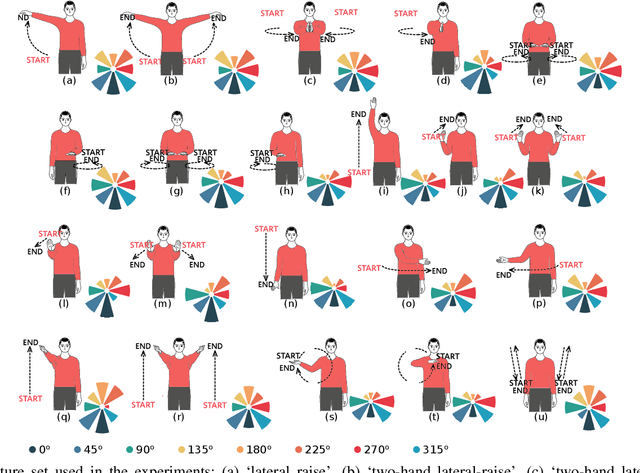

Jan 22, 2026

Abstract: We investigate hand gesture recognition by leveraging passive reflective tags worn on the body. For a large set of gestures, learning algorithms struggle to capture distinct patterns from backscattered received signal strength (RSS) and phase signals, because these features often look similar across signals from different gestures. To address this limitation, we explore the estimation of Angle of Arrival (AoA) as a distinguishing feature, since AoA characteristically varies during body motion. To ensure reliable estimation in our system, which employs Smart Antenna Switching (SAS), we first validate AoA estimation using the Multiple Signal Classification (MUSIC) algorithm while the tags are fixed at specific angles. Building on this, we propose an AoA tracking method based on Kalman smoothing. Our analysis demonstrates that, while RSS and phase alone are insufficient for distinguishing certain gesture data, AoA tracking can effectively differentiate them. To evaluate the effectiveness of AoA tracking, we implement gesture recognition system benchmarks and show that incorporating AoA features significantly boosts their performance. Improvements of up to 15% confirm the value of AoA-based enhancement.
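To illustrate the idea of Kalman smoothing applied to an AoA track, here is a minimal sketch that assumes a scalar random-walk state model and runs a forward Kalman filter followed by a Rauch-Tung-Striebel backward pass. The state model, the noise variances `q` and `r`, and the function name are assumptions for illustration; the abstract does not specify them.

```python
def kalman_smooth(measurements, q=1.0, r=25.0):
    """Smooth a noisy scalar track (e.g. AoA in degrees).

    q: process-noise variance, r: measurement-noise variance (assumed values).
    """
    n = len(measurements)
    if n == 0:
        return []
    x_f, p_f = [0.0] * n, [0.0] * n  # filtered mean / variance
    x_p, p_p = [0.0] * n, [0.0] * n  # predicted mean / variance
    # Initialise from the first measurement.
    x_f[0], p_f[0] = measurements[0], r
    x_p[0], p_p[0] = x_f[0], p_f[0]
    # Forward pass: predict (random walk), then update with the measurement.
    for t in range(1, n):
        x_p[t] = x_f[t - 1]
        p_p[t] = p_f[t - 1] + q
        gain = p_p[t] / (p_p[t] + r)
        x_f[t] = x_p[t] + gain * (measurements[t] - x_p[t])
        p_f[t] = (1.0 - gain) * p_p[t]
    # Backward pass (RTS smoother): refine each estimate using later samples.
    x_s = x_f[:]
    for t in range(n - 2, -1, -1):
        g = p_f[t] / p_p[t + 1]
        x_s[t] = x_f[t] + g * (x_s[t + 1] - x_p[t + 1])
    return x_s


# A made-up AoA track with one spurious estimate at index 2.
smoothed = kalman_smooth([10.0, 10.0, 40.0, 10.0, 10.0])
```

A larger `r` relative to `q` trusts the motion model more and suppresses outliers more strongly, at the cost of lagging behind fast gestures.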

Gesture Recognition from body-Worn RFID under Missing Data

Jan 22, 2026

Abstract: We explore hand-gesture recognition through the use of passive body-worn reflective tags. A data processing pipeline is proposed to address the issue of missing data. Specifically, missing information is recovered through linear and exponential interpolation and extrapolation. Furthermore, imputation and proximity-based inference are employed. We represent tags as nodes in a temporal graph, with edges formed based on correlations between received signal strength (RSS) and phase values across successive timestamps, and we train a graph-based convolutional neural network that exploits graph-based self-attention. The system outperforms state-of-the-art methods with an accuracy of 98.13% for the recognition of 21 gestures. We achieve 89.28% accuracy under leave-one-person-out cross-validation. We further investigate the contribution of various body locations to the recognition accuracy. Removing tags from the arms reduces accuracy by more than 10%, while removing the wrist tag reduces accuracy by only around 2%. Therefore, tag placements on the arms are more expressive for gesture recognition than placement on the wrist.
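The linear-interpolation step for missing tag readings can be sketched as follows. This is a minimal illustration, assuming missing samples are marked as `None` and that gaps at the start or end are filled by holding the nearest known value. The edge handling and the function name are assumptions, and the exponential variants mentioned in the abstract are not shown.

```python
def fill_missing_linear(values):
    """Fill None entries by linear interpolation between known neighbours.

    Leading and trailing gaps are filled with the nearest known value
    (an assumption made for this sketch).
    """
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("at least one reading is required")
    out = list(values)
    first, last = known[0], known[-1]
    for i in range(first):
        out[i] = values[first]
    for i in range(last + 1, len(values)):
        out[i] = values[last]
    # Interpolate inside each gap bounded by two known samples.
    for a, b in zip(known, known[1:]):
        step = (values[b] - values[a]) / (b - a)
        for i in range(a + 1, b):
            out[i] = values[a] + step * (i - a)
    return out


# Made-up RSS readings in dBm with dropped samples.
filled = fill_missing_linear([None, -60.0, None, None, -54.0, None])
```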

Non-Invasive Arterial Pulse Detection with Millimeter-wave Radar and Comparison With Photoplethysmography

May 19, 2025

Abstract: Cardiovascular diseases remain a leading cause of mortality and disability. The convenient measurement of cardiovascular health using smart systems is therefore a key enabler for accurate and early detection and diagnosis of cardiovascular diseases, and it requires access to a correct pulse morphology similar to the arterial pressure wave. This paper compares different sensor modalities, namely mmWave and photoplethysmography (PPG) from the same physiological site, and a reference continuous non-invasive blood pressure device. We developed a hardware prototype and conducted an experiment with 23 test participants. Both mmWave and PPG are capable of detecting inter-beat intervals. mmWave provides a more accurate arterial pulse waveform than green photoplethysmography.

Awareness in robotics: An early perspective from the viewpoint of the EIC Pathfinder Challenge "Awareness Inside"

Feb 14, 2024

Abstract: Consciousness has historically been a heavily debated topic in engineering, science, and philosophy. By contrast, awareness has attracted less scholarly interest in the past. However, things are changing as more and more researchers become interested in answering questions concerning what awareness is and how it can be artificially generated. The landscape is rapidly evolving, with multiple voices and interpretations of the concept being conceived and techniques being developed. The goal of this paper is to summarize and discuss those voices connected with projects funded by the EIC Pathfinder Challenge called "Awareness Inside", a nonrecurring call for proposals within Horizon Europe designed specifically for fostering research on natural and synthetic awareness. In this perspective, we dedicate special attention to the challenges and promises of applying synthetic awareness in robotics, as the development of mature techniques in this new field is expected to have a special impact on generating more capable and trustworthy embodied systems.

Unsupervised Statistical Feature-Guided Diffusion Model for Sensor-based Human Activity Recognition

May 30, 2023

Abstract: Recognizing human activities from sensor data is a vital task in various domains, but obtaining diverse and labeled sensor data remains challenging and costly. In this paper, we propose an unsupervised statistical feature-guided diffusion model for sensor-based human activity recognition. The proposed method aims to generate synthetic time-series sensor data without relying on labeled data, addressing the scarcity and annotation difficulties associated with real-world sensor data. By conditioning the diffusion model on statistical information such as mean, standard deviation, Z-score, and skewness, we generate diverse and representative synthetic sensor data. We conducted experiments on public human activity recognition datasets and compared the proposed method to conventional oversampling methods and state-of-the-art generative adversarial network methods. The experimental results demonstrate that the proposed method can improve the performance of human activity recognition and outperform existing techniques.
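The statistics named in the abstract as conditioning information can be computed per sensor window without any labels. The sketch below shows only that feature computation, assuming population (not sample) variance and a hypothetical function name. How the features are fed into the diffusion model is not described in the abstract and is not shown here.

```python
import math


def statistical_features(window):
    """Compute mean, standard deviation, Z-scores, and skewness of one window."""
    n = len(window)
    mean = sum(window) / n
    var = sum((x - mean) ** 2 for x in window) / n  # population variance
    std = math.sqrt(var)
    if std == 0.0:
        # A constant window has no spread; define Z-scores and skewness as 0.
        z_scores = [0.0] * n
        skewness = 0.0
    else:
        z_scores = [(x - mean) / std for x in window]
        skewness = sum(z ** 3 for z in z_scores) / n
    return {"mean": mean, "std": std, "z_scores": z_scores, "skewness": skewness}


# Made-up accelerometer window for illustration.
features = statistical_features([1.0, 2.0, 3.0, 4.0, 5.0])
```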

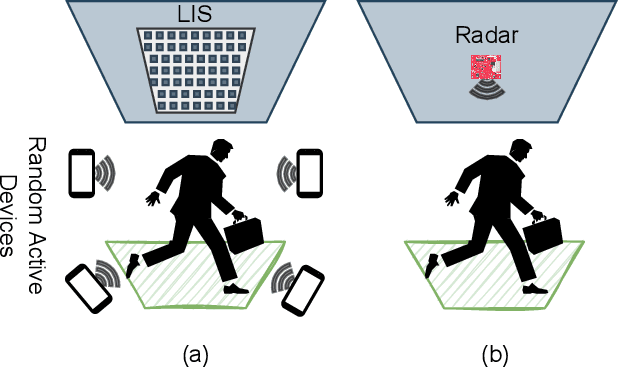

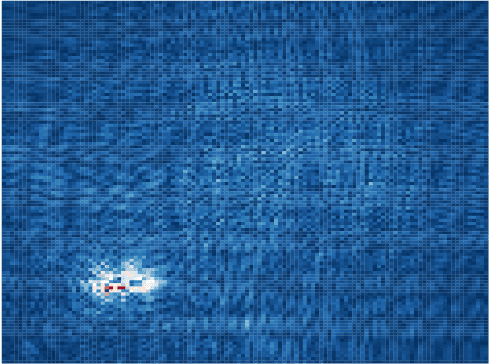

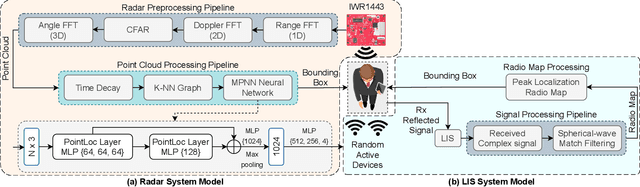

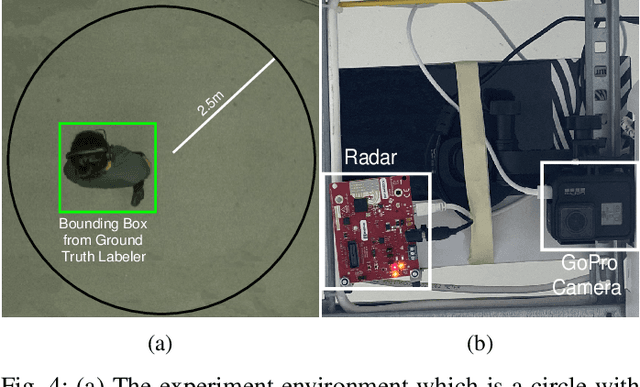

User Localization using RF Sensing: A Performance comparison between LIS and mmWave Radars

May 17, 2022

Abstract: Since electromagnetic signals are omnipresent, Radio Frequency (RF)-sensing has the potential to become a universal sensing mechanism with applications in localization, smart-home, retail, gesture recognition, intrusion detection, etc. Two emerging technologies in RF-sensing, namely sensing through Large Intelligent Surfaces (LISs) and mmWave Frequency-Modulated Continuous-Wave (FMCW) radars, have been successfully applied to a wide range of applications. In this work, we compare LIS and mmWave radars for localization in real-world and simulated environments. In our experiments, the mmWave radar achieves 0.71 Intersection Over Union (IOU) and 3 cm error for bounding boxes, while LIS has 0.56 IOU and 10 cm distance error. Although the radar outperforms the LIS in terms of accuracy, LIS also supports communication applications alongside sensing.
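For reference, the Intersection Over Union metric quoted above can be computed for two axis-aligned bounding boxes as in this minimal sketch. The box format `(x_min, y_min, x_max, y_max)` and the function name are assumptions; the abstract does not state how boxes are parameterised.

```python
def iou(box_a, box_b):
    """Intersection Over Union of two axis-aligned boxes.

    Each box is (x_min, y_min, x_max, y_max) in the same units.
    """
    # Overlap extent along each axis, clamped at zero when the boxes are disjoint.
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Predicted vs. ground-truth box (made-up coordinates in metres).
score = iou((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
```

An IOU of 1 means the predicted and reference boxes coincide, and 0 means they do not overlap at all.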

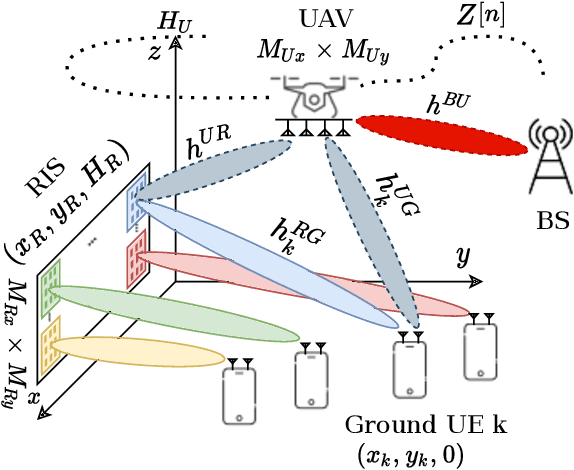

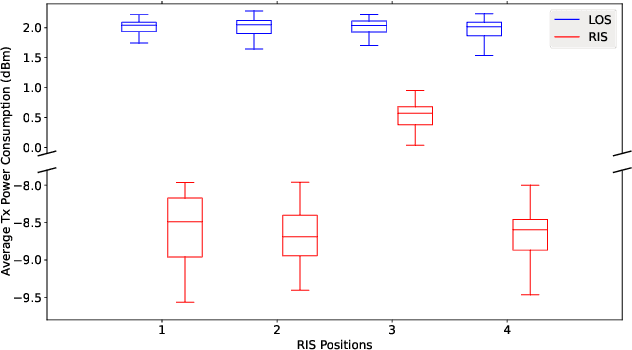

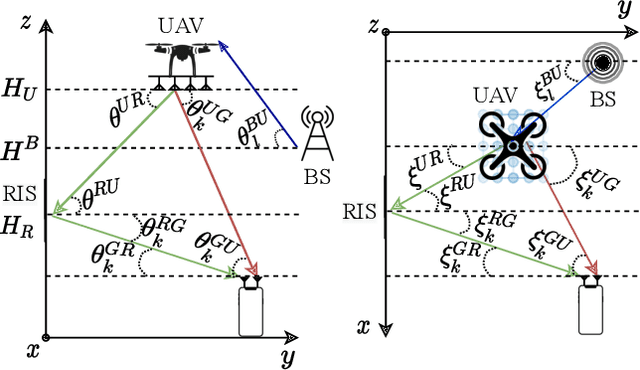

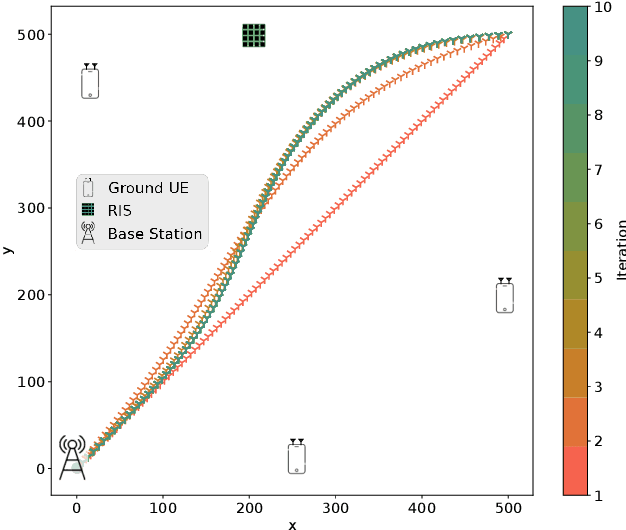

Energy-Efficient Design for RIS-assisted UAV communications in beyond-5G Networks

Sep 24, 2021

Abstract: The use of Reconfigurable Intelligent Surfaces (RIS) in conjunction with Unmanned Aerial Vehicles (UAVs) is being investigated as a way to provide energy-efficient communication to ground users in dense urban areas. In this paper, we devise an optimization scenario to reduce overall energy consumption in the network while guaranteeing a certain Quality of Service (QoS) to the ground users in the area. Due to the complex nature of the optimization problem, we provide a joint UAV trajectory and RIS phase decision that minimizes the transmission power of the UAV and Base Station (BS) and yields good performance with lower complexity. The proposed method uses Successive Convex Approximation (SCA) to iteratively determine a joint optimal solution for the UAV trajectory, RIS phase, and BS and UAV transmission power. The approach has been analytically evaluated under different sets of criteria.

Integrating Sensing and Communication in Cellular Networks via NR Sidelink

Sep 15, 2021

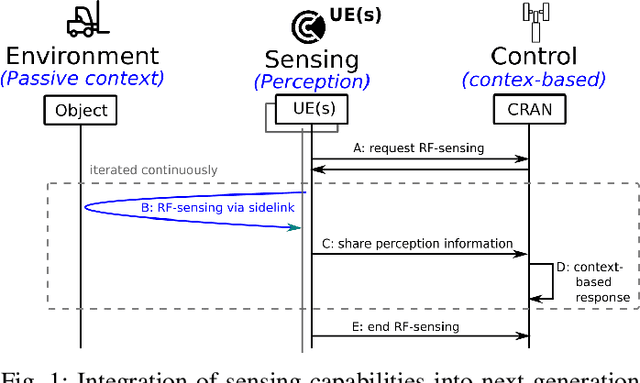

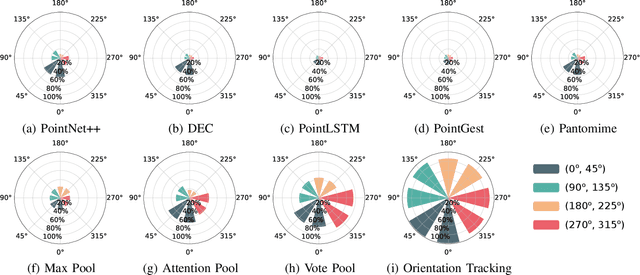

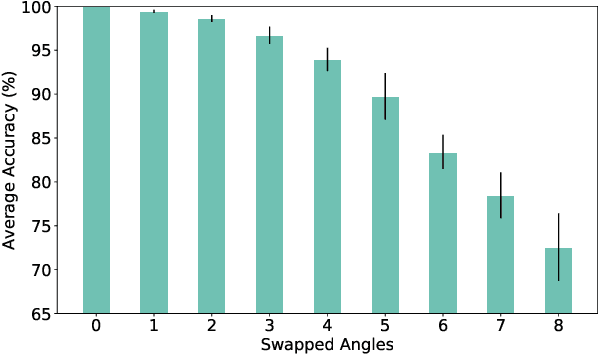

Abstract: RF-sensing, the analysis and interpretation of movement or environment-induced patterns in received electromagnetic signals, has been actively investigated for more than a decade. Since electromagnetic signals, through cellular communication systems, are omnipresent, RF sensing has the potential to become a universal sensing mechanism with applications in smart home, retail, localization, gesture recognition, intrusion detection, etc. Specifically, existing cellular network installations might be dual-used for both communication and sensing. Such communications and sensing convergence is envisioned for future communication networks. We propose the use of NR-sidelink direct device-to-device communication to achieve device-initiated, flexible sensing capabilities in beyond-5G cellular communication systems. In this article, we specifically investigate a common issue related to sidelink-based RF-sensing, which is its angle and rotation dependence. In particular, we discuss transformations of mmWave point-cloud data which achieve rotational invariance, as well as distributed processing based on such rotationally invariant inputs at angle- and distance-diverse devices. To process the distributed data, we propose a graph-based encoder to capture spatio-temporal features of the data and propose four approaches for multi-angle learning. The approaches are compared on a newly recorded and openly available dataset comprising 15 subjects performing 21 gestures, which are recorded from 8 angles.
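The abstract does not detail which transformations are used to achieve rotational invariance. As one simple illustration of the concept (not the authors' transformation), the sorted distances of each point to the cloud centroid do not change when the cloud is rotated, so a device observing the same gesture from a different angle would obtain the same descriptor. Function names and sample points are hypothetical.

```python
import math


def centroid_distances(points):
    """Sorted distances of 3D points to their centroid (rotation-invariant)."""
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    return sorted(math.dist(p, centroid) for p in points)


def rotate_z(points, angle_rad):
    """Rotate 3D points about the z-axis, emulating a different sensor angle."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]


# Made-up point cloud, observed directly and after a 0.7 rad rotation.
cloud = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.5), (-1.0, -1.0, 1.0), (0.3, 0.4, -2.0)]
original = centroid_distances(cloud)
rotated = centroid_distances(rotate_z(cloud, 0.7))
```

Such a descriptor discards orientation entirely, which also removes directional cues that some gestures depend on; that trade-off is one reason richer invariant transformations may be preferable in practice.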

Tesla-Rapture: A Lightweight Gesture Recognition System from mmWave Radar Point Clouds

Sep 14, 2021

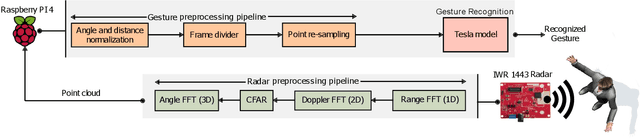

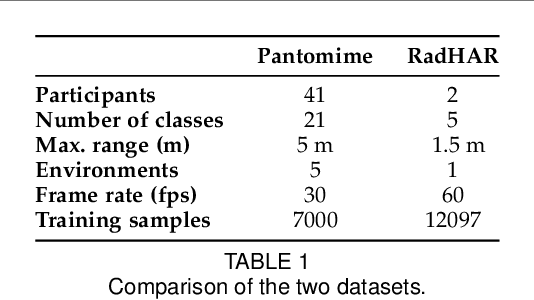

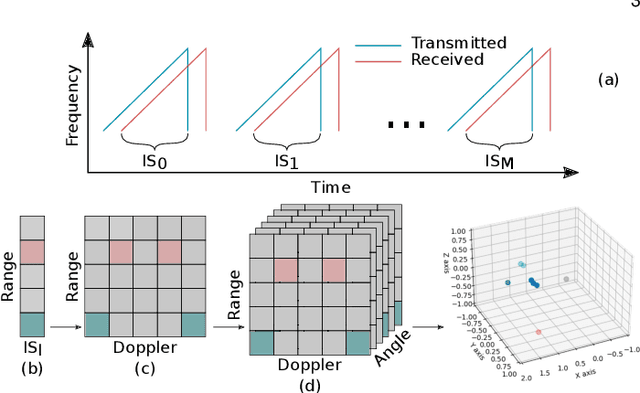

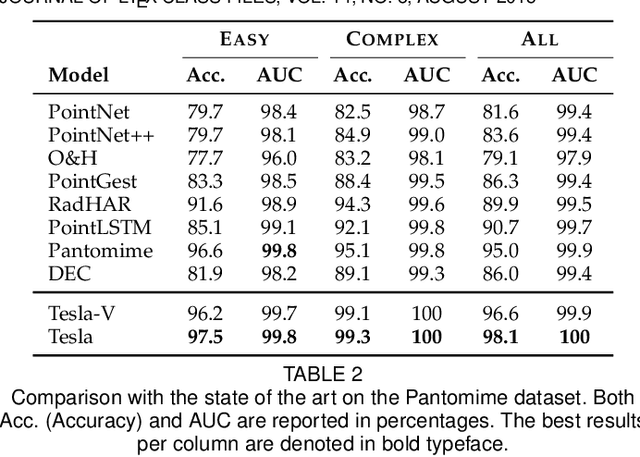

Abstract: We present Tesla-Rapture, a gesture recognition interface for point clouds generated by mmWave radars. State-of-the-art gesture recognition models are either too resource-intensive or not sufficiently accurate for integration into real-life scenarios using wearable or constrained equipment such as IoT devices (e.g. Raspberry Pi), XR hardware (e.g. HoloLens), or smartphones. To tackle this issue, we developed Tesla, a Message Passing Neural Network (MPNN) graph convolution approach for mmWave radar point clouds. The model outperforms the state of the art on two datasets in terms of accuracy while reducing the computational complexity and, hence, the execution time. In particular, the approach is able to predict a gesture almost 8 times faster than the most accurate competitor. Our performance evaluation in different scenarios (environments, angles, distances) shows that Tesla generalizes well and improves the accuracy by up to 20% in challenging scenarios such as a through-wall setting and sensing at extreme angles. Utilizing Tesla, we develop Tesla-Rapture, a real-time implementation using a mmWave radar on a Raspberry Pi 4, and evaluate its accuracy and time complexity. We also publish the source code, the trained models, and the implementation of the model for embedded devices.
