Stefanos Zafeiriou

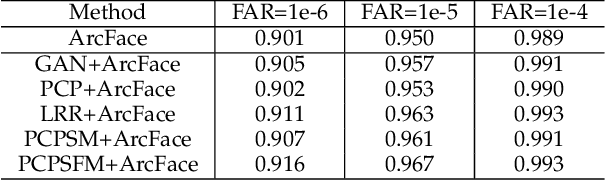

ArcFace: Additive Angular Margin Loss for Deep Face Recognition

Jan 23, 2018

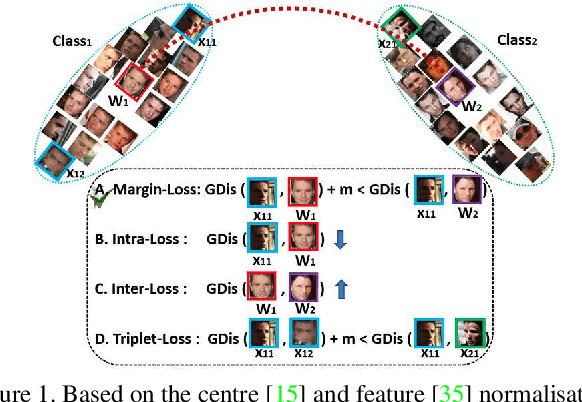

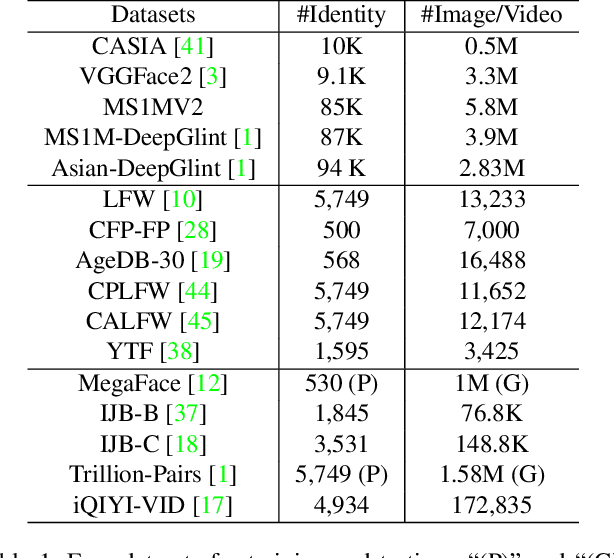

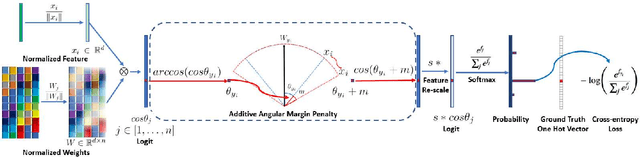

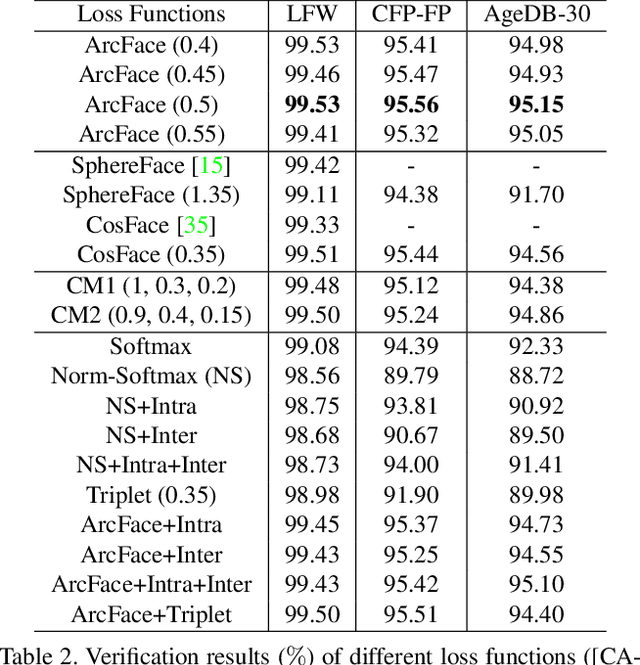

Abstract: Convolutional neural networks have significantly boosted the performance of face recognition in recent years due to their high capacity for learning discriminative features. To enhance the discriminative power of the Softmax loss, multiplicative angular margin and additive cosine margin incorporate an angular margin and a cosine margin into the loss function, respectively. In this paper, we propose a novel supervision signal, additive angular margin (ArcFace), which has a better geometrical interpretation than the supervision signals proposed so far. Specifically, the proposed ArcFace $\cos(\theta + m)$ directly maximises the decision boundary in angular (arc) space based on the L2-normalised weights and features. Compared to multiplicative angular margin $\cos(m\theta)$ and additive cosine margin $\cos\theta-m$, ArcFace can obtain more discriminative deep features. We also emphasise the importance of network settings and data refinement in the problem of deep face recognition. Extensive experiments on several relevant face recognition benchmarks, LFW, CFP and AgeDB, demonstrate the effectiveness of the proposed ArcFace. Most importantly, we get state-of-the-art performance in the MegaFace Challenge in a totally reproducible way. We make data, models and training/test code publicly available at https://github.com/deepinsight/insightface.
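The three margins named in the abstract differ only in how they modify the target-class logit. The following is a minimal NumPy sketch of that idea, not the authors' released implementation. The function names, the feature scale `s=64.0` and the margin `m=0.5` are illustrative assumptions, and the multiplicative margin is written as the raw $\cos(m\theta)$ without the monotonic extension that practical implementations typically use.

```python
import numpy as np


def target_logit(cos_theta, kind, m):
    """Apply one of the three margins to the target-class cosine.

    `kind` is a hypothetical label used only in this sketch.
    """
    theta = np.arccos(np.clip(cos_theta, -1.0, 1.0))
    if kind == "additive_angular":   # ArcFace: cos(theta + m)
        return np.cos(theta + m)
    if kind == "additive_cosine":    # cos(theta) - m
        return np.cos(theta) - m
    if kind == "multiplicative":     # raw cos(m * theta)
        return np.cos(m * theta)
    raise ValueError(f"unknown margin kind: {kind}")


def arcface_loss(features, weights, labels, m=0.5, s=64.0):
    """Softmax cross-entropy with an additive angular margin on the target class.

    features: (N, d) embeddings, weights: (d, C) class weights, labels: (N,) ints.
    m and s are assumed values chosen for illustration.
    """
    # L2-normalise embeddings (rows) and class weights (columns),
    # so that their dot product is cos(theta).
    x = features / np.linalg.norm(features, axis=1, keepdims=True)
    w = weights / np.linalg.norm(weights, axis=0, keepdims=True)
    cos = np.clip(x @ w, -1.0, 1.0)

    rows = np.arange(len(labels))
    logits = cos.copy()
    # Only the ground-truth class receives the margin.
    logits[rows, labels] = target_logit(cos[rows, labels], "additive_angular", m)
    logits *= s

    # Numerically stable log-softmax.
    logits -= logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_prob[rows, labels].mean()
```

For well-aligned embeddings (small $\theta$), adding the margin lowers the target logit and therefore raises the loss, which is what pushes same-class features closer together on the hypersphere during training.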

Visual Data Augmentation through Learning

Jan 20, 2018

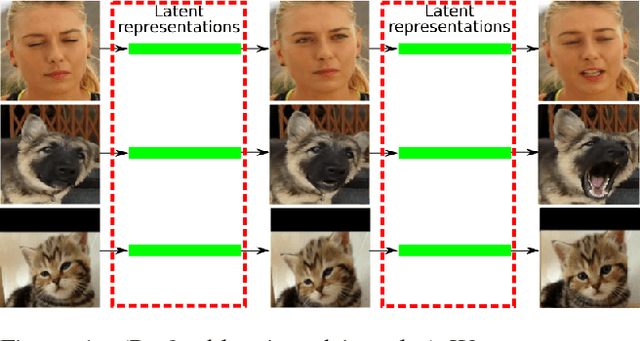

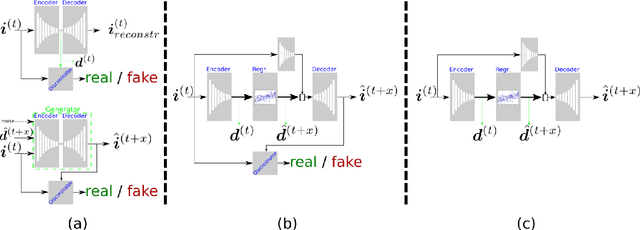

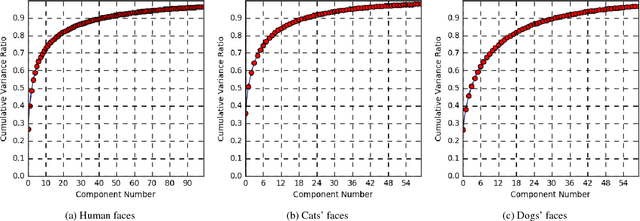

Abstract: The rapid progress in machine learning methods has been empowered by i) huge datasets that have been collected and annotated, ii) improved engineering (e.g. data pre-processing/normalization). The existing datasets typically include several million samples, which makes extending them a colossal task. In addition, the state-of-the-art data-driven methods demand a vast amount of data, hence a standard engineering trick is artificial data augmentation, for instance by adding cropped and (affinely) transformed images to the data. However, this approach does not correspond to any change in the natural 3D scene. We propose instead to perform data augmentation through learning realistic local transformations. We learn a forward and an inverse transformation that maps an image from the high-dimensional space of pixel intensities to a latent space which varies (approximately) linearly with the latent space of a realistically transformed version of the image. Such transformed images can be considered two successive frames in a video. Next, we utilize these transformations to learn a linear model that modifies the latent spaces and then use the inverse transformation to synthesize a new image. We argue that this procedure produces powerful invariant representations. We perform both qualitative and quantitative experiments that demonstrate our proposed method creates new realistic images.

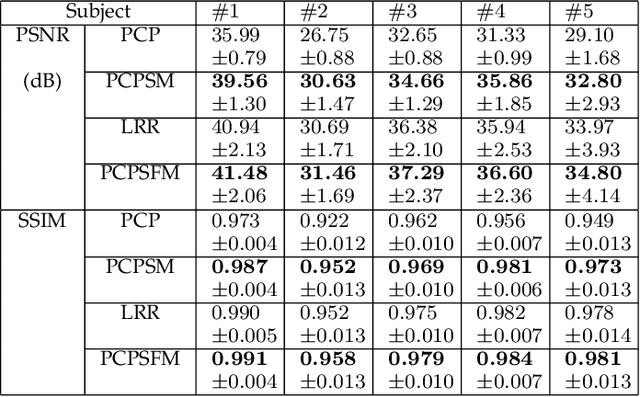

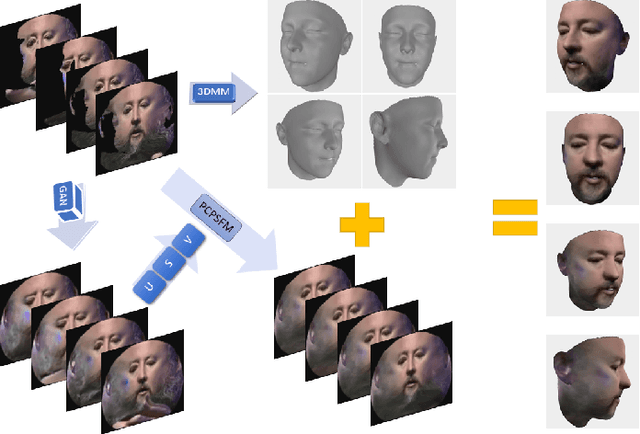

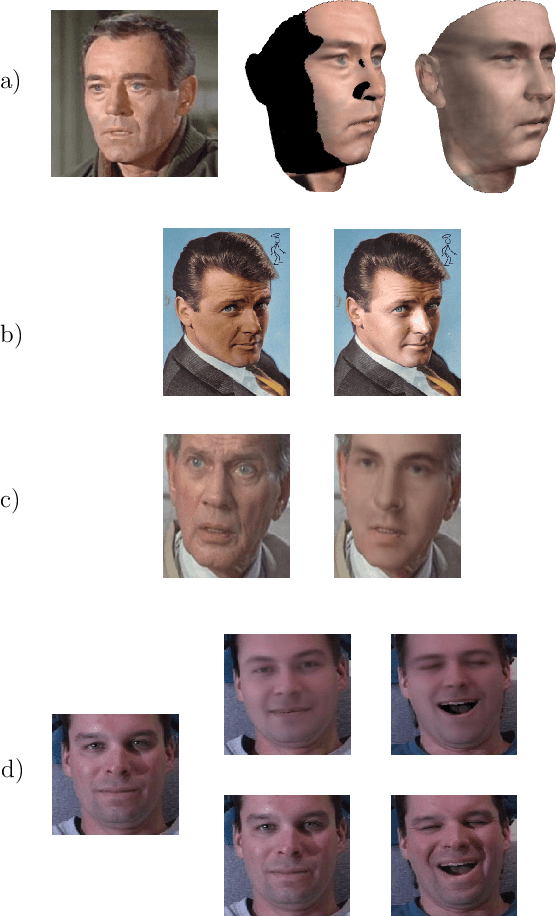

Side Information for Face Completion: a Robust PCA Approach

Jan 20, 2018

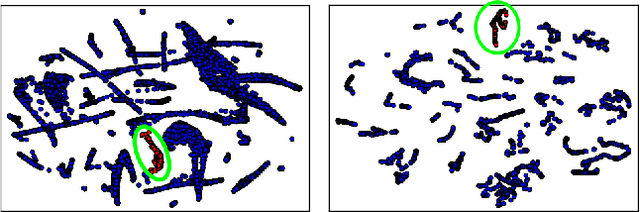

Abstract: Robust principal component analysis (RPCA) is a powerful method for learning low-rank feature representations of various visual data. However, for certain types of error corruption, as well as for significant amounts of it, RPCA fails to yield satisfactory results; a drawback that can be alleviated by exploiting domain-dependent prior knowledge or information. In this paper, we propose two models for the RPCA that take into account such side information, even in the presence of missing values. We apply this framework to the task of UV completion, which is widely used in pose-invariant face recognition. Moreover, we construct a generative adversarial network (GAN) to extract side information as well as subspaces. These subspaces not only assist in the recovery but also speed up the process in the case of large-scale data. We quantitatively and qualitatively evaluate the proposed approaches through both synthetic data and five real-world datasets to verify their effectiveness.

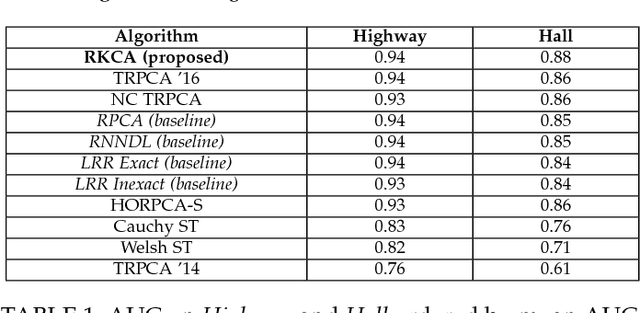

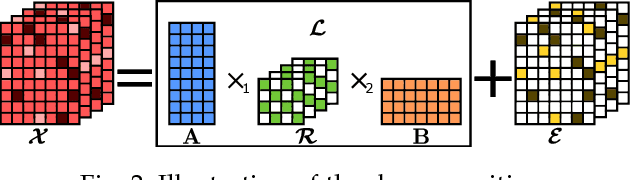

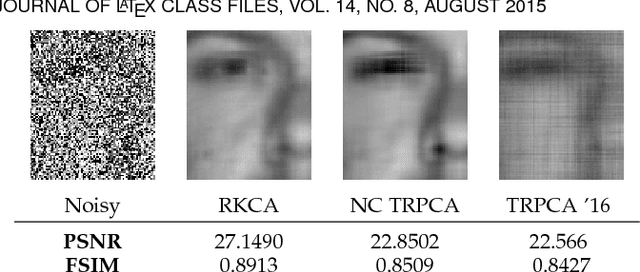

Robust Kronecker Component Analysis

Jan 18, 2018

Abstract: Dictionary learning and component analysis models are fundamental in learning compact representations that are relevant to a given task (feature extraction, dimensionality reduction, denoising, etc.). The model complexity is encoded by means of specific structure, such as sparsity, low-rankness, or nonnegativity. Unfortunately, approaches like K-SVD, which learn dictionaries for sparse coding via Singular Value Decomposition (SVD), are hard to scale to high-volume and high-dimensional visual data, and fragile in the presence of outliers. Conversely, robust component analysis methods such as Robust Principal Component Analysis (RPCA) are able to recover low-complexity (e.g., low-rank) representations from data corrupted with noise of unknown magnitude and support, but do not provide a dictionary that respects the structure of the data (e.g., images), and also involve expensive computations. In this paper, we propose a novel Kronecker-decomposable component analysis model, coined as Robust Kronecker Component Analysis (RKCA), that combines ideas from sparse dictionary learning and robust component analysis. RKCA has several appealing properties, including robustness to gross corruption; it can be used for low-rank modeling, and leverages separability to solve significantly smaller problems. We design an efficient learning algorithm by drawing links with a restricted form of tensor factorization, and analyze its optimality and low-rankness properties. The effectiveness of the proposed approach is demonstrated on real-world applications, namely background subtraction and image denoising and completion, by performing a thorough comparison with the current state of the art.
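The claim that separability leads to "significantly smaller problems" can be illustrated with a generic Kronecker identity. This is a sketch of the underlying linear algebra only, not the RKCA algorithm itself; the function names and matrix shapes are assumptions made for the example. With row-major vectorisation, applying a Kronecker-structured dictionary $A \otimes B$ to a code equals two small matrix products, so the large dictionary never has to be formed.

```python
import numpy as np


def apply_full(A, B, X):
    """Build the full Kronecker dictionary explicitly and apply it.

    For A of shape (p, n) and B of shape (q, k), the dictionary has
    shape (p*q, n*k), which grows quickly with image size.
    """
    return np.kron(A, B) @ X.ravel()


def apply_separable(A, B, X):
    """Same result using only the two small factors.

    Relies on (A kron B) vec(X) = vec(A X B^T) for row-major vec,
    where X has shape (n, k).
    """
    return (A @ X @ B.T).ravel()
```

For example, with `A` of shape `(6, 4)`, `B` of shape `(5, 3)` and a code `X` of shape `(4, 3)`, both functions return the same length-30 vector, but the separable version never materialises the `30 x 12` dictionary.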

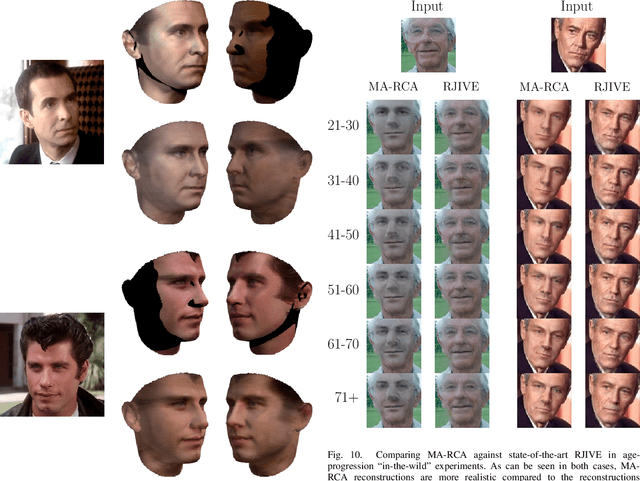

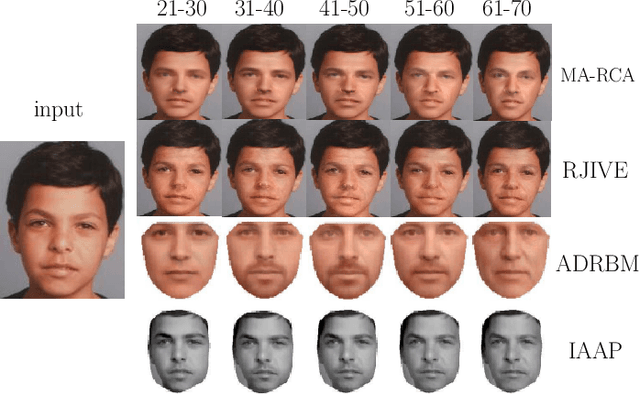

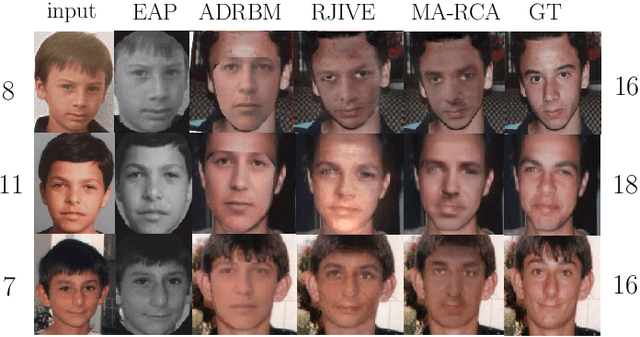

Multi-Attribute Robust Component Analysis for Facial UV Maps

Dec 15, 2017

Abstract: Recently, due to the collection of large-scale 3D face models, as well as the advent of deep learning, significant progress has been made in the field of 3D face alignment "in-the-wild". That is, many methods have been proposed that establish sparse or dense 3D correspondences between a 2D facial image and a 3D face model. The utilization of 3D face alignment introduces new challenges and research directions, especially on the analysis of facial texture images. In particular, texture no longer suffers from warping effects (which occurred when 2D face alignment methods were used). Nevertheless, since facial images are commonly captured in arbitrary recording conditions, a considerable amount of missing information and gross outliers is observed (e.g., due to self-occlusion, or subjects wearing eye-glasses). Given that many annotated databases have been developed for face analysis tasks, it is evident that component analysis techniques need to be developed in order to alleviate issues arising from the aforementioned challenges. In this paper, we propose a novel component analysis technique that is suitable for facial UV maps containing a considerable amount of missing information and outliers, while additionally incorporating knowledge from various attributes (such as age and identity). We evaluate the proposed Multi-Attribute Robust Component Analysis (MA-RCA) on problems such as UV completion and age progression, where the proposed method outperforms the compared techniques. Finally, we demonstrate that the MA-RCA method is powerful enough to provide weak annotations for training deep learning systems for various applications, such as illumination transfer.

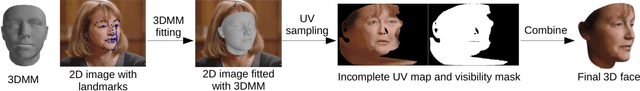

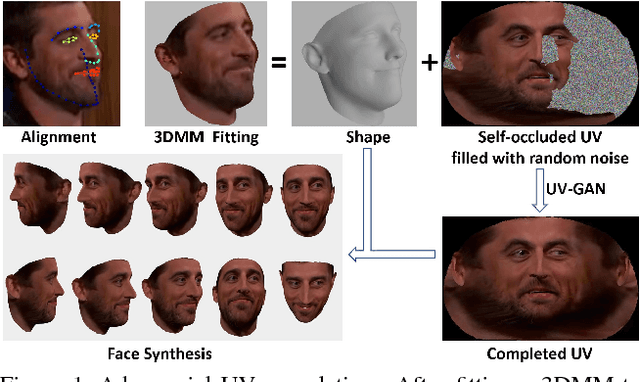

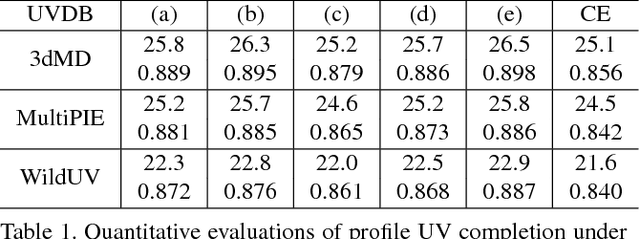

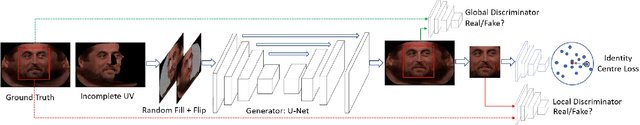

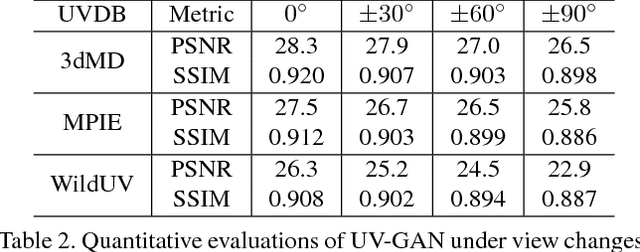

UV-GAN: Adversarial Facial UV Map Completion for Pose-invariant Face Recognition

Dec 13, 2017

Abstract: Recently proposed robust 3D face alignment methods establish either dense or sparse correspondence between a 3D face model and a 2D facial image. The use of these methods presents new challenges as well as opportunities for facial texture analysis. In particular, by sampling the image using the fitted model, a facial UV map can be created. Unfortunately, due to self-occlusion, such a UV map is always incomplete. In this paper, we propose a framework for training a Deep Convolutional Neural Network (DCNN) to complete the facial UV map extracted from in-the-wild images. To this end, we first gather complete UV maps by fitting a 3D Morphable Model (3DMM) to various multiview image and video datasets, as well as leveraging a new 3D dataset with over 3,000 identities. Second, we devise a meticulously designed architecture that combines local and global adversarial DCNNs to learn an identity-preserving facial UV completion model. We demonstrate that by attaching the completed UV map to the fitted mesh and generating instances of arbitrary poses, we can increase pose variations for training deep face recognition/verification models, and minimise pose discrepancy during testing, which leads to better performance. Experiments on both controlled and in-the-wild UV datasets demonstrate the effectiveness of our adversarial UV completion model. We achieve state-of-the-art verification accuracy, $94.05\%$, under the CFP frontal-profile protocol only by combining pose augmentation during training and pose discrepancy reduction during testing. We will release the first in-the-wild UV dataset (which we refer to as WildUV) that comprises complete facial UV maps from 1,892 identities for research purposes.

Informed Non-convex Robust Principal Component Analysis with Features

Sep 14, 2017

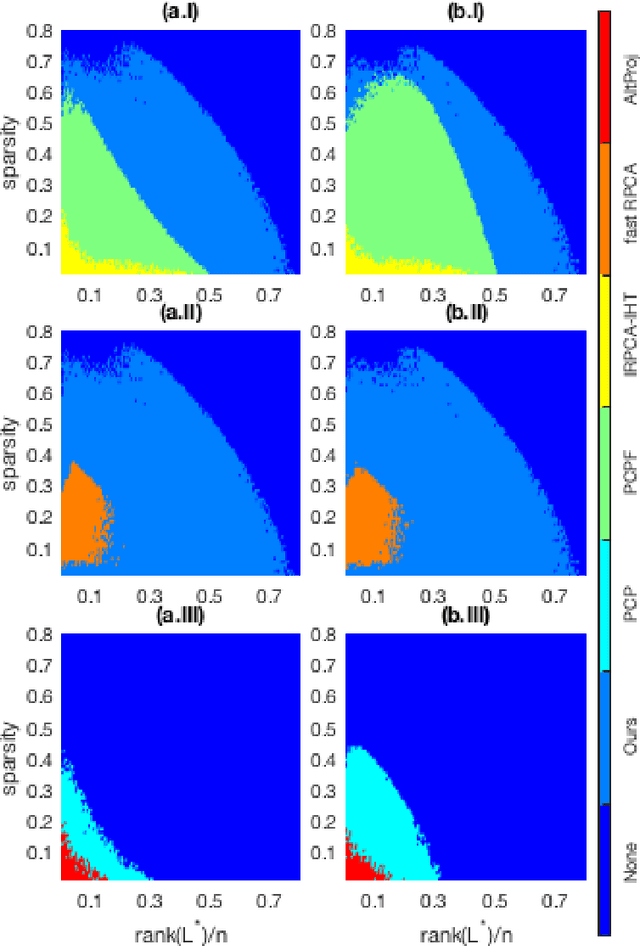

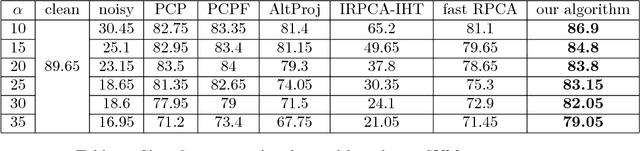

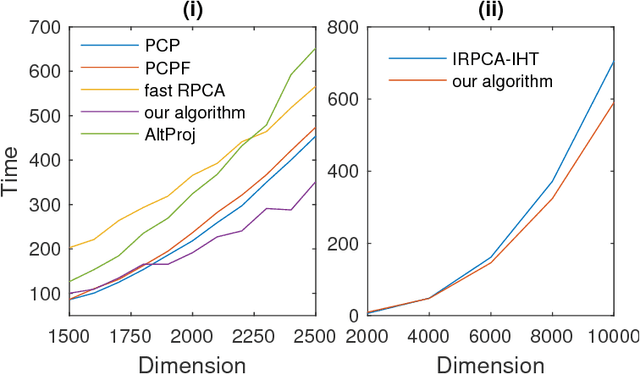

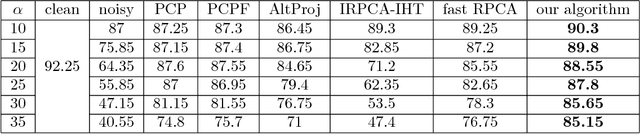

Abstract: We revisit the problem of robust principal component analysis with features acting as prior side information. To this aim, a novel, elegant, non-convex optimization approach is proposed to decompose a given observation matrix into a low-rank core and the corresponding sparse residual. Rigorous theoretical analysis of the proposed algorithm results in exact recovery guarantees with low computational complexity. Aptly designed synthetic experiments demonstrate that our method is the first to wholly harness the power of non-convexity over convexity in terms of both recoverability and speed. That is, the proposed non-convex approach is more accurate and faster than the best available algorithms for the problem under study. Two real-world applications, namely image classification and face denoising, further exemplify the practical superiority of the proposed method.

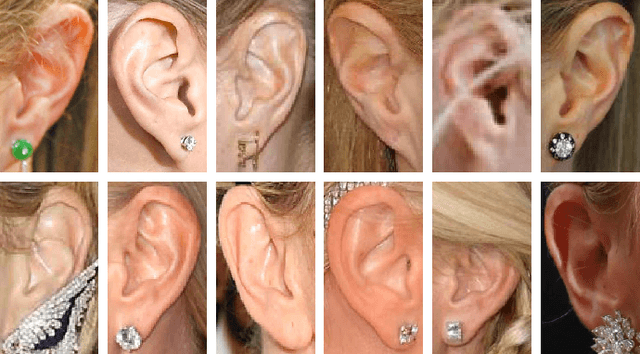

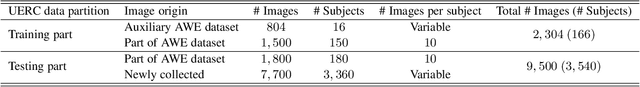

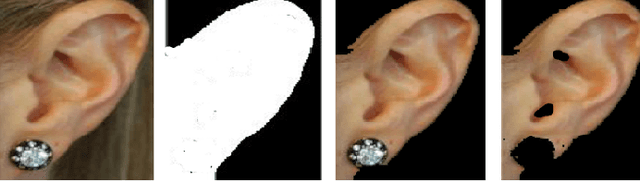

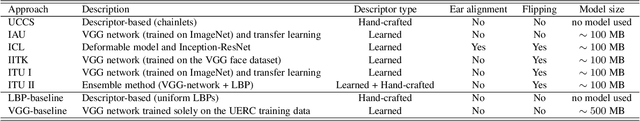

The Unconstrained Ear Recognition Challenge

Aug 23, 2017

Abstract: In this paper we present the results of the Unconstrained Ear Recognition Challenge (UERC), a group benchmarking effort centered around the problem of person recognition from ear images captured in uncontrolled conditions. The goal of the challenge was to assess the performance of existing ear recognition techniques on a challenging large-scale dataset and identify open problems that need to be addressed in the future. Five groups from three continents participated in the challenge and contributed six ear recognition techniques for the evaluation, while multiple baselines were made available for the challenge by the UERC organizers. A comprehensive analysis was conducted with all participating approaches addressing essential research questions pertaining to the sensitivity of the technology to head rotation, flipping, gallery size, large-scale recognition and others. The top performer of the UERC was found to ensure robust performance on a smaller part of the dataset (with 180 subjects) regardless of image characteristics, but still exhibited a significant performance drop when the entire dataset comprising 3,704 subjects was used for testing.

Joint Multi-view Face Alignment in the Wild

Aug 20, 2017

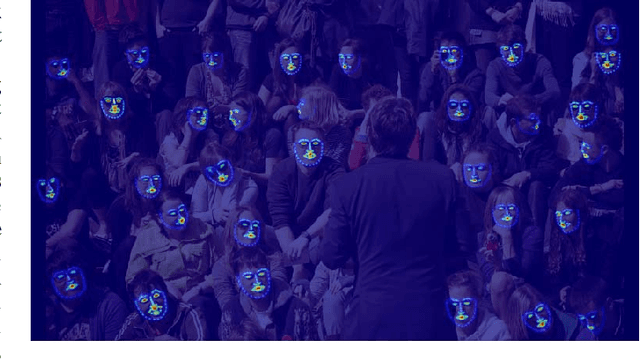

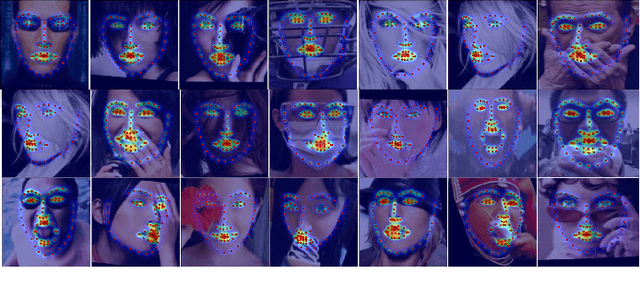

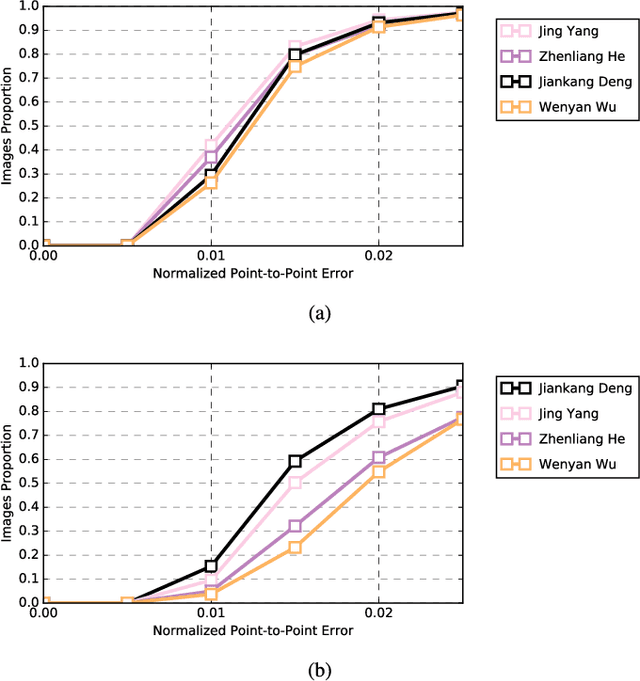

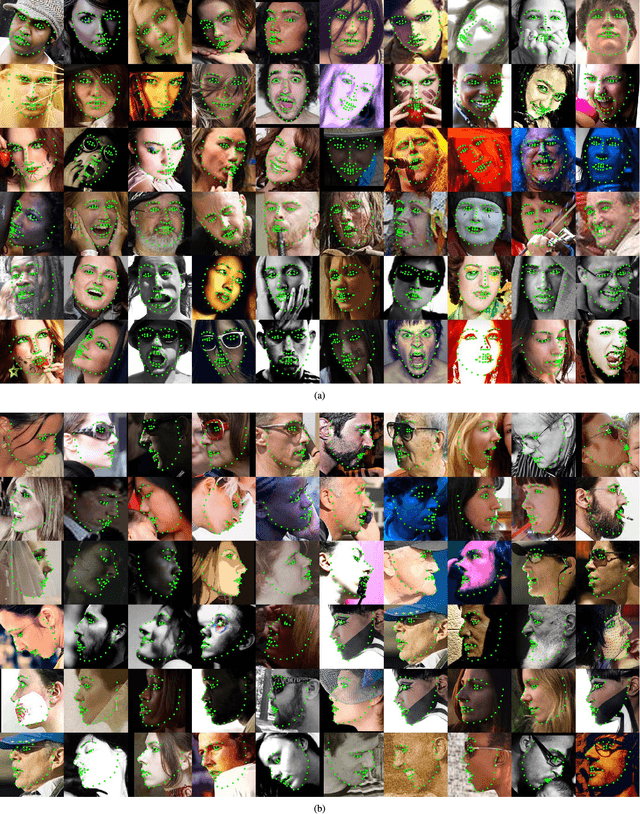

Abstract: The de facto algorithm for facial landmark estimation involves running a face detector with a subsequent deformable model fitting on the bounding box. This encompasses two basic problems: i) the detection and deformable fitting steps are performed independently, while the detector might not provide the best-suited initialisation for the fitting step, ii) the face appearance varies hugely across different poses, which makes the deformable face fitting very challenging and thus distinct models have to be used (e.g., one for profile and one for frontal faces). In this work, we propose the first, to the best of our knowledge, joint multi-view convolutional network to handle large pose variations across faces in-the-wild, and elegantly bridge face detection and facial landmark localisation tasks. Existing joint face detection and landmark localisation methods focus only on a very small set of landmarks. By contrast, our method can detect and align a large number of landmarks for semi-frontal (68 landmarks) and profile (39 landmarks) faces. We evaluate our model on a plethora of datasets including standard static image datasets such as IBUG, 300W, COFW, and the latest Menpo Benchmark for both semi-frontal and profile faces. Significant improvement over state-of-the-art methods on deformable face tracking is witnessed on the 300VW benchmark. We also demonstrate state-of-the-art results for face detection on the FDDB and MALF datasets.

Robust Kronecker-Decomposable Component Analysis for Low-Rank Modeling

Jul 26, 2017

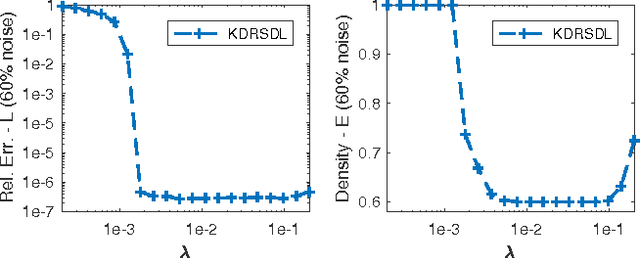

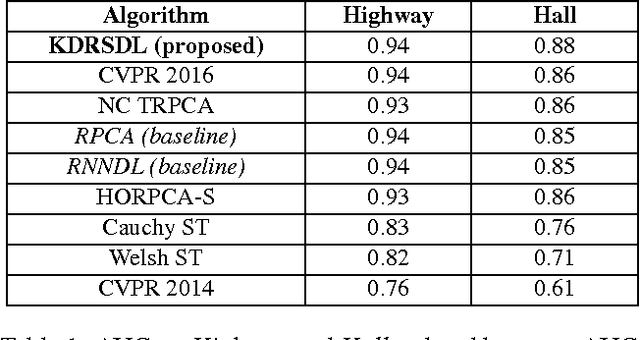

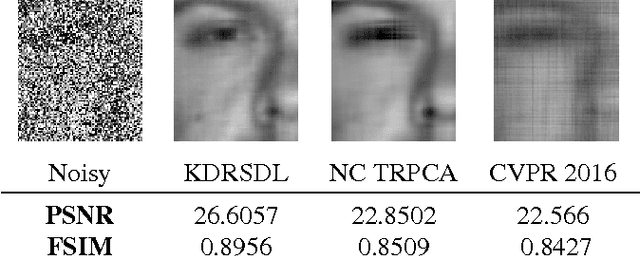

Abstract: Dictionary learning and component analysis are part of one of the most well-studied and active research fields, at the intersection of signal and image processing, computer vision, and statistical machine learning. In dictionary learning, the current methods of choice are arguably K-SVD and its variants, which learn a dictionary (i.e., a decomposition) for sparse coding via Singular Value Decomposition. In robust component analysis, leading methods derive from Principal Component Pursuit (PCP), which recovers a low-rank matrix from sparse corruptions of unknown magnitude and support. However, K-SVD is sensitive to the presence of noise and outliers in the training set. Additionally, PCP does not provide a dictionary that respects the structure of the data (e.g., images), and requires expensive SVD computations when solved by convex relaxation. In this paper, we introduce a new robust decomposition of images by combining ideas from sparse dictionary learning and PCP. We propose a novel Kronecker-decomposable component analysis which is robust to gross corruption, can be used for low-rank modeling, and leverages separability to solve significantly smaller problems. We design an efficient learning algorithm by drawing links with a restricted form of tensor factorization. The effectiveness of the proposed approach is demonstrated on real-world applications, namely background subtraction and image denoising, by performing a thorough comparison with the current state of the art.
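To make the PCP baseline mentioned in the abstract concrete, here is a minimal sketch of Principal Component Pursuit solved with a standard augmented-Lagrangian (ADMM-style) iteration. It illustrates the baseline only, not the Kronecker-decomposable method proposed in the paper. The function names, stopping rule and default values for `lam` and `mu` are assumptions taken from common practice in the PCP literature. Note the full SVD in every iteration, which is the cost the abstract refers to.

```python
import numpy as np


def soft_threshold(X, tau):
    """Elementwise shrinkage: proximal operator of the l1 norm."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)


def singular_value_threshold(X, tau):
    """Shrink singular values: proximal operator of the nuclear norm."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * soft_threshold(s, tau)) @ Vt


def pcp(M, lam=None, mu=None, tol=1e-7, max_iter=1000):
    """Split M into low-rank L and sparse S by minimising ||L||_* + lam * ||S||_1
    subject to L + S = M. Default lam and mu are assumed, commonly used choices.
    """
    M = np.asarray(M, dtype=float)
    n1, n2 = M.shape
    lam = 1.0 / np.sqrt(max(n1, n2)) if lam is None else lam
    mu = n1 * n2 / (4.0 * np.abs(M).sum()) if mu is None else mu

    L = np.zeros_like(M)
    S = np.zeros_like(M)
    Y = np.zeros_like(M)  # Lagrange multiplier for the constraint L + S = M
    norm_M = np.linalg.norm(M)

    for _ in range(max_iter):
        L = singular_value_threshold(M - S + Y / mu, 1.0 / mu)  # one SVD per iteration
        S = soft_threshold(M - L + Y / mu, lam / mu)
        residual = M - L - S
        Y = Y + mu * residual
        if np.linalg.norm(residual) <= tol * norm_M:
            break
    return L, S
```

Calling `L, S = pcp(M)` on a matrix that is the sum of a low-rank component and a few large, sparsely located corruptions returns estimates of both parts.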
