Anais Garrell

HEXAR: a Hierarchical Explainability Architecture for Robots

Jan 06, 2026

Abstract: As robotic systems become increasingly complex, the need for explainable decision-making becomes critical. Existing explainability approaches in robotics typically either focus on individual modules, which can be difficult to query from the perspective of high-level behaviour, or employ monolithic approaches, which do not exploit the modularity of robotic architectures. We present HEXAR (Hierarchical EXplainability Architecture for Robots), a novel framework that provides a plug-in, hierarchical approach to generating explanations about robotic systems. HEXAR consists of specialised component explainers that use diverse explanation techniques (e.g., LLM-based reasoning, causal models, feature importance) tailored to specific robot modules, orchestrated by an explainer selector that chooses the most appropriate one for a given query. We implement and evaluate HEXAR on a TIAGo robot performing assistive tasks in a home environment, comparing it against end-to-end and aggregated baseline approaches across 180 scenario-query variations. We observe that HEXAR significantly outperforms the baselines in root cause identification, incorrect information exclusion, and runtime, offering a promising direction for transparent autonomous systems.
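The selector-plus-component-explainers idea described in the abstract can be illustrated with a minimal sketch. This is not the HEXAR implementation: the class names, the keyword-overlap routing rule, and the canned explanation strings are all hypothetical, chosen only to show how a query might be dispatched to one module-specific explainer.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ComponentExplainer:
    """Hypothetical explainer attached to a single robot module."""
    module: str
    keywords: List[str]
    explain: Callable[[str], str]


class ExplainerSelector:
    """Toy selector: routes a query to the explainer with the most keyword hits.

    Keyword overlap is an assumption made for illustration; the paper does
    not state that this is how its selector works.
    """

    def __init__(self, explainers: List[ComponentExplainer]):
        self.explainers = list(explainers)

    def select(self, query: str) -> ComponentExplainer:
        # Tokenise into lowercase words so punctuation does not block matches.
        words = set(re.findall(r"[a-z]+", query.lower()))
        # On ties, max() returns the first explainer in registration order.
        return max(self.explainers, key=lambda e: len(words & set(e.keywords)))

    def answer(self, query: str) -> str:
        return self.select(query).explain(query)


# Hypothetical modules and canned explanations, for demonstration only.
navigation = ComponentExplainer(
    module="navigation",
    keywords=["stop", "path", "navigate", "obstacle"],
    explain=lambda q: "navigation: the planner halted because the path was blocked",
)
grasping = ComponentExplainer(
    module="grasping",
    keywords=["grasp", "pick", "drop", "object"],
    explain=lambda q: "grasping: the gripper reported a failed grasp",
)

selector = ExplainerSelector([navigation, grasping])
```

Calling `selector.answer("Why did it drop the object?")` routes the query to the hypothetical grasping explainer. New explainers can be registered without touching the others, which is the plug-in property the abstract emphasises.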

AI or Human? Understanding Perceptions of Embodied Robots with LLMs

Jul 22, 2025

Abstract: The pursuit of artificial intelligence has long been associated with the challenge of effectively measuring intelligence. Although the Turing Test was introduced as a means of assessing a system's intelligence, its relevance and application within the field of human-robot interaction remain largely underexplored. This study investigates the perception of intelligence in embodied robots by performing a Turing Test on a robotic platform. A total of 34 participants were tasked with distinguishing between AI- and human-operated robots while engaging in two interactive tasks: information retrieval and package handover. These tasks assessed the robot's perception and navigation abilities under both static and dynamic conditions. Results indicate that participants were unable to reliably differentiate between AI- and human-controlled robots beyond chance levels. Furthermore, analysis of participant responses reveals key factors influencing the perception of artificial versus human intelligence in embodied robotic systems. These findings provide insights into the design of future interactive robots and contribute to the ongoing discourse on intelligence assessment in AI-driven systems.

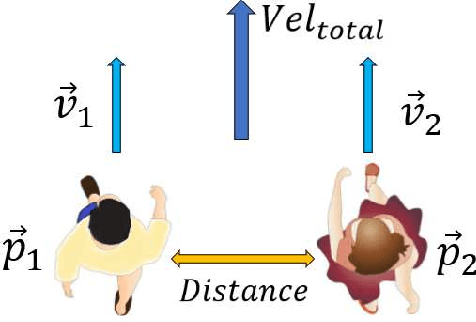

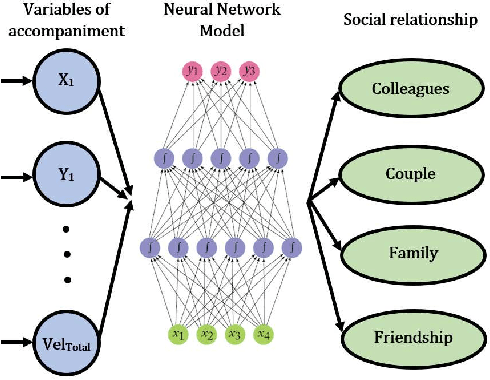

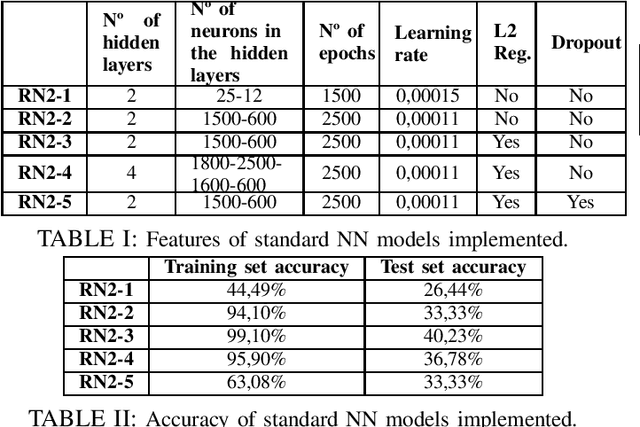

Humans Social Relationship Classification during Accompaniment

Jul 06, 2022

Abstract: This paper presents deep learning architectures that classify the social relationship between two people walking side by side into four categories: colleagues, couple, family, or friendship. The models are built with Neural Networks or Recurrent Neural Networks and are trained and evaluated on a database of readings obtained from humans performing an accompaniment process in an urban environment. The best model achieves relatively good classification accuracy and partially improves on the results of a previous study [1]. Furthermore, the proposed model shows potential for further efficiency improvements and for deployment on a real robot.
