Shirui Lyu

Imitation Learning for Adaptive Control of a Virtual Soft Exoglove

May 14, 2025

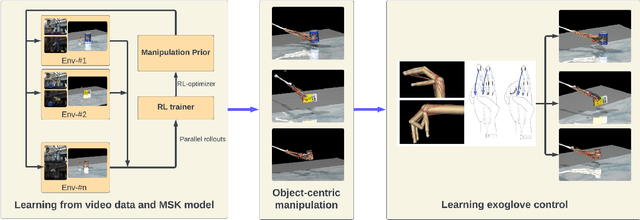

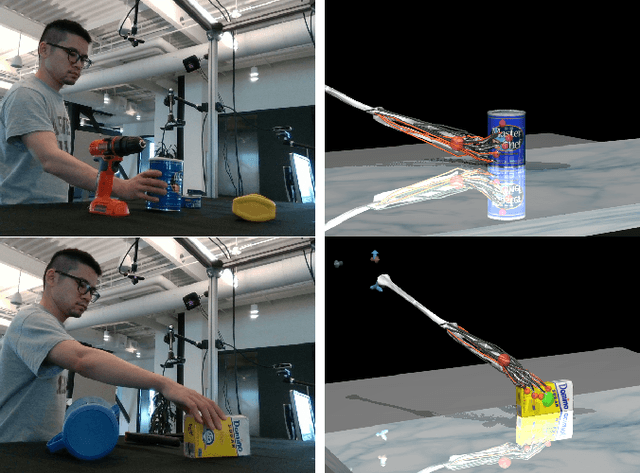

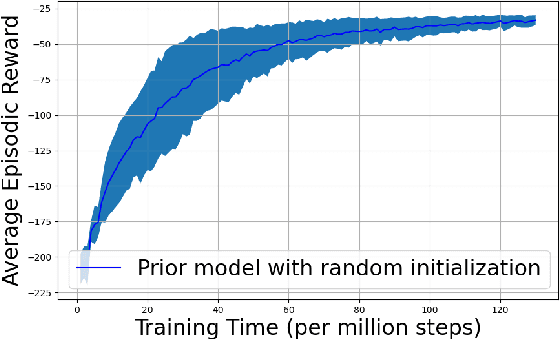

Abstract: Wearable robots have been widely adopted in rehabilitation training for patients with hand motor impairments. However, the uniqueness of each patient's muscle loss is often overlooked. Leveraging reinforcement learning and a biologically accurate musculoskeletal model in simulation, we propose a customized wearable robotic controller that addresses specific muscle deficits and provides compensation for hand-object manipulation tasks. Video data of a single subject performing grasping tasks is used to train a manipulation model through learning from demonstration. This manipulation model is subsequently fine-tuned to perform object-specific interaction tasks. The muscle forces in the musculoskeletal manipulation model are then weakened to simulate neurological motor impairments, which are later compensated by the actuation of a virtual wearable robotic glove. Results show that integrating the virtual wearable robotic glove provides shared assistance to support the hand manipulator with weakened muscle forces. The learned exoglove controller achieved an average of 90.5\% of the original manipulation proficiency.

PointGrasp: Point Cloud-based Grasping for Tendon-driven Soft Robotic Glove Applications

Mar 19, 2024

Abstract: Controlling hand exoskeletons to assist individuals with grasping tasks is challenging because user intentions are difficult to infer. We propose that most grasping tasks during activities of daily living (ADL) can be deduced by analyzing object geometries (simple and complex) from 3D point clouds. The study introduces PointGrasp, a real-time system for semantically identifying household scenes, which aims to support and enhance assistance during ADL for tailored end-to-end grasping tasks. The system comprises an RGB-D camera with an inertial measurement unit and a microprocessor integrated into a tendon-driven soft robotic glove. The RGB-D camera processes 3D scenes at a rate exceeding 30 frames per second. The proposed pipeline demonstrates an average RMSE of 0.8 $\pm$ 0.39 cm for simple and 0.11 $\pm$ 0.06 cm for complex geometries. Within each mode, it identifies and pinpoints reachable objects. This system shows promise for end-to-end, vision-driven, robot-assisted manual rehabilitation tasks.

ViT-A*: Legged Robot Path Planning using Vision Transformer A*

Oct 11, 2023

Abstract: Legged robots, particularly quadrupeds, offer promising navigation capabilities, especially in scenarios requiring traversal over diverse terrains and obstacle avoidance. This paper addresses the challenge of enabling legged robots to navigate complex environments effectively through the integration of data-driven path-planning methods. We propose an approach that utilizes differentiable planners, allowing the learning of end-to-end global plans via a neural network for commanding quadruped robots. The approach leverages 2D maps and obstacle specifications as inputs to generate a global path. To enhance the functionality of the developed neural network-based path planner, we use Vision Transformers (ViT) for map pre-processing, to enable the effective handling of larger maps. Experimental evaluations on two real robotic quadrupeds (Boston Dynamics Spot and Unitree Go1) demonstrate the effectiveness and versatility of the proposed approach in generating reliable path plans.

* 6 pages, 6 figures, conference
