Inspiration Learning through Preferences

Sep 16, 2018 · Nir Baram, Shie Mannor

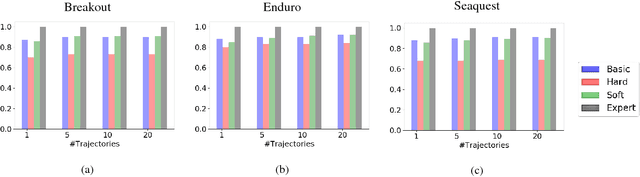

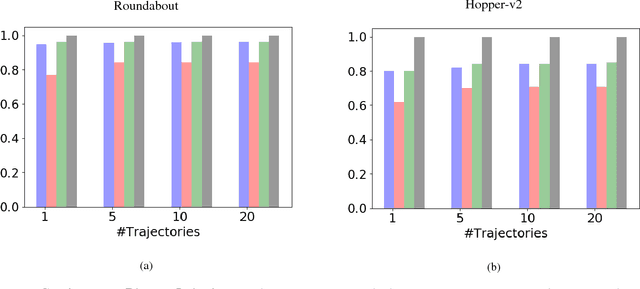

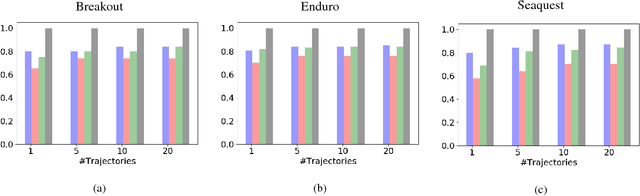

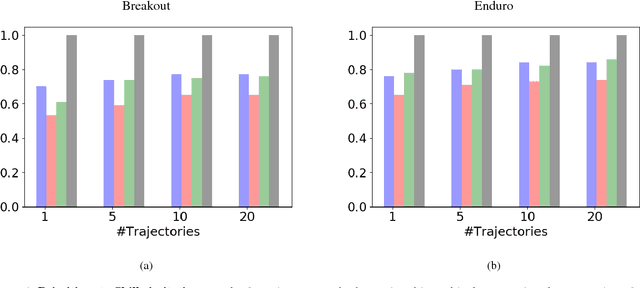

Current imitation learning techniques are too restrictive because they require the agent and expert to share the same action space. However, agents that act differently from the expert can often solve the task just as well. For example, a person lifting a box can be imitated by a ceiling-mounted robot or a desktop-based robotic arm. In both cases, the end goal of lifting the box is achieved, perhaps using different strategies. We denote this setup as *Inspiration Learning*: knowledge transfer between agents that operate in different action spaces. Since state-action expert demonstrations can no longer be used, Inspiration Learning requires novel methods to guide the agent towards the end goal. In this work, we rely on ideas from Preference-based Reinforcement Learning (PbRL) to design Advantage Actor-Critic algorithms for solving inspiration learning tasks. Unlike classic actor-critic architectures, the critic we use consists of two parts: a) a state-value estimation as in common actor-critic algorithms and b) a single-step reward function derived from an expert/agent classifier. We show that our method can extend the current imitation framework to new settings, including continuous-to-discrete action imitation and primitive-to-macro action imitation.

On-Line Learning of Linear Dynamical Systems: Exponential Forgetting in Kalman Filters

Sep 16, 2018 · Mark Kozdoba, Jakub Marecek, Tigran Tchrakian, Shie Mannor

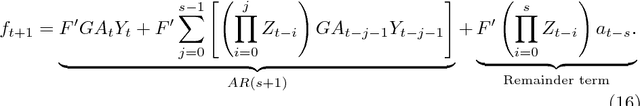

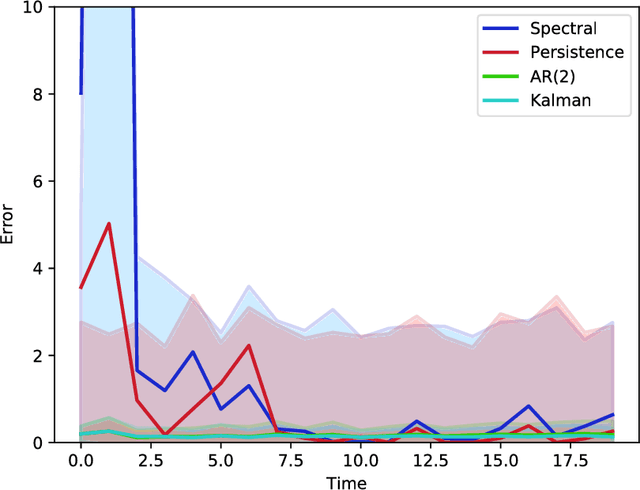

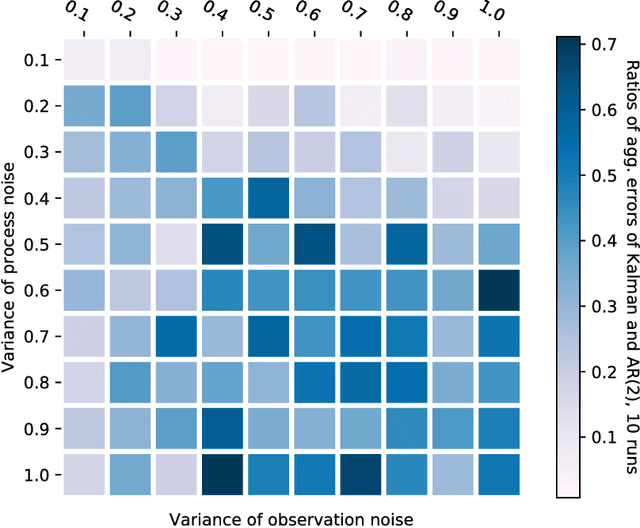

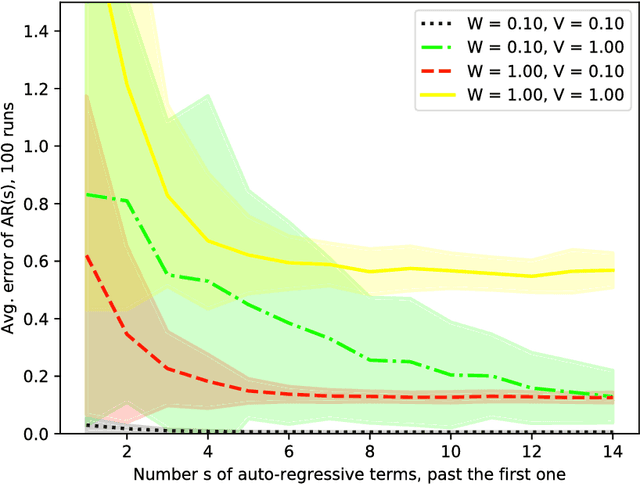

The Kalman filter is a key tool for time-series forecasting and analysis. We show that the dependence of a Kalman filter prediction on the past decays exponentially whenever the process noise is non-degenerate. Therefore, the Kalman filter may be approximated by regression on a few recent observations. Surprisingly, we also show that having some process noise is essential for the exponential decay. With no process noise, it may happen that the forecast depends on all of the past uniformly, which makes forecasting more difficult. Based on this insight, we devise an on-line algorithm for improper learning of a linear dynamical system (LDS), which considers only a few most recent observations. We use our decay results to provide the first regret bounds w.r.t. Kalman filters for learning an LDS. That is, we compare the results of our algorithm to the best, in hindsight, Kalman filter for a given signal. Also, the algorithm is practical: its per-update run-time is linear in the regression depth.
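The forgetting behaviour described in this abstract can be seen in a scalar toy model. The sketch below is an illustration under assumptions that are not from the paper: a one-dimensional system x_{t+1} = a·x_t + w_t, y_t = x_t + v_t with process-noise variance `q` and observation-noise variance `r`, and hypothetical helper names. It computes how much weight the one-step forecast places on each past observation.

```python
def kalman_gains(a, q, r, p0, steps):
    """Kalman gains for the scalar system x' = a*x + w, y = x + v."""
    gains = []
    p = p0  # variance of the current one-step-ahead state prediction
    for _ in range(steps):
        k = p / (p + r)                  # Kalman gain for this observation
        gains.append(k)
        p = a * a * (1.0 - k) * p + q    # variance of the next prediction
    return gains


def observation_weights(a, gains):
    """Weight of each observation y_0..y_{T-1} in the forecast of x_T.

    Uses the recursion xhat_{t+1} = a*(1 - K_t)*xhat_t + a*K_t*y_t.
    """
    weights = [0.0] * len(gains)
    carry = 1.0  # product of a*(1 - K_s) over all later steps s
    for t in range(len(gains) - 1, -1, -1):
        weights[t] = carry * a * gains[t]
        carry *= a * (1.0 - gains[t])
    return weights


# With process noise, old observations are forgotten exponentially fast.
noisy = observation_weights(1.0, kalman_gains(1.0, q=1.0, r=1.0, p0=1.0, steps=30))
# Without process noise, every past observation gets the same weight.
noiseless = observation_weights(1.0, kalman_gains(1.0, q=0.0, r=1.0, p0=1.0, steps=30))
```

In this toy setting the `noisy` weights are concentrated on the last few observations, which is why a short regression window can stand in for the filter, while the `noiseless` weights are uniform over the whole history.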

Learn What Not to Learn: Action Elimination with Deep Reinforcement Learning

Sep 06, 2018 · Tom Zahavy, Matan Haroush, Nadav Merlis, Daniel J. Mankowitz, Shie Mannor

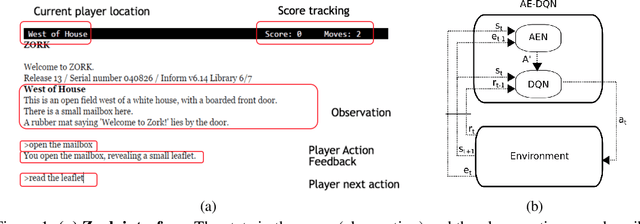

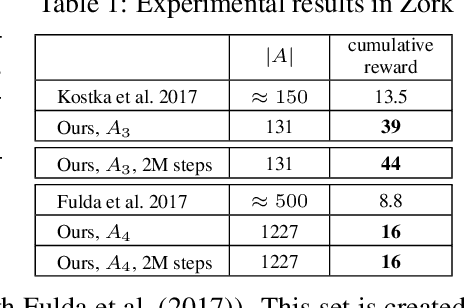

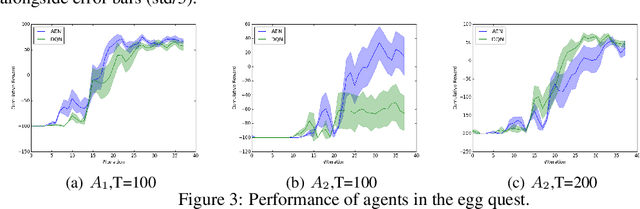

Learning how to act when there are many available actions in each state is a challenging task for Reinforcement Learning (RL) agents, especially when many of the actions are redundant or irrelevant. In such cases, it is sometimes easier to learn which actions not to take. In this work, we propose the Action-Elimination Deep Q-Network (AE-DQN) architecture that combines a Deep RL algorithm with an Action Elimination Network (AEN) that eliminates sub-optimal actions. The AEN is trained to predict invalid actions, supervised by an external elimination signal provided by the environment. Simulations demonstrate a considerable speedup and added robustness over vanilla DQN in text-based games with over a thousand discrete actions.
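The idea of acting only among non-eliminated actions can be sketched as follows. This is a simplified illustration, not the AE-DQN architecture itself: the function name, the fixed `threshold`, and the fallback when every action is eliminated are all assumptions made for the example.

```python
def eliminate_and_act(q_values, elimination_scores, threshold=0.5):
    """Pick the greedy action among those not predicted to be invalid.

    q_values[a]           -- value estimate for action a (e.g. from a DQN)
    elimination_scores[a] -- predicted probability that action a is invalid
    threshold             -- hypothetical cut-off above which a is eliminated
    """
    allowed = [a for a, score in enumerate(elimination_scores) if score < threshold]
    if not allowed:
        # Assumed fallback: if everything is eliminated, consider all actions.
        allowed = list(range(len(q_values)))
    return max(allowed, key=lambda a: q_values[a])


# Action 1 has the highest value estimate but is predicted invalid,
# so the agent picks action 2 instead.
action = eliminate_and_act([1.0, 5.0, 3.0], [0.1, 0.9, 0.2])
```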

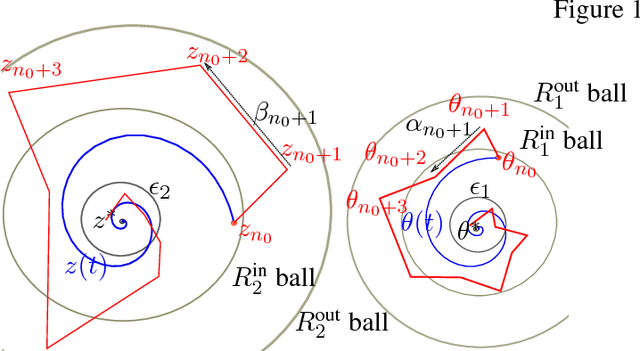

How to Combine Tree-Search Methods in Reinforcement Learning

Sep 06, 2018 · Yonathan Efroni, Gal Dalal, Bruno Scherrer, Shie Mannor

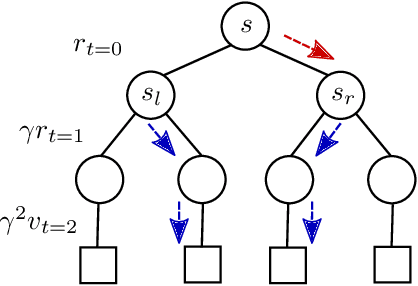

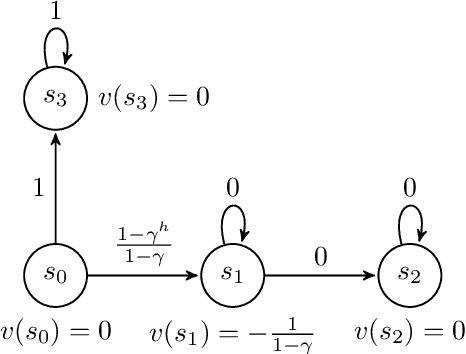

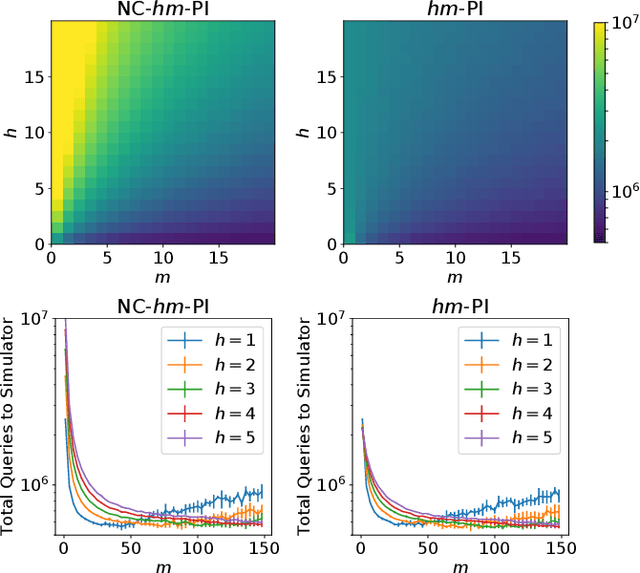

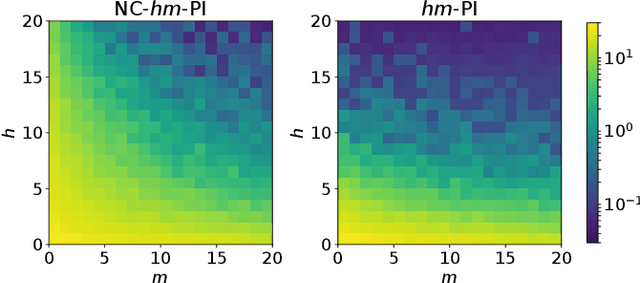

Finite-horizon lookahead policies are abundantly used in Reinforcement Learning and demonstrate impressive empirical success. Usually, the lookahead policies are implemented with specific planning methods such as Monte Carlo Tree Search (e.g. in AlphaZero). Referring to the planning problem as tree search, a reasonable practice in these implementations is to back up the value only at the leaves while the information obtained at the root is not leveraged other than for updating the policy. Here, we question the potency of this approach. Namely, the latter procedure is non-contractive in general, and its convergence is not guaranteed. Our proposed enhancement is straightforward and simple: use the return from the optimal tree path to back up the values at the descendants of the root. This leads to a $\gamma^h$-contracting procedure, where $\gamma$ is the discount factor and $h$ is the tree depth. To establish our results, we first introduce a notion called multiple-step greedy consistency. We then provide convergence rates for two algorithmic instantiations of the above enhancement in the presence of noise injected into both the tree search stage and value estimation stage.

Multi-user Communication Networks: A Coordinated Multi-armed Bandit Approach

Aug 14, 2018 · Orly Avner, Shie Mannor

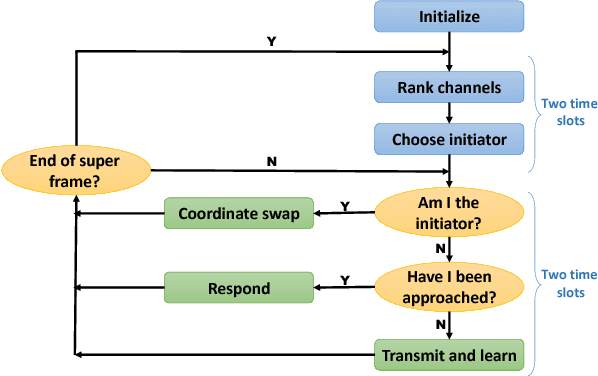

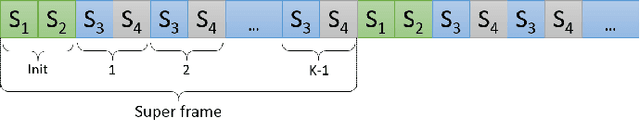

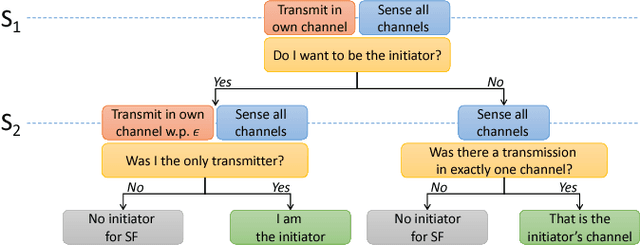

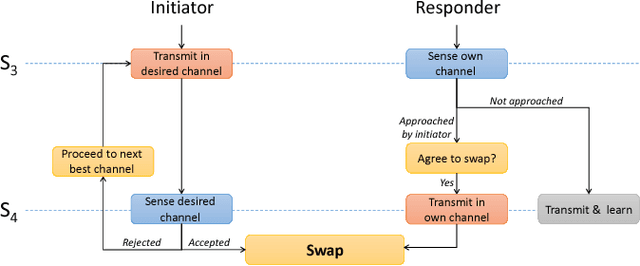

Communication networks shared by many users are a widespread challenge nowadays. In this paper we address several aspects of this challenge simultaneously: learning unknown stochastic network characteristics and sharing resources with other users, while keeping coordination overhead to a minimum. The proposed solution combines Multi-Armed Bandit learning with a lightweight signalling-based coordination scheme, and ensures convergence to a stable allocation of resources. Our work considers single-user level algorithms for two scenarios: an unknown fixed number of users, and a dynamic number of users. Analytic performance guarantees, proving convergence to stable marriage configurations, are presented for both setups. The algorithms are designed based on a system-wide perspective, rather than focusing on single user welfare. Thus, maximal resource utilization is ensured. An extensive experimental analysis covers convergence to a stable configuration as well as reward maximization. Experiments are carried out over a wide range of setups, demonstrating the advantages of our approach over existing state-of-the-art methods.

Beyond the One Step Greedy Approach in Reinforcement Learning

Jul 30, 2018 · Yonathan Efroni, Gal Dalal, Bruno Scherrer, Shie Mannor

The famous Policy Iteration algorithm alternates between policy improvement and policy evaluation. Implementations of this algorithm with several variants of the latter evaluation stage, e.g., $n$-step and trace-based returns, have been analyzed in previous works. However, the case of multiple-step lookahead policy improvement, despite the recent increase in empirical evidence of its strength, has to our knowledge not been carefully analyzed yet. In this work, we introduce the first such analysis. Namely, we formulate variants of multiple-step policy improvement, derive new algorithms using these definitions and prove their convergence. Moreover, we show that recent prominent Reinforcement Learning algorithms are, in fact, instances of our framework. We thus shed light on their empirical success and give a recipe for deriving new algorithms for future study.
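To make multiple-step lookahead improvement concrete, here is a minimal sketch of an $h$-step greedy action choice: the first action of an optimal $h$-step plan, with the current value estimate used at the leaves. The three-state MDP and the function names are hypothetical and chosen only so that one-step and two-step greedy choices differ.

```python
def q_value(s, a, v, P, R, gamma):
    """One-step lookahead value of action a in state s under leaf values v."""
    return R[s][a] + gamma * sum(p * v[s2] for p, s2 in P[s][a])


def backup(v, P, R, gamma):
    """One Bellman optimality backup over all states."""
    return [
        max(q_value(s, a, v, P, R, gamma) for a in range(len(P[s])))
        for s in range(len(P))
    ]


def h_greedy_action(s, v, P, R, gamma, h):
    """First action of an optimal h-step plan from s, with v at the leaves."""
    for _ in range(h - 1):
        v = backup(v, P, R, gamma)
    return max(range(len(P[s])), key=lambda a: q_value(s, a, v, P, R, gamma))


# State 0: action 0 pays 1 now; action 1 pays 0 now but leads to state 1,
# where a reward of 10 is available. State 2 is absorbing with zero reward.
P = [[[(1.0, 2)], [(1.0, 1)]],
     [[(1.0, 2)]],
     [[(1.0, 2)]]]
R = [[1.0, 0.0],
     [10.0],
     [0.0]]
v0 = [0.0, 0.0, 0.0]

one_step = h_greedy_action(0, v0, P, R, gamma=0.9, h=1)  # myopic choice
two_step = h_greedy_action(0, v0, P, R, gamma=0.9, h=2)  # looks one step further
```

With a zero value estimate, the one-step greedy choice takes the immediate reward, while the two-step greedy choice accepts a delay to reach the larger reward.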

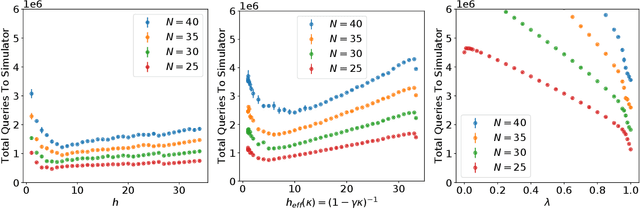

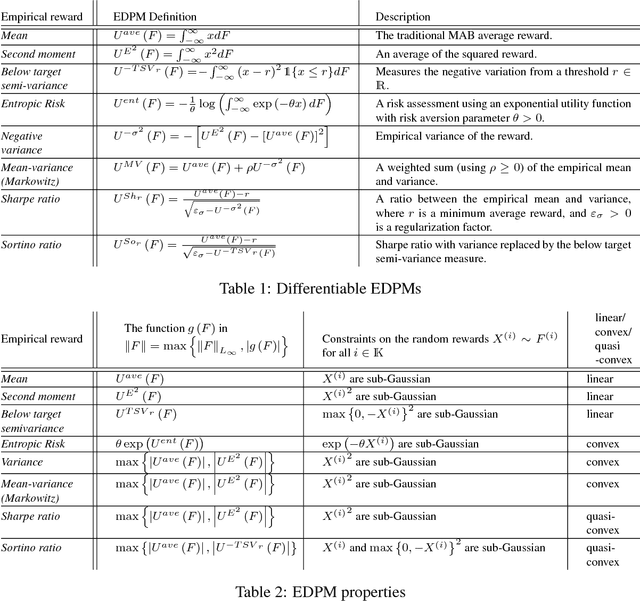

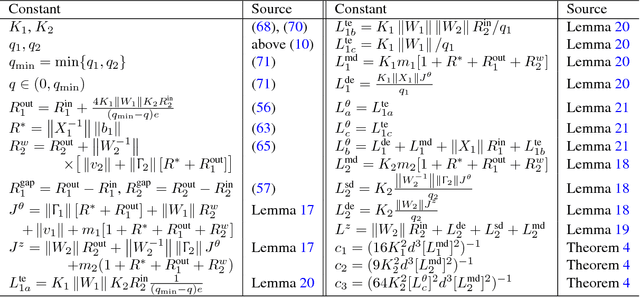

A General Approach to Multi-Armed Bandits Under Risk Criteria

Jun 04, 2018 · Asaf Cassel, Shie Mannor, Assaf Zeevi

Different risk-related criteria have received recent interest in learning problems, where typically each case is treated in a customized manner. In this paper we provide a more systematic approach to analyzing such risk criteria within a stochastic multi-armed bandit (MAB) formulation. We identify a set of general conditions that yield a simple characterization of the oracle rule (which serves as the regret benchmark), and facilitate the design of upper confidence bound (UCB) learning policies. The conditions are derived from problem primitives, primarily focusing on the relation between the arm reward distributions and the (risk criteria) performance metric. Among other things, the work highlights some (possibly non-intuitive) subtleties that differentiate various criteria in conjunction with statistical properties of the arms. Our main findings are illustrated on several widely used objectives such as conditional value-at-risk, mean-variance, Sharpe-ratio, and more.
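The general shape of a UCB policy under a risk criterion can be sketched by replacing the empirical mean in the index with an empirical estimate of the criterion. The example below uses an empirical conditional value-at-risk (the average of the worst `alpha` fraction of observed rewards). The bonus term, the constant `c`, and all function names are illustrative assumptions; they are not the confidence bounds derived in the paper.

```python
import math


def empirical_cvar(rewards, alpha):
    """Average of the worst alpha-fraction of observed rewards."""
    k = max(1, math.ceil(alpha * len(rewards)))
    worst = sorted(rewards)[:k]
    return sum(worst) / k


def ucb_index(rewards, t, criterion, c=1.0):
    """Empirical criterion plus an assumed exploration bonus."""
    n = len(rewards)
    return criterion(rewards) + c * math.sqrt(math.log(t) / n)


def choose_arm(histories, t, criterion, c=1.0):
    """Pull each arm once, then pick the arm with the largest index."""
    for arm, rewards in enumerate(histories):
        if not rewards:
            return arm
    return max(
        range(len(histories)),
        key=lambda arm: ucb_index(histories[arm], t, criterion, c),
    )


# Arm 0 has the higher mean but a poor lower tail; arm 1 is safe.
histories = [[0.0, 10.0, 0.0, 10.0], [4.0, 4.0, 4.0, 4.0]]
by_cvar = choose_arm(histories, t=8, criterion=lambda r: empirical_cvar(r, 0.5))
by_mean = choose_arm(histories, t=8, criterion=lambda r: sum(r) / len(r))
```

The two criteria pick different arms on the same data, which is the kind of difference between objectives the abstract refers to.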

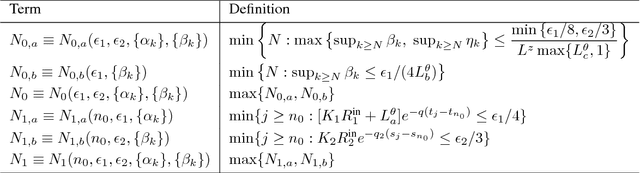

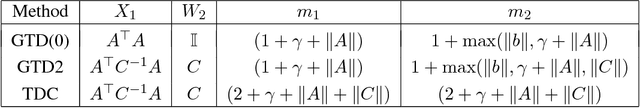

Finite Sample Analysis of Two-Timescale Stochastic Approximation with Applications to Reinforcement Learning

Jun 04, 2018 · Gal Dalal, Balazs Szorenyi, Gugan Thoppe, Shie Mannor

Two-timescale Stochastic Approximation (SA) algorithms are widely used in Reinforcement Learning (RL). Their iterates have two parts that are updated using distinct stepsizes. In this work, we develop a novel recipe for their finite sample analysis. Using this, we provide a concentration bound, which is the first such result for a two-timescale SA. The type of bound we obtain is known as 'lock-in probability'. We also introduce a new projection scheme, in which the time between successive projections increases exponentially. This scheme allows one to elegantly transform a lock-in probability into a convergence rate result for projected two-timescale SA. From this latter result, we then extract key insights on stepsize selection. As an application, we finally obtain convergence rates for the projected two-timescale RL algorithms GTD(0), GTD2, and TDC.
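For readers unfamiliar with the two-timescale structure, the sketch below shows one update of TDC with linear function approximation: the main weights `theta` move with the slower stepsize `alpha`, and the auxiliary weights `w` with the faster stepsize `beta`. It is an unprojected illustration; the projection scheme from the abstract is omitted, and the stepsize exponents in `stepsizes` are hypothetical values, not the paper's recommendations.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(u, v))


def tdc_step(theta, w, phi, phi_next, reward, gamma, alpha, beta):
    """One TDC update for a transition with features phi -> phi_next."""
    delta = reward + gamma * dot(theta, phi_next) - dot(theta, phi)  # TD error
    correction = dot(phi, w)  # auxiliary estimate used for the gradient correction
    new_theta = [
        th + alpha * (delta * f - gamma * correction * fn)
        for th, f, fn in zip(theta, phi, phi_next)
    ]
    new_w = [wi + beta * (delta - correction) * f for wi, f in zip(w, phi)]
    return new_theta, new_w


def stepsizes(n, slow_exp=1.0, fast_exp=0.6):
    """Assumed schedules: alpha_n / beta_n -> 0, so theta is the slow iterate."""
    return 1.0 / (n + 1) ** slow_exp, 1.0 / (n + 1) ** fast_exp


theta, w = tdc_step(
    theta=[0.0, 0.0], w=[0.0, 0.0],
    phi=[1.0, 0.0], phi_next=[0.0, 1.0],
    reward=1.0, gamma=0.9, alpha=0.1, beta=0.5,
)
```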

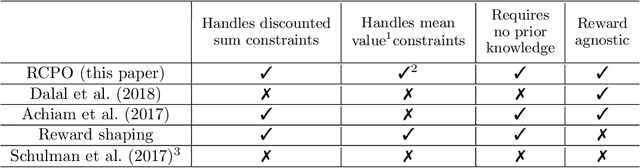

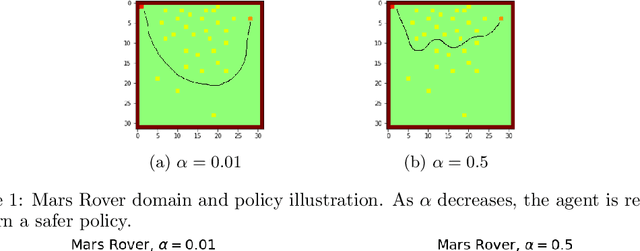

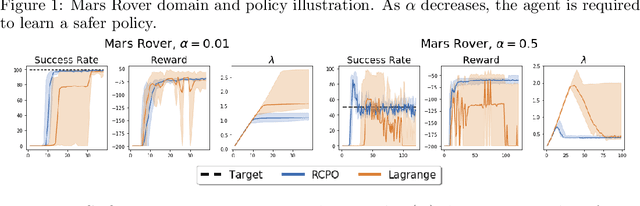

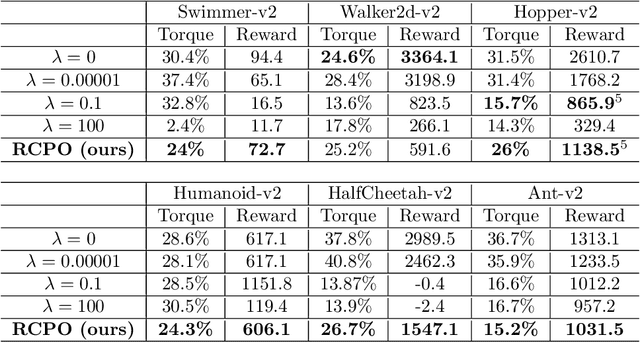

Reward Constrained Policy Optimization

May 28, 2018 · Chen Tessler, Daniel J. Mankowitz, Shie Mannor

Teaching agents to perform tasks using Reinforcement Learning is no easy feat. As the goal of reinforcement learning agents is to maximize the accumulated reward, they often find loopholes and misspecifications in the reward signal which lead to unwanted behavior. To overcome this, regularization is often employed through the technique of reward shaping: the agent is provided an additional weighted reward signal, meant to lead it towards a desired behavior. The weight is considered a hyper-parameter and is selected through trial and error, a time-consuming and computationally intensive task. In this work, we present a novel multi-timescale approach for constrained policy optimization, called 'Reward Constrained Policy Optimization' (RCPO), which enables policy regularization without the use of reward shaping. We prove the convergence of our approach and provide empirical evidence of its ability to train constraint-satisfying policies.
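The contrast with a hand-tuned shaping weight can be sketched with a Lagrangian-style update: the penalty weight is adapted from the observed constraint violation rather than chosen by trial and error. The function names, the projection onto non-negative values, and the numbers below are assumptions for illustration; the actor and critic updates that run on faster timescales are left out.

```python
def penalized_reward(reward, cost, lam):
    """Reward seen by the policy: task reward minus a weighted constraint cost."""
    return reward - lam * cost


def update_multiplier(lam, avg_cost, threshold, lr):
    """Slow-timescale update of the penalty weight.

    The weight grows while the average constraint cost exceeds the threshold
    and shrinks (never below zero) once the constraint is satisfied.
    """
    return max(0.0, lam + lr * (avg_cost - threshold))


lam = 0.0
lam = update_multiplier(lam, avg_cost=1.5, threshold=1.0, lr=0.1)   # violated: weight increases
shaped = penalized_reward(reward=2.0, cost=1.0, lam=lam)
relaxed = update_multiplier(lam, avg_cost=0.0, threshold=1.0, lr=0.1)  # satisfied: weight decreases
```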

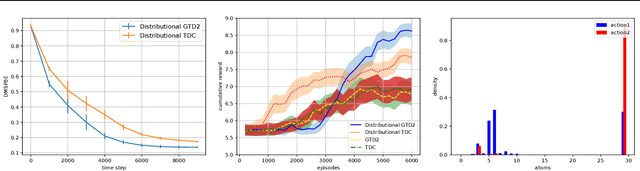

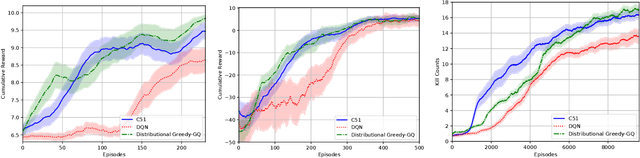

Nonlinear Distributional Gradient Temporal-Difference Learning

May 20, 2018 · Chao Qu, Shie Mannor, Huan Xu

We devise a distributional variant of gradient temporal-difference (TD) learning. Distributional reinforcement learning has been demonstrated to outperform the regular one in a recent study (Bellemare et al., 2017). In our paper, we design two new algorithms called distributional GTD2 and distributional TDC using the Cramér distance on the distributional version of the Bellman error objective function, which inherits advantages of both the nonlinear gradient TD algorithms and the distributional RL approach. We prove the asymptotic almost-sure convergence to a local optimal solution for general smooth function approximators, which include neural networks that have been widely used in recent studies to solve real-life RL problems. In each step, the computational complexity is linear w.r.t. the number of parameters of the function approximator, so the algorithms can be implemented efficiently for neural networks.
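For intuition about the distance used in the objective, the sketch below computes the squared Cramér distance between two categorical distributions on a shared, evenly spaced support, that is, the integral of the squared difference between their cumulative distribution functions. The function name and the `spacing` parameter are assumptions for the example, and the paper's full objective is not reproduced.

```python
from itertools import accumulate


def squared_cramer_distance(p, q, spacing=1.0):
    """Squared Cramer distance between two categorical distributions.

    p and q are probability vectors over the same evenly spaced atoms;
    spacing is the gap between neighbouring atoms.
    """
    cdf_p = list(accumulate(p))
    cdf_q = list(accumulate(q))
    return spacing * sum((fp - fq) ** 2 for fp, fq in zip(cdf_p, cdf_q))


near = squared_cramer_distance([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])
far = squared_cramer_distance([1.0, 0.0, 0.0], [0.0, 0.0, 1.0])
```

Unlike a divergence that only compares probabilities atom by atom, this distance grows as probability mass moves further along the support, so `far` is larger than `near`.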
