Sergi Molina Mellado

Collection and Evaluation of a Long-Term 4D Agri-Robotic Dataset

Nov 25, 2022

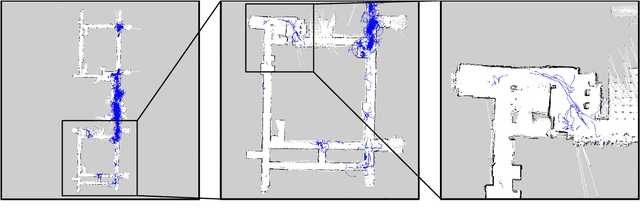

Abstract: Long-term autonomy is one of the most sought-after capabilities in a robot. The ability to perform the same task repeatedly over a long time horizon, with a high standard of reproducibility and robustness, is appealing. Long-term autonomy can play a crucial role in the adoption of robotic systems for precision agriculture, for example in assisting humans in monitoring and harvesting crops in a large orchard. With this goal in mind, we report an ongoing effort in the long-term deployment of an autonomous mobile robot in a vineyard for data collection across multiple months. The main aim is to collect data from the same area at different points in time, in order to analyse the impact of environmental changes on the mapping and localisation tasks. In this work, we present a map-based localisation study using four data sessions. We identify expected failures when the pre-built map visually differs from the environment's current appearance, and we preview LTS-Net, a solution aimed at extracting stable temporal features to improve long-term 4D localisation results.

3DOF Pedestrian Trajectory Prediction Learned from Long-Term Autonomous Mobile Robot Deployment Data

Sep 30, 2017

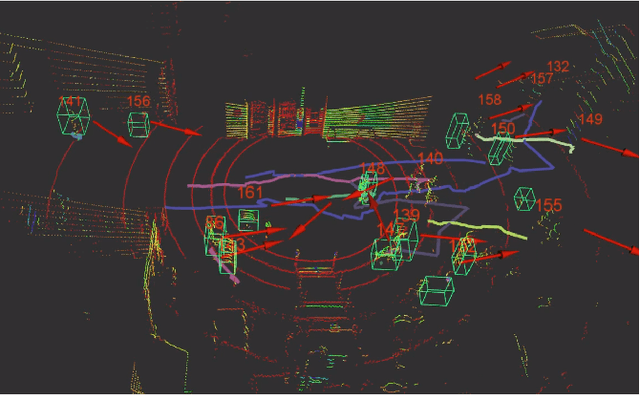

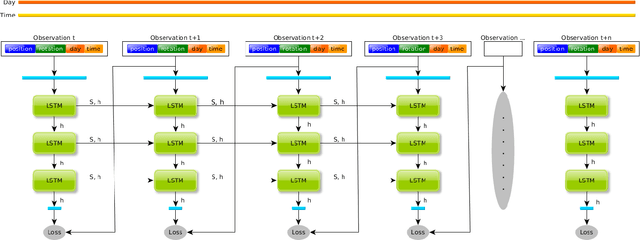

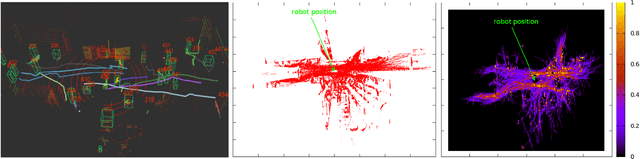

Abstract: This paper presents a novel 3DOF pedestrian trajectory prediction approach for autonomous mobile service robots. While most previously reported methods are based on learning 2D positions in monocular camera images, our approach uses range-finder sensors to learn and predict 3DOF pose trajectories (i.e. 2D position plus 1D rotation within the world coordinate system). Our approach, T-Pose-LSTM (Temporal 3DOF-Pose Long-Short-Term Memory), is trained using long-term data from real-world robot deployments and aims to learn context-dependent (environment- and time-specific) human activities. It takes long-term temporal information (i.e. date and time) together with short-term pose observations as input. A sequence-to-sequence LSTM encoder-decoder is trained, which encodes the observations with an LSTM and then decodes them into predictions. Once deployed, it can perform on-the-fly prediction in real time. Instead of using manually annotated data, we rely on a robust human detection, tracking and SLAM system, which provides us with examples in a global coordinate system. We validate the approach using more than 15K pedestrian trajectories recorded in a care home environment over a period of three months. The experiment shows that the proposed T-Pose-LSTM model improves on the state-of-the-art 2D-based method for human trajectory prediction in long-term mobile robot deployments.
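To make the input described in the abstract more concrete, the following is a minimal sketch of how a 3DOF pose (x, y, rotation) might be combined with date and time information into one feature vector per observation. The abstract does not say how the temporal information is encoded, so the sine/cosine encoding of time of day and day of week used here is an assumption, and the names `wrap_angle`, `cyclic` and `build_features` are hypothetical helpers, not part of T-Pose-LSTM.

```python
import math
from datetime import datetime
from typing import List, Tuple

# A 3DOF pose: 2D position plus 1D rotation in the world frame.
Pose = Tuple[float, float, float]  # (x, y, theta), theta in radians


def wrap_angle(theta: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (theta + math.pi) % (2.0 * math.pi) - math.pi


def cyclic(value: float, period: float) -> Tuple[float, float]:
    """Encode a periodic quantity as (sin, cos) so both ends of the period meet.

    Assumption: this encoding is a common choice, not one taken from the paper.
    """
    angle = 2.0 * math.pi * value / period
    return math.sin(angle), math.cos(angle)


def build_features(poses: List[Pose], stamps: List[datetime]) -> List[List[float]]:
    """Build one 7-element feature vector per observed pose.

    Layout: [x, y, theta, day_sin, day_cos, week_sin, week_cos].
    """
    if len(poses) != len(stamps):
        raise ValueError("poses and stamps must have the same length")
    features = []
    for (x, y, theta), stamp in zip(poses, stamps):
        # Fractional hour of the day, e.g. 6.5 for 06:30.
        hour = stamp.hour + stamp.minute / 60.0 + stamp.second / 3600.0
        day_sin, day_cos = cyclic(hour, 24.0)
        # Fractional day of the week, with Monday as 0.
        week_sin, week_cos = cyclic(stamp.weekday() + hour / 24.0, 7.0)
        features.append(
            [x, y, wrap_angle(theta), day_sin, day_cos, week_sin, week_cos]
        )
    return features
```

A sequence of such vectors could then be fed to a sequence-to-sequence encoder, with the decoder emitting future (x, y, theta) poses; that part is left out here because the abstract does not give the network details.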
