Rui Tan

PriMask: Cascadable and Collusion-Resilient Data Masking for Mobile Cloud Inference

Nov 12, 2022

Abstract:Mobile cloud offloading is indispensable for inference tasks based on large-scale deep models. However, transmitting privacy-rich inference data to the cloud incurs concerns. This paper presents the design of a system called PriMask, in which the mobile device uses a secret small-scale neural network called MaskNet to mask the data before transmission. PriMask significantly weakens the cloud's capability to recover the data or extract certain private attributes. The MaskNet is cascadable in that the mobile can opt in to or out of its use seamlessly without any modifications to the cloud's inference service. Moreover, the mobiles use different MaskNets, such that the collusion between the cloud and some mobiles does not weaken the protection for other mobiles. We devise a split adversarial learning method to train a neural network that generates a new MaskNet quickly (within two seconds) at run time. We apply PriMask to three mobile sensing applications with diverse modalities and complexities, i.e., human activity recognition, urban environment crowdsensing, and driver behavior recognition. Results show PriMask's effectiveness in all three applications.

Indoor Smartphone SLAM with Learned Echoic Location Features

Oct 16, 2022

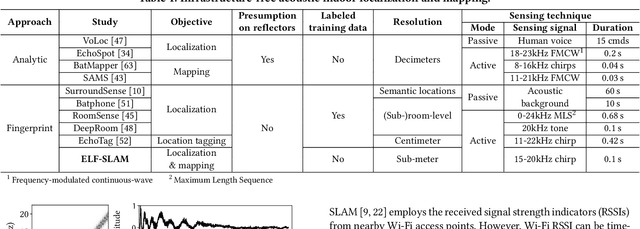

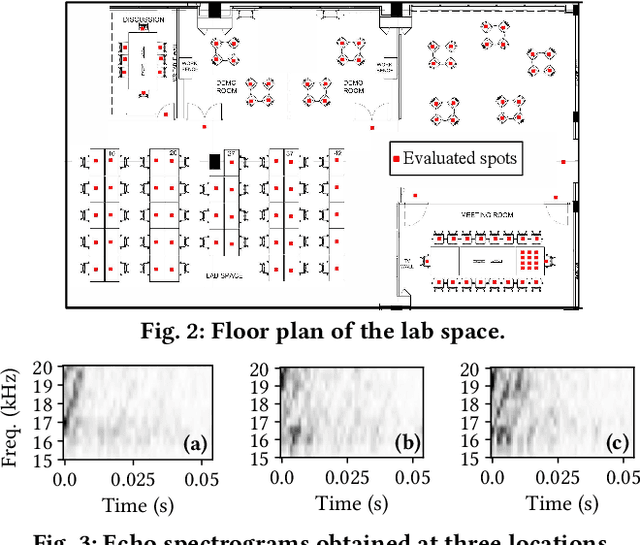

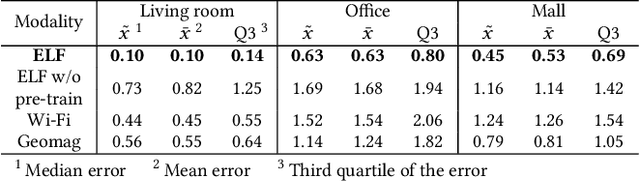

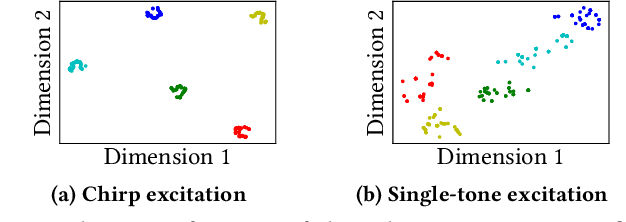

Abstract:Indoor self-localization is a highly demanded system function for smartphones. The current solutions based on inertial, radio frequency, and geomagnetic sensing may have degraded performance when their limiting factors take effect. In this paper, we present a new indoor simultaneous localization and mapping (SLAM) system that utilizes the smartphone's built-in audio hardware and inertial measurement unit (IMU). Our system uses a smartphone's loudspeaker to emit near-inaudible chirps and then the microphone to record the acoustic echoes from the indoor environment. Our profiling measurements show that the echoes carry location information with sub-meter granularity. To enable SLAM, we apply contrastive learning to construct an echoic location feature (ELF) extractor, such that the loop closures on the smartphone's trajectory can be accurately detected from the associated ELF trace. The detection results effectively regulate the IMU-based trajectory reconstruction. Extensive experiments show that our ELF-based SLAM achieves median localization errors of $0.1\,\text{m}$, $0.53\,\text{m}$, and $0.4\,\text{m}$ on the reconstructed trajectories in a living room, an office, and a shopping mall, and outperforms the Wi-Fi and geomagnetic SLAM systems.

Sardino: Ultra-Fast Dynamic Ensemble for Secure Visual Sensing at Mobile Edge

Apr 18, 2022

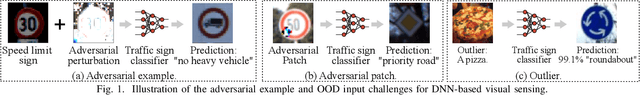

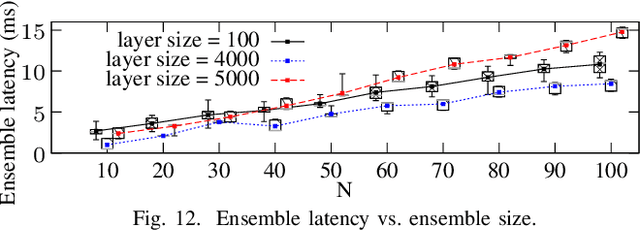

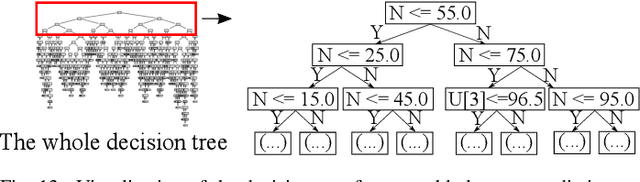

Abstract:Adversarial example attacks endanger mobile edge systems, such as vehicles and drones, that adopt deep neural networks for visual sensing. This paper presents Sardino, an active and dynamic defense approach that renews the inference ensemble at run time to develop security against the adaptive adversary who tries to exfiltrate the ensemble and construct the corresponding effective adversarial examples. By applying a consistency check and data fusion on the ensemble's predictions, Sardino can detect and thwart adversarial inputs. Compared with the training-based ensemble renewal, we use HyperNet to achieve one million times acceleration and per-frame ensemble renewal that presents the highest level of difficulty to the prerequisite exfiltration attacks. Moreover, the robustness of the renewed ensembles against adversarial examples is enhanced with adversarial learning for the HyperNet. We design a run-time planner that maximizes the ensemble size in favor of security while maintaining the processing frame rate. Beyond adversarial examples, Sardino can also address the issue of out-of-distribution inputs effectively. This paper presents an extensive evaluation of Sardino's performance in counteracting adversarial examples and applies it to build a real-time car-borne traffic sign recognition system. Live on-road tests show the built system's effectiveness in maintaining frame rate and detecting out-of-distribution inputs due to the false positives of a preceding YOLO-based traffic sign detector.
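
The consistency check and data fusion mentioned in the abstract can be illustrated with a minimal sketch. It is not Sardino's actual method: it assumes plain majority voting over the ensemble's predicted labels, and the function name `fuse_predictions` and the `min_agreement` threshold are hypothetical.

```python
from collections import Counter

def fuse_predictions(labels, min_agreement=0.8):
    """Fuse ensemble labels by majority vote (an assumed fusion rule).

    Returns (label, agreement). The label is None when the share of
    members voting for the top label falls below min_agreement, which
    this sketch treats as a suspicious (possibly adversarial) input.
    """
    top_label, count = Counter(labels).most_common(1)[0]
    agreement = count / len(labels)
    if agreement < min_agreement:
        return None, agreement
    return top_label, agreement

# Hypothetical per-model outputs for two frames.
clean = fuse_predictions(["stop", "stop", "stop", "stop", "stop"])
suspect = fuse_predictions(["stop", "stop", "yield", "speed_30", "stop"])
```

In this sketch a frame is accepted only when the ensemble members largely agree; the threshold trades false alarms against missed detections.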

PhyAug: Physics-Directed Data Augmentation for Deep Sensing Model Transfer in Cyber-Physical Systems

Apr 19, 2021

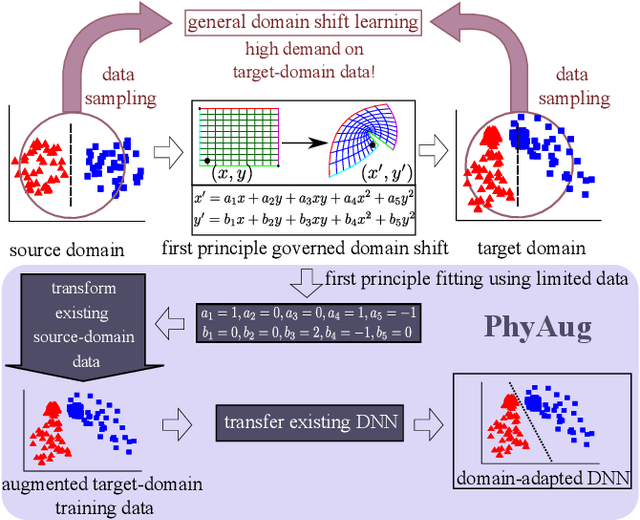

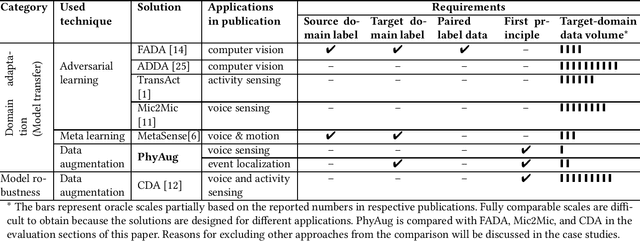

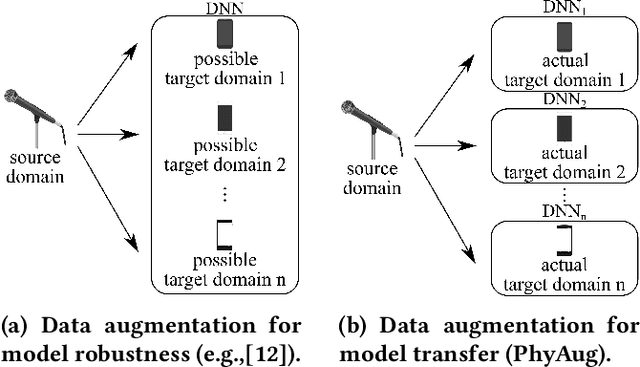

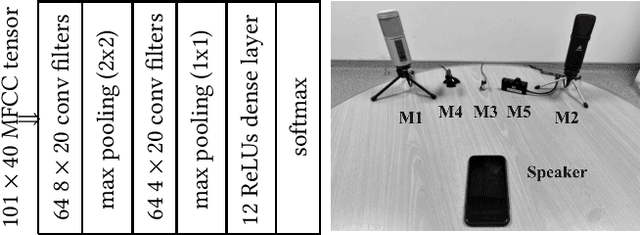

Abstract:Run-time domain shifts from training-phase domains are common in sensing systems designed with deep learning. The shifts can be caused by sensor characteristic variations and/or discrepancies between the design-phase model and the actual model of the sensed physical process. To address these issues, existing transfer learning techniques require substantial target-domain data and thus incur high post-deployment overhead. This paper proposes to exploit the first principle governing the domain shift to reduce the demand on target-domain data. Specifically, our proposed approach called PhyAug uses the first principle fitted with few labeled or unlabeled source/target-domain data pairs to transform the existing source-domain training data into augmented data for updating the deep neural networks. In two case studies of keyword spotting and DeepSpeech2-based automatic speech recognition, with 5-second unlabeled data collected from the target microphones, PhyAug recovers the recognition accuracy losses due to microphone characteristic variations by 37% to 72%. In a case study of seismic source localization with TDoA fingerprints, by exploiting the first principle of signal propagation in uneven media, PhyAug only requires 3% to 8% of labeled TDoA measurements required by the vanilla fingerprinting approach in achieving the same localization accuracy.

Origin-Aware Next Destination Recommendation with Personalized Preference Attention

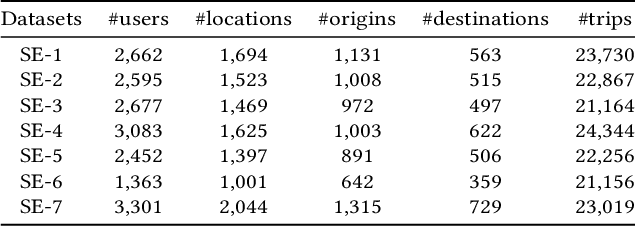

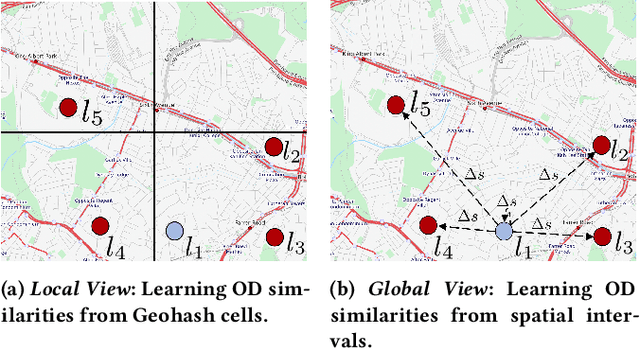

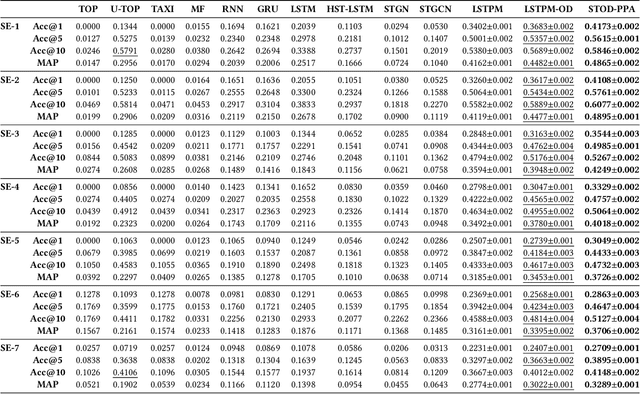

Jan 11, 2021

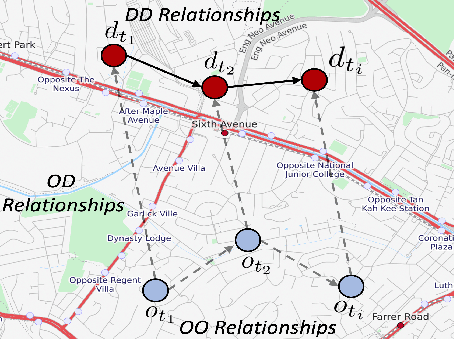

Abstract:Next destination recommendation is an important task in the transportation domain of taxi and ride-hailing services, where users are recommended with personalized destinations given their current origin location. However, recent recommendation works do not satisfy this origin-awareness property, and only consider learning from historical destination locations, without origin information. Thus, the resulting approaches are unable to learn and predict origin-aware recommendations based on the user's current location, leading to sub-optimal performance and poor real-world practicality. Hence, in this work, we study the origin-aware next destination recommendation task. We propose the Spatial-Temporal Origin-Destination Personalized Preference Attention (STOD-PPA) encoder-decoder model to learn origin-origin (OO), destination-destination (DD), and origin-destination (OD) relationships by first encoding both origin and destination sequences with spatial and temporal factors in local and global views, then decoding them through personalized preference attention to predict the next destination. Experimental results on seven real-world user trajectory taxi datasets show that our model significantly outperforms baseline and state-of-the-art methods.

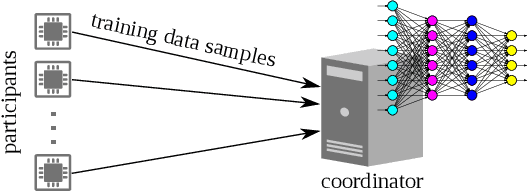

On Lightweight Privacy-Preserving Collaborative Learning for Internet of Things by Independent Random Projections

Dec 11, 2020

Abstract:The Internet of Things (IoT) will be a main data generation infrastructure for achieving better system intelligence. This paper considers the design and implementation of a practical privacy-preserving collaborative learning scheme, in which a curious learning coordinator trains a better machine learning model based on the data samples contributed by a number of IoT objects, while the confidentiality of the raw forms of the training data is protected against the coordinator. Existing distributed machine learning and data encryption approaches incur significant computation and communication overhead, rendering them ill-suited for resource-constrained IoT objects. We study an approach that applies independent random projection at each IoT object to obfuscate data and trains a deep neural network at the coordinator based on the projected data from the IoT objects. This approach introduces light computation overhead to the IoT objects and moves most workload to the coordinator that can have sufficient computing resources. Although the independent projections performed by the IoT objects address the potential collusion between the curious coordinator and some compromised IoT objects, they significantly increase the complexity of the projected data. In this paper, we leverage the superior learning capability of deep learning in capturing sophisticated patterns to maintain good learning performance. The extensive comparative evaluation shows that this approach outperforms other lightweight approaches that apply additive noisification for differential privacy and/or support vector machines for learning in the applications with light to moderate data pattern complexities.
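
As a rough illustration of the independent random projection described above, the sketch below gives each IoT object its own secret Gaussian matrix and multiplies every sample by it before upload. The dimensions, the seeds standing in for device secrets, and the names `make_projection` and `project` are assumptions for illustration, not details from the paper.

```python
import random

def make_projection(in_dim, out_dim, seed):
    """Draw a Gaussian random matrix (out_dim x in_dim) from a private seed."""
    rng = random.Random(seed)
    scale = 1.0 / out_dim ** 0.5
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(in_dim)]
            for _ in range(out_dim)]

def project(matrix, sample):
    """Obfuscate one sample by multiplying it with the device's matrix."""
    return [sum(w * x for w, x in zip(row, sample)) for row in matrix]

# Each device keeps its own matrix; the seeds are hypothetical device secrets.
devices = {
    "sensor_a": make_projection(in_dim=8, out_dim=4, seed=1),
    "sensor_b": make_projection(in_dim=8, out_dim=4, seed=2),
}
sample = [0.5] * 8
projected = {name: project(m, sample) for name, m in devices.items()}
```

Because the matrices are drawn independently, the same raw sample maps to different projected vectors on different devices, so a matrix leaked by one compromised device does not reveal how another device's data was projected.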

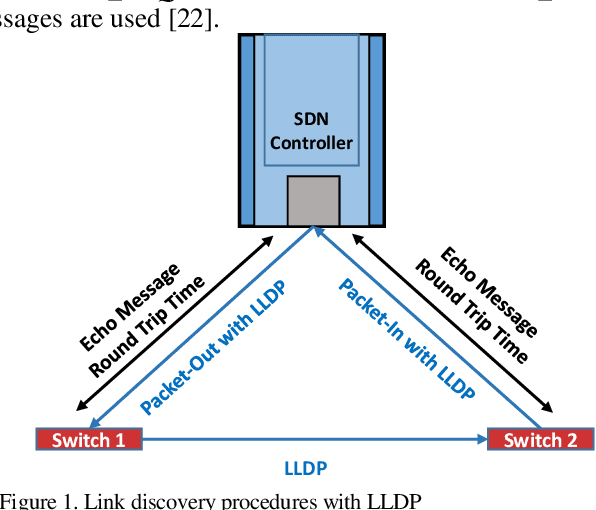

Managing Industrial Communication Delays with Software-Defined Networking

Apr 14, 2020

Abstract:Recent technological advances have fostered the development of complex industrial cyber-physical systems which demand real-time communication with delay guarantees. The consequences of delay requirement violation in such systems may become increasingly severe. In this paper, we propose a contract-based fault-resilient methodology which aims at managing the communication delays of real-time flows in industries. With this objective, we present a light-weight mechanism to estimate end-to-end delay in the network in which the clocks of the switches are not synchronized. The mechanism aims at providing a high level of accuracy with lower communication overhead. We then propose a contract-based framework using software-defined networking where the components are associated with delay contracts and a resilience manager. The proposed resilience management framework contains: (1) contracts which state guarantees about components' behaviors, (2) observers which are responsible for detecting contract failure (fault), (3) monitors to detect events such as run-time changes in the delay requirements and link failure, (4) control logic to take suitable decisions based on the type of the fault, (5) a resilience manager to decide response strategies containing the best course of action as per the control logic decision. Finally, we present a delay-aware path finding algorithm which is used to route/reroute the real-time flows to provide resiliency in the case of faults and to adapt to the changes in the network state. Performance of the proposed framework is evaluated with the Ryu SDN controller and Mininet network emulator.
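
The abstract does not spell out the delay-aware path finding algorithm, so the sketch below substitutes plain Dijkstra search over per-link delay estimates with a delay-bound check. The topology, the delay values, and the function name `min_delay_path` are hypothetical.

```python
import heapq

def min_delay_path(links, src, dst, max_delay=None):
    """Return (delay, path) for the minimum-delay path from src to dst.

    links maps an undirected link (u, v) to its estimated delay.
    Returns None if dst is unreachable or the best delay exceeds max_delay.
    """
    graph = {}
    for (u, v), d in links.items():
        graph.setdefault(u, []).append((v, d))
        graph.setdefault(v, []).append((u, d))
    best = {src: 0.0}
    heap = [(0.0, src, [src])]
    while heap:
        delay, node, path = heapq.heappop(heap)
        if node == dst:
            if max_delay is not None and delay > max_delay:
                return None
            return delay, path
        if delay > best.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, d in graph.get(node, []):
            cand = delay + d
            if cand < best.get(nxt, float("inf")):
                best[nxt] = cand
                heapq.heappush(heap, (cand, nxt, path + [nxt]))
    return None

# Hypothetical switch topology with link delays in milliseconds.
links = {("s1", "s2"): 2.0, ("s2", "s4"): 2.0,
         ("s1", "s3"): 1.0, ("s3", "s4"): 5.0}
primary = min_delay_path(links, "s1", "s4", max_delay=5.0)

# Simulate a failure of link s2-s4 and try to reroute under the same bound.
degraded = {k: v for k, v in links.items() if k != ("s2", "s4")}
backup = min_delay_path(degraded, "s1", "s4", max_delay=5.0)
```

Here the reroute attempt returns `None` because the only remaining path exceeds the 5 ms bound, which is the kind of event a resilience manager would have to handle with a different response strategy.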

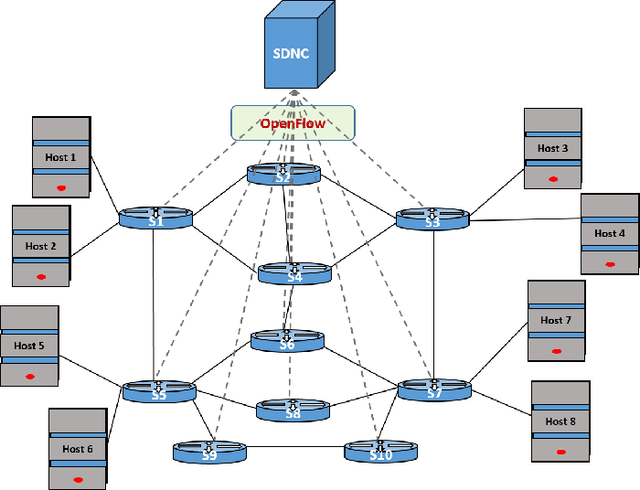

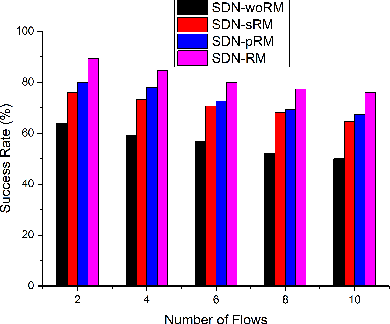

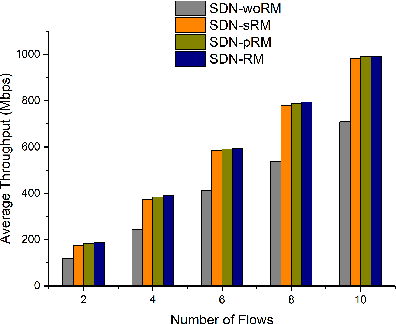

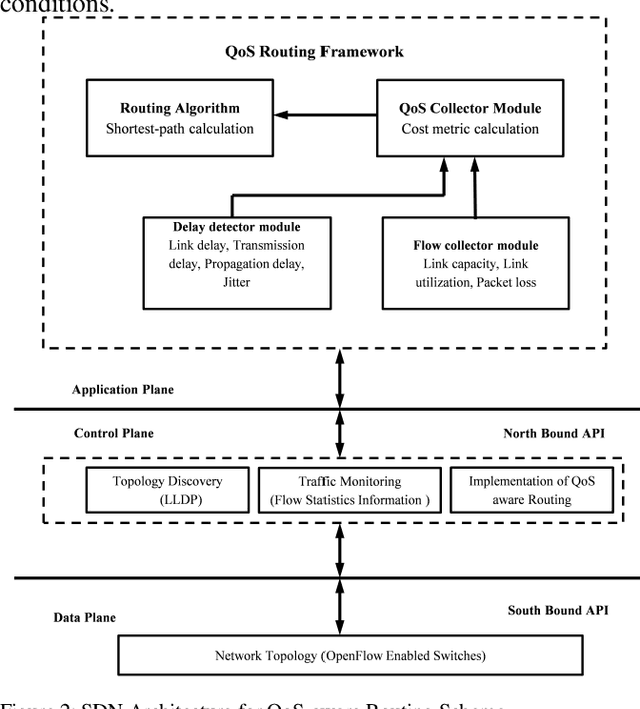

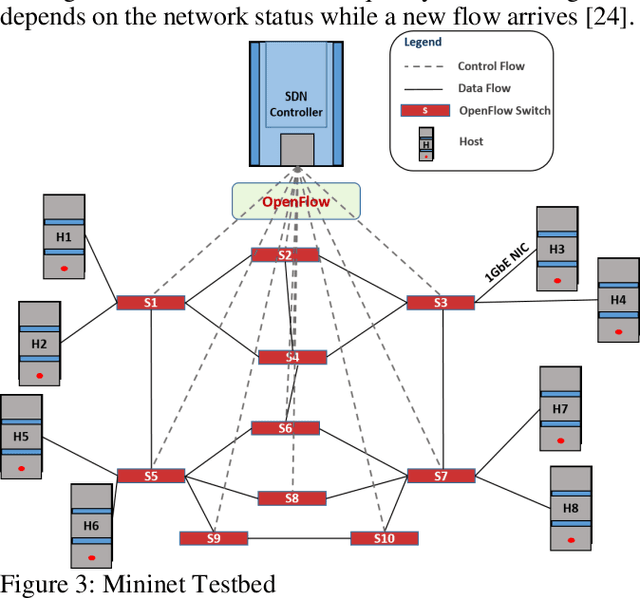

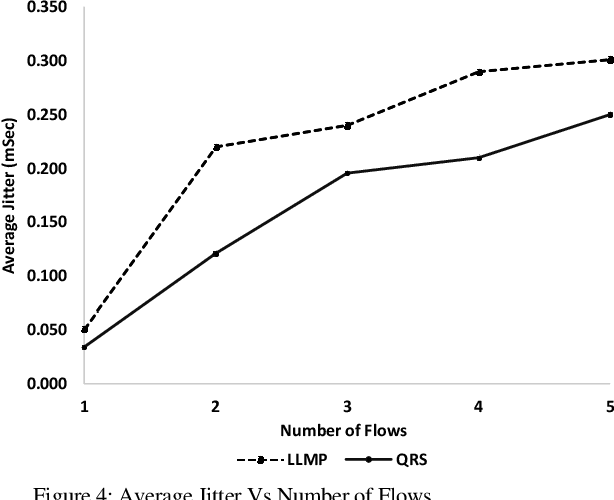

Real-time QoS Routing Scheme in SDN-based Robotic Cyber-Physical Systems

Apr 09, 2020

Abstract:Industrial cyber-physical systems (CPS) have gained enormous attention of manufacturers in recent years due to their automation and cost reduction capabilities in the fourth industrial revolution (Industry 4.0). Such an industrial network of connected cyber and physical components may consist of highly expensive components such as robots. In order to provide efficient communication in such a network, it is imperative to improve the Quality-of-Service (QoS). Software Defined Networking (SDN) has become a key technology in realizing QoS concepts in a dynamic fashion by allowing a centralized controller to program each flow with a unified interface. However, state-of-the-art solutions do not effectively use the centralized visibility of SDN to fulfill QoS requirements of such industrial networks. In this paper, we propose an SDN-based routing mechanism which attempts to improve QoS in robotic cyber-physical systems which have hard real-time requirements. We exploit the SDN capabilities to dynamically select paths based on current link parameters in order to improve the QoS in such delay-constrained networks. We verify the efficiency of the proposed approach on a realistic industrial OpenFlow topology. Our experiments reveal that the proposed approach significantly outperforms an existing delay-based routing mechanism in terms of average throughput, end-to-end delay and jitter. The proposed solution would prove to be significant for the industrial applications in robotic cyber-physical systems.

Lightweight and Unobtrusive Privacy Preservation for Remote Inference via Edge Data Obfuscation

Dec 20, 2019

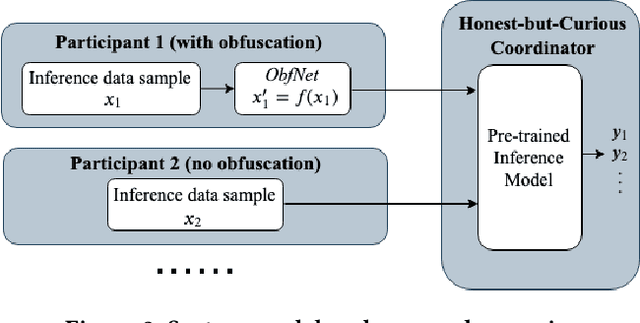

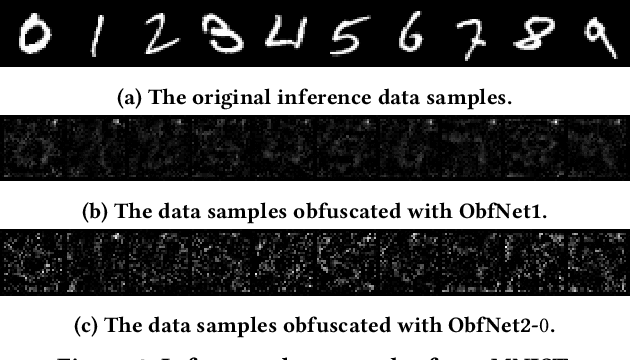

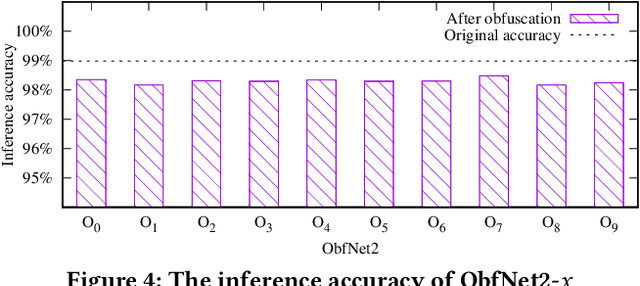

Abstract:The growing momentum of instrumenting the Internet of Things (IoT) with advanced machine learning techniques such as deep neural networks (DNNs) faces two practical challenges of limited compute power of edge devices and the need of protecting the confidentiality of the DNNs. The remote inference scheme that executes the DNNs on the server-class or cloud backend can address the above two challenges. However, it brings the concern of leaking the privacy of the IoT devices' users to the curious backend since the user-generated/related data is to be transmitted to the backend. This work develops a lightweight and unobtrusive approach to obfuscate the data before being transmitted to the backend for remote inference. In this approach, the edge device only needs to execute a small-scale neural network, incurring light compute overhead. Moreover, the edge device does not need to inform the backend on whether the data is obfuscated, making the protection unobtrusive. We apply the approach to three case studies of free spoken digit recognition, handwritten digit recognition, and American sign language recognition. The evaluation results obtained from the case studies show that our approach prevents the backend from obtaining the raw forms of the inference data while maintaining the DNN's inference accuracy at the backend.

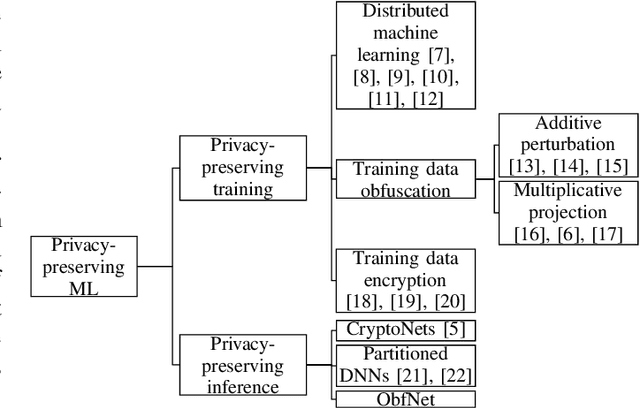

Challenges of Privacy-Preserving Machine Learning in IoT

Sep 21, 2019

Abstract:The Internet of Things (IoT) will be a main data generation infrastructure for achieving better system intelligence. However, the extensive data collection and processing in IoT also engender various privacy concerns. This paper provides a taxonomy of the existing privacy-preserving machine learning approaches developed in the context of cloud computing and discusses the challenges of applying them in the context of IoT. Moreover, we present a privacy-preserving inference approach that runs a lightweight neural network at IoT objects to obfuscate the data before transmission and a deep neural network in the cloud to classify the obfuscated data. Evaluation based on the MNIST dataset shows satisfactory performance.
