Richard Hawkins

Evaluating Metrics for Safety with LLM-as-Judges

Dec 17, 2025

Abstract: LLMs (Large Language Models) are increasingly used in text processing pipelines to intelligently respond to a variety of inputs and generation tasks. This raises the possibility of replacing human roles that bottleneck existing information flows, either due to insufficient staff or process complexity. However, LLMs make mistakes and some processing roles are safety critical, for example triaging post-operative care for patients based on hospital referral letters, or updating site access schedules in nuclear facilities for work crews. If we want to introduce LLMs into critical information flows that were previously handled by humans, how can we make them safe and reliable? Rather than make performative claims about augmented generation frameworks or graph-based techniques, this paper argues that the safety argument should focus on the type of evidence we get from evaluation points in LLM processes, particularly in frameworks that employ LLM-as-Judges (LaJ) evaluators. This paper argues that although we cannot get deterministic evaluations from many natural language processing tasks, by adopting a basket of weighted metrics it may be possible to lower the risk of errors within an evaluation, use context sensitivity to define error severity, and design confidence thresholds that trigger human review of critical LaJ judgments when concordance across evaluators is low.
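The idea of a weighted metric basket with a concordance-triggered human review can be illustrated with a minimal sketch. This is not the paper's method: the metric names, weights, severity levels, and the use of standard deviation as the disagreement measure are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean, pstdev

# Hypothetical metric weights; names and values are illustrative assumptions.
WEIGHTS = {"factuality": 0.5, "completeness": 0.3, "clarity": 0.2}

# Hypothetical limits on judge disagreement, stricter for severe contexts.
MAX_SPREAD = {"low": 0.30, "high": 0.10}


def weighted_score(metrics: dict[str, float]) -> float:
    """Combine one judge's metric scores (each in [0, 1]) into a weighted mean."""
    total = sum(WEIGHTS.values())
    return sum(WEIGHTS[name] * metrics[name] for name in WEIGHTS) / total


@dataclass
class Verdict:
    score: float              # mean weighted score across judges
    spread: float             # disagreement between judges (population std dev)
    needs_human_review: bool  # True when disagreement exceeds the severity limit


def evaluate(judgments: list[dict[str, float]], severity: str) -> Verdict:
    """Aggregate several judges' scores and flag low concordance for review."""
    scores = [weighted_score(j) for j in judgments]
    spread = pstdev(scores)
    return Verdict(mean(scores), spread, spread > MAX_SPREAD[severity])


if __name__ == "__main__":
    judges = [
        {"factuality": 0.9, "completeness": 0.8, "clarity": 0.7},
        {"factuality": 0.2, "completeness": 0.3, "clarity": 0.4},
    ]
    # The same disagreement is tolerated in a low-severity context
    # but escalated to a human in a high-severity one.
    print(evaluate(judges, "low").needs_human_review)
    print(evaluate(judges, "high").needs_human_review)
```

In this sketch the severity of the context only changes how much disagreement is tolerated before escalation; a real system would also need to justify the weights and thresholds as part of the safety argument.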

Robustness Requirement Coverage using a Situation Coverage Approach for Vision-based AI Systems

Jul 17, 2025

Abstract: AI-based robots and vehicles are expected to operate safely in complex and dynamic environments, even in the presence of component degradation. In such systems, perception relies on sensors such as cameras to capture environmental data, which is then processed by AI models to support decision-making. However, degradation in sensor performance directly impacts input data quality and can impair AI inference. Specifying safety requirements for all possible sensor degradation scenarios leads to unmanageable complexity and inevitable gaps. In this position paper, we present a novel framework that integrates camera noise factor identification with situation coverage analysis to systematically elicit robustness-related safety requirements for AI-based perception systems. We focus specifically on camera degradation in the automotive domain. Building on an existing framework for identifying degradation modes, we propose involving domain, sensor, and safety experts, and incorporating Operational Design Domain specifications to extend the degradation model by incorporating noise factors relevant to AI performance. Situation coverage analysis is then applied to identify representative operational contexts. This work marks an initial step toward integrating noise factor analysis and situational coverage to support principled formulation and completeness assessment of robustness requirements for camera-based AI perception.

Learning Run-time Safety Monitors for Machine Learning Components

Jun 23, 2024

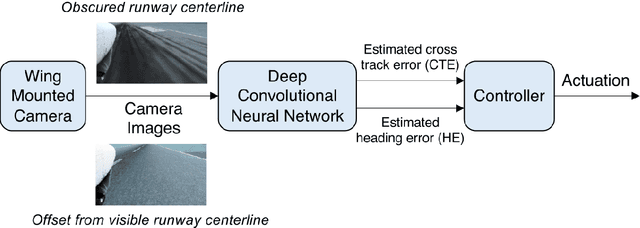

Abstract: For machine learning components used as part of autonomous systems (AS) in carrying out critical tasks, it is crucial that assurance of the models can be maintained in the face of post-deployment changes (such as changes in the operating environment of the system). A critical part of this is being able to monitor when the performance of the model at runtime (as a result of changes) poses a safety risk to the system. This is a particularly difficult challenge when ground truth is unavailable at runtime. In this paper we introduce a process for creating safety monitors for ML components through the use of degraded datasets and machine learning. The safety monitor that is created is deployed to the AS in parallel to the ML component to provide a prediction of the safety risk associated with the model output. We demonstrate the viability of our approach through some initial experiments using publicly available speed sign datasets.

Operationalizing Assurance Cases for Data Scientists: A Showcase of Concepts and Tooling in the Context of Test Data Quality for Machine Learning

Dec 08, 2023

Abstract: Assurance Cases (ACs) are an established approach in safety engineering to argue quality claims in a structured way. In the context of quality assurance for Machine Learning (ML)-based software components, ACs are also being discussed and appear promising. Tools for operationalizing ACs do exist, yet mainly focus on supporting safety engineers on the system level. However, assuring the quality of an ML component within the system is commonly the responsibility of data scientists, who are usually less familiar with these tools. To address this gap, we propose a framework to support the operationalization of ACs for ML components based on technologies that data scientists use on a daily basis: Python and Jupyter Notebook. Our aim is to make the process of creating ML-related evidence in ACs more effective. Results from the application of the framework, documented through notebooks, can be integrated into existing AC tools. We illustrate the application of the framework on an example excerpt concerned with the quality of the test data.

Creating a Safety Assurance Case for an ML Satellite-Based Wildfire Detection and Alert System

Nov 08, 2022

Abstract: Wildfires are a common problem in many areas of the world with often catastrophic consequences. A number of systems have been created to provide early warnings of wildfires, including those that use satellite data to detect fires. The increased availability of small satellites, such as CubeSats, allows the wildfire detection response time to be reduced by deploying constellations of multiple satellites over regions of interest. By using machine learned components on-board the satellites, constraints which limit the amount of data that can be processed and sent back to ground stations can be overcome. There are hazards associated with wildfire alert systems, such as failing to detect the presence of a wildfire, or detecting a wildfire in the incorrect location. It is therefore necessary to be able to create a safety assurance case for the wildfire alert ML component that demonstrates it is sufficiently safe for use. This paper describes in detail how a safety assurance case for an ML wildfire alert system is created. This represents the first fully developed safety case for an ML component containing explicit argument and evidence as to the safety of the machine learning.

Analysing Ultra-Wide Band Positioning for Geofencing in a Safety Assurance Context

Mar 11, 2022

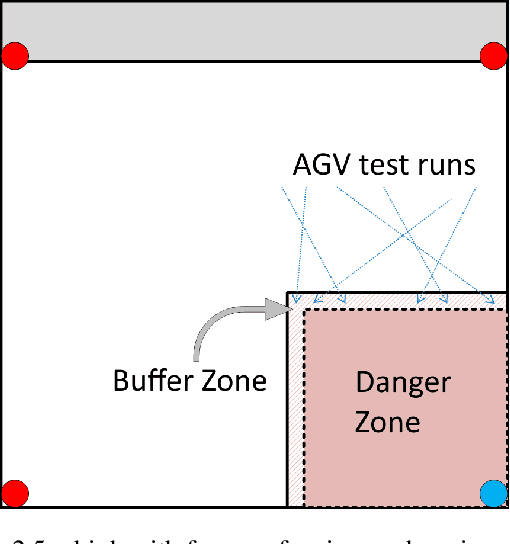

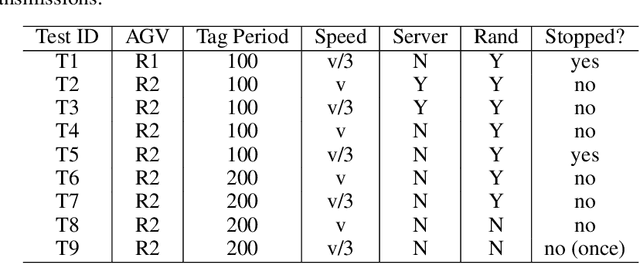

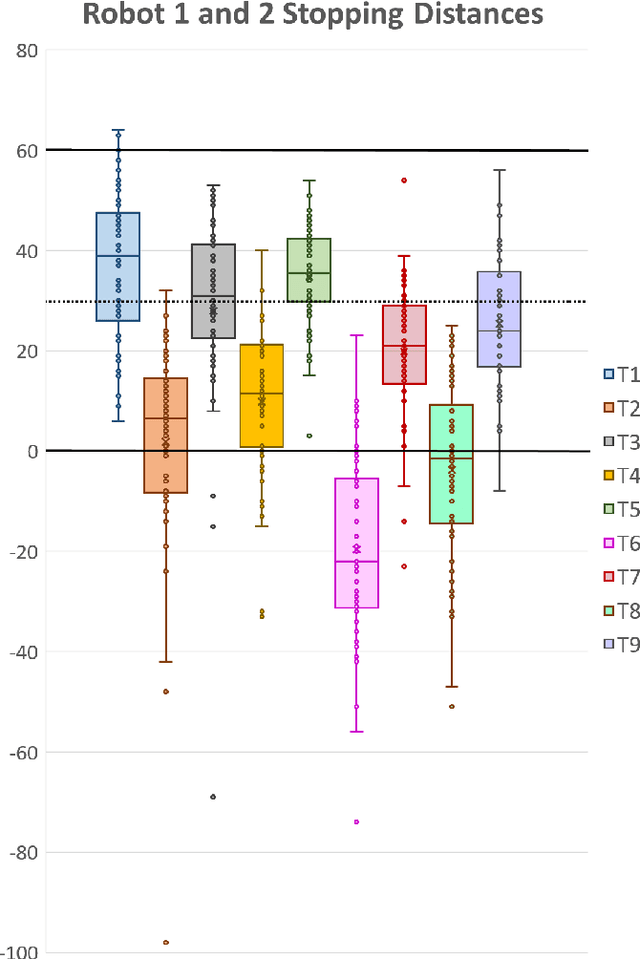

Abstract: There is a desire to move towards more flexible and automated factories. To enable this, we need to assure the safety of these dynamic factories. This safety assurance must be achieved in a manner that does not unnecessarily constrain the systems and thus negate the benefits of flexibility and automation. We previously developed a modular safety assurance approach, using safety contracts, as a way to achieve this. In this case study we show how this approach can be applied to Autonomous Guided Vehicles (AGV) operating as part of a dynamic factory and why it is necessary. We empirically evaluate commercial, indoor fog/edge localisation technology to provide geofencing for hazardous areas in a laboratory. The experiments determine how factors such as AGV speeds, tag transmission timings, control software and AGV capabilities affect the ability of the AGV to stop outside the hazardous areas. We describe how this approach could be used to create a safety case for the AGV operation.

Guidance on the Assurance of Machine Learning in Autonomous Systems

Feb 02, 2021

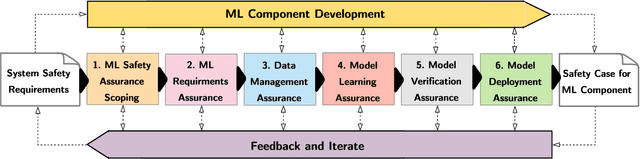

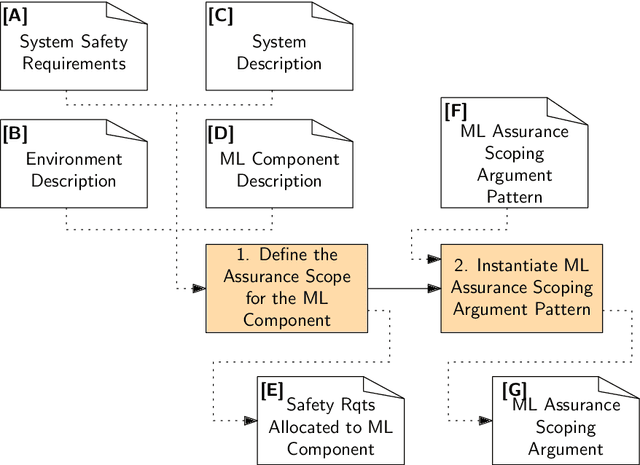

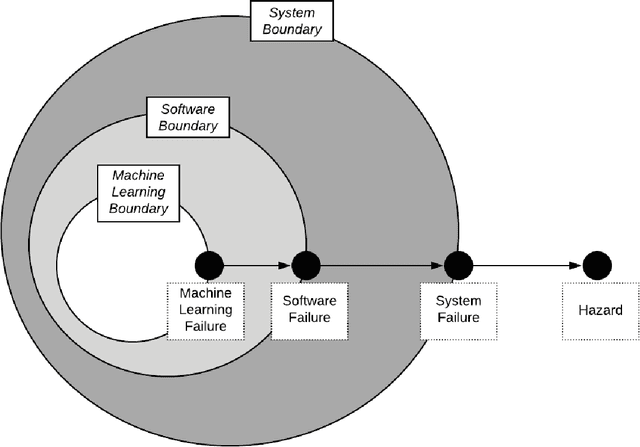

Abstract: Machine Learning (ML) is now used in a range of systems with results that are reported to exceed, under certain conditions, human performance. Many of these systems, in domains such as healthcare, automotive and manufacturing, exhibit high degrees of autonomy and are safety critical. Establishing justified confidence in ML forms a core part of the safety case for these systems. In this document we introduce a methodology for the Assurance of Machine Learning for use in Autonomous Systems (AMLAS). AMLAS comprises a set of safety case patterns and a process for (1) systematically integrating safety assurance into the development of ML components and (2) generating the evidence base for explicitly justifying the acceptable safety of these components when integrated into autonomous system applications.