Radu Calinescu

University of York

Symbolic Runtime Verification and Adaptive Decision-Making for Robot-Assisted Dressing

Apr 22, 2025Abstract:We present a control framework for robot-assisted dressing that augments low-level hazard response with runtime monitoring and formal verification. A parametric discrete-time Markov chain (pDTMC) models the dressing process, while Bayesian inference dynamically updates this pDTMC's transition probabilities based on sensory and user feedback. Safety constraints from hazard analysis are expressed in probabilistic computation tree logic, and symbolically verified using a probabilistic model checker. We evaluate reachability, cost, and reward trade-offs for garment-snag mitigation and escalation, enabling real-time adaptation. Our approach provides a formal yet lightweight foundation for safety-aware, explainable robotic assistance.

Safe Reinforcement Learning in Black-Box Environments via Adaptive Shielding

May 28, 2024Abstract:Empowering safe exploration of reinforcement learning (RL) agents during training is a critical impediment towards deploying RL agents in many real-world scenarios. Training RL agents in unknown, black-box environments poses an even greater safety risk when prior knowledge of the domain/task is unavailable. We introduce ADVICE (Adaptive Shielding with a Contrastive Autoencoder), a novel post-shielding technique that distinguishes safe and unsafe features of state-action pairs during training, thus protecting the RL agent from executing actions that yield potentially hazardous outcomes. Our comprehensive experimental evaluation against state-of-the-art safe RL exploration techniques demonstrates how ADVICE can significantly reduce safety violations during training while maintaining a competitive outcome reward.

Out-of-distribution Object Detection through Bayesian Uncertainty Estimation

Oct 29, 2023

Abstract:The superior performance of object detectors is often established under the condition that the test samples are in the same distribution as the training data. However, in many practical applications, out-of-distribution (OOD) instances are inevitable and usually lead to uncertainty in the results. In this paper, we propose a novel, intuitive, and scalable probabilistic object detection method for OOD detection. Unlike other uncertainty-modeling methods that either require huge computational costs to infer the weight distributions or rely on model training through synthetic outlier data, our method is able to distinguish between in-distribution (ID) data and OOD data via weight parameter sampling from proposed Gaussian distributions based on pre-trained networks. We demonstrate that our Bayesian object detector can achieve satisfactory OOD identification performance by reducing the FPR95 score by up to 8.19% and increasing the AUROC score by up to 13.94% when trained on BDD100k and VOC datasets as the ID datasets and evaluated on COCO2017 dataset as the OOD dataset.

Robust Uncertainty Quantification using Conformalised Monte Carlo Prediction

Aug 18, 2023

Abstract:Deploying deep learning models in safety-critical applications remains a very challenging task, mandating the provision of assurances for the dependable operation of these models. Uncertainty quantification (UQ) methods estimate the model's confidence per prediction, informing decision-making by considering the effect of randomness and model misspecification. Despite the advances of state-of-the-art UQ methods, they are computationally expensive or produce conservative prediction sets/intervals. We introduce MC-CP, a novel hybrid UQ method that combines a new adaptive Monte Carlo (MC) dropout method with conformal prediction (CP). MC-CP adaptively modulates the traditional MC dropout at runtime to save memory and computation resources, enabling predictions to be consumed by CP, yielding robust prediction sets/intervals. Throughout comprehensive experiments, we show that MC-CP delivers significant improvements over advanced UQ methods, like MC dropout, RAPS and CQR, both in classification and regression benchmarks. MC-CP can be easily added to existing models, making its deployment simple.

Bayesian Learning for the Robust Verification of Autonomous Robots

Mar 15, 2023Abstract:We develop a novel Bayesian learning framework that enables the runtime verification of autonomous robots performing critical missions in uncertain environments. Our framework exploits prior knowledge and observations of the verified robotic system to learn expected ranges of values for the occurrence rates of its events. We support both events observed regularly during system operation, and singular events such as catastrophic failures or the completion of difficult one-off tasks. Furthermore, we use the learnt event-rate ranges to assemble interval continuous-time Markov models, and we apply quantitative verification to these models to compute expected intervals of variation for key system properties. These intervals reflect the uncertainty intrinsic to many real-world systems, enabling the robust verification of their quantitative properties under parametric uncertainty. We apply the proposed framework to the case study of verification of an autonomous robotic mission for underwater infrastructure inspection and repair.

Closed-loop Analysis of Vision-based Autonomous Systems: A Case Study

Feb 06, 2023Abstract:Deep neural networks (DNNs) are increasingly used in safety-critical autonomous systems as perception components processing high-dimensional image data. Formal analysis of these systems is particularly challenging due to the complexity of the perception DNNs, the sensors (cameras), and the environment conditions. We present a case study applying formal probabilistic analysis techniques to an experimental autonomous system that guides airplanes on taxiways using a perception DNN. We address the above challenges by replacing the camera and the network with a compact probabilistic abstraction built from the confusion matrices computed for the DNN on a representative image data set. We also show how to leverage local, DNN-specific analyses as run-time guards to increase the safety of the overall system. Our findings are applicable to other autonomous systems that use complex DNNs for perception.

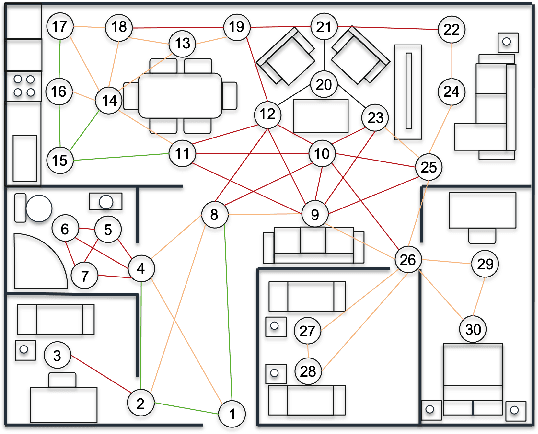

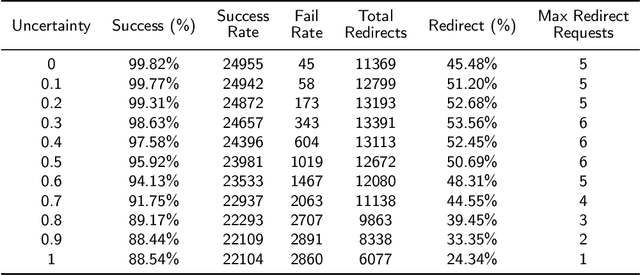

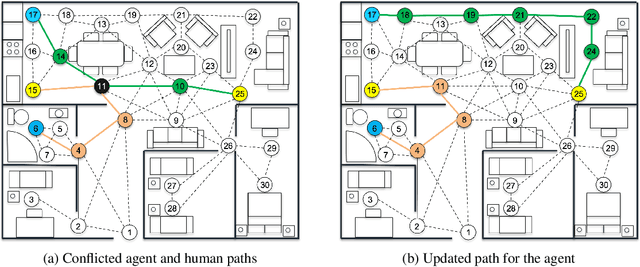

Towards Adaptive Planning of Assistive-care Robot Tasks

Sep 28, 2022

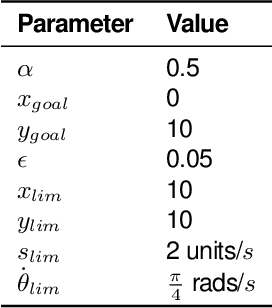

Abstract:This 'research preview' paper introduces an adaptive path planning framework for robotic mission execution in assistive-care applications. The framework provides a graph-based environment modelling approach, with dynamic path finding performed using Dijkstra's algorithm. A predictive module that uses probabilistic model checking is applied to estimate the human's movement through the environment, allowing run-time re-planning of the robot's path. We illustrate the use of the framework for a simulated assistive-care case study in which a mobile robot navigates through the environment and monitors an end user with mild physical or cognitive impairments.

* In Proceedings FMAS2022 ASYDE2022, arXiv:2209.13181

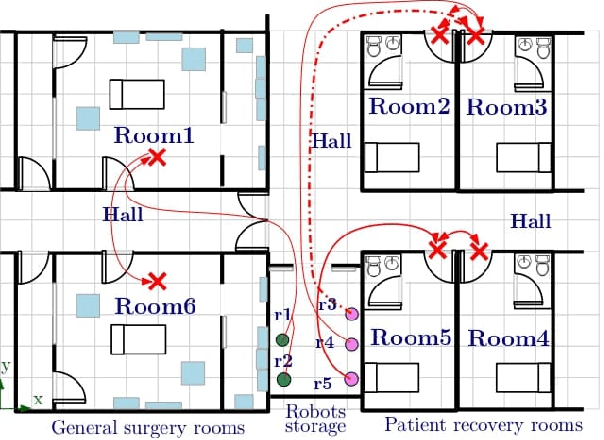

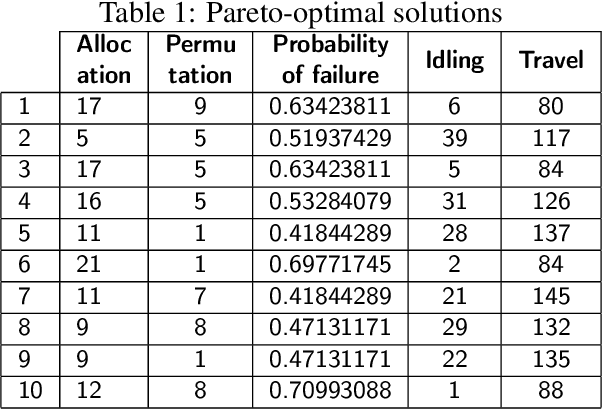

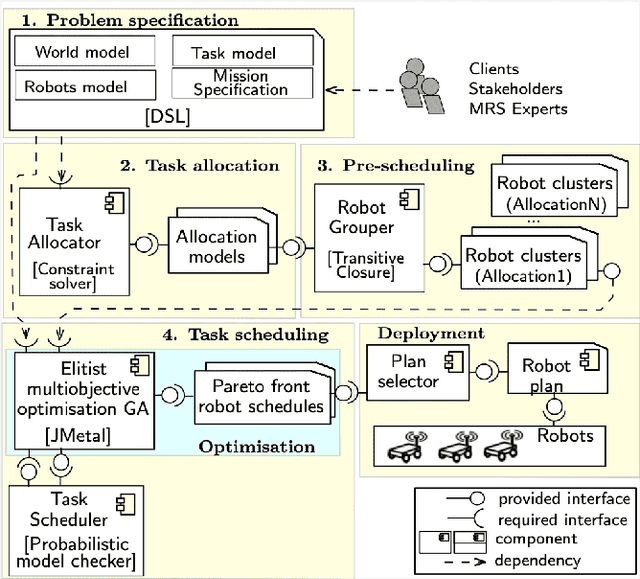

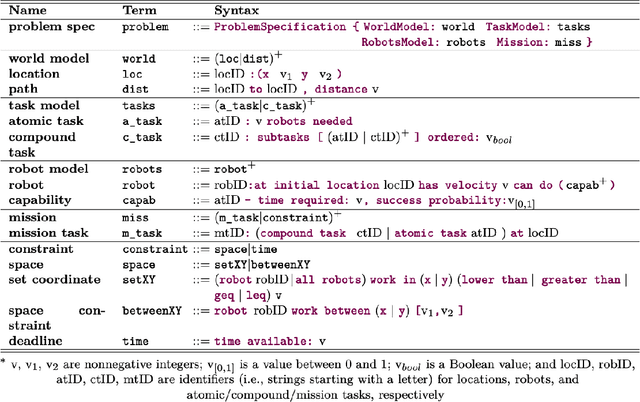

Scheduling of Missions with Constrained Tasks for Heterogeneous Robot Systems

Sep 28, 2022

Abstract:We present a formal tasK AllocatioN and scheduling apprOAch for multi-robot missions (KANOA). KANOA supports two important types of task constraints: task ordering, which requires the execution of several tasks in a specified order; and joint tasks, which indicates tasks that must be performed by more than one robot. To mitigate the complexity of robotic mission planning, KANOA handles the allocation of the mission tasks to robots, and the scheduling of the allocated tasks separately. To that end, the task allocation problem is formalised in first-order logic and resolved using the Alloy model analyzer, and the task scheduling problem is encoded as a Markov decision process and resolved using the PRISM probabilistic model checker. We illustrate the application of KANOA through a case study in which a heterogeneous robotic team is assigned a hospital maintenance mission.

* In Proceedings FMAS2022 ASYDE2022, arXiv:2209.13181

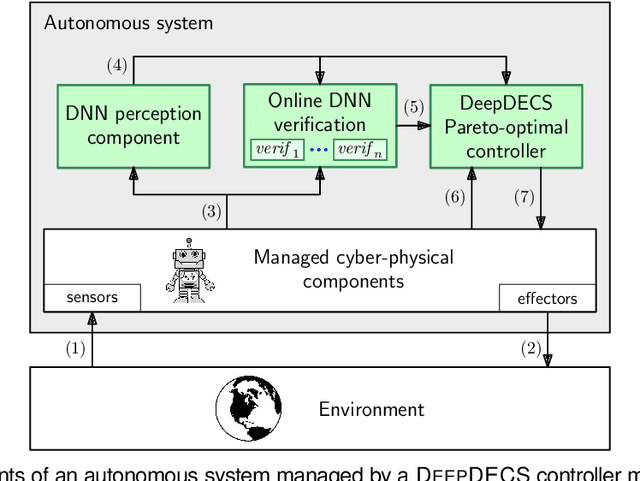

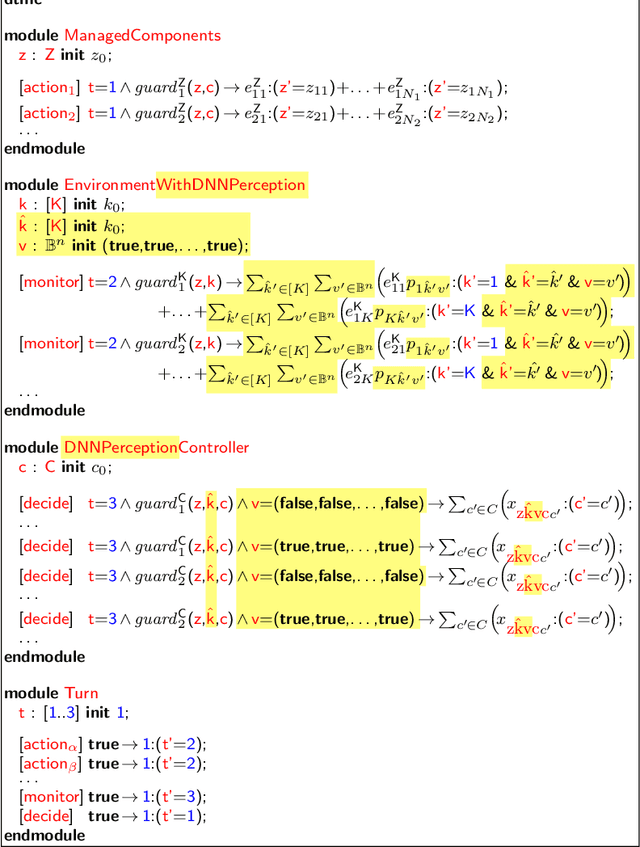

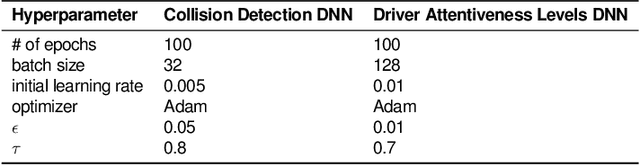

Discrete-Event Controller Synthesis for Autonomous Systems with Deep-Learning Perception Components

Feb 07, 2022

Abstract:We present DEEPDECS, a new method for the synthesis of correct-by-construction discrete-event controllers for autonomous systems that use deep neural network (DNN) classifiers for the perception step of their decision-making processes. Despite major advances in deep learning in recent years, providing safety guarantees for these systems remains very challenging. Our controller synthesis method addresses this challenge by integrating DNN verification with the synthesis of verified Markov models. The synthesised models correspond to discrete-event controllers guaranteed to satisfy the safety, dependability and performance requirements of the autonomous system, and to be Pareto optimal with respect to a set of optimisation criteria. We use the method in simulation to synthesise controllers for mobile-robot collision avoidance, and for maintaining driver attentiveness in shared-control autonomous driving.

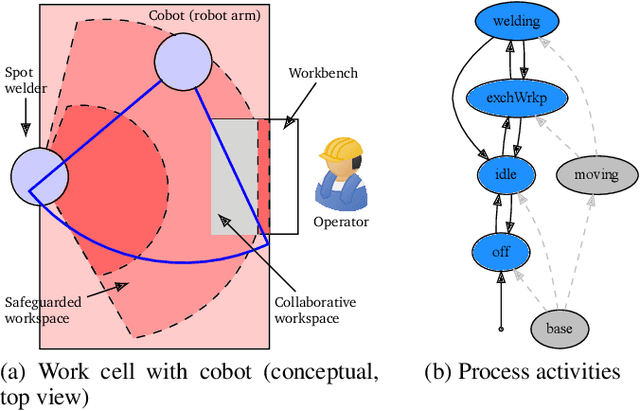

Verified Synthesis of Optimal Safety Controllers for Human-Robot Collaboration

Jun 11, 2021

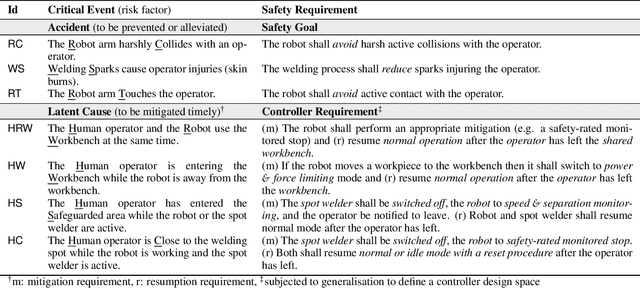

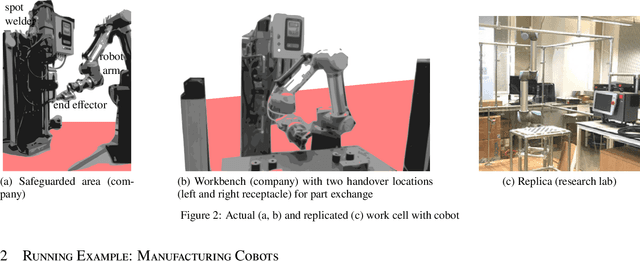

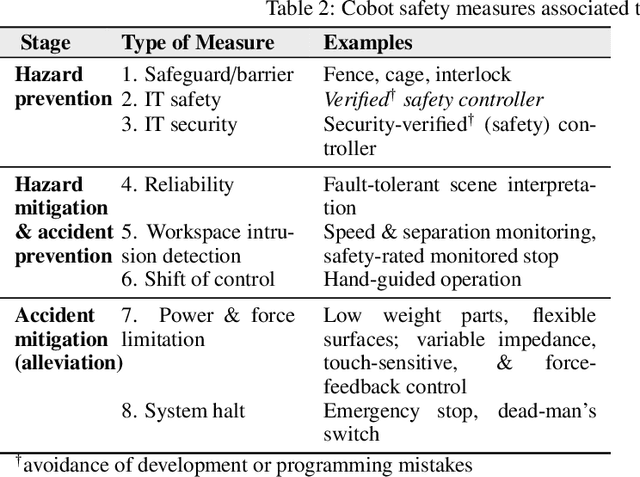

Abstract:We present a tool-supported approach for the synthesis, verification and validation of the control software responsible for the safety of the human-robot interaction in manufacturing processes that use collaborative robots. In human-robot collaboration, software-based safety controllers are used to improve operational safety, e.g., by triggering shutdown mechanisms or emergency stops to avoid accidents. Complex robotic tasks and increasingly close human-robot interaction pose new challenges to controller developers and certification authorities. Key among these challenges is the need to assure the correctness of safety controllers under explicit (and preferably weak) assumptions. Our controller synthesis, verification and validation approach is informed by the process, risk analysis, and relevant safety regulations for the target application. Controllers are selected from a design space of feasible controllers according to a set of optimality criteria, are formally verified against correctness criteria, and are translated into executable code and validated in a digital twin. The resulting controller can detect the occurrence of hazards, move the process into a safe state, and, in certain circumstances, return the process to an operational state from which it can resume its original task. We show the effectiveness of our software engineering approach through a case study involving the development of a safety controller for a manufacturing work cell equipped with a collaborative robot.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge