Quan Nguyen

Q-ShiftDP: A Differentially Private Parameter-Shift Rule for Quantum Machine Learning

Feb 03, 2026

Abstract:Quantum Machine Learning (QML) promises significant computational advantages, but preserving training data privacy remains challenging. Classical approaches like differentially private stochastic gradient descent (DP-SGD) add noise to gradients but fail to exploit the unique properties of quantum gradient estimation. In this work, we introduce the Differentially Private Parameter-Shift Rule (Q-ShiftDP), the first privacy mechanism tailored to QML. By leveraging the inherent boundedness and stochasticity of quantum gradients computed via the parameter-shift rule, Q-ShiftDP enables tighter sensitivity analysis and reduces noise requirements. We combine carefully calibrated Gaussian noise with intrinsic quantum noise to provide formal privacy and utility guarantees, and show that harnessing quantum noise further improves the privacy-utility trade-off. Experiments on benchmark datasets demonstrate that Q-ShiftDP consistently outperforms classical DP methods in QML.
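As a rough illustration of the ingredients this abstract names, the sketch below computes a parameter-shift gradient for a toy one-qubit expectation, estimates it from a finite number of shots, and adds Gaussian noise. It is not the Q-ShiftDP mechanism itself: the toy circuit (`expectation`), the shot sampler, and the noise scale `sigma` are assumptions made for illustration, and `sigma` is not calibrated to any privacy budget.

```python
import numpy as np

def expectation(theta):
    # Toy stand-in for a circuit: <Z> after RY(theta) applied to |0> is cos(theta).
    return np.cos(theta)

def sampled_expectation(theta, shots, rng):
    # Finite-shot estimate: each shot yields +1 with probability (1 + cos(theta)) / 2.
    p_plus = (1.0 + np.cos(theta)) / 2.0
    n_plus = rng.binomial(shots, p_plus)
    return (2.0 * n_plus - shots) / shots  # always lies in [-1, 1]

def parameter_shift_grad(f, theta, shift=np.pi / 2):
    # Parameter-shift rule for a gate generated by a Pauli operator.
    return (f(theta + shift) - f(theta - shift)) / 2.0

def noisy_grad(f, theta, sigma, rng):
    # Gaussian noise added on top of the gradient estimate (sigma is a placeholder).
    return parameter_shift_grad(f, theta) + rng.normal(0.0, sigma)

rng = np.random.default_rng(0)
theta = 0.3
exact = parameter_shift_grad(expectation, theta)  # equals -sin(theta)
shot_based = parameter_shift_grad(
    lambda t: sampled_expectation(t, shots=1000, rng=rng), theta
)
private = noisy_grad(expectation, theta, sigma=0.1, rng=rng)
```

Because each expectation lies in [-1, 1], the shifted difference divided by two is also bounded by 1 in magnitude, which is the kind of built-in bound a sensitivity analysis can start from.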

Counterfactual Explanations on Robust Perceptual Geodesics

Jan 26, 2026

Abstract:Latent-space optimization methods for counterfactual explanations - framed as minimal semantic perturbations that change model predictions - inherit the ambiguity of Wachter et al.'s objective: the choice of distance metric dictates whether perturbations are meaningful or adversarial. Existing approaches adopt flat or misaligned geometries, leading to off-manifold artifacts, semantic drift, or adversarial collapse. We introduce Perceptual Counterfactual Geodesics (PCG), a method that constructs counterfactuals by tracing geodesics under a perceptually Riemannian metric induced from robust vision features. This geometry aligns with human perception and penalizes brittle directions, enabling smooth, on-manifold, semantically valid transitions. Experiments on three vision datasets show that PCG outperforms baselines and reveals failure modes hidden under standard metrics.

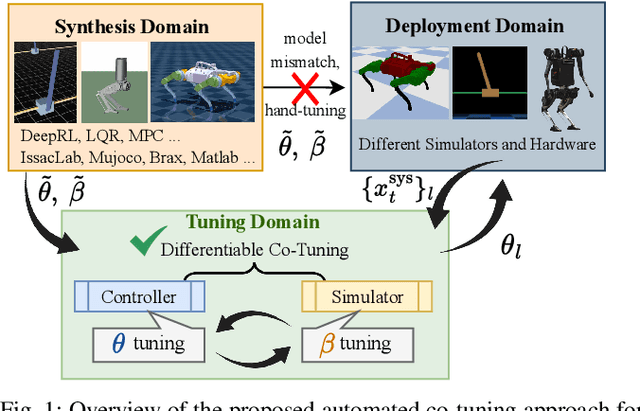

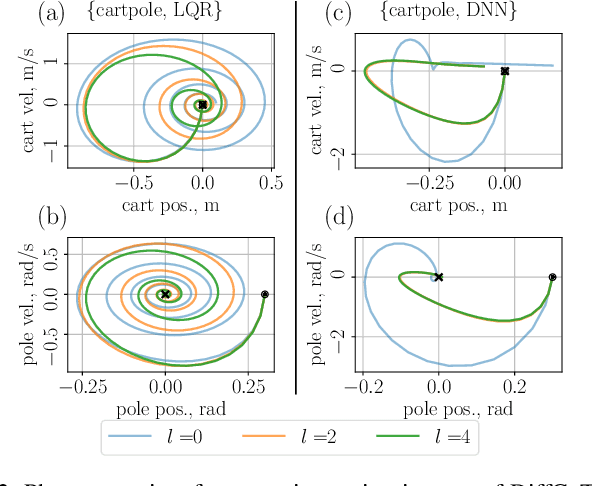

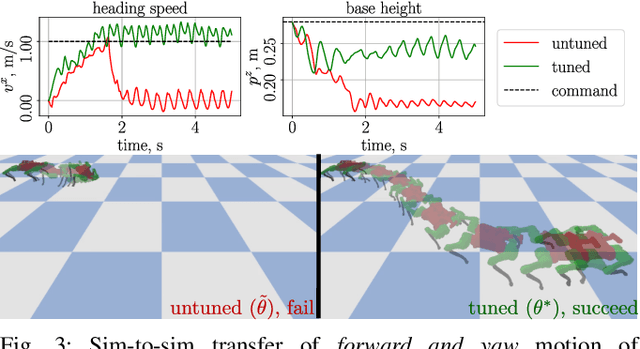

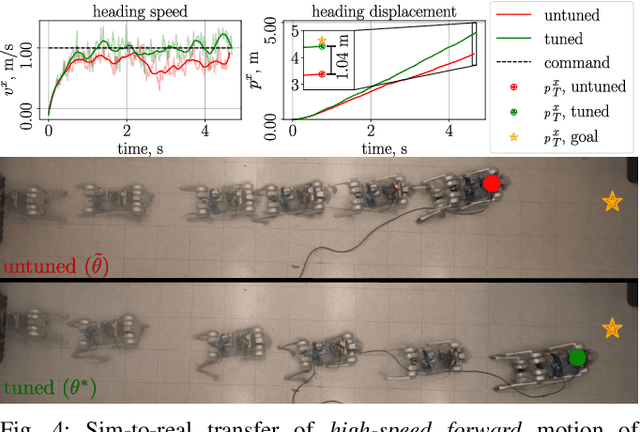

DiffCoTune: Differentiable Co-Tuning for Cross-domain Robot Control

May 29, 2025

Abstract:The deployment of robot controllers is hindered by modeling discrepancies due to necessary simplifications for computational tractability or inaccuracies in data-generating simulators. Such discrepancies typically require ad-hoc tuning to meet the desired performance, thereby ensuring successful transfer to a target domain. We propose a framework for automated, gradient-based tuning to enhance performance in the deployment domain by leveraging differentiable simulators. Our method collects rollouts in an iterative manner to co-tune the simulator and controller parameters, enabling systematic transfer within a few trials in the deployment domain. Specifically, we formulate multi-step objectives for tuning and employ alternating optimization to effectively adapt the controller to the deployment domain. The scalability of our framework is demonstrated by co-tuning model-based and learning-based controllers of arbitrary complexity for tasks ranging from low-dimensional cart-pole stabilization to high-dimensional quadruped and biped tracking, showing performance improvements across different deployment domains.

Reduce Computational Cost In Deep Reinforcement Learning Via Randomized Policy Learning

May 25, 2025

Abstract:Recent advancements in reinforcement learning (RL) have leveraged neural networks to achieve state-of-the-art performance across various control tasks. However, these successes often come at the cost of significant computational resources, as training deep neural networks requires substantial time and data. In this paper, we introduce an actor-critic algorithm that utilizes randomized neural networks to drastically reduce computational costs while maintaining strong performance. Despite its simple architecture, our method effectively solves a range of control problems, including the locomotion control of a highly dynamic 12-motor quadruped robot, and achieves results comparable to leading algorithms such as Proximal Policy Optimization (PPO). Notably, our approach does not outperform other algorithms in terms of sample efficiency but rather in terms of wall-clock training time. That is, although our algorithm requires more timesteps to converge to an optimal policy, the actual time required for training turns out to be lower.
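The general idea of a randomized neural network can be sketched as follows: the hidden layer is drawn at random and frozen, and only the linear output layer is fitted. This is not the paper's actor-critic algorithm; the toy regression target, the `tanh` features, the layer sizes, and the ridge term are all assumptions chosen for illustration.

```python
import numpy as np

def make_random_features(in_dim, hidden_dim, rng):
    # Hidden weights are sampled once and never trained.
    W = rng.normal(size=(in_dim, hidden_dim))
    b = rng.normal(size=hidden_dim)

    def features(x):
        return np.tanh(x @ W + b)

    return features

def fit_output_layer(phi, y, ridge=1e-3):
    # Closed-form ridge regression for the only trainable layer.
    A = phi.T @ phi + ridge * np.eye(phi.shape[1])
    return np.linalg.solve(A, phi.T @ y)

rng = np.random.default_rng(0)
features = make_random_features(in_dim=2, hidden_dim=64, rng=rng)

# Toy stand-in for value targets over a 2-D state space.
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = np.sin(X[:, 0]) + X[:, 1] ** 2

w = fit_output_layer(features(X), y)
mse = float(np.mean((features(X) @ w - y) ** 2))
```

Since no gradients flow through the hidden layer, each update reduces to a small linear solve, which is one plausible source of the wall-clock savings the abstract describes.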

One-Layer Transformers are Provably Optimal for In-context Reasoning and Distributional Association Learning in Next-Token Prediction Tasks

May 21, 2025

Abstract:We study the approximation capabilities and on-convergence behaviors of one-layer transformers on the noiseless and noisy in-context reasoning of next-token prediction. Existing theoretical results focus on understanding the in-context reasoning behaviors either for the first gradient step or when the number of samples is infinite. Furthermore, neither convergence rates nor generalization abilities were known. Our work addresses these gaps by showing that there exists a class of one-layer transformers that are provably Bayes-optimal with both linear and ReLU attention. When trained with gradient descent, we show via a finite-sample analysis that the expected loss of these transformers converges at a linear rate to the Bayes risk. Moreover, we prove that the trained models generalize to unseen samples and exhibit learning behaviors that were empirically observed in previous works. Our theoretical findings are further supported by extensive empirical validations.

Multimodal Integrated Knowledge Transfer to Large Language Models through Preference Optimization with Biomedical Applications

May 09, 2025

Abstract:The scarcity of high-quality multimodal biomedical data limits the ability to effectively fine-tune pretrained Large Language Models (LLMs) for specialized biomedical tasks. To address this challenge, we introduce MINT (Multimodal Integrated kNowledge Transfer), a framework that aligns unimodal large decoder models with domain-specific decision patterns from multimodal biomedical data through preference optimization. While MINT supports different optimization techniques, we primarily implement it with the Odds Ratio Preference Optimization (ORPO) framework as its backbone. This strategy enables the aligned LLMs to perform predictive tasks using text-only or image-only inputs while retaining knowledge learnt from multimodal data. MINT leverages an upstream multimodal machine learning (MML) model trained on high-quality multimodal data to transfer domain-specific insights to downstream text-only or image-only LLMs. We demonstrate its effectiveness through two key applications: (1) Rare genetic disease prediction from texts, where MINT uses a multimodal encoder model, trained on facial photos and clinical notes, to generate a preference dataset for aligning a lightweight Llama 3.2-3B-Instruct. Despite relying on text input only, the MINT-derived model outperforms models trained with SFT, RAG, or DPO, and even outperforms Llama 3.1-405B-Instruct. (2) Tissue type classification using cell nucleus images, where MINT uses a vision-language foundation model as the preference generator, containing knowledge learnt from both text and histopathological images to align downstream image-only models. The resulting MINT-derived model significantly improves the performance of Llama 3.2-Vision-11B-Instruct on tissue type classification. In summary, MINT provides an effective strategy to align unimodal LLMs with high-quality multimodal expertise through preference optimization.

IGL-DT: Iterative Global-Local Feature Learning with Dual-Teacher Semantic Segmentation Framework under Limited Annotation Scheme

Apr 14, 2025

Abstract:Semi-Supervised Semantic Segmentation (SSSS) aims to improve segmentation accuracy by leveraging a small set of labeled images alongside a larger pool of unlabeled data. Recent advances primarily focus on pseudo-labeling, consistency regularization, and co-training strategies. However, existing methods struggle to balance global semantic representation with fine-grained local feature extraction. To address this challenge, we propose a novel tri-branch semi-supervised segmentation framework incorporating a dual-teacher strategy, named IGL-DT. Our approach employs SwinUnet for high-level semantic guidance through Global Context Learning and ResUnet for detailed feature refinement via Local Regional Learning. Additionally, a Discrepancy Learning mechanism mitigates over-reliance on a single teacher, promoting adaptive feature learning. Extensive experiments on benchmark datasets demonstrate that our method outperforms state-of-the-art approaches, achieving superior segmentation performance across various data regimes.

HDC: Hierarchical Distillation for Multi-level Noisy Consistency in Semi-Supervised Fetal Ultrasound Segmentation

Apr 14, 2025

Abstract:Transvaginal ultrasound is a critical imaging modality for evaluating cervical anatomy and detecting physiological changes. However, accurate segmentation of cervical structures remains challenging due to low contrast, shadow artifacts, and fuzzy boundaries. While convolutional neural networks (CNNs) have shown promising results in medical image segmentation, their performance is often limited by the need for large-scale annotated datasets - an impractical requirement in clinical ultrasound imaging. Semi-supervised learning (SSL) offers a compelling solution by leveraging unlabeled data, but existing teacher-student frameworks often suffer from confirmation bias and high computational costs. We propose HDC, a novel semi-supervised segmentation framework that integrates Hierarchical Distillation and Consistency learning within a multi-level noise mean-teacher framework. Unlike conventional approaches that rely solely on pseudo-labeling, we introduce a hierarchical distillation mechanism that guides feature-level learning via two novel objectives: (1) Correlation Guidance Loss to align feature representations between the teacher and main student branch, and (2) Mutual Information Loss to stabilize representations between the main and noisy student branches. Our framework reduces model complexity while improving generalization. Extensive experiments on two fetal ultrasound datasets, FUGC and PSFH, demonstrate that our method achieves competitive performance with significantly lower computational overhead than existing multi-teacher models.
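For readers unfamiliar with the mean-teacher setup this abstract builds on, the sketch below shows the standard exponential-moving-average (EMA) teacher update. It illustrates only that generic building block, not HDC's distillation losses; the parameter dictionaries and the `alpha` value are assumptions made for illustration.

```python
import numpy as np

def ema_update(teacher, student, alpha=0.99):
    # The teacher tracks a smoothed copy of the student: no gradients reach it.
    return {
        name: alpha * teacher[name] + (1.0 - alpha) * student[name]
        for name in teacher
    }

# Hypothetical one-tensor "models" standing in for real network weights.
teacher = {"w": np.zeros(3)}
student = {"w": np.ones(3)}
teacher = ema_update(teacher, student, alpha=0.9)
```

A larger `alpha` makes the teacher change more slowly, which is the usual lever for trading stability against responsiveness.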

A Lightweight Moment Retrieval System with Global Re-Ranking and Robust Adaptive Bidirectional Temporal Search

Apr 12, 2025

Abstract:The exponential growth of digital video content has posed critical challenges in moment-level video retrieval, where existing methodologies struggle to efficiently localize specific segments within an expansive video corpus. Current retrieval systems are constrained by computational inefficiencies, temporal context limitations, and the intrinsic complexity of navigating video content. In this paper, we address these limitations through a novel Interactive Video Corpus Moment Retrieval framework that integrates a SuperGlobal Reranking mechanism and Adaptive Bidirectional Temporal Search (ABTS), strategically optimizing query similarity, temporal stability, and computational resources. By preprocessing a large corpus of videos using a keyframe extraction model and deduplication technique through image hashing, our approach provides a scalable solution that significantly reduces storage requirements while maintaining high localization precision across diverse video repositories.
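Keyframe deduplication through image hashing can be sketched with a simple average hash. The abstract does not say which hash the system uses, so the hashing scheme, the `hash_size`, and the `max_distance` threshold below are assumptions; frames are taken to be 2-D grayscale arrays.

```python
import numpy as np

def average_hash(gray, hash_size=8):
    # Downsample to hash_size x hash_size by block averaging, then threshold at the mean.
    h, w = gray.shape
    h2, w2 = h - h % hash_size, w - w % hash_size  # crop so blocks divide evenly
    blocks = gray[:h2, :w2].reshape(
        hash_size, h2 // hash_size, hash_size, w2 // hash_size
    )
    small = blocks.mean(axis=(1, 3))
    return (small > small.mean()).flatten()

def hamming(a, b):
    # Number of differing bits between two hashes.
    return int(np.count_nonzero(a != b))

def deduplicate(frames, max_distance=4):
    # Keep a frame only if its hash is far from every hash kept so far.
    kept, hashes = [], []
    for index, frame in enumerate(frames):
        frame_hash = average_hash(frame)
        if all(hamming(frame_hash, seen) > max_distance for seen in hashes):
            kept.append(index)
            hashes.append(frame_hash)
    return kept

# Toy frames: a horizontal gradient, a near-copy of it, and a vertical gradient.
frame_a = np.tile(np.arange(64, dtype=float), (64, 1))
frame_a_copy = frame_a + 0.01
frame_b = frame_a.T
kept_indices = deduplicate([frame_a, frame_a_copy, frame_b])
```

Only the hashes and the kept keyframes need to be stored, which is how this kind of preprocessing can cut storage for a large corpus.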

Cycle Training with Semi-Supervised Domain Adaptation: Bridging Accuracy and Efficiency for Real-Time Mobile Scene Detection

Apr 12, 2025

Abstract:Nowadays, smartphones are ubiquitous, and almost everyone owns one. At the same time, the rapid development of AI has spurred extensive research on applying deep learning techniques to image classification. However, due to the limited resources available on mobile devices, significant challenges remain in balancing accuracy with computational efficiency. In this paper, we propose a novel training framework called Cycle Training, which adopts a three-stage training process that alternates between exploration and stabilization phases to optimize model performance. Additionally, we incorporate Semi-Supervised Domain Adaptation (SSDA) to leverage the power of large models and unlabeled data, thereby effectively expanding the training dataset. Comprehensive experiments on the CamSSD dataset for mobile scene detection demonstrate that our framework not only significantly improves classification accuracy but also ensures real-time inference efficiency. Specifically, our method achieves 94.00% Top-1 accuracy and 99.17% Top-3 accuracy and runs inference in just 1.61 ms on a CPU, demonstrating its suitability for real-world mobile deployment.
