RobustBench: a standardized adversarial robustness benchmark

Oct 19, 2020 · Francesco Croce, Maksym Andriushchenko, Vikash Sehwag, Nicolas Flammarion, Mung Chiang, Prateek Mittal, Matthias Hein

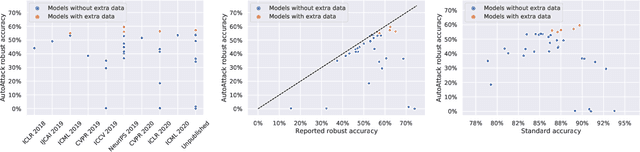

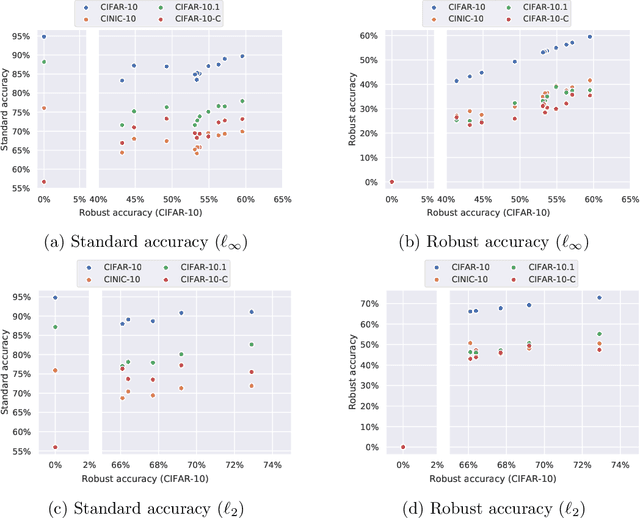

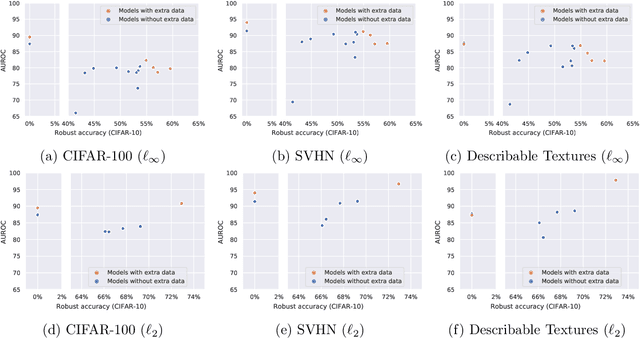

Evaluation of adversarial robustness is often error-prone, leading to overestimation of the true robustness of models. While adaptive attacks designed for a particular defense are a way out of this, there are only approximate guidelines on how to perform them. Moreover, adaptive evaluations are highly customized for particular models, which makes it difficult to compare different defenses. Our goal is to establish a standardized benchmark of adversarial robustness that reflects the robustness of the considered models as accurately as possible within a reasonable computational budget. This requires imposing some restrictions on the admitted models to rule out defenses that only make gradient-based attacks ineffective without improving actual robustness. We evaluate the robustness of models for our benchmark with AutoAttack, an ensemble of white- and black-box attacks that was recently shown in a large-scale study to improve almost all robustness evaluations compared to the original publications. Our leaderboard, hosted at http://robustbench.github.io/, aims to reflect the current state of the art on a set of well-defined tasks in $\ell_\infty$- and $\ell_2$-threat models, with possible extensions in the future. Additionally, we open-source the library http://github.com/RobustBench/robustbench, which provides unified access to state-of-the-art robust models to facilitate their downstream applications. Finally, based on the collected models, we analyze general trends in $\ell_p$-robustness and its impact on other tasks such as robustness to various distribution shifts and out-of-distribution detection.

A Critical Evaluation of Open-World Machine Learning

Jul 08, 2020 · Liwei Song, Vikash Sehwag, Arjun Nitin Bhagoji, Prateek Mittal

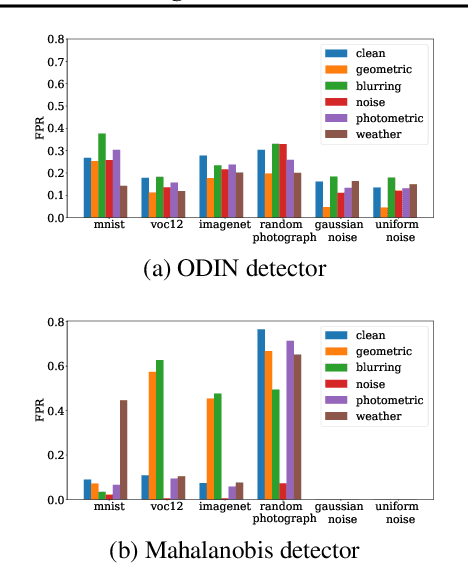

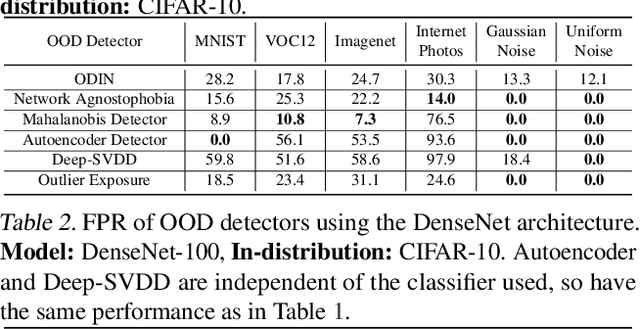

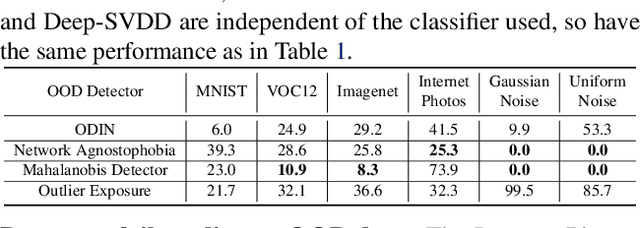

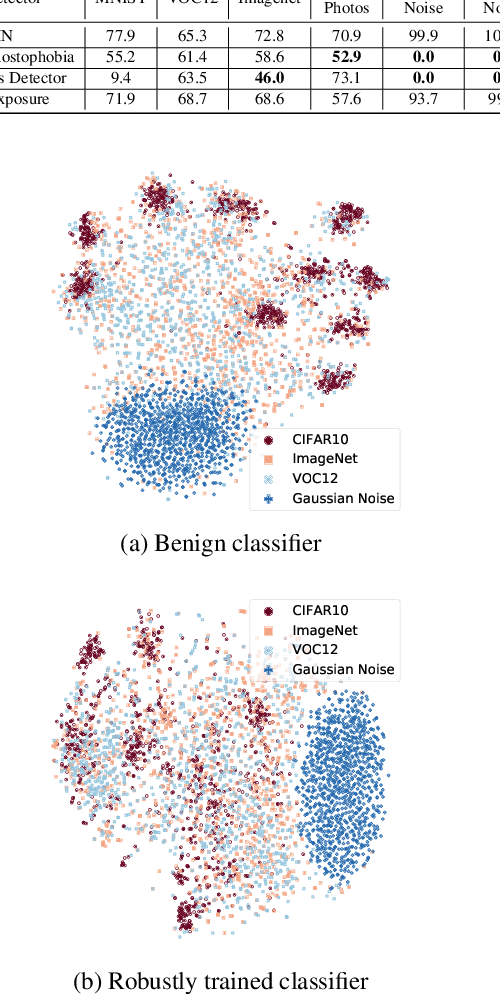

Open-world machine learning (ML) combines closed-world models trained on in-distribution data with out-of-distribution (OOD) detectors, which aim to detect and reject OOD inputs. Previous works on open-world ML systems usually fail to test their reliability under diverse, and possibly adversarial, conditions. Therefore, in this paper, we seek to understand how resilient state-of-the-art open-world ML systems are to changes in system components. With our evaluation across 6 OOD detectors, we find that the choice of in-distribution data, model architecture, and OOD data has a strong impact on OOD detection performance, inducing false positive rates in excess of $70\%$. We further show that OOD inputs with 22 unintentional corruptions or adversarial perturbations render open-world ML systems unusable, with false positive rates of up to $100\%$. To increase the resilience of open-world ML, we combine robust classifiers with OOD detection techniques and uncover a new trade-off between OOD detection and robustness.
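
The false positive rates quoted above depend on how the detector's threshold is set. The sketch below shows one common convention, which is an assumption here and not stated in the abstract: fix the threshold so that a chosen fraction of in-distribution inputs is accepted, then report the fraction of OOD inputs that is also accepted. The function name and the score convention (higher means more in-distribution) are hypothetical.

```python
import math


def fpr_at_tpr(in_scores, ood_scores, tpr=0.95):
    """Fraction of OOD inputs accepted when at least `tpr` of the
    in-distribution inputs are accepted.

    Assumed convention: a higher score means "more in-distribution",
    and an input is accepted when its score is >= the threshold.
    """
    ranked = sorted(in_scores, reverse=True)
    # Smallest number of in-distribution inputs that must be accepted.
    k = max(1, math.ceil(tpr * len(ranked)))
    threshold = ranked[k - 1]
    accepted_ood = sum(1 for s in ood_scores if s >= threshold)
    return accepted_ood / len(ood_scores)


if __name__ == "__main__":
    # Toy scores, purely illustrative.
    in_dist = [0.9, 0.8, 0.7, 0.6]
    ood = [0.95, 0.65, 0.3, 0.1]
    print(fpr_at_tpr(in_dist, ood, tpr=0.75))
```

A rate of 1.0 from this function would mean that every OOD input passes the detector at the chosen operating point, which is the failure mode the abstract describes as rendering the system unusable.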

Time for a Background Check! Uncovering the impact of Background Features on Deep Neural Networks

Jun 24, 2020 · Vikash Sehwag, Rajvardhan Oak, Mung Chiang, Prateek Mittal

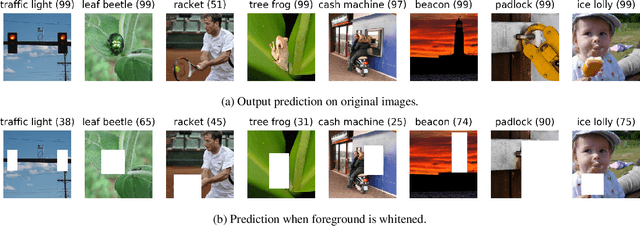

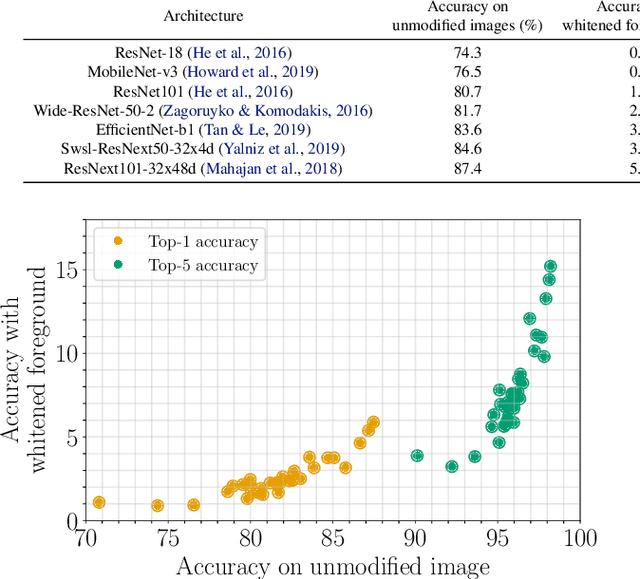

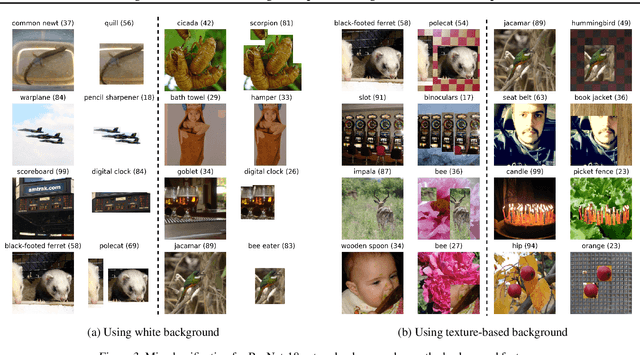

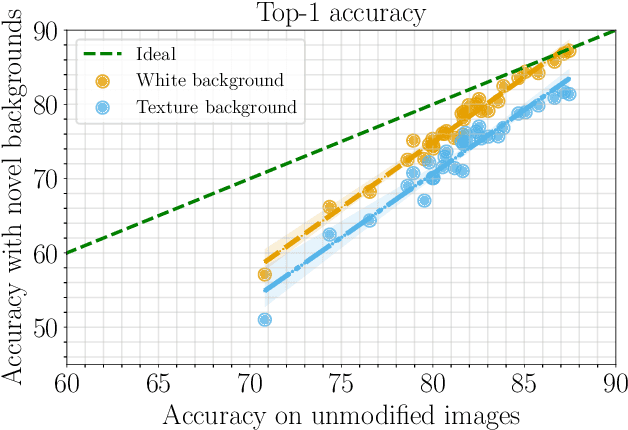

With increasing expressive power, deep neural networks have significantly improved the state-of-the-art on image classification datasets, such as ImageNet. In this paper, we investigate to what extent the increasing performance of deep neural networks is impacted by background features. In particular, we focus on background invariance, i.e., accuracy unaffected by switching background features, and background influence, i.e., the predictive power of the background features themselves when the foreground is masked. We perform experiments with 32 different neural networks, ranging from small-size networks to large-scale networks trained with up to one billion images. Our investigations reveal that increasing the expressive power of DNNs leads to a higher influence of background features, while simultaneously increasing their ability to make the correct prediction when background features are removed or replaced with a randomly selected texture-based background.

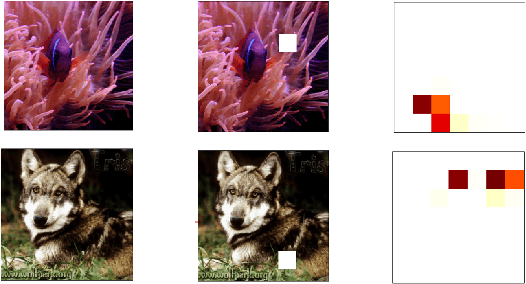

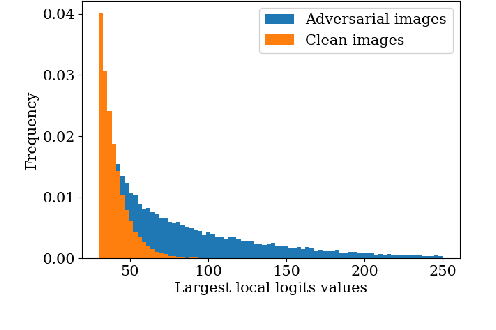

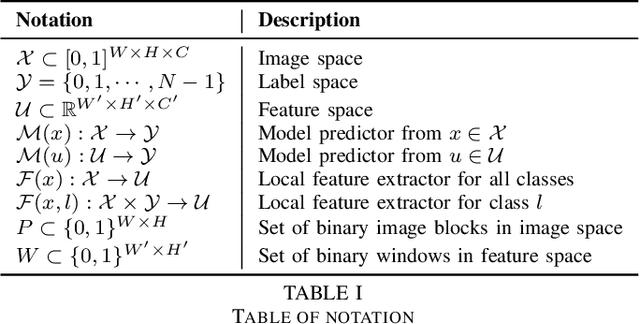

PatchGuard: Provable Defense against Adversarial Patches Using Masks on Small Receptive Fields

Jun 08, 2020 · Chong Xiang, Arjun Nitin Bhagoji, Vikash Sehwag, Prateek Mittal

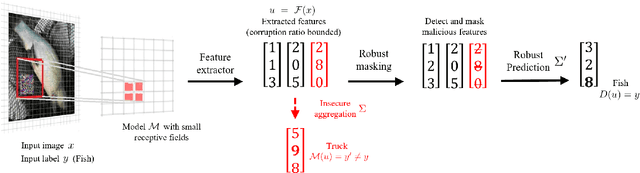

Localized adversarial patches aim to induce misclassification in machine learning models by arbitrarily modifying pixels within a restricted region of an image. Such attacks can be realized in the physical world by attaching the adversarial patch to the object to be misclassified. In this paper, we propose a general defense framework called PatchGuard that can achieve both high clean accuracy and provable robustness against localized adversarial patches. The cornerstone of PatchGuard is to use convolutional networks with small receptive fields that impose a bound on the number of features corrupted by an adversarial patch. Given a bound on the number of corrupted features, the problem of designing an adversarial patch defense reduces to that of designing a secure feature aggregation mechanism. Towards this end, we present our robust masking defense that robustly detects and masks corrupted features to recover the correct prediction. Our defense achieves state-of-the-art provable robust accuracy on ImageNette (a 10-class subset of ImageNet), ImageNet, and CIFAR-10 datasets. Against the strongest untargeted white-box adaptive attacker, we achieve 92.4% clean accuracy and 85.2% provable robust accuracy on 10-class ImageNette images against an adversarial patch consisting of 2% image pixels, 51.9% clean accuracy and 14.4% provable robust accuracy on 1000-class ImageNet images against a 2% pixel patch, and 80.0% clean accuracy and 62.2% provable accuracy on CIFAR-10 images against a 2.4% pixel patch.
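
The claim that small receptive fields bound the number of corrupted features can be checked with a short counting argument. The sketch below assumes a 1-D layout in which feature `i` sees pixels `i * stride` through `i * stride + rf - 1`. A patch of width `patch` then overlaps at most `ceil((patch + rf - 1) / stride)` features per dimension. The function names and the numbers in the demo are illustrative assumptions, not values taken from the abstract.

```python
import math


def max_corrupted_features_1d(patch, rf, stride):
    """Upper bound on features per dimension whose receptive field can
    overlap a patch of width `patch` (counting argument, see lead-in)."""
    return math.ceil((patch + rf - 1) / stride)


def count_corrupted_1d(patch_start, patch, rf, stride, n_features):
    """Brute-force count of features whose receptive field overlaps the
    patch occupying pixels [patch_start, patch_start + patch - 1]."""
    patch_end = patch_start + patch - 1
    count = 0
    for i in range(n_features):
        lo = i * stride          # first pixel seen by feature i
        hi = lo + rf - 1         # last pixel seen by feature i
        if lo <= patch_end and hi >= patch_start:
            count += 1
    return count


if __name__ == "__main__":
    # Hypothetical setting: 224-pixel side, receptive field 17, stride 8,
    # patch width 32.
    image, rf, stride, patch = 224, 17, 8, 32
    n_features = (image - rf) // stride + 1
    bound = max_corrupted_features_1d(patch, rf, stride)
    worst = max(
        count_corrupted_1d(a, patch, rf, stride, n_features)
        for a in range(image - patch + 1)
    )
    print(bound, worst)
```

In two dimensions the same bound applies per axis, so the corrupted features fit inside a square window whose side is this bound. That is what reduces the defense to a feature aggregation problem over a window of known size.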

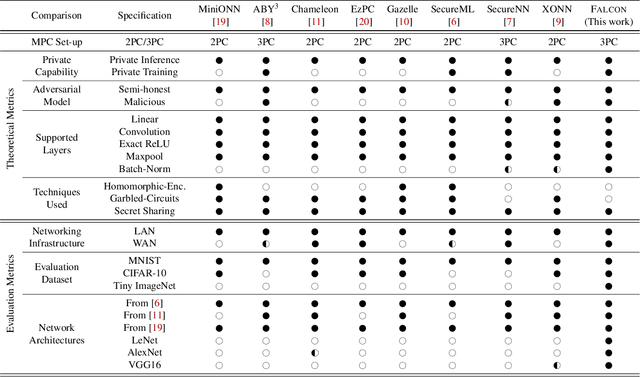

FALCON: Honest-Majority Maliciously Secure Framework for Private Deep Learning

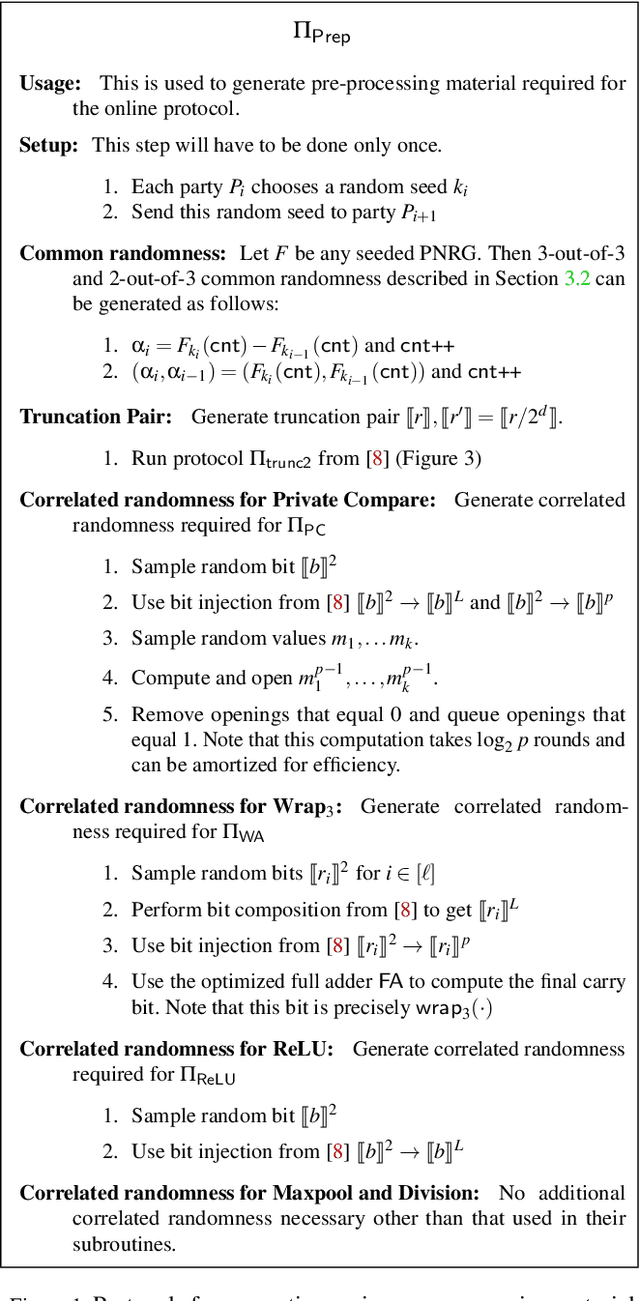

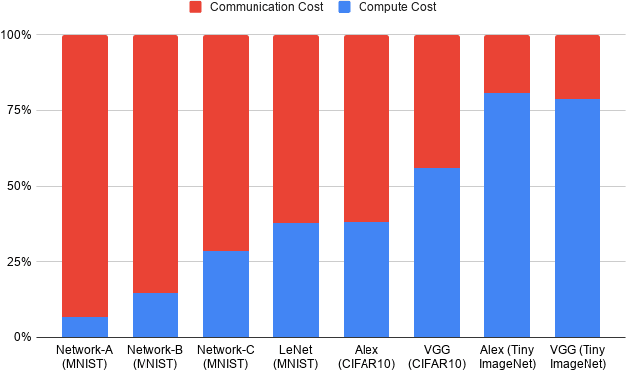

Apr 05, 2020 · Sameer Wagh, Shruti Tople, Fabrice Benhamouda, Eyal Kushilevitz, Prateek Mittal, Tal Rabin

This paper aims to enable training and inference of neural networks in a manner that protects the privacy of sensitive data. We propose FALCON, an end-to-end 3-party protocol for fast and secure computation of deep learning algorithms on large networks. FALCON presents three main advantages. First, it is highly expressive. To the best of our knowledge, it is the first secure framework to support high-capacity networks with over a hundred million parameters, such as VGG16, as well as the first to support batch normalization, a critical component of deep learning that enables training of complex network architectures such as AlexNet. Next, FALCON guarantees security with abort against malicious adversaries, assuming an honest majority. It ensures that the protocol always completes with correct output for honest participants or aborts when it detects the presence of a malicious adversary. Lastly, FALCON presents new theoretical insights for protocol design that make it highly efficient and allow it to outperform existing secure deep learning solutions. Compared to prior art for private inference, we are about 8x faster than SecureNN (PETS '19) on average and comparable to ABY3 (CCS '18). We are about 16-200x more communication efficient than either of these. For private training, we are about 6x faster than SecureNN, 4.4x faster than ABY3, and about 2-60x more communication efficient. This is the first paper to show, via experiments in the WAN setting, that for multi-party machine learning computations over large networks and datasets, compute operations, rather than communication, dominate the overall latency.

Systematic Evaluation of Privacy Risks of Machine Learning Models

Mar 24, 2020 · Liwei Song, Prateek Mittal

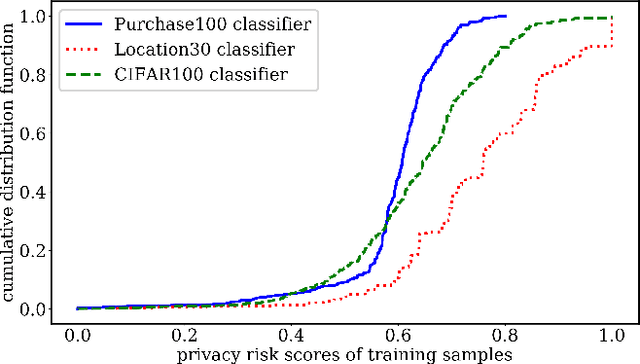

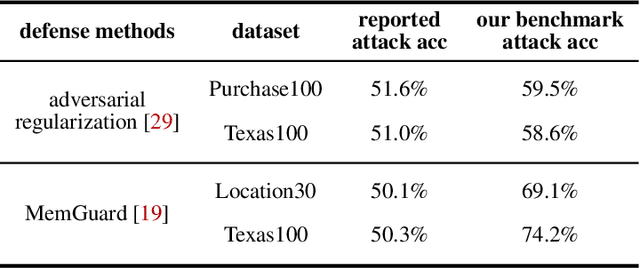

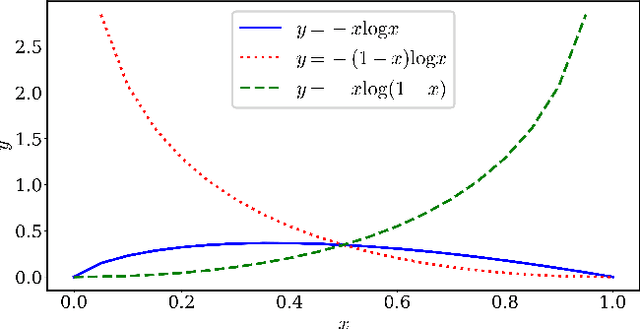

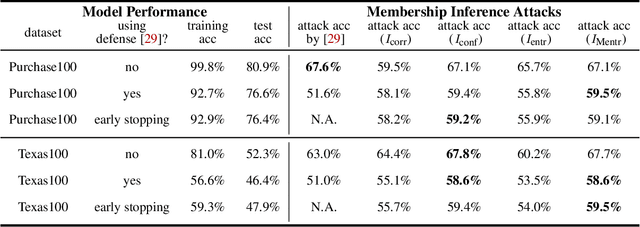

Machine learning models are prone to memorizing sensitive data, making them vulnerable to membership inference attacks in which an adversary aims to guess if an input sample was used to train the model. In this paper, we show that prior work on membership inference attacks may severely underestimate the privacy risks by relying solely on training custom neural network classifiers to perform attacks and focusing only on the aggregate results over data samples, such as the attack accuracy. To overcome these limitations, we first propose to benchmark membership inference privacy risks by improving existing non-neural network based inference attacks and proposing a new inference attack method based on a modification of prediction entropy. We also propose benchmarks for defense mechanisms by accounting for adaptive adversaries with knowledge of the defense and also accounting for the trade-off between model accuracy and privacy risks. Using our benchmark attacks, we demonstrate that existing defense approaches are not as effective as previously reported. Next, we introduce a new approach for fine-grained privacy analysis by formulating and deriving a new metric called the privacy risk score. Our privacy risk score metric measures an individual sample's likelihood of being a training member, which allows an adversary to perform membership inference attacks with high confidence. We experimentally validate the effectiveness of the privacy risk score metric and demonstrate that the distribution of the privacy risk score across individual samples is heterogeneous. Finally, we perform an in-depth investigation for understanding why certain samples have high privacy risk scores, including correlations with model sensitivity, generalization error, and feature embeddings. Our work emphasizes the importance of a systematic and rigorous evaluation of privacy risks of machine learning models.
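
To illustrate what "a modification of prediction entropy" can look like, the sketch below assumes the modified entropy takes the form $-(1 - p_y)\log p_y - \sum_{i \neq y} p_i \log(1 - p_i)$, where $p$ is the predicted probability vector and $y$ the true label. This form is an assumption, since the abstract does not spell it out. Unlike plain entropy, it is near zero only when the model is confident in the correct label and grows large when the model is confident in a wrong one. The function name and the `eps` clipping are hypothetical choices.

```python
import math


def modified_entropy(probs, label, eps=1e-12):
    """Label-aware entropy score (assumed form, see lead-in).

    Low values: confident and correct prediction.
    High values: uncertain, or confident in a wrong label.
    """
    def safe_log(v):
        # Clip to avoid log(0) for one-hot predictions.
        return math.log(max(v, eps))

    p_y = probs[label]
    score = -(1.0 - p_y) * safe_log(p_y)
    for i, p in enumerate(probs):
        if i != label:
            score -= p * safe_log(1.0 - p)
    return score


def infer_membership(probs, label, threshold):
    """Guess 'training member' when the score falls below a threshold.
    The threshold is a free parameter in this sketch."""
    return modified_entropy(probs, label) <= threshold


if __name__ == "__main__":
    print(modified_entropy([0.9, 0.05, 0.05], 0))  # confident, correct
    print(modified_entropy([0.4, 0.3, 0.3], 0))    # uncertain
    print(modified_entropy([0.05, 0.9, 0.05], 0))  # confident, wrong
```

A threshold attack of this kind needs no attack classifier to be trained, which is why it serves as a non-neural-network baseline.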

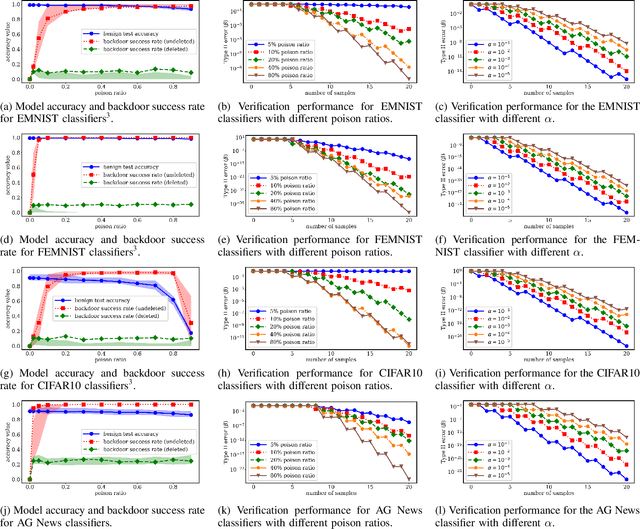

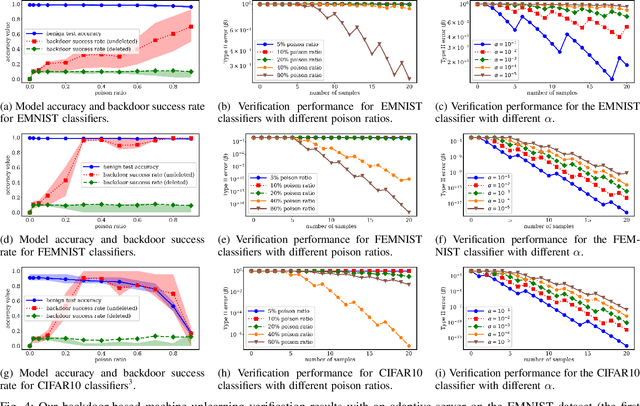

Towards Probabilistic Verification of Machine Unlearning

Mar 09, 2020 · David Marco Sommer, Liwei Song, Sameer Wagh, Prateek Mittal

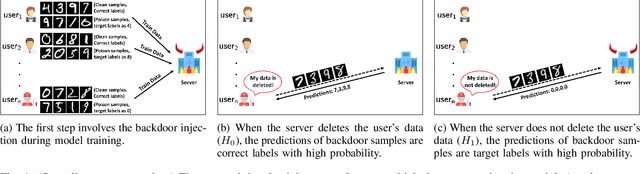

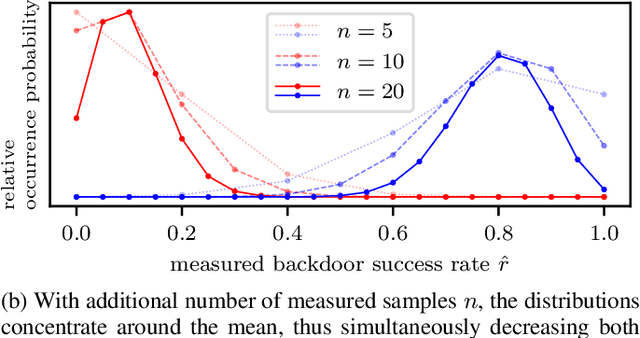

The right to be forgotten, also known as the right to erasure, is the right of individuals to have their data erased from an entity storing it. The General Data Protection Regulation in the European Union legally solidified the status of this long-held notion. As a consequence, there is a growing need for the development of mechanisms whereby users can verify if service providers comply with their deletion requests. In this work, we take the first step in proposing a formal framework to study the design of such verification mechanisms for data deletion requests -- also known as machine unlearning -- in the context of systems that provide machine learning as a service. We propose a backdoor-based verification mechanism and demonstrate its effectiveness in certifying data deletion with high confidence using the above framework. Our mechanism makes a novel use of backdoor attacks in ML as a basis for quantitatively inferring machine unlearning. In our mechanism, each user poisons part of their training data by injecting a user-specific backdoor trigger associated with a user-specific target label. The prediction of target labels on test samples with the backdoor trigger is then used as an indication of the user's data being used to train the ML model. We formalize the verification process as a hypothesis testing problem, and provide theoretical guarantees on the statistical power of the hypothesis test. We experimentally demonstrate that our approach has minimal effect on the machine learning service but provides high-confidence verification of unlearning. We show that with a $30\%$ poison ratio and merely $20$ test queries, our verification mechanism has both false positive and false negative ratios below $10^{-5}$. Furthermore, we also show the effectiveness of our approach by testing it against an adaptive adversary that uses a state-of-the-art backdoor defense method.
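
The hypothesis-testing view can be made concrete with binomial tails. The sketch below assumes each backdoored test query independently returns the target label with probability `p_kept` if the user's data is still in the model and `q_deleted` if it was removed. The user declares "not deleted" when at least `threshold` of `n` queries hit the target label. The probabilities and the threshold are hypothetical values chosen for illustration, and which of the two errors is called the false positive is a convention fixed here, not taken from the abstract.

```python
import math


def binom_tail_ge(n, prob, k):
    """P(X >= k) for X ~ Binomial(n, prob)."""
    return sum(
        math.comb(n, j) * prob**j * (1.0 - prob) ** (n - j)
        for j in range(max(k, 0), n + 1)
    )


def verification_errors(n, p_kept, q_deleted, threshold):
    """Error rates of the rule: declare 'not deleted' iff
    successes >= threshold.

    false_positive: data was deleted, but the rule says 'not deleted'.
    false_negative: data was kept, but the rule says 'deleted'.
    """
    false_positive = binom_tail_ge(n, q_deleted, threshold)
    false_negative = 1.0 - binom_tail_ge(n, p_kept, threshold)
    return false_positive, false_negative


if __name__ == "__main__":
    # Hypothetical backdoor success rates; 20 queries as in the abstract.
    fp, fn = verification_errors(n=20, p_kept=0.95, q_deleted=0.05, threshold=10)
    print(f"false positive: {fp:.3e}, false negative: {fn:.3e}")
```

Because both error rates shrink exponentially in the number of queries whenever the two success probabilities are well separated, a few tens of queries can be enough for high-confidence verification.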

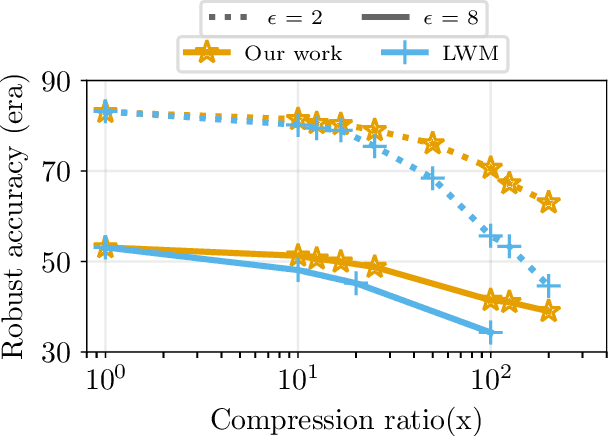

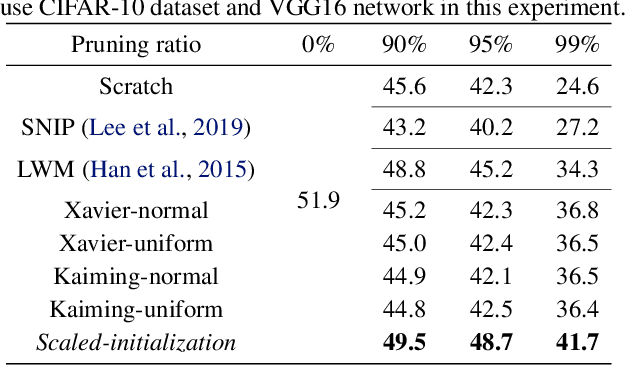

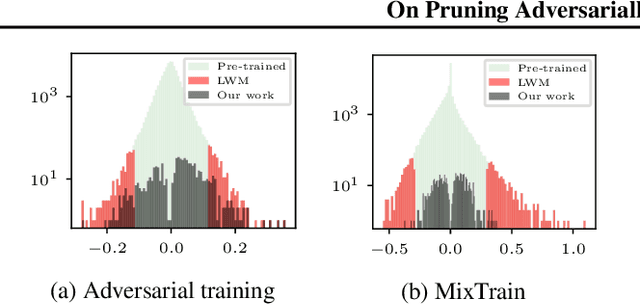

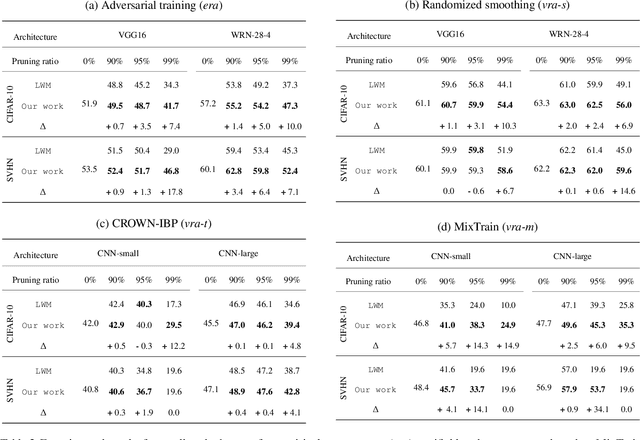

On Pruning Adversarially Robust Neural Networks

Feb 24, 2020 · Vikash Sehwag, Shiqi Wang, Prateek Mittal, Suman Jana

In safety-critical but computationally resource-constrained applications, deep learning faces two key challenges: lack of robustness against adversarial attacks and large neural network size (often millions of parameters). While the research community has extensively explored the use of robust training and network pruning independently to address one of these challenges, we show that integrating existing pruning techniques with multiple types of robust training techniques, including verifiably robust training, leads to poor robust accuracy even though such techniques can preserve high regular accuracy. We further demonstrate that making pruning techniques aware of the robust learning objective can lead to a large improvement in performance. We realize this insight by formulating the pruning objective as an empirical risk minimization problem, which is then solved using SGD. We demonstrate the success of the proposed pruning technique across the CIFAR-10, SVHN, and ImageNet datasets with four different robust training techniques: iterative adversarial training, randomized smoothing, MixTrain, and CROWN-IBP. Specifically, at a 99% connection pruning ratio, we achieve gains of up to 3.2, 10.0, and 17.8 percentage points in robust accuracy under state-of-the-art adversarial attacks for the ImageNet, CIFAR-10, and SVHN datasets, respectively. Our code and compressed networks are publicly available at https://github.com/inspire-group/compactness-robustness

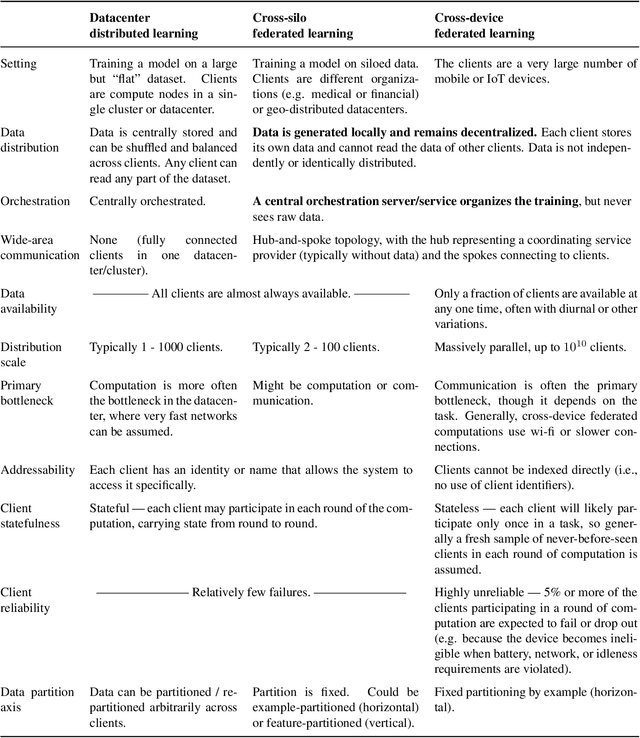

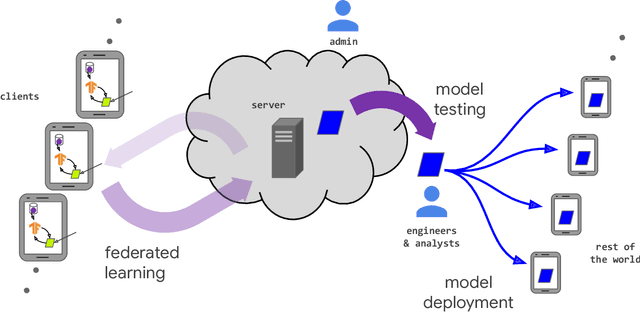

Advances and Open Problems in Federated Learning

Dec 10, 2019 · Peter Kairouz, H. Brendan McMahan, Brendan Avent, Aurélien Bellet, Mehdi Bennis, Arjun Nitin Bhagoji, Keith Bonawitz, Zachary Charles, Graham Cormode, Rachel Cummings, Rafael G. L. D'Oliveira, Salim El Rouayheb, David Evans, Josh Gardner, Zachary Garrett, Adrià Gascón, Badih Ghazi, Phillip B. Gibbons, Marco Gruteser, Zaid Harchaoui, Chaoyang He, Lie He, Zhouyuan Huo, Ben Hutchinson, Justin Hsu, Martin Jaggi, Tara Javidi, Gauri Joshi, Mikhail Khodak, Jakub Konečný, Aleksandra Korolova, Farinaz Koushanfar, Sanmi Koyejo, Tancrède Lepoint, Yang Liu, Prateek Mittal, Mehryar Mohri, Richard Nock, Ayfer Özgür, Rasmus Pagh, Mariana Raykova, Hang Qi, Daniel Ramage, Ramesh Raskar, Dawn Song, Weikang Song, Sebastian U. Stich, Ziteng Sun, Ananda Theertha Suresh, Florian Tramèr, Praneeth Vepakomma, Jianyu Wang, Li Xiong, Zheng Xu, Qiang Yang, Felix X. Yu, Han Yu, Sen Zhao

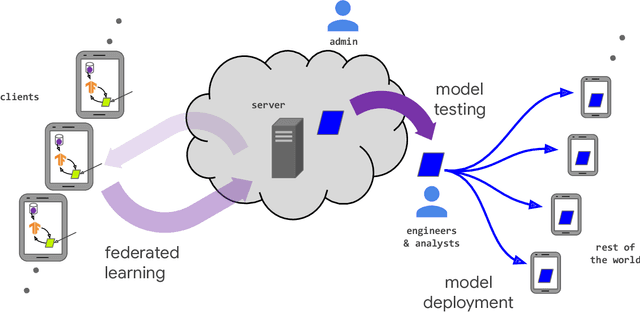

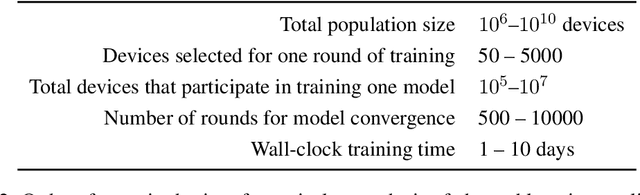

Federated learning (FL) is a machine learning setting where many clients (e.g. mobile devices or whole organizations) collaboratively train a model under the orchestration of a central server (e.g. service provider), while keeping the training data decentralized. FL embodies the principles of focused data collection and minimization, and can mitigate many of the systemic privacy risks and costs resulting from traditional, centralized machine learning and data science approaches. Motivated by the explosive growth in FL research, this paper discusses recent advances and presents an extensive collection of open problems and challenges.
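
The setting described above, in which clients train locally and a server orchestrates without seeing raw data, can be sketched with federated averaging. This choice is an assumption, since the abstract does not name a specific algorithm. The toy model is a single weight `w` fitted to `y = w * x` by gradient descent, and all function names and hyperparameters are hypothetical.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Client side: a few gradient steps on the client's own data.
    Only the updated weight leaves the client, never `data`."""
    for _ in range(epochs):
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w, client_datasets):
    """Server side: send `w` to every client, then average the returned
    weights, weighting each client by its number of samples."""
    total = sum(len(d) for d in client_datasets)
    return sum(len(d) * local_update(w, d) for d in client_datasets) / total


def train(client_datasets, rounds=20, w0=0.0):
    w = w0
    for _ in range(rounds):
        w = federated_round(w, client_datasets)
    return w


if __name__ == "__main__":
    # Two clients whose private data both follow y = 3x.
    clients = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(0.5, 1.5), (1.5, 4.5), (1.0, 3.0)],
    ]
    print(train(clients))
```

In this sketch the server only ever handles model weights, which is the data-minimization property the abstract emphasizes. Real deployments add client sampling, secure aggregation, and handling of non-identically distributed data, none of which is modeled here.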
