Michael Dohopolski

Medical Artificial Intelligence and Automation Laboratory and Department of Radiation Oncology, UT Southwestern Medical Center, Dallas TX 75235, USA

AI-Assisted Decision-Making for Clinical Assessment of Auto-Segmented Contour Quality

May 01, 2025

Abstract:Purpose: This study presents a Deep Learning (DL)-based quality assessment (QA) approach for evaluating auto-generated contours (auto-contours) in radiotherapy, with emphasis on Online Adaptive Radiotherapy (OART). Leveraging Bayesian Ordinal Classification (BOC) and calibrated uncertainty thresholds, the method enables confident QA predictions without relying on ground truth contours or extensive manual labeling. Methods: We developed a BOC model to classify auto-contour quality and quantify prediction uncertainty. A calibration step was used to optimize uncertainty thresholds that meet clinical accuracy needs. The method was validated under three data scenarios: no manual labels, limited labels, and extensive labels. For rectum contours in prostate cancer, we applied geometric surrogate labels when manual labels were absent, transfer learning when limited, and direct supervision when ample labels were available. Results: The BOC model delivered robust performance across all scenarios. Fine-tuning with just 30 manual labels and calibrating with 34 subjects yielded over 90% accuracy on test data. Using the calibrated threshold, over 93% of the auto-contours' qualities were accurately predicted in over 98% of cases, reducing unnecessary manual reviews and highlighting cases needing correction. Conclusion: The proposed QA model enhances contouring efficiency in OART by reducing manual workload and enabling fast, informed clinical decisions. Through uncertainty quantification, it ensures safer, more reliable radiotherapy workflows.
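The calibration step described in the abstract can be illustrated with a minimal sketch. The abstract does not say how the threshold is optimized, so the rule below (take the largest uncertainty cutoff at which the accepted predictions still reach a target accuracy) is an assumption, and the function name and toy numbers are hypothetical.

```python
def calibrate_uncertainty_threshold(uncertainties, is_correct, target_accuracy):
    """Pick the largest uncertainty cutoff whose accepted cases meet the target.

    A prediction is "accepted" when its uncertainty is <= the cutoff.
    Returns None when no cutoff reaches the target accuracy.
    This is an assumed rule for illustration, not the study's procedure.
    """
    if len(uncertainties) != len(is_correct):
        raise ValueError("inputs must have the same length")
    pairs = sorted(zip(uncertainties, is_correct))
    best_cutoff = None
    n_correct = 0
    for n_accepted, (u, ok) in enumerate(pairs, start=1):
        n_correct += int(ok)
        # Evaluate only at the last case of a run of tied uncertainties.
        if n_accepted < len(pairs) and pairs[n_accepted][0] == u:
            continue
        if n_correct / n_accepted >= target_accuracy:
            best_cutoff = u
    return best_cutoff


# Toy calibration set (made-up numbers, not data from the study).
calib_uncertainty = [0.05, 0.10, 0.20, 0.30, 0.40]
calib_correct = [True, True, True, False, True]
cutoff = calibrate_uncertainty_threshold(calib_uncertainty, calib_correct, 0.9)
```

In this sketch, predictions whose uncertainty exceeds `cutoff` would be routed to manual review rather than trusted automatically.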

TransAnaNet: Transformer-based Anatomy Change Prediction Network for Head and Neck Cancer Patient Radiotherapy

May 09, 2024

Abstract:Early identification of head and neck cancer (HNC) patients who would experience significant anatomical change during radiotherapy (RT) is important to optimize patient clinical benefit and treatment resources. This study aims to assess the feasibility of using a vision-transformer (ViT) based neural network to predict RT-induced anatomic change in HNC patients. We retrospectively included 121 HNC patients treated with definitive RT/CRT. We collected the planning CT (pCT), planned dose, CBCTs acquired at the initial treatment (CBCT01) and fraction 21 (CBCT21), and primary tumor volume (GTVp) and involved nodal volume (GTVn) delineated on both pCT and CBCTs for model construction and evaluation. A UNet-style ViT network was designed to learn spatial correspondence and contextual information from embedded CT, dose, CBCT01, GTVp, and GTVn image patches. The model estimated the deformation vector field between CBCT01 and CBCT21 as the prediction of anatomic change, and the deformed CBCT01 was used as the prediction of CBCT21. We also generated binary masks of GTVp, GTVn, and patient body for volumetric change evaluation. The predicted image from the proposed method yielded the best similarity to the real image (CBCT21) compared with pCT, CBCT01, and predicted CBCTs from other comparison models. The average MSE and SSIM between the normalized predicted CBCT and CBCT21 were 0.009 and 0.933, while the average Dice coefficients for the body, GTVp, and GTVn masks were 0.972, 0.792, and 0.821, respectively. The proposed method showed promising performance for predicting radiotherapy-induced anatomic change, which has the potential to assist in the decision-making of HNC adaptive RT.
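Two of the evaluation metrics named in the abstract, the Dice coefficient for binary masks and the mean squared error for normalized images, follow standard definitions. The sketch below works on flattened voxel lists; the function names and the toy values are illustrative assumptions, not the authors' evaluation code.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice = 2 * |A and B| / (|A| + |B|) for two flattened binary masks."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    a = [bool(v) for v in mask_a]
    b = [bool(v) for v in mask_b]
    intersection = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(a) + sum(b)
    # Convention assumed here: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def mean_squared_error(image_a, image_b):
    """Mean of squared voxel-wise differences between two flattened images."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of voxels")
    return sum((x - y) ** 2 for x, y in zip(image_a, image_b)) / len(image_a)


# Toy 4-voxel masks and 3-voxel images (made-up values).
dice = dice_coefficient([1, 1, 0, 0], [1, 0, 0, 0])
mse = mean_squared_error([0.0, 0.5, 1.0], [0.0, 0.5, 0.0])
```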

Deep Learning (DL)-based Automatic Segmentation of the Internal Pudendal Artery (IPA) for Reduction of Erectile Dysfunction in Definitive Radiotherapy of Localized Prostate Cancer

Feb 03, 2023

Abstract:Background and purpose: Radiation-induced erectile dysfunction (RiED) is commonly seen in prostate cancer patients. Clinical trials have been developed in multiple institutions to investigate whether dose-sparing to the internal pudendal arteries (IPA) will improve retention of sexual potency. The IPA is usually not considered a conventional organ-at-risk (OAR) due to segmentation difficulty. In this work, we propose a deep learning (DL)-based auto-segmentation model for the IPA that utilizes CT and MRI or CT alone as the input image modality to accommodate variation in clinical practice. Materials and methods: 86 patients with CT and MRI images and noisy IPA labels were recruited in this study. We split the data into 42/14/30 for model training, testing, and a clinical observer study, respectively. There were three major innovations in this model: 1) we designed an architecture with squeeze-and-excite blocks and modality attention for effective feature extraction and production of accurate segmentation, 2) a novel loss function was used for training the model effectively with noisy labels, and 3) a modality dropout strategy was used to make the model capable of segmentation in the absence of MRI. Results: The DSC, ASD, and HD95 values for the test dataset were 62.2%, 2.54 mm, and 7 mm, respectively. AI-segmented contours were dosimetrically equivalent to the expert physician's contours. The observer study showed that expert physicians scored AI contours (mean=3.7) higher than inexperienced physicians' contours (mean=3.1). When inexperienced physicians started with AI contours, the score improved to 3.7. Conclusion: The proposed model achieved good quality IPA contours to improve uniformity of segmentation and to facilitate introduction of standardized IPA segmentation into clinical trials and practice.
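Modality dropout, as named in the abstract, is commonly implemented by randomly blanking one input modality during training so the network learns to cope without it. The sketch below shows that generic idea under the assumption that the MRI channel is zero-filled; the abstract does not give the authors' exact scheme, and `apply_modality_dropout` and `drop_prob` are hypothetical names.

```python
import random


def apply_modality_dropout(ct_channel, mri_channel, drop_prob, rng):
    """Return [ct, mri] with the MRI channel zeroed with probability drop_prob.

    Zero-filling is an assumed convention; the CT channel is always kept
    so the model can still segment when MRI is unavailable.
    """
    if rng.random() < drop_prob:
        mri_channel = [0.0] * len(mri_channel)
    return [ct_channel, mri_channel]


rng = random.Random(0)  # seeded generator for reproducible training runs
ct = [0.2, 0.4, 0.6]    # toy flattened CT intensities
mri = [0.9, 0.8, 0.7]   # toy flattened MRI intensities
always_dropped = apply_modality_dropout(ct, mri, 1.0, rng)
never_dropped = apply_modality_dropout(ct, mri, 0.0, rng)
```

At inference time with CT only, the same zero-filled MRI channel would be supplied, matching what the network saw during training.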

Prior Guided Deep Difference Meta-Learner for Fast Adaptation to Stylized Segmentation

Nov 19, 2022

Abstract:When a pre-trained general auto-segmentation model is deployed at a new institution, a support framework in the proposed Prior-guided DDL network will learn the systematic difference between the model predictions and the final contours revised and approved by clinicians for an initial group of patients. The learned style feature differences are concatenated with the new patients' (query) features and then decoded to get the style-adapted segmentations. The model is independent of practice styles and anatomical structures. It meta-learns with simulated style differences and does not need to be exposed to any real clinical stylized structures during training. Once trained on the simulated data, it can be deployed for clinical use to adapt to new practice styles and new anatomical structures without further training. To show the proof of concept, we tested the Prior-guided DDL network on six different practice style variations for three different anatomical structures. Pre-trained segmentation models were adapted from post-operative clinical target volume (CTV) segmentation to segment CTVstyle1, CTVstyle2, and CTVstyle3, from parotid gland segmentation to segment Parotidsuperficial, and from rectum segmentation to segment Rectumsuperior and Rectumposterior. The model performance was quantified with the Dice Similarity Coefficient (DSC). With adaptation based on only the first three patients, the average DSCs were improved from 78.6, 71.9, 63.0, 52.2, 46.3 and 69.6 to 84.4, 77.8, 73.0, 77.8, 70.5, 68.1, for CTVstyle1, CTVstyle2, and CTVstyle3, Parotidsuperficial, Rectumsuperior, and Rectumposterior, respectively, showing the great potential of the Prior-guided DDL network for a fast and effortless adaptation to new practice styles.

Performance Deterioration of Deep Learning Models after Clinical Deployment: A Case Study with Auto-segmentation for Definitive Prostate Cancer Radiotherapy

Oct 11, 2022

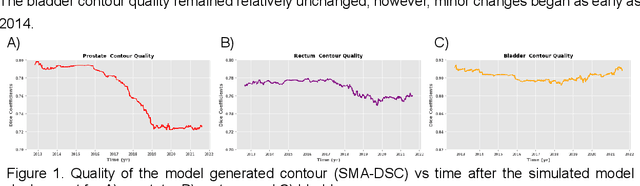

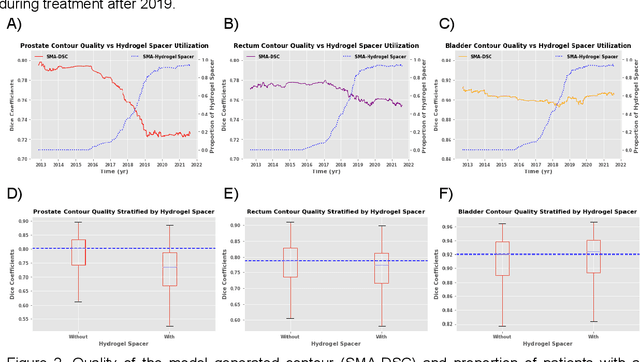

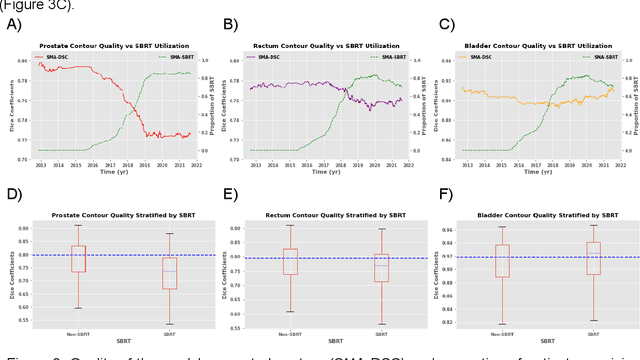

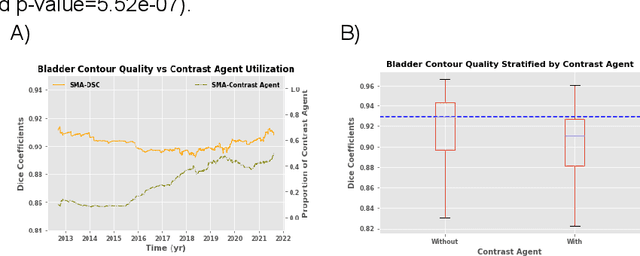

Abstract:In the past decade, deep learning (DL)-based artificial intelligence (AI) has witnessed unprecedented success and has led to much excitement in medicine. However, many successful models have not been implemented in the clinic, predominantly due to concerns regarding the lack of interpretability and generalizability in both spatial and temporal domains. In this work, we used a DL-based auto-segmentation model for intact prostate patients to observe any temporal performance changes and then correlate them to possible explanatory variables. We retrospectively simulated the clinical implementation of our DL model to investigate temporal performance trends. Our cohort included 912 patients with prostate cancer treated with definitive radiotherapy from January 2006 to August 2021 at the University of Texas Southwestern Medical Center (UTSW). We trained a U-Net-based DL auto-segmentation model on the data collected before 2012 and tested it on data collected from 2012 to 2021 to simulate the clinical deployment of the trained model starting in 2012. We visualized the trends using a simple moving average curve and used ANOVA and t-tests to investigate the impact of various clinical factors. The prostate and rectum contour quality decreased rapidly after 2016-2017. Stereotactic body radiotherapy (SBRT) and hydrogel spacer use were significantly associated with prostate contour quality (p=5.6e-12 and 0.002, respectively). SBRT and physicians' styles were significantly associated with the rectum contour quality (p=0.0005 and 0.02, respectively). Only the presence of contrast within the bladder significantly affected the bladder contour quality (p=1.6e-7). We showed that DL model performance decreased over time in concordance with changes in clinical practice patterns and changes in clinical personnel.
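The simple moving average used to visualize the trend replaces each point with the mean of a fixed-size window of consecutive values. A minimal sketch follows; the window length and the toy scores are assumptions, since the abstract does not state them.

```python
def simple_moving_average(values, window):
    """Return the trailing simple moving average of `values`.

    Output element k is the mean of values[k : k + window], so the
    result has len(values) - window + 1 entries.
    """
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    averages = [running / window]
    for i in range(window, len(values)):
        # Slide the window: add the new value, drop the oldest one.
        running += values[i] - values[i - window]
        averages.append(running / window)
    return averages


# Toy per-patient quality scores in chronological order (made-up numbers).
scores = [1, 2, 3, 4, 5]
trend = simple_moving_average(scores, window=3)
```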

Uncertainty estimations methods for a deep learning model to aid in clinical decision-making -- a clinician's perspective

Oct 02, 2022

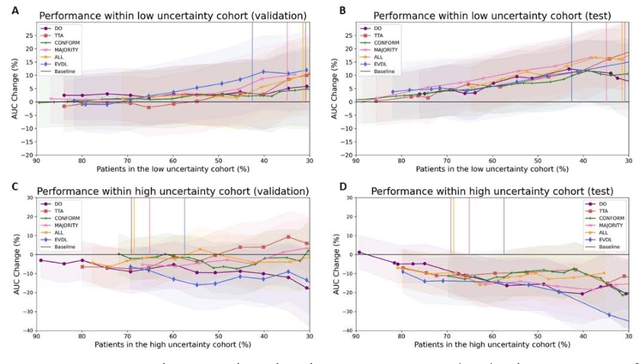

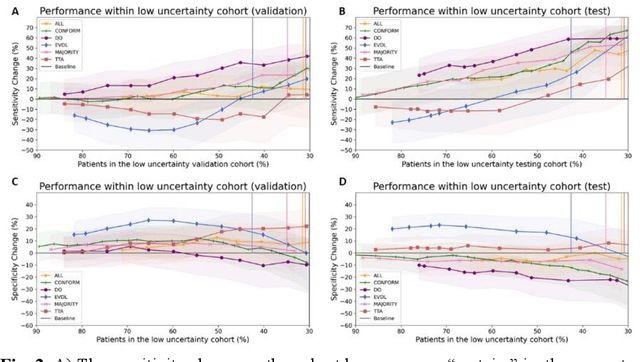

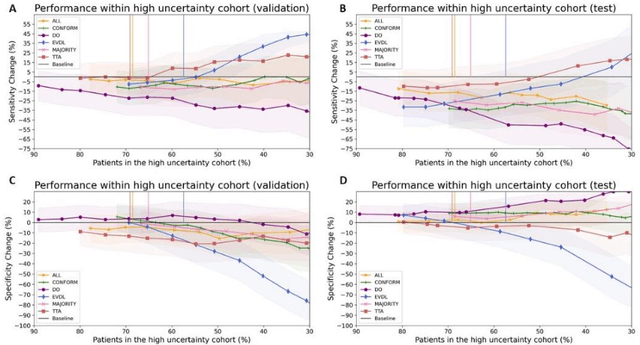

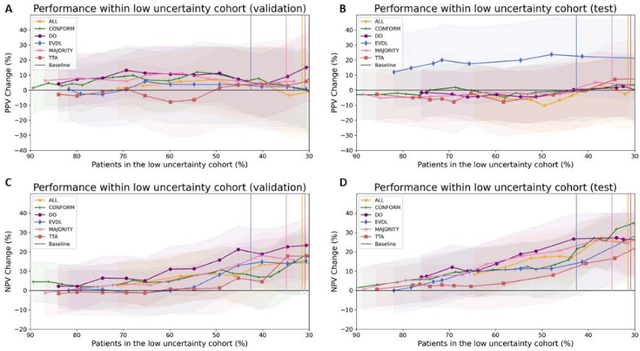

Abstract:Prediction uncertainty estimation has clinical significance as it can potentially quantify prediction reliability. Clinicians may trust 'blackbox' models more if robust reliability information is available, which may lead to more models being adopted into clinical practice. There are several deep learning-inspired uncertainty estimation techniques, but few are implemented on medical datasets -- fewer on single institutional datasets/models. We sought to compare dropout variational inference (DO), test-time augmentation (TTA), conformal predictions, and single deterministic methods for estimating uncertainty using our model trained to predict feeding tube placement for 271 head and neck cancer patients treated with radiation. We compared the area under the curve (AUC), sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) trends for each method at various cutoffs that sought to stratify patients into 'certain' and 'uncertain' cohorts. These cutoffs were obtained by calculating the percentile "uncertainty" within the validation cohort and applied to the testing cohort. Broadly, the AUC, sensitivity, and NPV increased as the predictions were more 'certain' -- i.e., lower uncertainty estimates. However, when a majority vote (implementing 2/3 criteria: DO, TTA, conformal predictions) or a stricter approach (3/3 criteria) was used, AUC, sensitivity, and NPV improved without a notable loss in specificity or PPV. Especially for smaller, single institutional datasets, it may be important to evaluate multiple estimation techniques before incorporating a model into clinical practice.
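The cutoff-and-vote scheme in the abstract can be sketched as follows: each method's cutoff is a percentile of its uncertainty values in the validation cohort, and a test patient is called 'certain' when enough methods fall at or below their cutoffs. The nearest-rank percentile definition, the method keys, and all numbers below are assumptions for illustration.

```python
import math


def percentile_cutoff(validation_uncertainties, percentile):
    """Nearest-rank percentile of the validation-cohort uncertainties."""
    ordered = sorted(validation_uncertainties)
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    return ordered[rank - 1]


def is_certain(patient_uncertainties, cutoffs, min_votes):
    """True when at least `min_votes` methods rate the prediction certain."""
    votes = sum(
        1 for method, value in patient_uncertainties.items()
        if value <= cutoffs[method]
    )
    return votes >= min_votes


# Toy validation uncertainties per method (made-up numbers).
validation = {
    "DO": [0.1, 0.2, 0.3, 0.4],
    "TTA": [0.2, 0.4, 0.6, 0.8],
    "conformal": [1, 1, 2, 2],
}
cutoffs = {m: percentile_cutoff(v, 50) for m, v in validation.items()}

patient = {"DO": 0.15, "TTA": 0.5, "conformal": 1}
majority = is_certain(patient, cutoffs, min_votes=2)  # 2/3 criteria
strict = is_certain(patient, cutoffs, min_votes=3)    # 3/3 criteria
```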

Recurrence-free Survival Prediction under the Guidance of Automatic Gross Tumor Volume Segmentation for Head and Neck Cancers

Sep 22, 2022

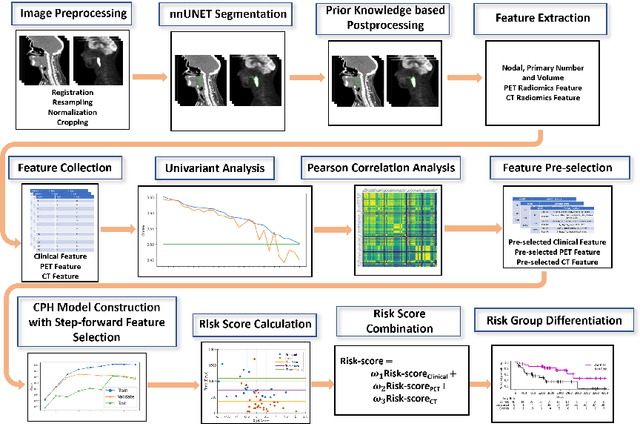

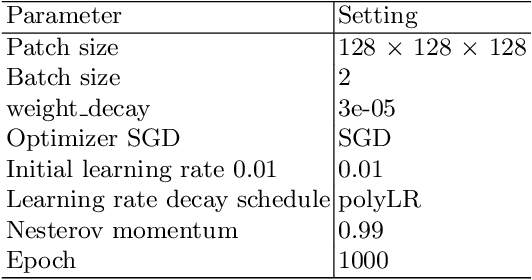

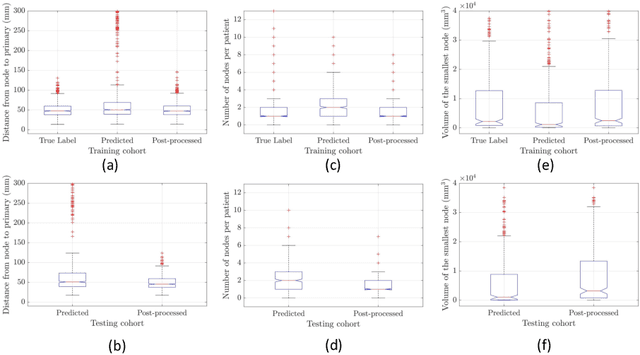

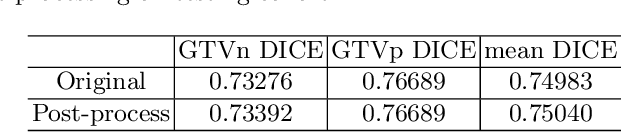

Abstract:For head and neck cancer (HNC) patient management, automatic gross tumor volume (GTV) segmentation and accurate pre-treatment cancer recurrence prediction are of great importance to assist physicians in designing personalized management plans, which have the potential to improve the treatment outcome and quality of life for HNC patients. In this paper, we developed an automated primary tumor (GTVp) and lymph node (GTVn) segmentation method based on combined pre-treatment positron emission tomography/computed tomography (PET/CT) scans of HNC patients. We extracted radiomics features from the segmented tumor volume and constructed a multi-modality tumor recurrence-free survival (RFS) prediction model, which fused the prediction results from separate CT radiomics, PET radiomics, and clinical models. We performed 5-fold cross-validation to train and evaluate our methods on the MICCAI 2022 HEad and neCK TumOR segmentation and outcome prediction challenge (HECKTOR) dataset. The ensemble prediction results on the testing cohort achieved Dice scores of 0.77 and 0.73 for GTVp and GTVn segmentation, respectively, and a C-index value of 0.67 for RFS prediction. The code is publicly available (https://github.com/wangkaiwan/HECKTOR-2022-AIRT). Our team's name is AIRT.
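The C-index reported for RFS prediction measures how often a model ranks patients in the correct order of risk. The sketch below implements Harrell's concordance index in its usual form; it is a generic illustration with made-up data, not the challenge's official scoring code, and the function name is hypothetical.

```python
def concordance_index(times, events, risks):
    """Harrell's C-index for right-censored survival data.

    A pair (i, j) is comparable when patient i had the event and
    times[i] < times[j]. It is concordant when the earlier-failing
    patient has the higher predicted risk; tied risks count as 0.5.
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # censored patients cannot anchor a comparable pair
        for j in range(n):
            if times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy cohort (made-up values): follow-up time, event flag, predicted risk.
times = [2, 4, 6, 8]
events = [1, 1, 0, 1]
risks = [0.9, 0.3, 0.5, 0.1]
c_index = concordance_index(times, events, risks)
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which puts the reported 0.67 in context.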

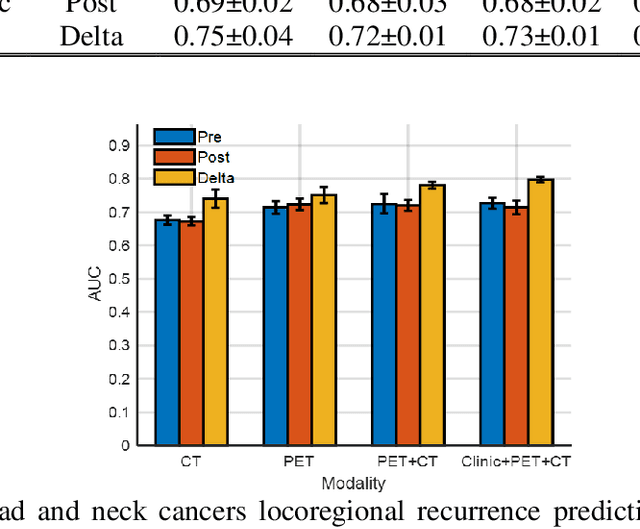

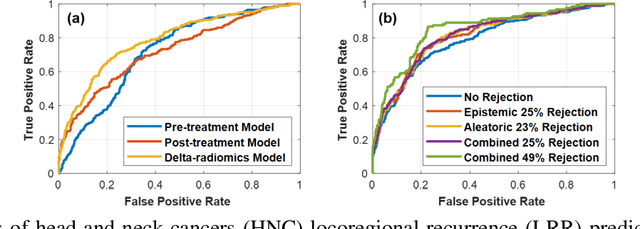

Towards reliable head and neck cancers locoregional recurrence prediction using delta-radiomics and learning with rejection option

Aug 30, 2022

Abstract:A reliable locoregional recurrence (LRR) prediction model is important for the personalized management of head and neck cancer (HNC) patients. This work aims to develop a delta-radiomics feature-based multi-classifier, multi-objective, and multi-modality (Delta-mCOM) model for post-treatment HNC LRR prediction and to adopt a learning with rejection option (LRO) strategy that boosts prediction reliability by rejecting samples with high prediction uncertainties. In this retrospective study, we collected PET/CT images and clinical data from 224 HNC patients. We calculated the differences between radiomics features extracted from PET/CT images acquired before and after radiotherapy as the input features. Using clinical parameters and PET and CT radiomics features, we built and optimized three separate single-modality models. We used multiple classifiers for model construction and employed sensitivity and specificity simultaneously as the training objectives. For testing samples, we fused the output probabilities from all these single-modality models to obtain the final output probabilities of the Delta-mCOM model. In the LRO strategy, we estimated the epistemic and aleatoric uncertainties when predicting with the Delta-mCOM model and identified patients associated with predictions of higher reliability. Predictions with higher epistemic uncertainty or higher aleatoric uncertainty than given thresholds were deemed unreliable, and they were rejected before providing a final prediction. Different thresholds corresponding to different low-reliability prediction rejection ratios were applied. The inclusion of the delta-radiomics features improved the accuracy of HNC LRR prediction, and the proposed Delta-mCOM model can give more reliable predictions by rejecting predictions for samples of high uncertainty using the LRO strategy.
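The rejection rule stated in the abstract (reject when either the epistemic or the aleatoric uncertainty exceeds its threshold) can be sketched in a few lines. How the uncertainties and fused probability are computed is not covered here; the function name, the 0.5 decision cut, and the numbers are assumptions for illustration.

```python
def predict_with_rejection(probability, epistemic, aleatoric,
                           max_epistemic, max_aleatoric, decision_cut=0.5):
    """Return 1 or 0 for an accepted prediction, or None when rejected.

    A prediction is rejected when either uncertainty exceeds its
    threshold. The 0.5 decision cut is an assumed default.
    """
    if epistemic > max_epistemic or aleatoric > max_aleatoric:
        return None
    return int(probability >= decision_cut)


# Toy cases (made-up numbers): fused probability plus two uncertainties.
accepted = predict_with_rejection(0.82, 0.05, 0.10, 0.2, 0.3)
rejected_epistemic = predict_with_rejection(0.82, 0.25, 0.10, 0.2, 0.3)
rejected_aleatoric = predict_with_rejection(0.30, 0.05, 0.35, 0.2, 0.3)
```

Lowering either threshold rejects more samples, which is how different rejection ratios would be obtained.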

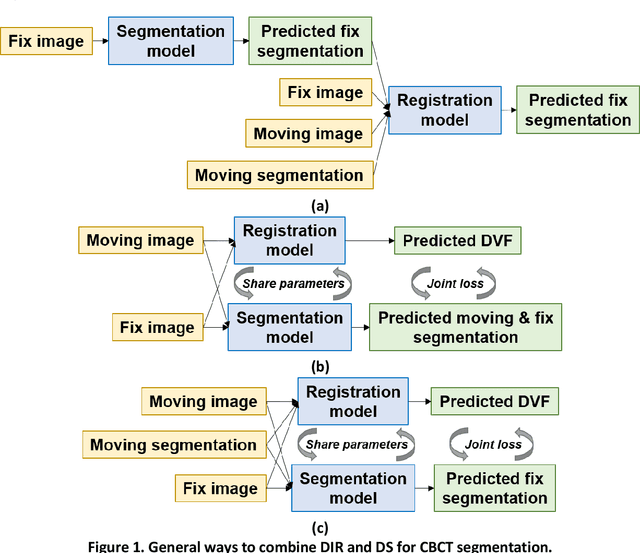

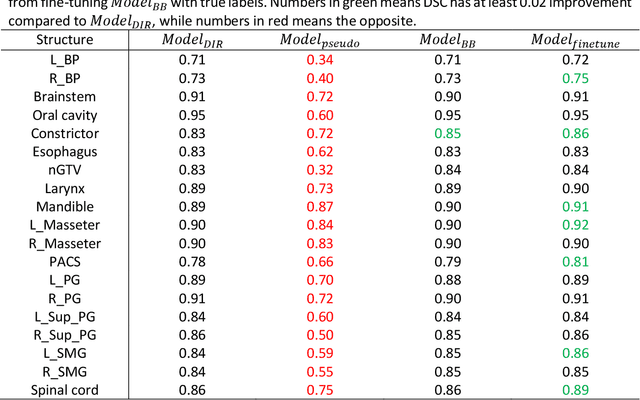

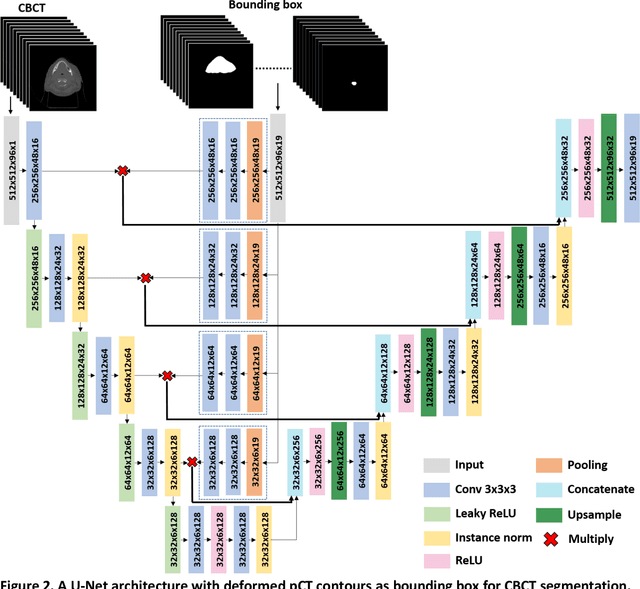

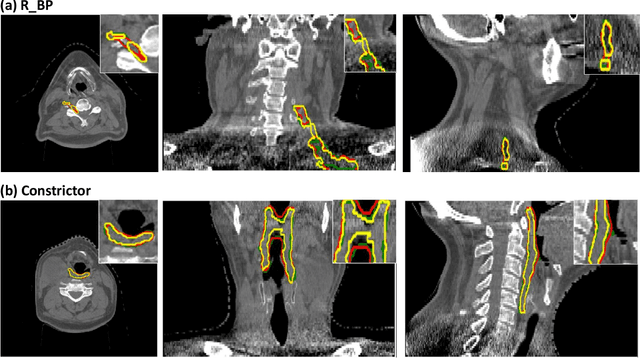

Exploring the combination of deep-learning based direct segmentation and deformable image registration for cone-beam CT based auto-segmentation for adaptive radiotherapy

Jun 07, 2022

Abstract:CBCT-based online adaptive radiotherapy (ART) calls for accurate auto-segmentation models to reduce the time cost for physicians to edit contours, since the patient is immobilized on the treatment table waiting for treatment to start. However, auto-segmentation of CBCT images is a difficult task, mainly due to low image quality and the lack of true labels for training a deep learning (DL) model. CBCT auto-segmentation in ART also differs from other segmentation problems in that manual contours on the planning CT (pCT) are available. To make use of this prior knowledge, we propose to combine deformable image registration (DIR) and direct segmentation (DS) on CBCT for head and neck patients. First, we use deformed pCT contours derived from multiple DIR methods between pCT and CBCT as pseudo labels for training. Second, we use deformed pCT contours as a bounding box to constrain the region of interest for DS. The pseudo labels used for training and the bounding box are generated by different DIR algorithms. Third, we fine-tune the model with the bounding box on true labels. We found that DS on CBCT trained with pseudo labels and without utilizing any prior knowledge has very poor segmentation performance compared to DIR-only segmentation. However, adding deformed pCT contours as a bounding box in the DS network can dramatically improve segmentation performance, making it comparable to DIR-only segmentation. The DS model with the bounding box can be further improved by fine-tuning it with some real labels. Experiments showed that 7 out of 19 structures have at least a 0.2 Dice similarity coefficient increase compared to DIR-only segmentation. Utilizing deformed pCT contours as pseudo labels for training and as a bounding box for shape and location feature extraction in a DS model is a good way to combine DIR and DS.
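Using a deformed contour as a bounding box amounts to finding the extent of the contour mask and restricting the segmentation input to that region. The 2D sketch below shows the idea on nested lists; the margin parameter, the helper names, and the toy arrays are assumptions, and the abstract does not specify how the box is fed to the network.

```python
def bounding_box(mask, margin=0):
    """Inclusive (row_min, row_max, col_min, col_max) of a 2D binary mask.

    The box is expanded by `margin` pixels and clipped to the image.
    Returns None for an empty mask.
    """
    n_rows, n_cols = len(mask), len(mask[0])
    rows = [r for r in range(n_rows) if any(mask[r])]
    cols = [c for c in range(n_cols)
            if any(mask[r][c] for r in range(n_rows))]
    if not rows:
        return None
    return (max(rows[0] - margin, 0), min(rows[-1] + margin, n_rows - 1),
            max(cols[0] - margin, 0), min(cols[-1] + margin, n_cols - 1))


def crop(image, box):
    """Crop a 2D image to an inclusive bounding box."""
    r0, r1, c0, c1 = box
    return [row[c0:c1 + 1] for row in image[r0:r1 + 1]]


# Toy deformed-contour mask and a matching toy image (made-up values).
deformed_mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
cbct_slice = [[10 * r + c for c in range(5)] for r in range(4)]

tight_box = bounding_box(deformed_mask)
padded_box = bounding_box(deformed_mask, margin=1)
region = crop(cbct_slice, tight_box)
```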

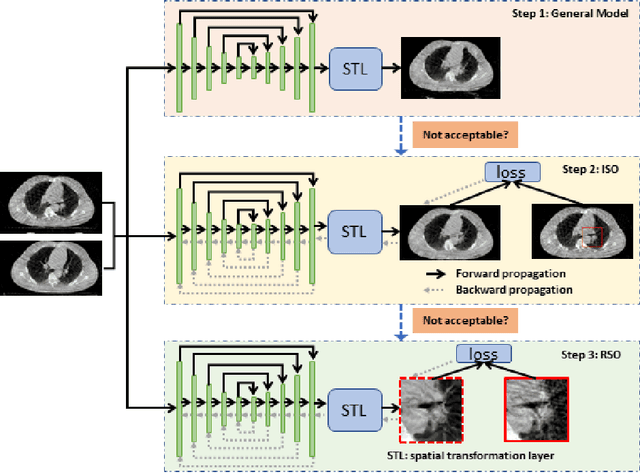

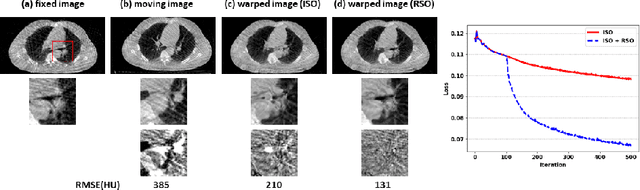

Region Specific Optimization (RSO)-based Deep Interactive Registration

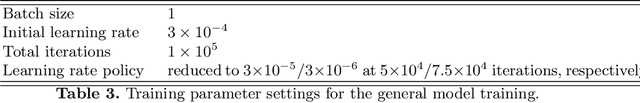

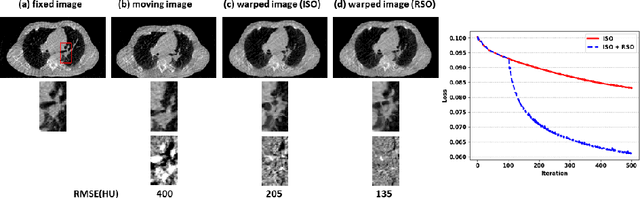

Mar 08, 2022

Abstract:Medical image registration is a fundamental and vital task that affects the efficacy of many downstream clinical tasks. Deep learning (DL)-based deformable image registration (DIR) methods have been investigated, showing state-of-the-art performance. A test-time optimization (TTO) technique was proposed to further improve the DL models' performance. Despite the substantial accuracy improvement with this TTO technique, some regions still exhibited large registration errors even after many TTO iterations. To mitigate this challenge, we first identified the reason why the TTO technique was slow, or even failed, to improve those regions' registration results. We then proposed a two-level TTO technique, i.e., image-specific optimization (ISO) and region-specific optimization (RSO), where the region can be interactively indicated by the clinician during the registration result reviewing process. For both efficiency and accuracy, we further envisioned a three-step DL-based image registration workflow. Experimental results showed that our proposed method outperformed the conventional method qualitatively and quantitatively.
