Michael C. Yip

Data-driven Actuator Selection for Artificial Muscle-Powered Robots

Apr 15, 2021

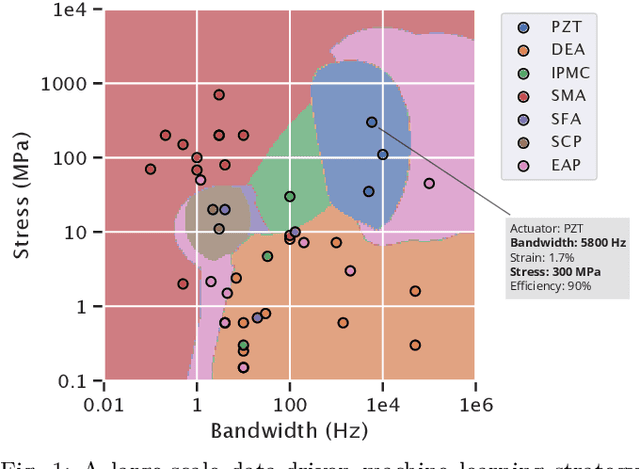

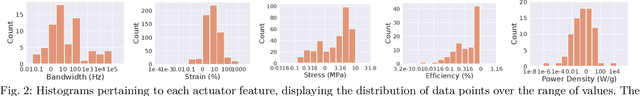

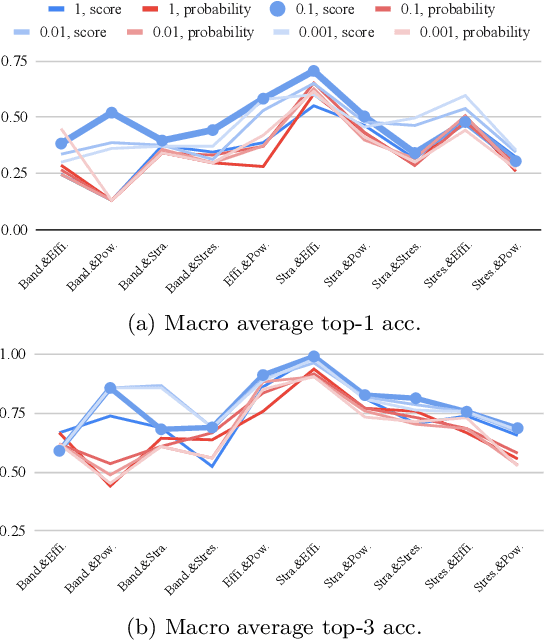

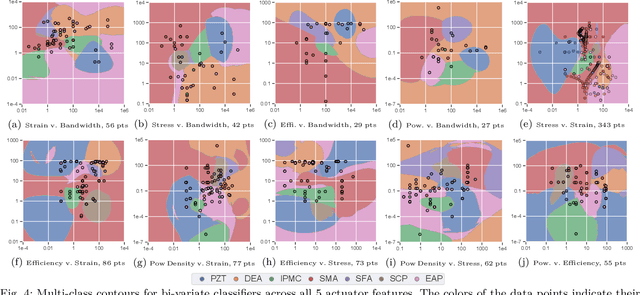

Abstract: Even though artificial muscles have gained popularity due to their compliant, flexible, and compact properties, there is currently no easy way to make informed decisions about the appropriate actuation strategy when designing a muscle-powered robot, which limits the transition of such technologies into broader applications. Moreover, when a new muscle actuation technology is developed, it is difficult to compare it against existing robot muscles. To accelerate the development of artificial muscle applications, we propose a data-driven approach for robot muscle actuator selection using Support Vector Machines (SVM). This first-of-its-kind method gives users insight into which actuators fit their specific needs and actuation performance criteria, making it possible for researchers and engineers with little to no prior knowledge of artificial muscles to focus on application design. It also provides a platform to benchmark existing, new, or yet-to-be-discovered artificial muscle technologies. We test our method on unseen existing robot muscle designs to demonstrate its usability in real-world applications. We provide an open-access, web-searchable interface for easy access to our models that will additionally allow for continuous contribution of new actuator data from groups around the world to enhance and expand these models.

Optimal Multi-Manipulator Arm Placement for Maximal Dexterity during Robotics Surgery

Apr 13, 2021

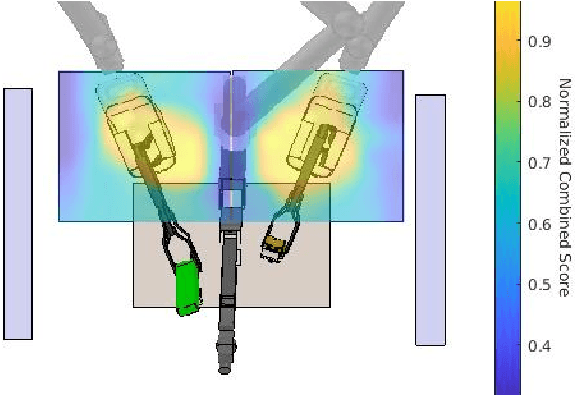

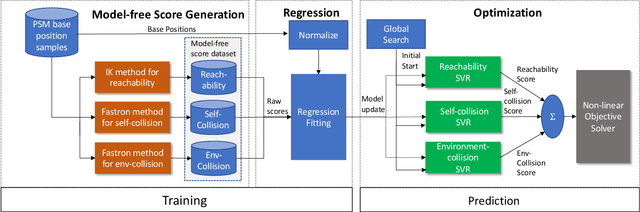

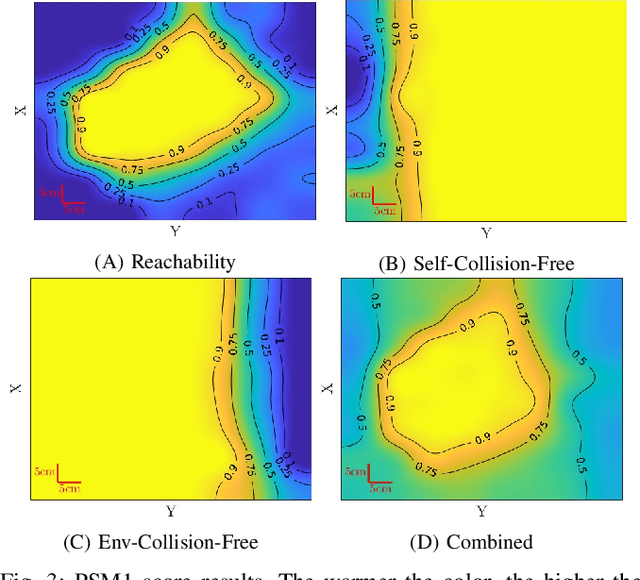

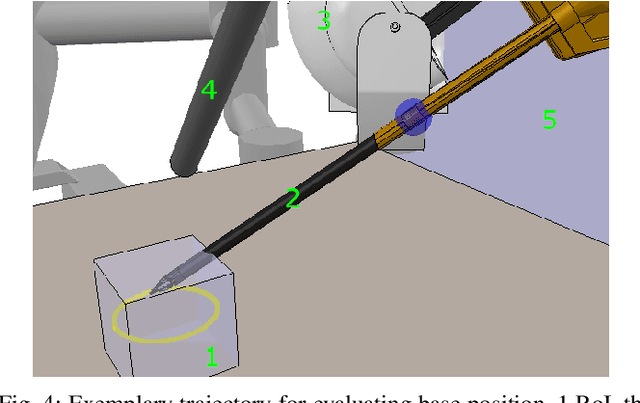

Abstract: Robot arm placement is often a limitation in surgical preoperative procedures, which rely on trained staff to evaluate and decide on the optimal positions for the arms. Given new and different patient anatomies, it can be challenging to make an informed choice, leading to more frequently colliding arms or limited manipulator workspaces. In this paper, we develop a method to generate the optimal manipulator base positions for the multi-port da Vinci surgical system that minimizes self-collision and environment-collision, and maximizes the surgeon's reachability inside the patient. Scoring functions are defined for each criterion so that they may be optimized over. Because multi-manipulator setups offer a large number of free parameters for adjusting the base position of each arm, the challenge becomes how to assess possible setups quickly. We thus also propose methods that perform fast queries of each measure with the use of a proxy collision-checker. We then develop an optimization method to determine the optimal position using the scoring functions. We evaluate the optimality of the base positions for the robot arms on canonical trajectories, and show that the solution yielded by the optimization program can satisfy each criterion. The metrics and optimization strategy are generalizable to other surgical robotic platforms so that patient-side manipulator positioning may be optimized and solved.

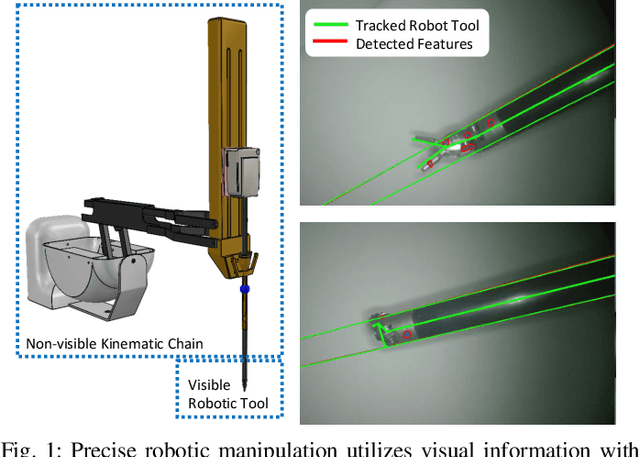

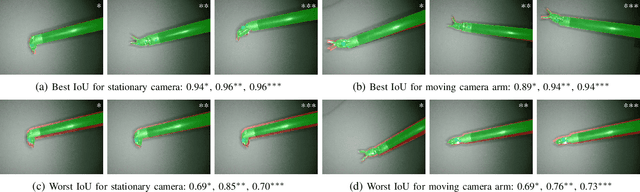

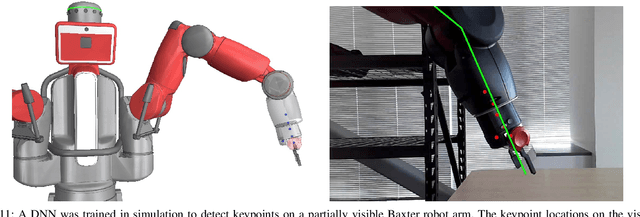

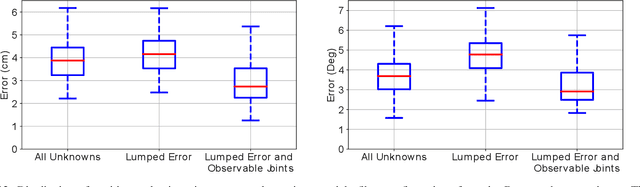

Robotic Tool Tracking under Partially Visible Kinematic Chain: A Unified Approach

Feb 11, 2021

Abstract: Whenever a robot manipulator is controlled via visual feedback, the transformation between the robot and camera frame must be known. However, when cameras capture only a portion of the robot manipulator in order to better perceive the environment being interacted with, there is greater sensitivity to errors in calibration of the base-to-camera transform. A secondary source of uncertainty during robotic control is inaccuracy in joint angle measurements, which can be caused by biases in positioning and complex transmission effects such as backlash and cable stretch. In this work, we bring together these two sets of unknown parameters into a unified problem formulation for the case where the kinematic chain is partially visible in the camera view. We prove that these parameters are non-identifiable, implying that explicit estimation of them is infeasible. To overcome this, we derive a smaller set of parameters that we call Lumped Error, since it lumps together the errors of calibration and joint angle measurements. A particle filter method is presented and tested in simulation and on two real-world robots to estimate the Lumped Error and show the efficiency of this parameter reduction.
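To make the particle filter idea in the abstract above concrete, here is a minimal sketch in Python. It is not the authors' implementation: it assumes, purely for illustration, that the lumped error is a single scalar offset observed directly with Gaussian noise, whereas the paper estimates a richer set of parameters from camera images. The function names, noise levels, and particle count are all hypothetical.

```python
import math
import random


def simulate_observations(true_offset, n_steps=40, obs_sigma=0.05, seed=1):
    """Generate noisy direct observations of a constant scalar offset (toy data)."""
    rng = random.Random(seed)
    return [true_offset + rng.gauss(0.0, obs_sigma) for _ in range(n_steps)]


def estimate_offset(observations, n_particles=500, obs_sigma=0.05,
                    jitter_sigma=0.01, seed=0):
    """Bootstrap particle filter for a slowly varying scalar offset.

    Assumption: the offset lies in [-1, 1] and each observation is the
    offset plus Gaussian noise with standard deviation obs_sigma.
    """
    rng = random.Random(seed)
    # Initialise particles uniformly over the assumed prior range.
    particles = [rng.uniform(-1.0, 1.0) for _ in range(n_particles)]
    for z in observations:
        # Predict: a small random walk keeps the particle set diverse.
        particles = [p + rng.gauss(0.0, jitter_sigma) for p in particles]
        # Update: weight each particle by the Gaussian observation likelihood.
        weights = [math.exp(-0.5 * ((z - p) / obs_sigma) ** 2)
                   for p in particles]
        # Resample particles in proportion to their weights.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    # The posterior mean serves as the point estimate.
    return sum(particles) / n_particles


if __name__ == "__main__":
    obs = simulate_observations(true_offset=0.3)
    print("estimated offset:", estimate_offset(obs))
```

The predict, weight, and resample loop is the generic bootstrap filter; only the observation model would change when the measurement comes from image features rather than a direct reading.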

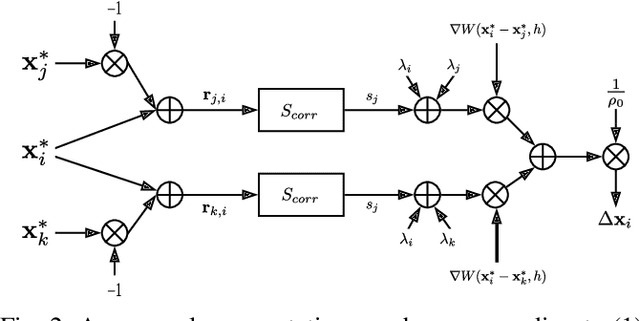

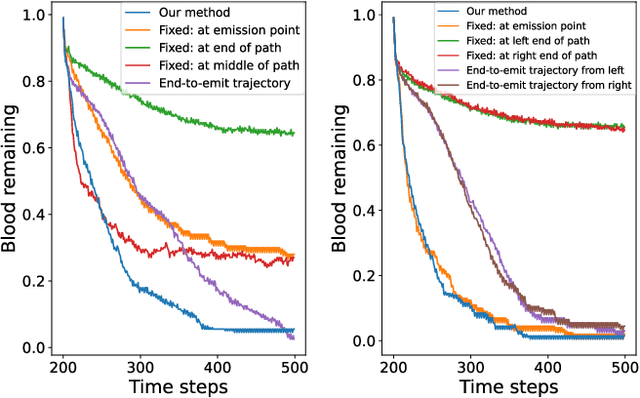

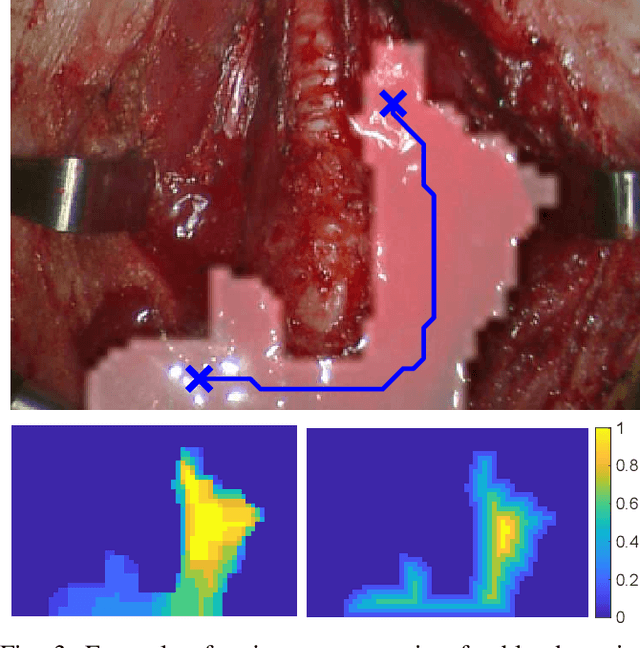

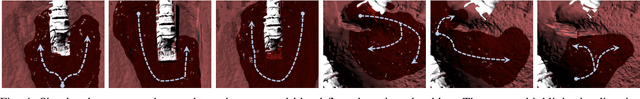

Model-Predictive Control of Blood Suction for Surgical Hemostasis using Differentiable Fluid Simulations

Feb 02, 2021

Abstract: Recent developments in surgical robotics have led to new advancements in the automation of surgical sub-tasks such as suturing, soft tissue manipulation, tissue tensioning, and cutting. However, integration of dynamics to optimize these control policies for the variety of scenes encountered in surgery remains unsolved. Towards this effort, we investigate the integration of differentiable fluid dynamics to optimize a suction tool's trajectory so that it clears the surgical field of blood as fast as possible. The fully differentiable fluid dynamics is integrated with a novel suction model for effective model predictive control of the tool. The differentiability of the fluid model is crucial because we utilize the gradients of the fluid states with respect to the suction tool position to optimize the trajectory. Through a series of experiments, we demonstrate how, by incorporating fluid models, the trajectories generated by our method can perform as well as or better than handcrafted human-intuitive suction policies. We also show that our method is adaptable and can work in different cavity conditions where a single handcrafted strategy fails.

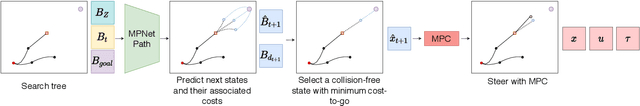

MPC-MPNet: Model-Predictive Motion Planning Networks for Fast, Near-Optimal Planning under Kinodynamic Constraints

Jan 17, 2021

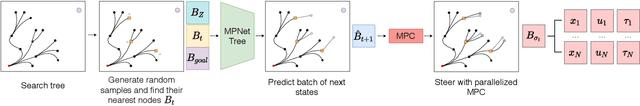

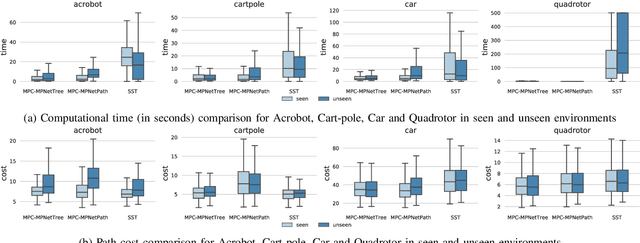

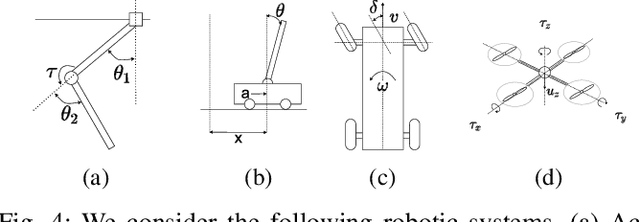

Abstract: Kinodynamic Motion Planning (KMP) seeks a robot motion subject to concurrent kinematic and dynamic constraints. To date, only a few methods solve KMP problems, and those that exist struggle to find near-optimal solutions and exhibit high computational complexity as the planning space dimensionality increases. To address these challenges, we present a scalable, imitation learning-based, Model-Predictive Motion Planning Networks framework that quickly finds near-optimal path solutions with worst-case theoretical guarantees under kinodynamic constraints for practical underactuated systems. Our framework introduces two algorithms built on a neural generator, discriminator, and a parallelizable Model Predictive Controller (MPC). The generator outputs various informed states towards the given target, and the discriminator selects the best possible subset from them for the extension. The MPC locally connects the selected informed states while satisfying the given constraints, leading to feasible, near-optimal solutions. We evaluate our algorithms on a range of cluttered, kinodynamically constrained, and underactuated planning problems, with results indicating significant improvements in computation times, path qualities, and success rates over existing methods.

Bimanual Regrasping for Suture Needles using Reinforcement Learning for Rapid Motion Planning

Nov 09, 2020

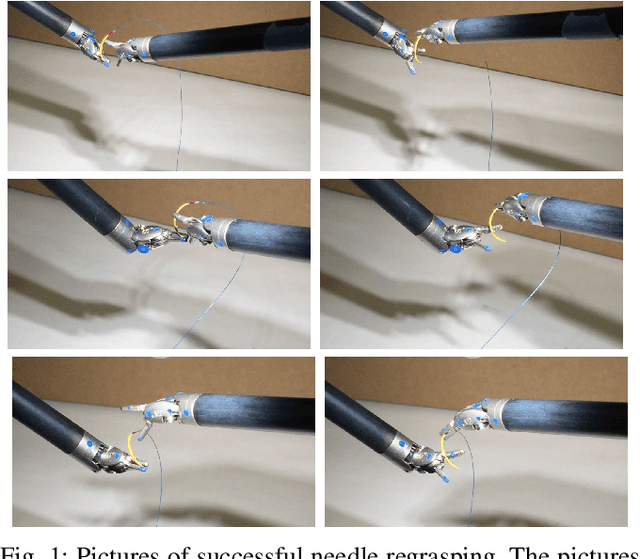

Abstract: Regrasping a suture needle is an important process in suturing, and a previous study has shown that it takes on average 7.4s before the needle is thrown again. To bring efficiency into suturing, prior work either designs a task-specific mechanism or guides the gripper toward some specific pick-up point for proper grasping of a needle. Yet, these methods are usually not deployable when the working space is changed. These prior efforts highlight the need for more efficient regrasping and more generalizability of a proposed method. Therefore, in this work, we present rapid trajectory generation for bimanual needle regrasping via reinforcement learning (RL). Demonstrations from a sampling-based motion planning algorithm are incorporated to speed up the learning. In addition, we propose ego-centric state and action spaces for this bimanual planning problem, where the reference frames are on the end-effectors instead of some fixed frame. Thus, the learned policy can be directly applied to any robot configuration and even to different robot arms. Our experiments in simulation show that the success rate of a single pass is 97%, and the planning time is 0.0212s on average, which outperforms other widely used motion planning algorithms. For the real-world experiments, the success rate is 73.3% if the needle pose is reconstructed from an RGB image, with a planning time of 0.0846s and a run time of 5.1454s. If the needle pose is known beforehand, the success rate becomes 90.5%, with a planning time of 0.0807s and a run time of 2.8801s.
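The ego-centric idea in the abstract above can be illustrated with a small planar sketch. This is not the authors' state representation: it assumes a 2D gripper pose `(x, y, theta)` and a 2D needle position, and the helper names are hypothetical. The point it shows is that a quantity expressed in the end-effector frame does not change when the whole scene is moved rigidly, which is why a policy defined on such quantities can transfer across robot configurations.

```python
import math


def to_ego_frame(gripper_pose, point):
    """Express a world-frame 2D point in the frame of a gripper (x, y, theta)."""
    x, y, theta = gripper_pose
    dx, dy = point[0] - x, point[1] - y
    c, s = math.cos(theta), math.sin(theta)
    # Apply the inverse rotation (by -theta) to the world-frame offset.
    return (c * dx + s * dy, -s * dx + c * dy)


def transform_point(point, angle, shift):
    """Rotate a world-frame point by angle about the origin, then translate."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * point[0] - s * point[1] + shift[0],
            s * point[0] + c * point[1] + shift[1])


def transform_pose(pose, angle, shift):
    """Apply the same rigid motion to a pose (x, y, theta)."""
    x, y = transform_point(pose[:2], angle, shift)
    return (x, y, pose[2] + angle)


if __name__ == "__main__":
    gripper = (1.0, 2.0, 0.5)
    needle = (1.5, 2.5)
    before = to_ego_frame(gripper, needle)
    # Move the whole scene: the ego-centric coordinates stay the same.
    after = to_ego_frame(transform_pose(gripper, 1.2, (3.0, -4.0)),
                         transform_point(needle, 1.2, (3.0, -4.0)))
    print(before, after)
```

In a fixed world frame the same scene move would change every coordinate the policy sees, so the policy would have to relearn the task for each new base placement.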

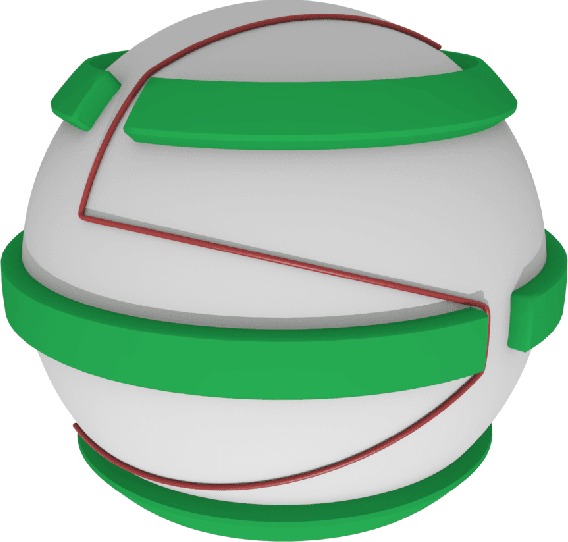

Real-to-Sim Registration of Deformable Soft Tissue with Position-Based Dynamics for Surgical Robot Autonomy

Nov 03, 2020

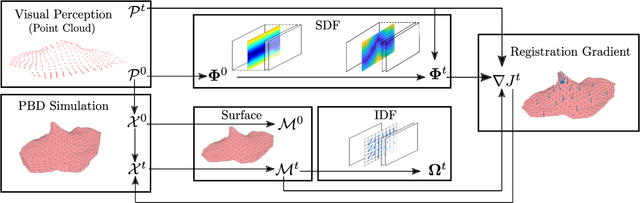

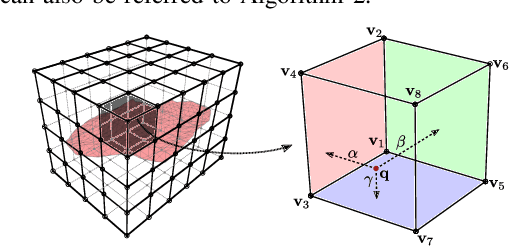

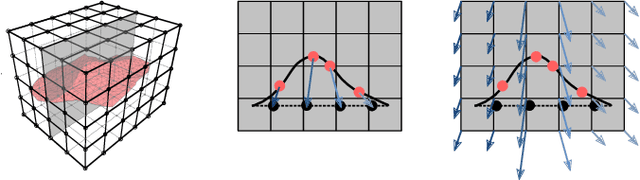

Abstract: Autonomy in robotic surgery is very challenging in unstructured environments, especially when interacting with deformable soft tissues. This creates a challenge for model-based control methods that must account for deformation dynamics during tissue manipulation. Previous works in vision-based perception can capture the geometric changes within the scene; however, integration with dynamic properties to achieve accurate and safe model-based controllers has not been considered before. Considering the mechanical coupling between the robot and the environment, it is crucial to develop a registered, simulated dynamical model. In this work, we propose an online, continuous, real-to-sim registration method to bridge from 3D visual perception to position-based dynamics (PBD) modeling of tissues. The PBD method is employed to simulate soft tissue dynamics as well as rigid tool interactions for model-based control. Meanwhile, a vision-based strategy is used to generate 3D reconstructed point cloud surfaces that can be used to register and update the simulation, accounting for differences between the simulation and the real world. To verify this real-to-sim approach, tissue manipulation experiments have been conducted on the da Vinci Research Kit. Our real-to-sim approach successfully reduced registration errors online, which is especially important for safety during autonomous control. Moreover, the results show higher accuracy in occluded areas than fusion-based reconstruction.
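For readers unfamiliar with position-based dynamics, the core operation is to move particle positions directly so that geometric constraints are satisfied. The sketch below shows the standard distance-constraint projection between two particles; it is a generic textbook step, not the paper's tissue model or registration method, and the function name and arguments are illustrative.

```python
import math


def project_distance_constraint(p1, p2, w1, w2, rest_length):
    """Move two particles so their separation equals rest_length.

    w1 and w2 are inverse masses; a value of 0 pins that particle in place.
    Returns the corrected positions as new lists.
    """
    delta = [b - a for a, b in zip(p1, p2)]
    dist = math.sqrt(sum(d * d for d in delta))
    if dist == 0.0 or (w1 + w2) == 0.0:
        # Direction undefined, or both particles pinned: nothing to correct.
        return list(p1), list(p2)
    normal = [d / dist for d in delta]
    # Constraint violation, shared out in proportion to the inverse masses.
    scale = (dist - rest_length) / (w1 + w2)
    new_p1 = [a + w1 * scale * n for a, n in zip(p1, normal)]
    new_p2 = [b - w2 * scale * n for b, n in zip(p2, normal)]
    return new_p1, new_p2


if __name__ == "__main__":
    a, b = project_distance_constraint((0.0, 0.0, 0.0), (2.0, 0.0, 0.0),
                                       1.0, 1.0, 1.0)
    print(a, b)
```

A full PBD solver would iterate projections like this over all constraints in the mesh several times per time step, together with a velocity update derived from the position change.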

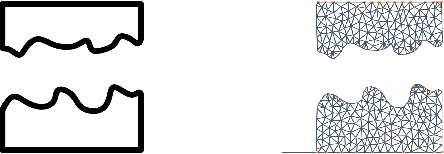

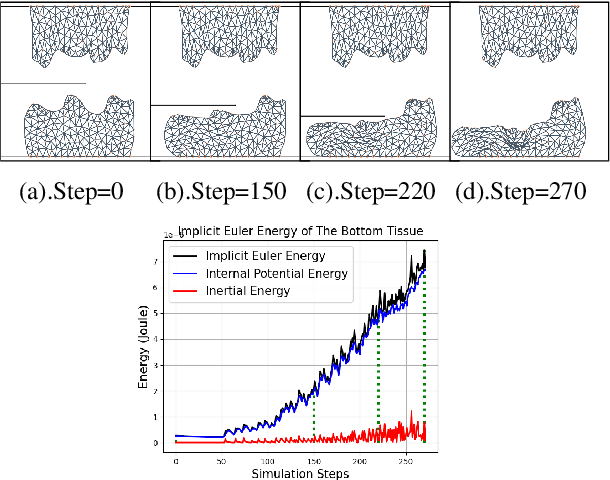

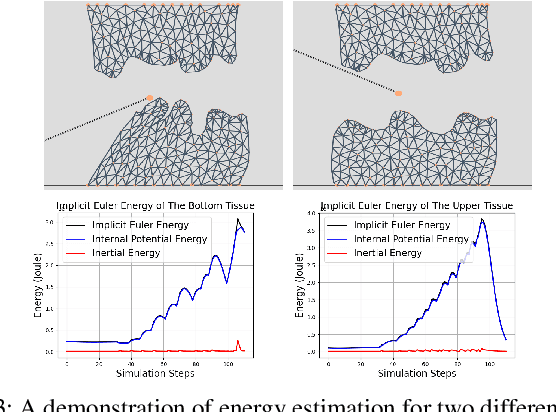

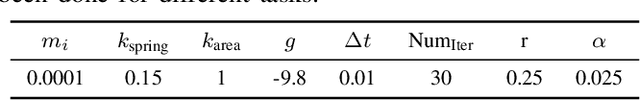

A 2D Surgical Simulation Framework for Tool-Tissue Interaction

Oct 26, 2020

Abstract: The control and task automation of robotic surgical systems is very challenging, especially in soft tissue manipulation, due to unpredictable deformations. Thus, an accurate simulator of soft tissues that can interact with robot manipulators is necessary. In this work, we propose a novel 2D simulation framework for tool-tissue interaction. This framework continuously tracks the motion of the manipulator and simulates the tissue deformation with collision detection. The deformation energy can be computed for control and planning tasks.

Constrained Motion Planning Networks X

Oct 17, 2020

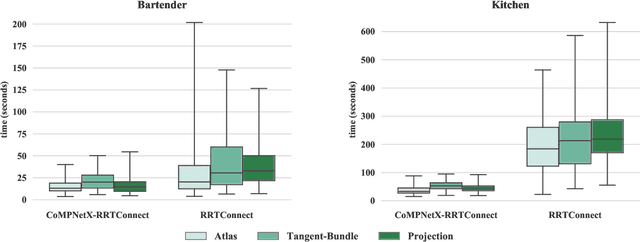

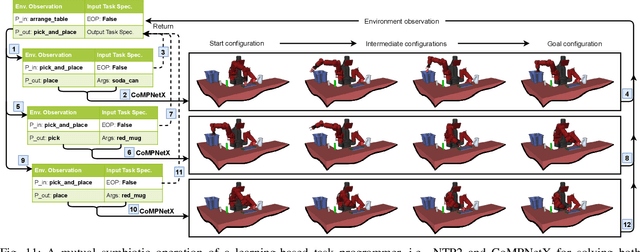

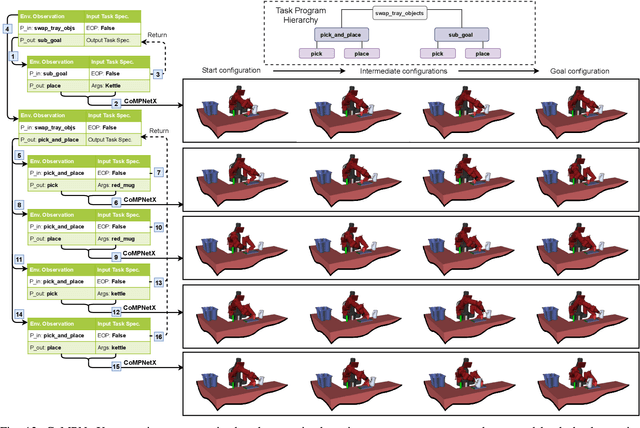

Abstract: Constrained motion planning is a challenging field of research, aiming for computationally efficient methods that can find a collision-free path connecting a given start and goal by traversing zero-volume constraint manifolds for a given planning problem. These planning problems come up surprisingly frequently, such as in robot manipulation for performing daily life assistive tasks. However, few solutions to constrained motion planning are available, and those that exist struggle with high computational time complexity in finding a path solution on the manifolds. To address this challenge, we present Constrained Motion Planning Networks X (CoMPNetX). It is a neural planning approach, comprising a conditional deep neural generator and discriminator with neural gradients-based fast projections to the constraint manifolds. We also introduce neural task and scene representations, conditioned on which CoMPNetX generates implicit manifold configurations to turbo-charge any underlying classical planner, such as Sampling-based Motion Planning methods, for quickly solving complex constrained planning tasks. We show that our method, equipped with any constraint-adherence technique, finds path solutions with high success rates and lower computation times than state-of-the-art traditional path-finding tools on various challenging scenarios.
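As background for the projection step mentioned in the abstract above, the sketch below shows a classical Newton-style projection of a configuration onto a constraint manifold defined by a single scalar equation f(q) = 0. It is not the neural-gradient projection used by CoMPNetX; the unit-sphere constraint and all function names are assumptions chosen only to keep the example small.

```python
import math


def project_to_manifold(q, constraint, jacobian, tol=1e-9, max_iters=50):
    """Iteratively move q onto the set {q : constraint(q) = 0}.

    Uses the minimum-norm Newton step q <- q - J^T f / (J J^T) for a
    single scalar constraint with gradient (row Jacobian) J.
    """
    q = list(q)
    for _ in range(max_iters):
        f = constraint(q)
        if abs(f) < tol:
            break
        grad = jacobian(q)
        grad_sq = sum(g * g for g in grad)
        if grad_sq == 0.0:
            break  # Gradient vanished: no descent direction available.
        q = [qi - f * gi / grad_sq for qi, gi in zip(q, grad)]
    return q


def unit_sphere(q):
    """Toy constraint: q must lie on the unit sphere."""
    return sum(x * x for x in q) - 1.0


def unit_sphere_jacobian(q):
    """Gradient of the unit-sphere constraint."""
    return [2.0 * x for x in q]


if __name__ == "__main__":
    q_proj = project_to_manifold([1.0, 2.0, 2.0], unit_sphere,
                                 unit_sphere_jacobian)
    print(q_proj, math.sqrt(sum(x * x for x in q_proj)))
```

Sampling-based planners call a projection like this on every sampled configuration, which is why the cost of each projection strongly affects total planning time.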

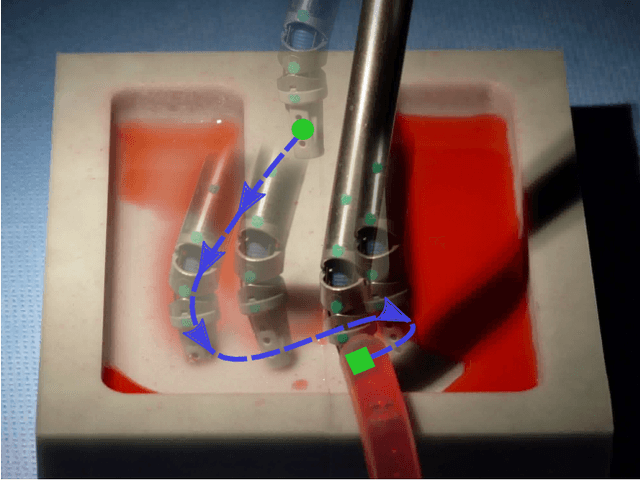

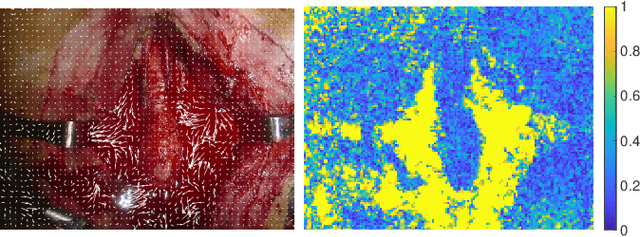

Autonomous Robotic Suction to Clear the Surgical Field for Hemostasis using Image-based Blood Flow Detection

Oct 16, 2020

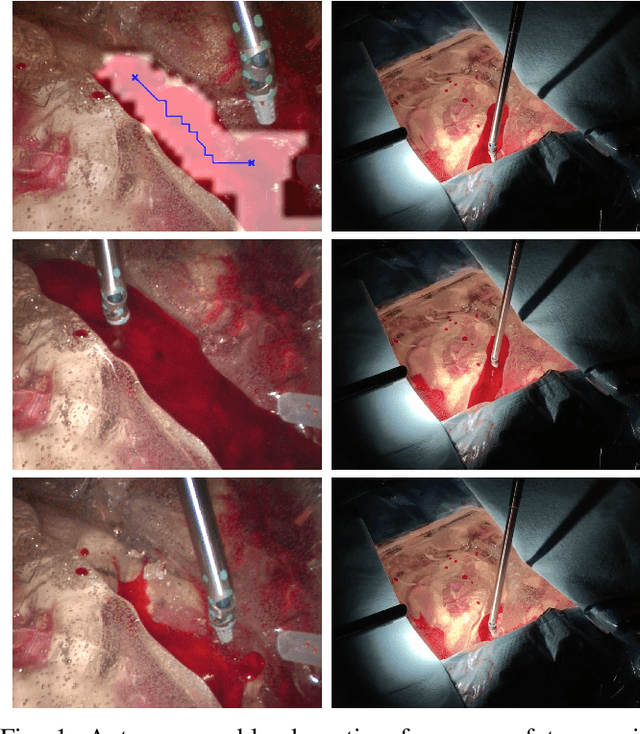

Abstract: Autonomous robotic surgery has seen significant progress over the last decade, with the aims of reducing surgeon fatigue, improving procedural consistency, and perhaps one day taking over surgery itself. However, automation has not been applied to the critical surgical task of controlling tissue and blood vessel bleeding, known as hemostasis. The task of hemostasis covers a spectrum of bleeding sources and a range of blood velocity, trajectory, and volume. In an extreme case, an uncontrolled blood vessel fills the surgical field with flowing blood. In this work, we present the first automated solution for hemostasis through development of a novel probabilistic blood flow detection algorithm and a trajectory generation technique that guides autonomous suction tools towards pooling blood. The blood flow detection algorithm is tested in both simulated scenes and in a real-life trauma scenario involving a hemorrhage that occurred during thyroidectomy. The complete solution is tested in a physical lab setting with the da Vinci Research Kit (dVRK) and a simulated surgical cavity for blood to flow through. The results show that our automated solution has accurate detection, a fast reaction time, and effective removal of the flowing blood. Therefore, the proposed methods are powerful tools for clearing the surgical field, which can be followed by either a surgeon or future robotic automation developments to close the vessel rupture.
