Agent Modeling as Auxiliary Task for Deep Reinforcement Learning

Jul 22, 2019 · Pablo Hernandez-Leal, Bilal Kartal, Matthew E. Taylor

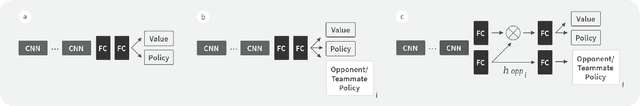

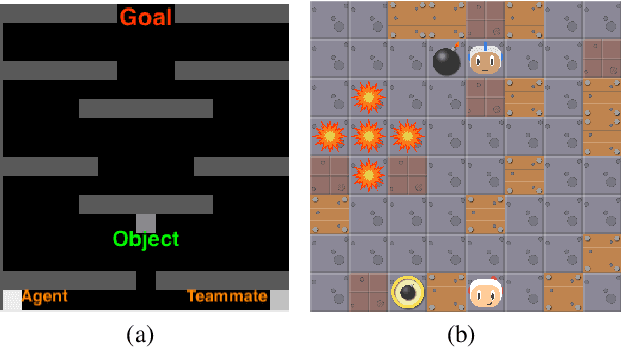

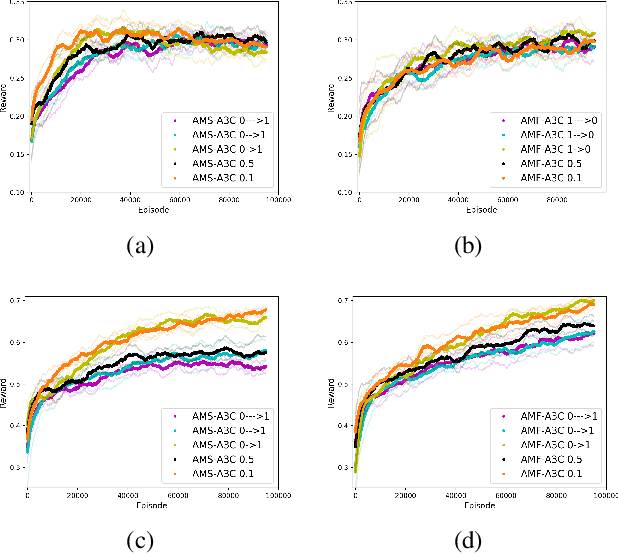

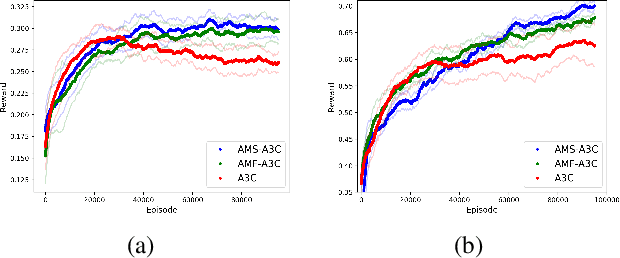

In this paper we explore how actor-critic methods in deep reinforcement learning, in particular Asynchronous Advantage Actor-Critic (A3C), can be extended with agent modeling. Inspired by recent works on representation learning and multiagent deep reinforcement learning, we propose two architectures to perform agent modeling: the first one based on parameter sharing, and the second one based on agent policy features. Both architectures aim to learn other agents' policies as auxiliary tasks, besides the standard actor (policy) and critic (values). We performed experiments in both cooperative and competitive domains. The former is a problem of coordinated multiagent object transportation and the latter is a two-player mini version of the Pommerman game. Our results show that the proposed architectures stabilize learning and outperform the standard A3C architecture when learning a best response in terms of expected rewards.

Interactive Learning of Environment Dynamics for Sequential Tasks

Jul 19, 2019 · Robert Loftin, Bei Peng, Matthew E. Taylor, Michael L. Littman, David L. Roberts

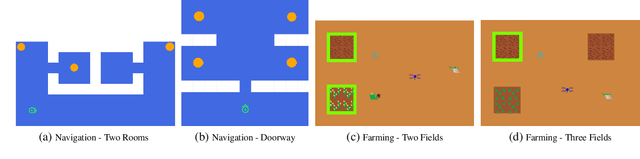

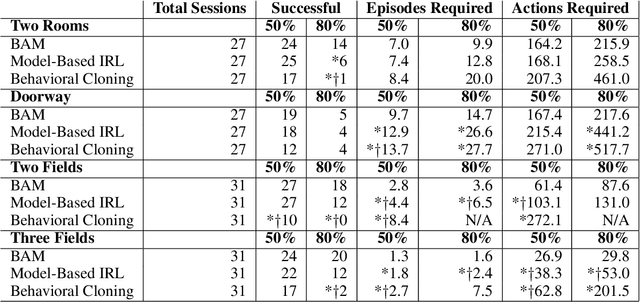

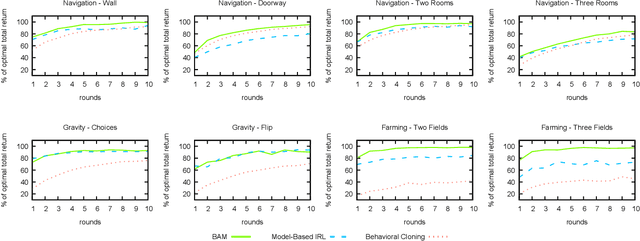

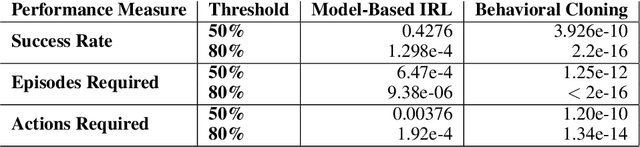

In order for robots and other artificial agents to efficiently learn to perform useful tasks defined by an end user, they must understand not only the goals of those tasks, but also the structure and dynamics of that user's environment. While existing work has looked at how the goals of a task can be inferred from a human teacher, the agent is often left to learn about the environment on its own. To address this limitation, we develop an algorithm, Behavior Aware Modeling (BAM), which incorporates a teacher's knowledge into a model of the transition dynamics of an agent's environment. We evaluate BAM both in simulation and with real human teachers, learning from a combination of task demonstrations and evaluative feedback, and show that it can outperform approaches which do not explicitly consider this source of dynamics knowledge.

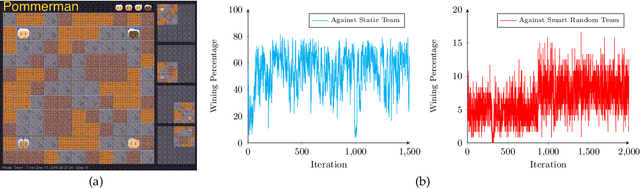

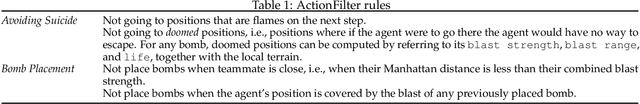

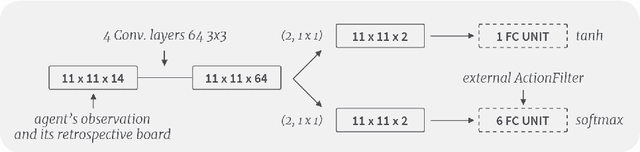

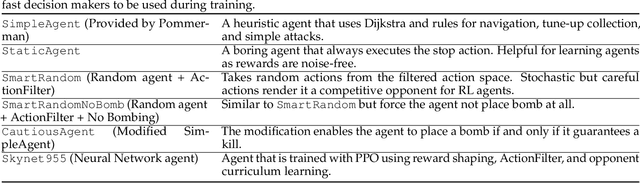

Skynet: A Top Deep RL Agent in the Inaugural Pommerman Team Competition

Apr 20, 2019 · Chao Gao, Pablo Hernandez-Leal, Bilal Kartal, Matthew E. Taylor

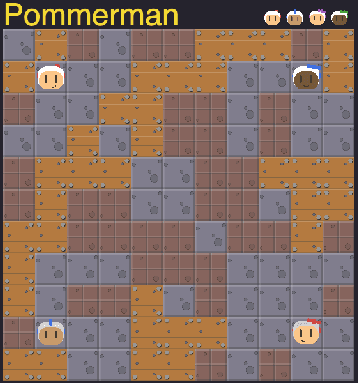

The Pommerman Team Environment is a recently proposed benchmark which involves a multi-agent domain with challenges such as partial observability, decentralized execution (without communication), and very sparse and delayed rewards. The inaugural Pommerman Team Competition held at NeurIPS 2018 hosted 25 participants who each submitted a team of 2 agents. Our submission nn_team_skynet955_skynet955 won 2nd place in the "learning agents" category. Our team is composed of 2 neural networks trained with state-of-the-art deep reinforcement learning algorithms and makes use of concepts like reward shaping, curriculum learning, and an automatic reasoning module for action pruning. Here, we describe these elements and additionally we present a collection of open-sourced agents that can be used for training and testing in the Pommerman environment. Code available at: https://github.com/BorealisAI/pommerman-baseline

Safer Deep RL with Shallow MCTS: A Case Study in Pommerman

Apr 10, 2019 · Bilal Kartal, Pablo Hernandez-Leal, Chao Gao, Matthew E. Taylor

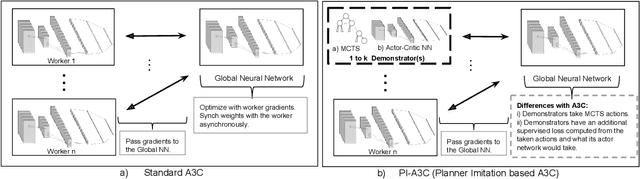

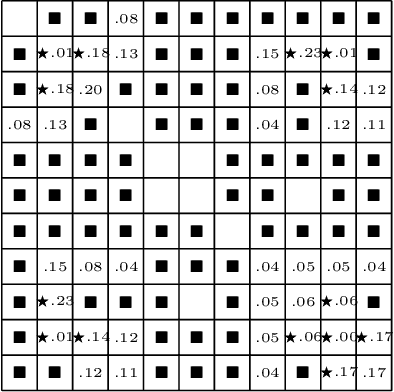

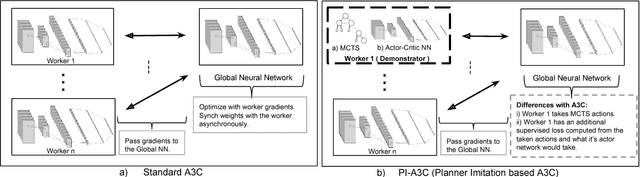

Safe reinforcement learning has many variants and it is still an open research problem. Here, we focus on how to use action guidance by means of a non-expert demonstrator to avoid catastrophic events in a domain with sparse, delayed, and deceptive rewards: the recently proposed multi-agent benchmark of Pommerman. This domain is very challenging for reinforcement learning (RL): past work has shown that model-free RL algorithms fail to achieve significant learning. In this paper, we shed light on the reasons behind this failure by exemplifying and analyzing the high rate of catastrophic events (i.e., suicides) that happen under random exploration in this domain. While model-free random exploration is typically futile, we propose a new framework where even a non-expert simulated demonstrator, e.g., planning algorithms such as Monte Carlo tree search with a small number of rollouts, can be integrated into asynchronous distributed deep reinforcement learning methods. Compared to vanilla deep RL algorithms, our proposed methods both learn faster and converge to better policies on a two-player mini version of the Pommerman game.

Jointly Pre-training with Supervised, Autoencoder, and Value Losses for Deep Reinforcement Learning

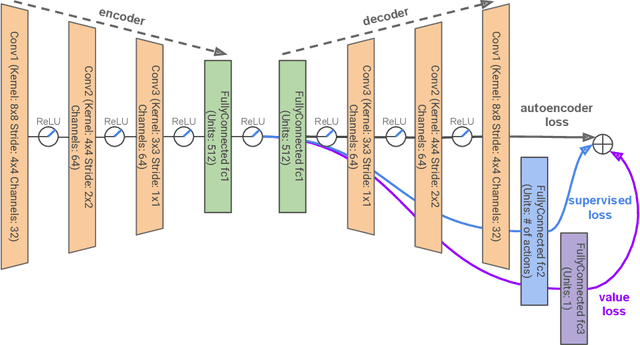

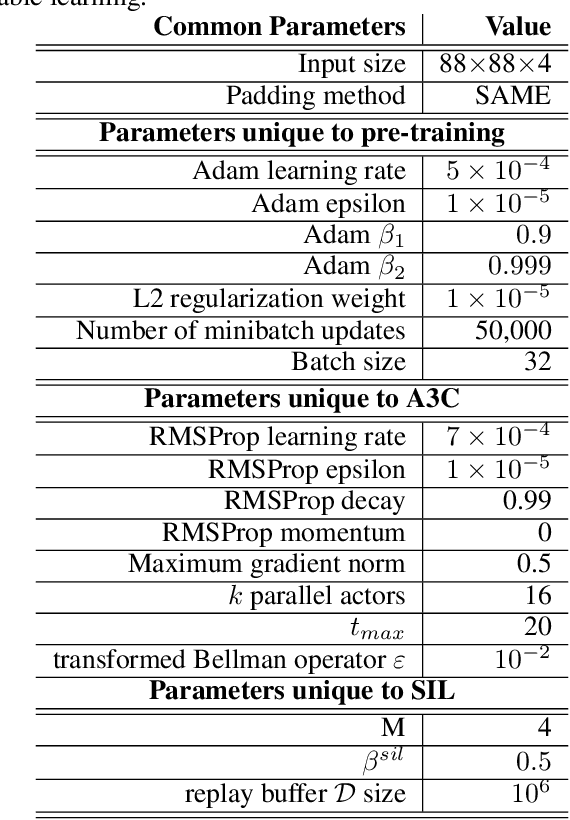

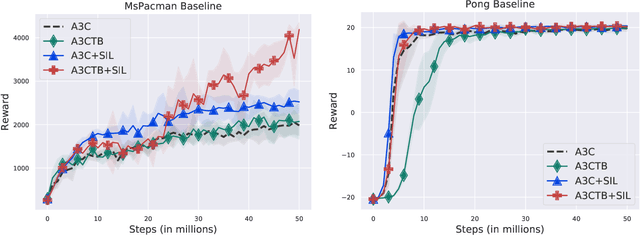

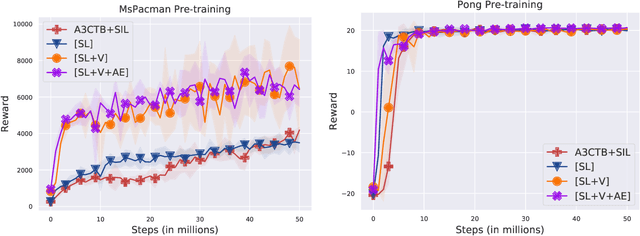

Apr 03, 2019 · Gabriel V. de la Cruz Jr., Yunshu Du, Matthew E. Taylor

Deep Reinforcement Learning (DRL) algorithms are known to be data inefficient. One reason is that a DRL agent learns both the features and the policy tabula rasa. Integrating prior knowledge into DRL algorithms is one way to improve learning efficiency since it helps to build helpful representations. In this work, we consider incorporating human knowledge to accelerate the asynchronous advantage actor-critic (A3C) algorithm by pre-training on a small amount of non-expert human demonstrations. We leverage the supervised autoencoder framework and propose a novel pre-training strategy that jointly trains a weighted supervised classification loss, an unsupervised reconstruction loss, and an expected return loss. The resulting pre-trained model learns more useful features compared to independently training in supervised or unsupervised fashion. Our pre-training method drastically improved the learning performance of the A3C agent in the Atari games of Pong and MsPacman, exceeding the performance of the state-of-the-art algorithms at a much smaller number of game interactions. Our method is lightweight and easy to implement on a single machine. For reproducibility, our code is available at github.com/gabrieledcjr/DeepRL/tree/A3C-ALA2019
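As a rough illustration of what "jointly training" the three losses could look like, the sketch below sums a classification term, a reconstruction term, and a return term for a single demonstration sample. It is not taken from the authors' code: the function names, the per-term weights `w_cls`, `w_rec`, `w_val`, and the choice of cross-entropy and squared error for the individual terms are all assumptions made for illustration.

```python
import math


def cross_entropy(action_probs, label):
    """Negative log-probability of the demonstrated action (clipped for stability)."""
    return -math.log(max(action_probs[label], 1e-12))


def mean_squared_error(predicted, target):
    """Average squared difference between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


def joint_pretraining_loss(action_probs, human_action,
                           reconstruction, observation,
                           predicted_return, observed_return,
                           w_cls=1.0, w_rec=1.0, w_val=1.0):
    """Weighted sum of three pre-training terms (hypothetical weighting scheme).

    - classification: predict the human's action from the observation
    - reconstruction: autoencoder output versus the input observation
    - return: predicted value versus the return observed in the demonstration
    """
    classification = cross_entropy(action_probs, human_action)
    reconstruction_error = mean_squared_error(reconstruction, observation)
    return_error = (predicted_return - observed_return) ** 2
    return (w_cls * classification
            + w_rec * reconstruction_error
            + w_val * return_error)
```

In a real network the three terms would share one encoder, so the gradient of this single scalar shapes the same features from all three signals.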

Pre-training with Non-expert Human Demonstration for Deep Reinforcement Learning

Dec 21, 2018 · Gabriel V. de la Cruz, Yunshu Du, Matthew E. Taylor

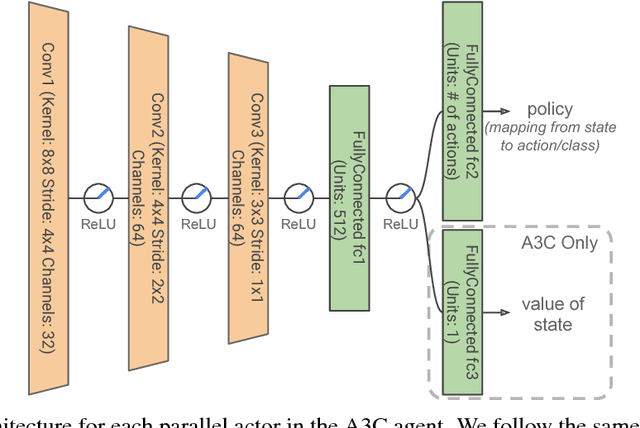

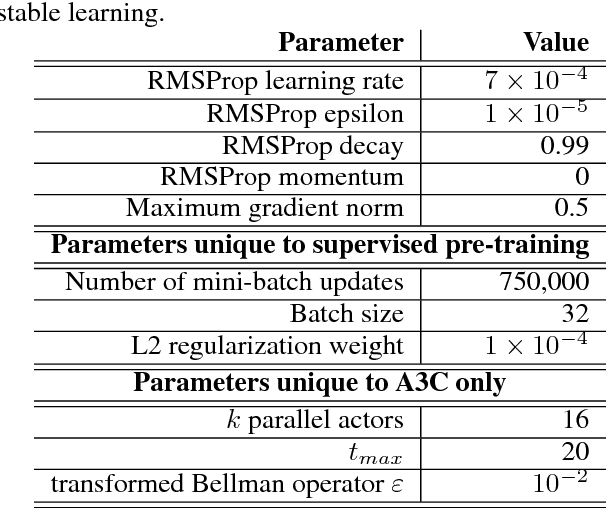

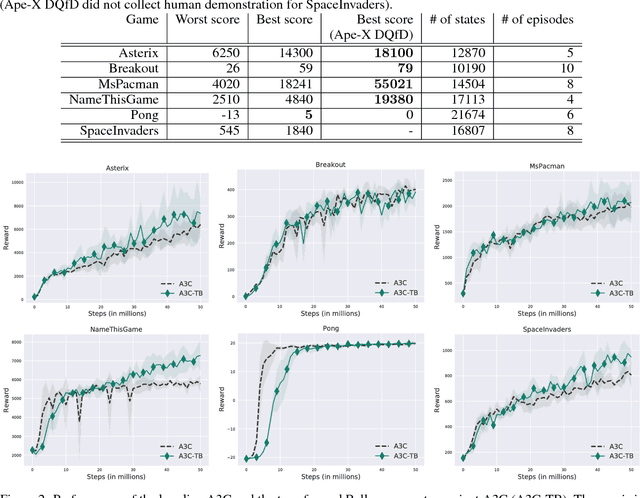

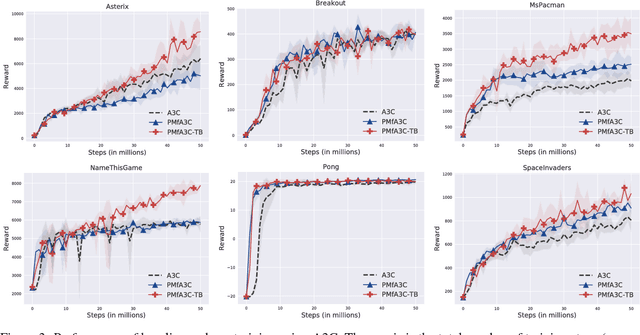

Deep reinforcement learning (deep RL) has achieved superior performance in complex sequential tasks by using deep neural networks as function approximators to learn directly from raw input images. However, learning directly from raw images is data inefficient. The agent must learn a feature representation of complex states in addition to learning a policy. As a result, deep RL typically suffers from slow learning speeds and often requires a prohibitively large amount of training time and data to reach reasonable performance, making it inapplicable to real-world settings where data is expensive. In this work, we improve data efficiency in deep RL by addressing one of the two learning goals, feature learning. We leverage supervised learning to pre-train on a small set of non-expert human demonstrations and empirically evaluate our approach using the asynchronous advantage actor-critic algorithm (A3C) in the Atari domain. Our results show significant improvements in learning speed, even when the provided demonstration is noisy and of low quality.

Using Monte Carlo Tree Search as a Demonstrator within Asynchronous Deep RL

Nov 30, 2018 · Bilal Kartal, Pablo Hernandez-Leal, Matthew E. Taylor

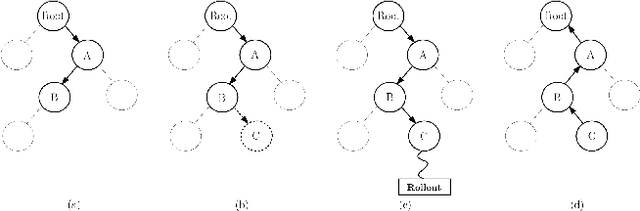

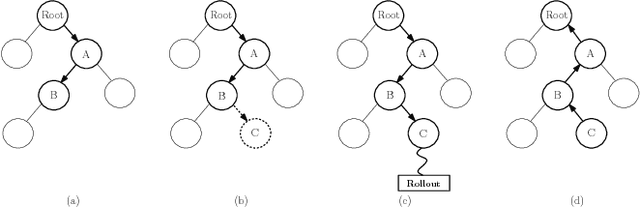

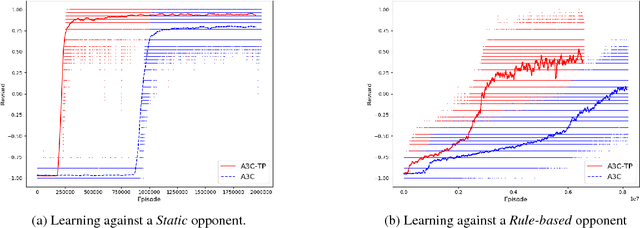

Deep reinforcement learning (DRL) has achieved great successes in recent years with the help of novel methods and higher compute power. However, there are still several challenges to be addressed, such as convergence to locally optimal policies and long training times. In this paper, firstly, we augment the Asynchronous Advantage Actor-Critic (A3C) method with a novel self-supervised auxiliary task, Terminal Prediction, which measures temporal closeness to terminal states; we call the resulting method A3C-TP. Secondly, we propose a new framework where planning algorithms such as Monte Carlo tree search or other sources of (simulated) demonstrators can be integrated into asynchronous distributed DRL methods. Compared to vanilla A3C, our proposed methods both learn faster and converge to better policies on a two-player mini version of the Pommerman game.
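To make the idea of a self-supervised "temporal closeness" target concrete, here is a minimal sketch. The abstract does not state how the target is defined, so the linear ramp used here (step index divided by episode length) and the squared-error loss are assumptions for illustration, and both function names are hypothetical.

```python
def terminal_prediction_targets(episode_length):
    """One target per time step, rising linearly and reaching 1.0 at the final step.

    The labels need no human annotation: they are computed from the
    episode length once the episode has finished.
    """
    if episode_length <= 0:
        raise ValueError("episode_length must be positive")
    return [(t + 1) / episode_length for t in range(episode_length)]


def terminal_prediction_loss(predictions, targets):
    """Mean squared error between predicted and actual closeness to the terminal state."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    return sum((p - y) ** 2 for p, y in zip(predictions, targets)) / len(targets)
```

In an actor-critic setup, a loss of this kind would typically be added, with a small weight, to the usual policy and value losses so that all three share the same feature layers.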

Autonomous Extraction of a Hierarchical Structure of Tasks in Reinforcement Learning, A Sequential Associate Rule Mining Approach

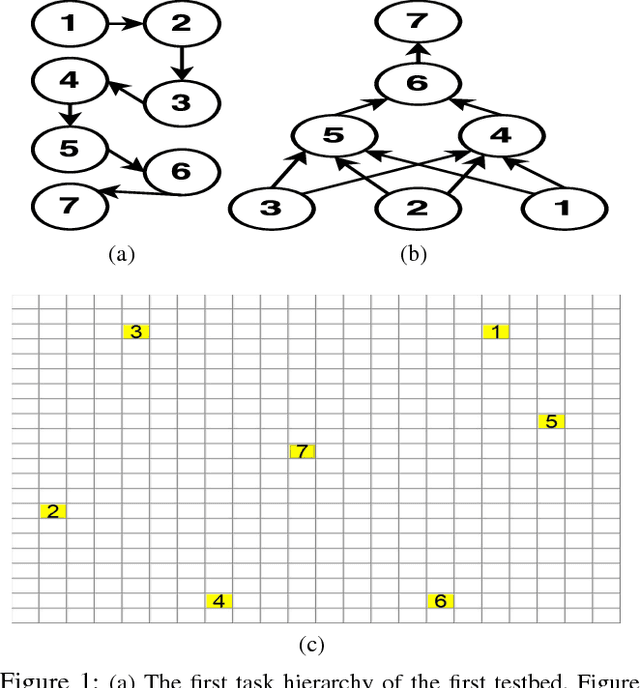

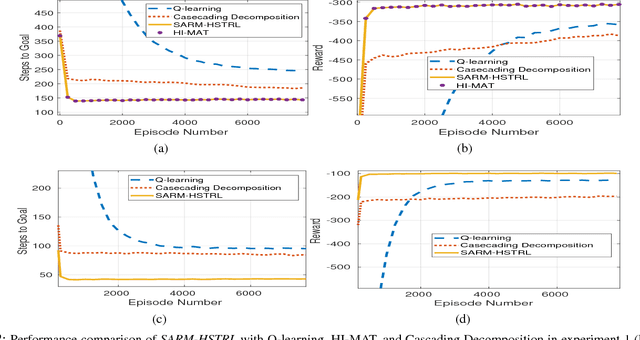

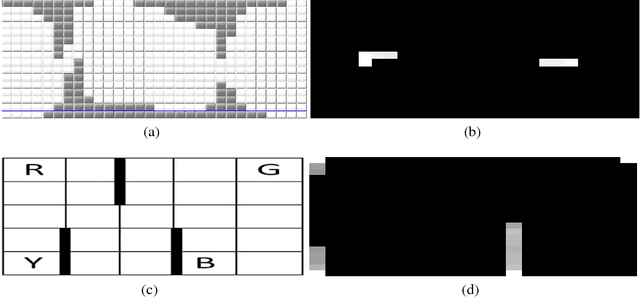

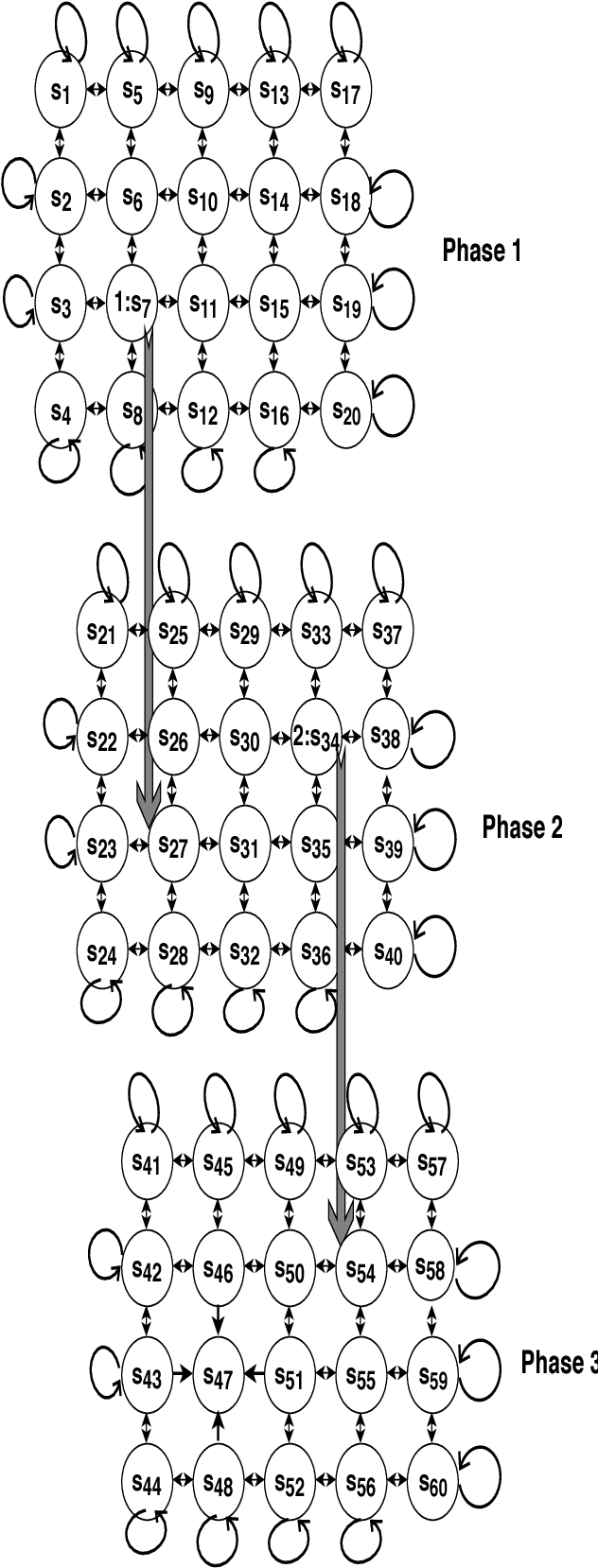

Nov 17, 2018 · Behzad Ghazanfari, Fatemeh Afghah, Matthew E. Taylor

Reinforcement learning (RL) techniques, while often powerful, can suffer from slow learning speeds, particularly in high-dimensional spaces. Decomposition of tasks into a hierarchical structure holds the potential to significantly speed up learning, generalization, and transfer learning. However, current task decomposition techniques often rely on high-level knowledge provided by an expert (e.g., using dynamic Bayesian networks) to extract a hierarchical task structure, which is not necessarily available in autonomous systems. In this paper, we propose a novel method based on Sequential Association Rule Mining that can extract a Hierarchical Structure of Tasks in Reinforcement Learning (SARM-HSTRL) in an autonomous manner for both Markov decision processes (MDPs) and factored MDPs. The proposed method leverages association rule mining to discover the causal and temporal relationships among states in different trajectories, and extracts a task hierarchy that captures these relationships among sub-goals as termination conditions of different sub-tasks. We prove that the extracted hierarchical policy offers a hierarchically optimal policy in MDPs and factored MDPs. It should be noted that SARM-HSTRL extracts this hierarchically optimal policy without having dynamic Bayesian networks in scenarios with a single task trajectory and also with multiple tasks' trajectories. Furthermore, it has been theoretically and empirically shown that the extracted hierarchical task structure is consistent with trajectories and provides the most efficient, reliable, and compact structure under appropriate assumptions. The numerical results compare the performance of the proposed SARM-HSTRL method with conventional HRL algorithms in terms of the accuracy in detecting the sub-goals, the validity of the extracted hierarchies, and the speed of learning in several testbeds.

Is multiagent deep reinforcement learning the answer or the question? A brief survey

Oct 12, 2018 · Pablo Hernandez-Leal, Bilal Kartal, Matthew E. Taylor

Deep reinforcement learning (DRL) has achieved outstanding results in recent years. This has led to a dramatic increase in the number of applications and methods. Recent works have explored learning beyond single-agent scenarios and have considered multiagent scenarios. Initial results report successes in complex multiagent domains, although there are several challenges to be addressed. In this context, first, this article provides a clear overview of current multiagent deep reinforcement learning (MDRL) literature. Second, it provides guidelines to complement this emerging area by (i) showcasing examples of how methods and algorithms from DRL and multiagent learning (MAL) have helped solve problems in MDRL and (ii) providing general lessons learned from these works. We expect this article will help unify and motivate future research to take advantage of the abundant literature that exists in both areas (DRL and MAL) in a joint effort to promote fruitful research in the multiagent community.

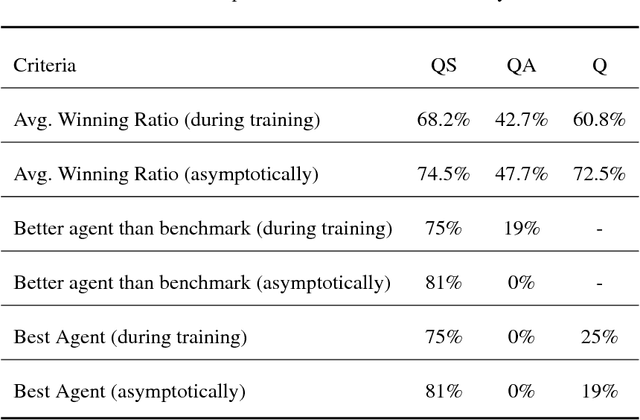

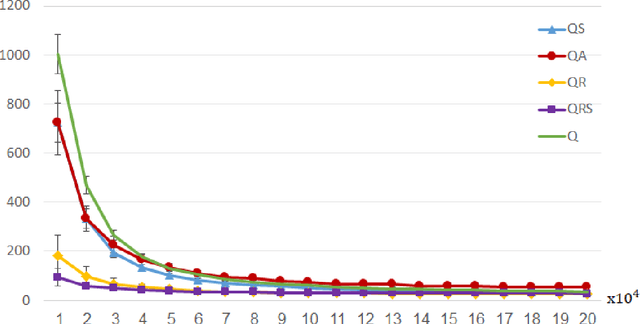

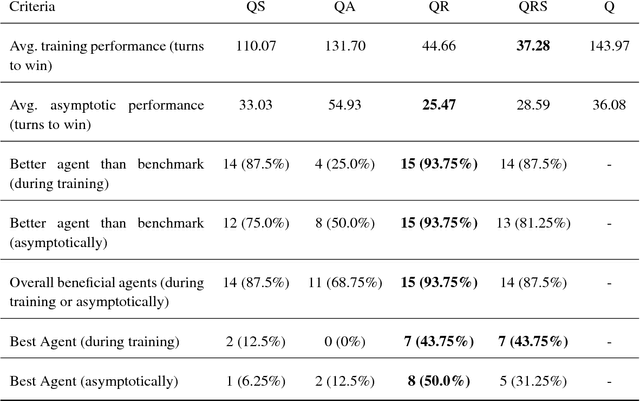

Leveraging human knowledge in tabular reinforcement learning: A study of human subjects

May 15, 2018 · Ariel Rosenfeld, Moshe Cohen, Matthew E. Taylor, Sarit Kraus

Reinforcement Learning (RL) can be extremely effective in solving complex, real-world problems. However, injecting human knowledge into an RL agent may require extensive effort and expertise on the human designer's part. To date, human factors are generally not considered in the development and evaluation of possible RL approaches. In this article, we set out to investigate how different methods for injecting human knowledge are applied, in practice, by human designers of varying levels of knowledge and skill. We perform the first empirical evaluation of several methods, including a newly proposed method named SASS which is based on the notion of similarities in the agent's state-action space. Through this human study, consisting of 51 human participants, we shed new light on the human factors that play a key role in RL. We find that the classical reward shaping technique seems to be the most natural method for most designers, both expert and non-expert, to speed up RL. However, we further find that our proposed method SASS can be effectively and efficiently combined with reward shaping, and provides a beneficial alternative to using only a single speedup method with minimal human designer effort overhead.
