Revisiting Sample Selection Approach to Positive-Unlabeled Learning: Turning Unlabeled Data into Positive rather than Negative

Jan 29, 2019 · Miao Xu, Bingcong Li, Gang Niu, Bo Han, Masashi Sugiyama

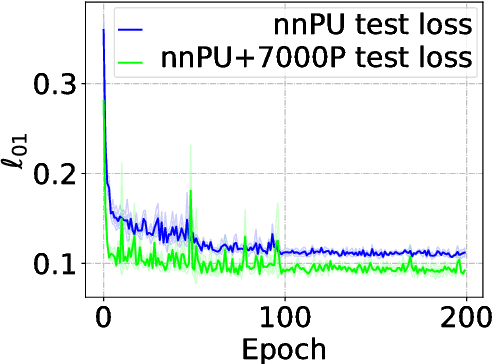

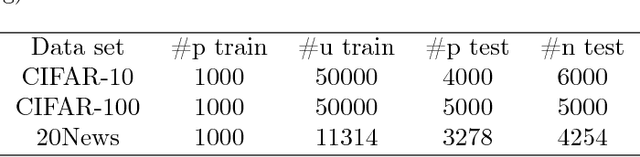

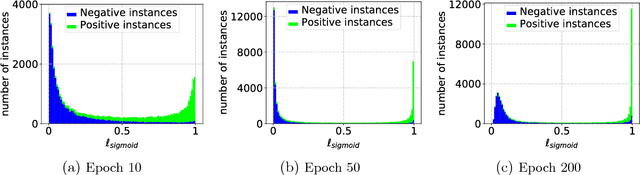

In the early history of positive-unlabeled (PU) learning, the sample selection approach, which heuristically selects negative (N) data from unlabeled (U) data, was explored extensively. However, this approach was later dominated by the importance reweighting approach, which carefully treats all U data as N data. Can a new sample selection method outperform the latest importance reweighting method in the deep learning age? This paper answers this question affirmatively: we propose to label large-loss U data as positive (P), based on the memorization properties of deep networks. Since P data selected in this way are biased, we develop a novel learning objective that can handle such biased P data properly. Experiments confirm the superiority of the proposed method.

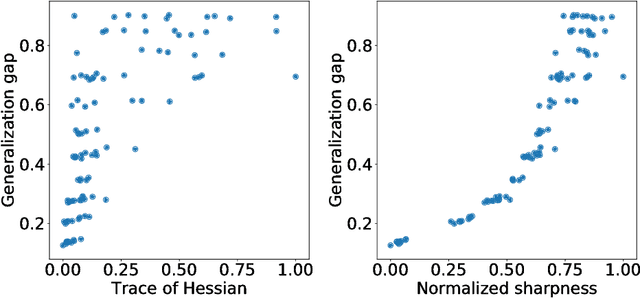

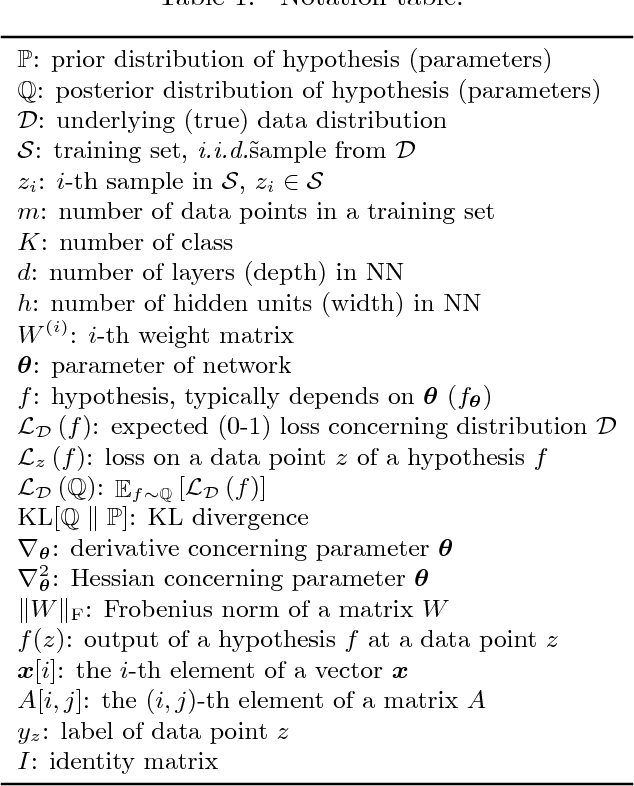

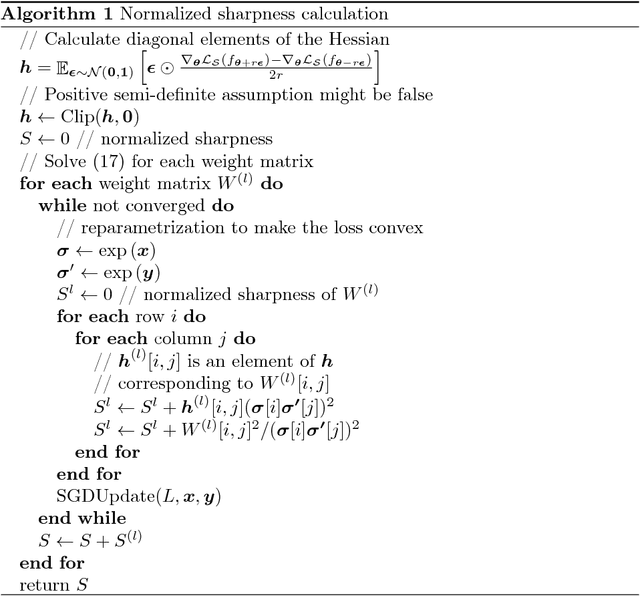

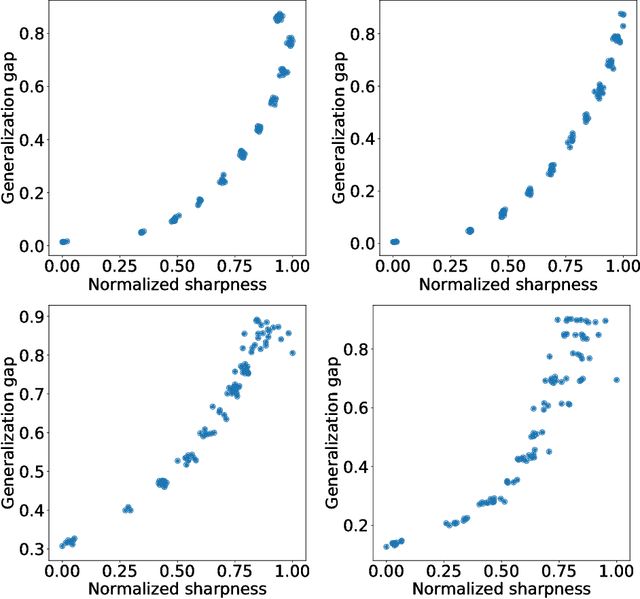

Normalized Flat Minima: Exploring Scale Invariant Definition of Flat Minima for Neural Networks using PAC-Bayesian Analysis

Jan 28, 2019 · Yusuke Tsuzuku, Issei Sato, Masashi Sugiyama

The notion of flat minima has played a key role in the generalization studies of deep learning models. However, existing definitions of flatness are known to be sensitive to the rescaling of parameters. This issue suggests that the previous definitions of flatness might not be a good measure of generalization, because generalization is invariant to such rescalings. In this paper, from the PAC-Bayesian perspective, we scrutinize the discussion concerning flat minima and introduce the notion of normalized flat minima, which is free from the known scale dependence issues. Additionally, we highlight that existing matrix-norm based generalization error bounds suffer from a scale dependence similar to that of the existing flat minima definitions. Our modified notion of flatness does not suffer from this insufficiency either, suggesting it might provide a better hierarchy in the hypothesis class.
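The scale sensitivity mentioned above can be illustrated with a toy example. The sketch below is not taken from the paper: it assumes a hypothetical one-hidden-unit ReLU model `f(x) = w2 * relu(w1 * x)` with squared loss, and measures curvature along `w1` by finite differences. Rescaling `(w1, w2)` to `(a * w1, w2 / a)` leaves the function unchanged while the curvature changes by a factor of `1 / a**2`.

```python
def relu(v: float) -> float:
    return max(0.0, v)


def model(w1: float, w2: float, x: float) -> float:
    # Hypothetical toy network: one hidden ReLU unit, no biases.
    return w2 * relu(w1 * x)


def loss(w1: float, w2: float, x: float = 1.0, y: float = 1.0) -> float:
    # Squared loss on a single made-up training point (x, y).
    return (model(w1, w2, x) - y) ** 2


def curvature_w1(w1: float, w2: float, h: float = 1e-3) -> float:
    # Central finite-difference estimate of the second derivative in w1.
    return (loss(w1 + h, w2) - 2.0 * loss(w1, w2) + loss(w1 - h, w2)) / (h * h)


w1, w2, a = 1.0, 1.0, 10.0
sharp = curvature_w1(w1, w2)             # curvature at the original parameters
flat = curvature_w1(a * w1, w2 / a)      # curvature after rescaling by a

print("same output:", model(w1, w2, 0.7), model(a * w1, w2 / a, 0.7))
print("curvature before/after rescaling:", sharp, flat)
```

Both parameter settings represent the same predictor, so a flatness measure that distinguishes them cannot by itself explain generalization; this is the kind of dependence a normalized definition aims to remove.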

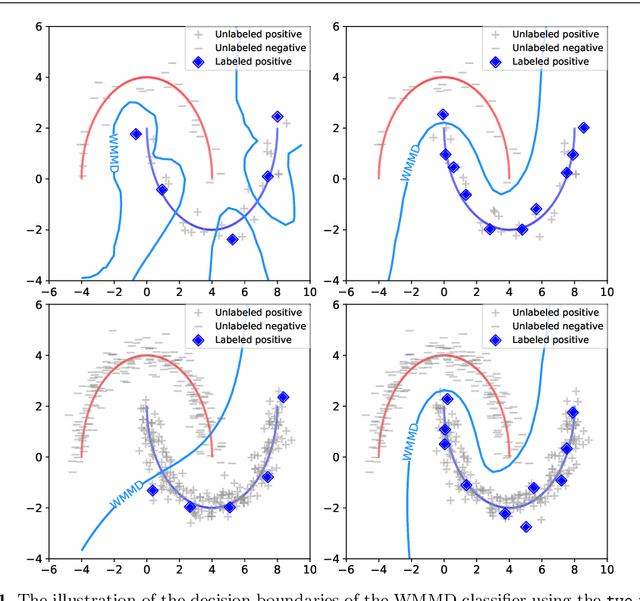

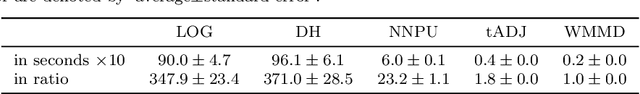

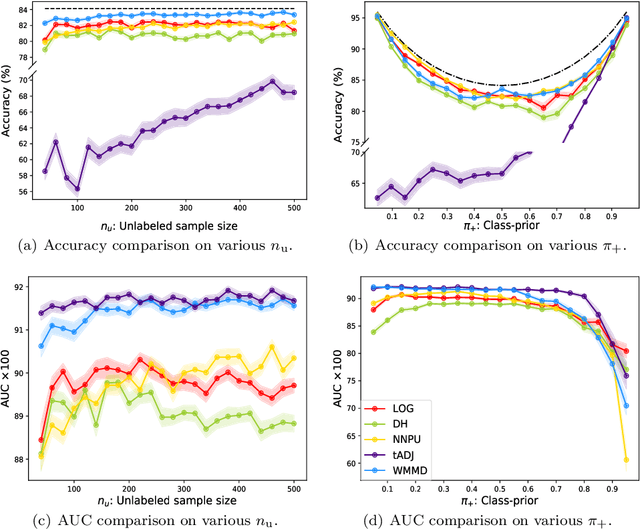

An analytic formulation for positive-unlabeled learning via weighted integral probability metric

Jan 28, 2019 · Yongchan Kwon, Wonyoung Kim, Masashi Sugiyama, Myunghee Cho Paik

We consider the problem of learning a binary classifier from only positive and unlabeled observations (PU learning). Although recent research in PU learning has succeeded in showing theoretical and empirical performance, most existing algorithms need to solve either a convex or a non-convex optimization problem and thus are not suitable for large-scale datasets. In this paper, we propose a simple yet theoretically grounded PU learning algorithm by extending the previous work proposed for supervised binary classification (Sriperumbudur et al., 2012). The proposed PU learning algorithm produces a closed-form classifier when the hypothesis space is a closed ball in reproducing kernel Hilbert space. In addition, we establish upper bounds of the estimation error and the excess risk. The obtained estimation error bound is sharper than existing results and the excess risk bound does not rely on an approximation error term. To the best of our knowledge, we are the first to explicitly derive the excess risk bound in the field of PU learning. Finally, we conduct extensive numerical experiments using both synthetic and real datasets, demonstrating improved accuracy, scalability, and robustness of the proposed algorithm.

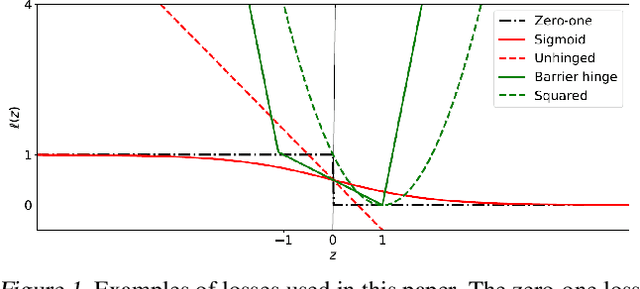

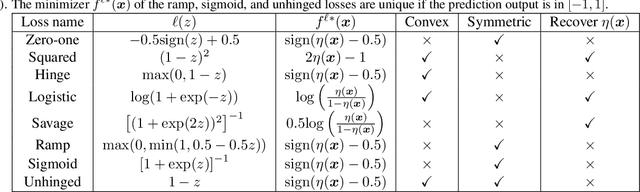

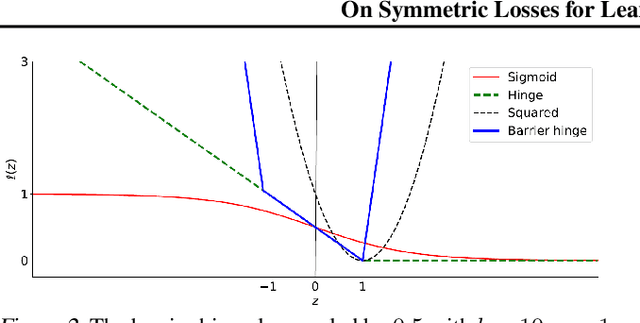

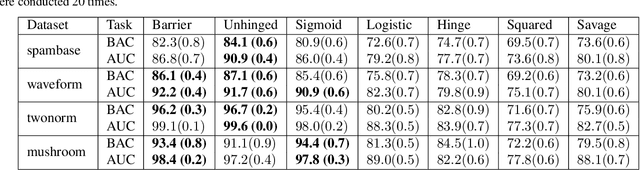

On Symmetric Losses for Learning from Corrupted Labels

Jan 27, 2019 · Nontawat Charoenphakdee, Jongyeong Lee, Masashi Sugiyama

This paper aims to provide a better understanding of a symmetric loss. First, we show that using a symmetric loss is advantageous in the balanced error rate (BER) minimization and area under the receiver operating characteristic curve (AUC) maximization from corrupted labels. Second, we prove general theoretical properties of symmetric losses, including a classification-calibration condition, excess risk bound, conditional risk minimizer, and AUC-consistency condition. Third, since all nonnegative symmetric losses are non-convex, we propose a convex barrier hinge loss that benefits significantly from the symmetric condition, although it is not symmetric everywhere. Finally, we conduct experiments on BER and AUC optimization from corrupted labels to validate the relevance of the symmetric condition.
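As a small illustration of the symmetric condition, a margin loss `l` is called symmetric when `l(z) + l(-z)` is the same constant for every margin `z`. The sketch below checks this numerically for the sigmoid loss, which satisfies the condition, and for the hinge loss, which does not. The helper name `symmetry_gap` and the grid of margins are assumptions made for this example only.

```python
import math
from typing import Callable, Sequence


def sigmoid_loss(z: float) -> float:
    # l(z) = 1 / (1 + exp(z)); l(z) + l(-z) = 1 for every z.
    return 1.0 / (1.0 + math.exp(z))


def hinge_loss(z: float) -> float:
    # Convex, but l(z) + l(-z) grows with |z| once |z| > 1.
    return max(0.0, 1.0 - z)


def symmetry_gap(loss: Callable[[float], float], margins: Sequence[float]) -> float:
    # Spread of l(z) + l(-z) over the given margins; 0 means symmetric there.
    sums = [loss(z) + loss(-z) for z in margins]
    return max(sums) - min(sums)


margins = [-3.0, -1.0, -0.5, 0.0, 0.5, 1.0, 3.0]
print("sigmoid gap:", symmetry_gap(sigmoid_loss, margins))
print("hinge gap:  ", symmetry_gap(hinge_loss, margins))
```

The check is only evaluated on a finite grid, so a zero gap here is evidence rather than proof; for the sigmoid loss the identity holds analytically.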

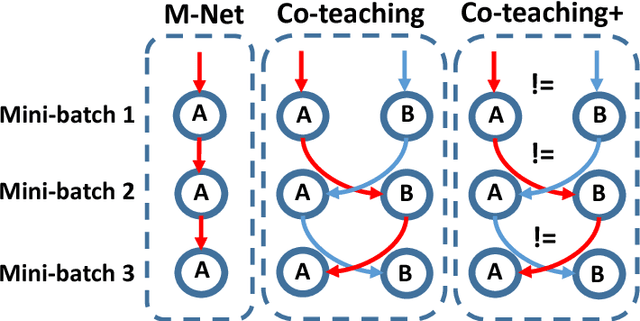

How does Disagreement Help Generalization against Label Corruption?

Jan 26, 2019 · Xingrui Yu, Bo Han, Jiangchao Yao, Gang Niu, Ivor W. Tsang, Masashi Sugiyama

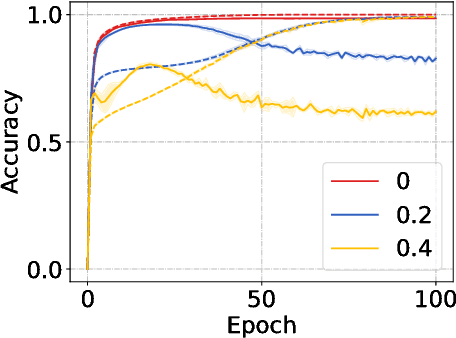

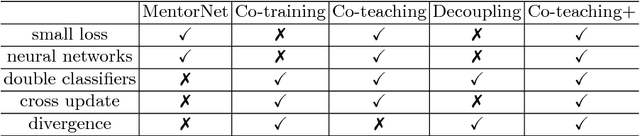

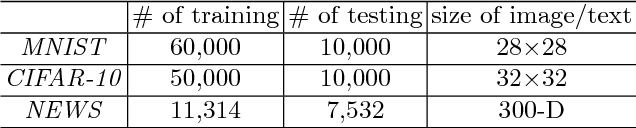

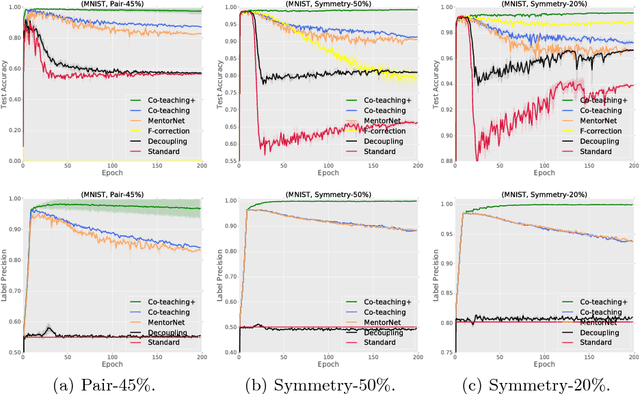

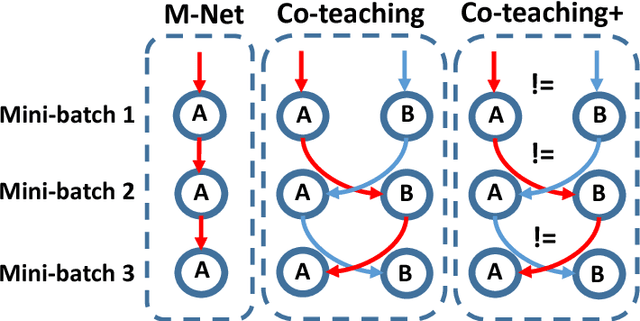

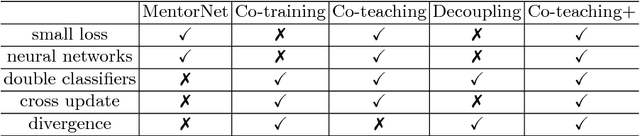

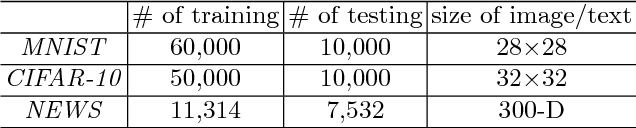

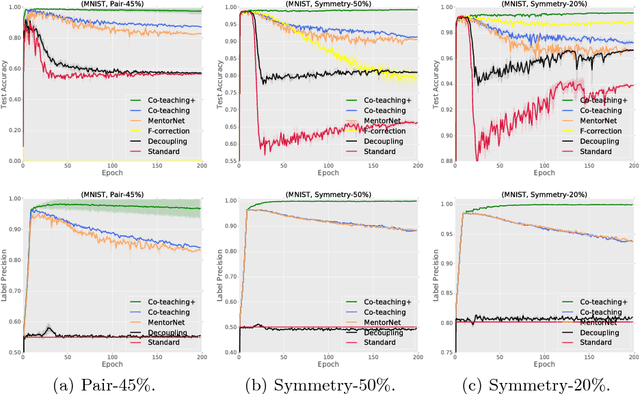

Learning with noisy labels is one of the hottest problems in weakly-supervised learning. Based on the memorization effects of deep neural networks, training on small-loss instances has become very promising for handling noisy labels. This fostered the state-of-the-art approach "Co-teaching", which cross-trains two deep neural networks using the small-loss trick. However, as the number of epochs increases, the two networks converge to a consensus and Co-teaching reduces to the self-training MentorNet. To tackle this issue, we propose a robust learning paradigm called Co-teaching+, which bridges the "Update by Disagreement" strategy with the original Co-teaching. First, the two networks feed forward and predict all data, but keep only the data on which their predictions disagree. Then, among such disagreement data, each network selects its small-loss data, but back-propagates the small-loss data selected by its peer network and updates its own parameters. Empirical results on benchmark datasets demonstrate that Co-teaching+ is much superior to many state-of-the-art methods in the robustness of trained models.
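The two steps described above can be sketched for a single mini-batch as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `coteaching_plus_select`, the `keep_ratio` parameter, and the choice to return empty selections when the networks never disagree are all hypothetical, and the per-sample predictions and losses are taken as given rather than computed by real networks.

```python
from typing import List, Sequence, Tuple


def coteaching_plus_select(
    preds1: Sequence[int],
    preds2: Sequence[int],
    losses1: Sequence[float],
    losses2: Sequence[float],
    keep_ratio: float,
) -> Tuple[List[int], List[int]]:
    """Return (indices to update network 1 on, indices to update network 2 on)."""
    # Disagreement step: keep only samples where the two predictions differ.
    disagree = [i for i, (p, q) in enumerate(zip(preds1, preds2)) if p != q]
    if not disagree:
        return [], []  # assumption: this sketch skips the batch entirely

    # Each network ranks the disagreement data by its own per-sample loss
    # and keeps the smallest-loss fraction.
    num_keep = max(1, int(keep_ratio * len(disagree)))
    small1 = sorted(disagree, key=lambda i: losses1[i])[:num_keep]
    small2 = sorted(disagree, key=lambda i: losses2[i])[:num_keep]

    # Cross-update step: each network trains on its peer's selection.
    return small2, small1


preds1 = [0, 1, 1, 0, 2, 2]
preds2 = [0, 0, 1, 1, 2, 0]
losses1 = [0.1, 0.9, 0.2, 0.3, 0.1, 1.5]
losses2 = [0.2, 0.4, 0.1, 1.2, 0.3, 0.6]

update1, update2 = coteaching_plus_select(preds1, preds2, losses1, losses2, 0.7)
print("network 1 is updated on:", update1)
print("network 2 is updated on:", update2)
```

In a real training loop the returned indices would select the samples whose loss is back-propagated through each network, and `keep_ratio` would typically follow a schedule over epochs.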

How Does Disagreement Benefit Co-teaching?

Jan 14, 2019 · Xingrui Yu, Bo Han, Jiangchao Yao, Gang Niu, Ivor W. Tsang, Masashi Sugiyama

Learning with noisy labels is one of the most important questions in the weakly-supervised learning domain. Classical approaches focus on adding regularization or estimating the noise transition matrix. However, either a regularization bias is permanently introduced, or the noise transition matrix is hard to estimate accurately. In this paper, following a novel path of training on small-loss samples, we propose a robust learning paradigm called Co-teaching+. This paradigm naturally bridges the "Update by Disagreement" strategy with Co-teaching, which trains two deep neural networks, and thus consists of a disagreement-update step and a cross-update step. In the disagreement-update step, the two networks first predict all data and feed forward only the data on which their predictions disagree. Then, in the cross-update step, each network selects its small-loss data from such disagreement data, but back-propagates the small-loss data selected by its peer network and updates its own parameters. Empirical results on noisy versions of MNIST, CIFAR-10 and NEWS demonstrate that Co-teaching+ is much superior to the state-of-the-art methods in the robustness of trained deep models.

Hierarchical Reinforcement Learning via Advantage-Weighted Information Maximization

Jan 05, 2019 · Takayuki Osa, Voot Tangkaratt, Masashi Sugiyama

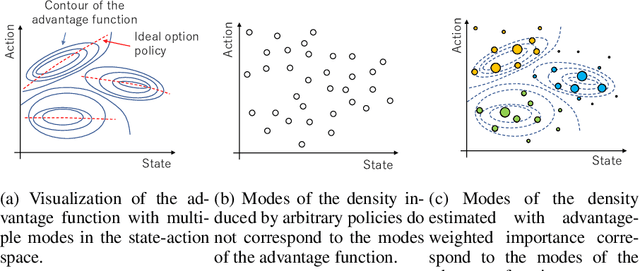

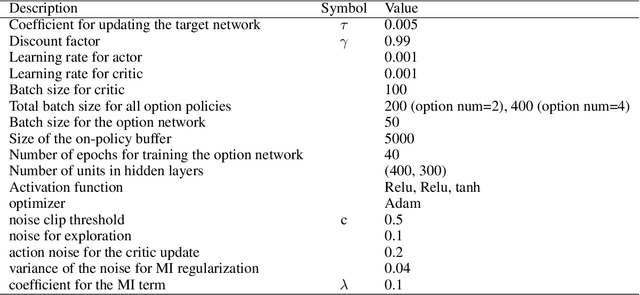

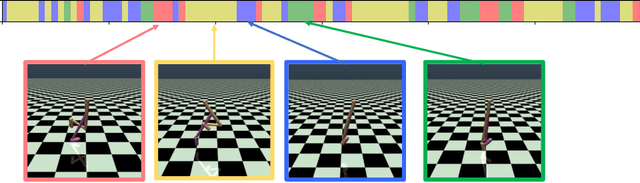

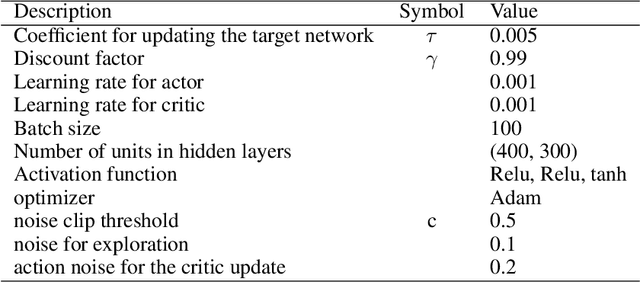

Real-world tasks are often highly structured. Hierarchical reinforcement learning (HRL) has attracted research interest as an approach for leveraging the hierarchical structure of a given task in reinforcement learning (RL). However, identifying the hierarchical policy structure that enhances the performance of RL is not a trivial task. In this paper, we propose an HRL method that learns a latent variable of a hierarchical policy using mutual information maximization. Our approach can be interpreted as a way to learn a discrete and latent representation of the state-action space. To learn option policies that correspond to modes of the advantage function, we introduce advantage-weighted importance sampling. In our HRL method, the gating policy learns to select option policies based on an option-value function, and these option policies are optimized based on the deterministic policy gradient method. This framework is derived by leveraging the analogy between a monolithic policy in standard RL and a hierarchical policy in HRL by using a deterministic option policy. Experimental results indicate that our HRL approach can learn a diversity of options and that it can enhance the performance of RL in continuous control tasks.

Active Deep Q-learning with Demonstration

Dec 06, 2018 · Si-An Chen, Voot Tangkaratt, Hsuan-Tien Lin, Masashi Sugiyama

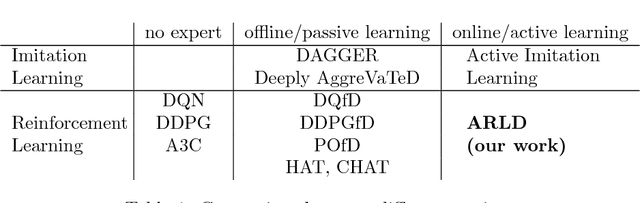

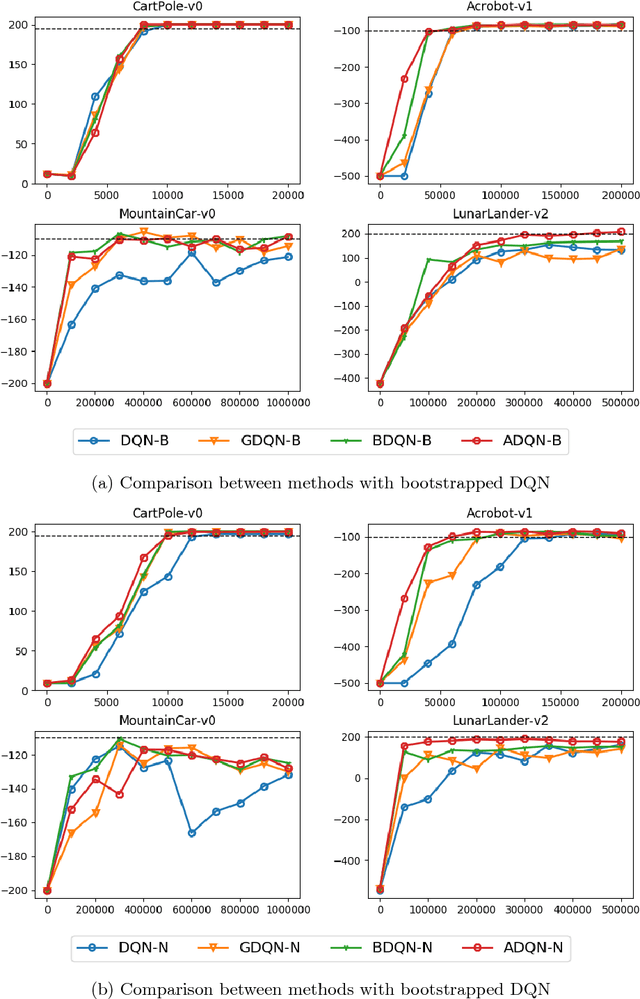

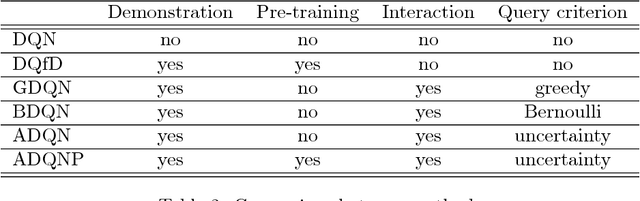

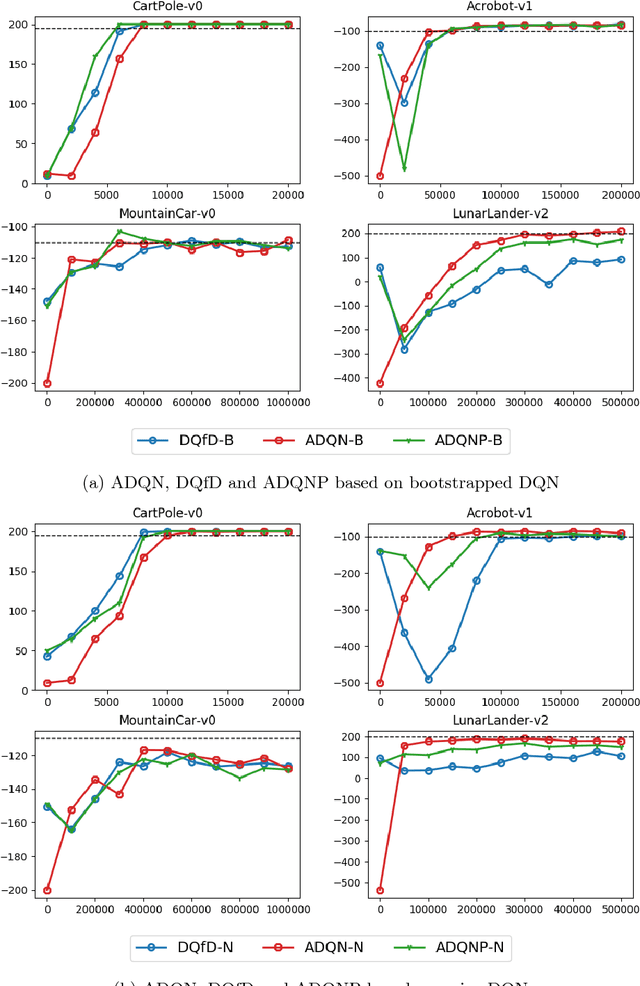

Recent research has shown that although Reinforcement Learning (RL) can benefit from expert demonstration, it usually takes considerable effort to obtain enough demonstration. This effort prevents training decent RL agents with expert demonstration in practice. In this work, we propose Active Reinforcement Learning with Demonstration (ARLD), a new framework to streamline RL in terms of demonstration effort by allowing the RL agent to query for demonstration actively during training. Under the framework, we propose Active Deep Q-Network (Active DQN), a novel query strategy which adapts to the dynamically-changing distributions during the RL training process by estimating the uncertainty of recent states. The expert demonstration data within Active DQN are then utilized by optimizing a supervised max-margin loss in addition to the temporal difference loss within usual DQN training. We propose two methods of estimating the uncertainty based on two state-of-the-art DQN models, namely the divergence of bootstrapped DQN and the variance of noisy DQN. The empirical results validate that both methods not only learn faster than other passive expert demonstration methods with the same amount of demonstration, but also reach a super-expert level of performance across four different tasks.
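To make the combination of a supervised max-margin loss with the temporal difference loss concrete, the sketch below uses the large-margin form common in learning from demonstration: the best margin-augmented Q-value over all actions minus the Q-value of the expert action. It is an illustration under assumptions, not the paper's exact objective: the margin value `0.8`, the weight `lam`, the one-step TD target, and all function names are hypothetical, and the query strategy itself is not modeled here.

```python
from typing import Sequence


def max_margin_loss(q_values: Sequence[float], expert_action: int,
                    margin: float = 0.8) -> float:
    # Non-expert actions receive a margin bonus, so the loss is zero only when
    # the expert action beats every other action by at least `margin`.
    augmented = [q + (0.0 if a == expert_action else margin)
                 for a, q in enumerate(q_values)]
    return max(augmented) - q_values[expert_action]


def td_loss(q_sa: float, reward: float, next_q_values: Sequence[float],
            gamma: float = 0.99, done: bool = False) -> float:
    # Squared one-step temporal difference error.
    target = reward if done else reward + gamma * max(next_q_values)
    return (target - q_sa) ** 2


def demonstration_loss(q_values: Sequence[float], expert_action: int,
                       reward: float, next_q_values: Sequence[float],
                       lam: float = 1.0, gamma: float = 0.99) -> float:
    # On a demonstrated transition the action taken is the expert action.
    td = td_loss(q_values[expert_action], reward, next_q_values, gamma)
    return td + lam * max_margin_loss(q_values, expert_action)


q = [1.0, 2.0, 0.5]
print("margin loss, expert action 0:", max_margin_loss(q, 0))
print("margin loss, expert action 1:", max_margin_loss(q, 1))
print("combined loss:", demonstration_loss(q, 0, 1.0, [0.0, 2.0], gamma=0.5))
```

The margin term pushes the Q-value of the demonstrated action above the alternatives, while the TD term keeps the Q-values consistent with observed rewards.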

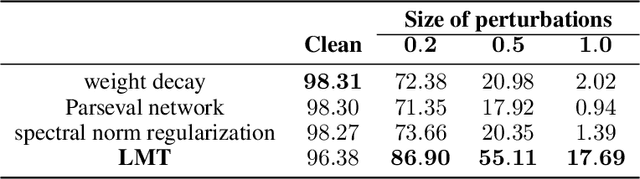

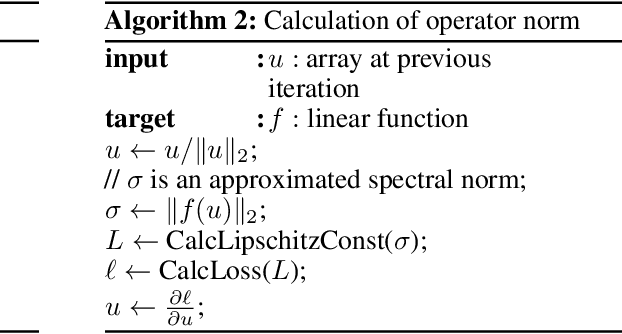

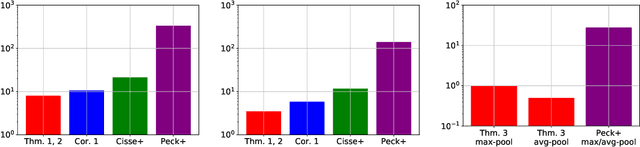

Lipschitz-Margin Training: Scalable Certification of Perturbation Invariance for Deep Neural Networks

Oct 31, 2018 · Yusuke Tsuzuku, Issei Sato, Masashi Sugiyama

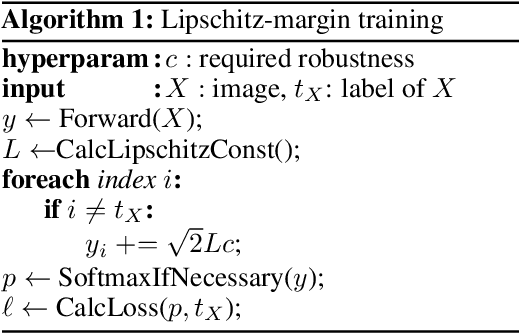

The high sensitivity of neural networks to malicious perturbations of their inputs causes security concerns. To take a steady step towards robust classifiers, we aim to create neural network models provably defended from perturbations. Prior certification work requires strong assumptions on network structures and massive computational costs, and thus its range of applications has been limited. From the relationship between the Lipschitz constants and prediction margins, we present a computationally efficient calculation technique that lower-bounds the size of adversarial perturbations that can deceive networks and that is widely applicable to various complicated networks. Moreover, we propose an efficient training procedure that robustifies networks and significantly improves the provably guarded areas around data points. In experimental evaluations, our method showed its ability to provide a non-trivial guarantee and enhance robustness even for large networks.
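The relationship between Lipschitz constants and prediction margins can be sketched as a certified-radius calculation. The example assumes the l2 setting and the commonly stated form of the bound, in which a prediction with margin `M` under a network with Lipschitz constant at most `L` cannot be changed by any perturbation of l2 norm below `M / (sqrt(2) * L)`. The function names are hypothetical, and the Lipschitz upper bound is taken as an input rather than computed from a real network.

```python
import math
from typing import Sequence


def prediction_margin(logits: Sequence[float], label: int) -> float:
    # Gap between the true-class logit and the largest other logit.
    others = [v for i, v in enumerate(logits) if i != label]
    return logits[label] - max(others)


def certified_l2_radius(logits: Sequence[float], label: int,
                        lipschitz_bound: float) -> float:
    # Assumed bound: margin / (sqrt(2) * L); zero if already misclassified.
    if lipschitz_bound <= 0.0:
        raise ValueError("lipschitz_bound must be positive")
    margin = prediction_margin(logits, label)
    return max(margin, 0.0) / (math.sqrt(2.0) * lipschitz_bound)


logits = [3.0, 1.0, 0.5]
print("margin:", prediction_margin(logits, 0))
print("certified radius:", certified_l2_radius(logits, 0, lipschitz_bound=2.0))
print("misclassified case:", certified_l2_radius(logits, 1, lipschitz_bound=2.0))
```

The guarantee is only as tight as the Lipschitz bound: a loose upper bound on `L` shrinks the certified radius, which is why training that keeps the bound small while enlarging margins can improve the provably guarded area.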
