Marcelo Becker

ZeST: an LLM-based Zero-Shot Traversability Navigation for Unknown Environments

Aug 26, 2025

Abstract:The advancement of robotics and autonomous navigation systems hinges on the ability to accurately predict terrain traversability. Traditional methods for generating datasets to train these prediction models often involve putting robots into potentially hazardous environments, posing risks to equipment and safety. To solve this problem, we present ZeST, a novel approach leveraging visual reasoning capabilities of Large Language Models (LLMs) to create a traversability map in real time without exposing robots to danger. Our approach not only performs zero-shot traversability and mitigates the risks associated with real-world data collection but also accelerates the development of advanced navigation systems, offering a cost-effective and scalable solution. To support our findings, we present navigation results in both controlled indoor and unstructured outdoor environments. As shown in the experiments, our method provides safer navigation when compared to other state-of-the-art methods, consistently reaching the final goal.

A Synthetic Dataset for Manometry Recognition in Robotic Applications

Aug 24, 2025

Abstract:This work addresses the challenges of data scarcity and high acquisition costs for training robust object detection models in complex industrial environments, such as offshore oil platforms. The practical and economic barriers to collecting real-world data in these hazardous settings often hamper the development of autonomous inspection systems. To overcome this, in this work we propose and validate a hybrid data synthesis pipeline that combines procedural rendering with AI-driven video generation. Our methodology leverages BlenderProc to create photorealistic images with precise annotations and controlled domain randomization, and integrates NVIDIA's Cosmos-Predict2 world-foundation model to synthesize physically plausible video sequences with temporal diversity, capturing rare viewpoints and adverse conditions. We demonstrate that a YOLO-based detection network trained on a composite dataset, blending real images with our synthetic data, achieves superior performance compared to models trained exclusively on real-world data. Notably, a 1:1 mixture of real and synthetic data yielded the highest accuracy, surpassing the real-only baseline. These findings highlight the viability of a synthetic-first approach as an efficient, cost-effective, and safe alternative for developing reliable perception systems in safety-critical and resource-constrained industrial applications.

Optimizing Grasping in Legged Robots: A Deep Learning Approach to Loco-Manipulation

Aug 24, 2025

Abstract:Quadruped robots have emerged as highly efficient and versatile platforms, excelling in navigating complex and unstructured terrains where traditional wheeled robots might fail. Equipping these robots with manipulator arms unlocks the advanced capability of loco-manipulation to perform complex physical interaction tasks in areas ranging from industrial automation to search-and-rescue missions. However, achieving precise and adaptable grasping in such dynamic scenarios remains a significant challenge, often hindered by the need for extensive real-world calibration and pre-programmed grasp configurations. This paper introduces a deep learning framework designed to enhance the grasping capabilities of quadrupeds equipped with arms, focusing on improved precision and adaptability. Our approach centers on a sim-to-real methodology that minimizes reliance on physical data collection. We developed a pipeline within the Genesis simulation environment to generate a synthetic dataset of grasp attempts on common objects. By simulating thousands of interactions from various perspectives, we created pixel-wise annotated grasp-quality maps to serve as the ground truth for our model. This dataset was used to train a custom CNN with a U-Net-like architecture that processes multi-modal input from onboard RGB and depth cameras, including RGB images, depth maps, segmentation masks, and surface normal maps. The trained model outputs a grasp-quality heatmap to identify the optimal grasp point. We validated the complete framework on a four-legged robot. The system successfully executed a full loco-manipulation task: autonomously navigating to a target object, perceiving it with its sensors, predicting the optimal grasp pose using our model, and performing a precise grasp. This work proves that leveraging simulated training with advanced sensing offers a scalable and effective solution for object handling.

A Vision-Based Shared-Control Teleoperation Scheme for Controlling the Robotic Arm of a Four-Legged Robot

Aug 20, 2025

Abstract:In hazardous and remote environments, robotic systems perform critical tasks demanding improved safety and efficiency. Among these, quadruped robots with manipulator arms offer mobility and versatility for complex operations. However, teleoperating quadruped robots is challenging due to the lack of integrated obstacle detection and intuitive control methods for the robotic arm, increasing collision risks in confined or dynamically changing workspaces. Teleoperation via joysticks or pads can be non-intuitive and demands a high level of expertise due to its complexity, culminating in a high cognitive load on the operator. To address this challenge, a teleoperation approach that directly maps human arm movements to the robotic manipulator offers a simpler and more accessible solution. This work proposes an intuitive remote control by leveraging a vision-based pose estimation pipeline that utilizes an external camera with a machine learning-based model to detect the operator's wrist position. The system maps these wrist movements into robotic arm commands to control the robot's arm in real-time. A trajectory planner ensures safe teleoperation by detecting and preventing collisions with both obstacles and the robotic arm itself. The system was validated on the real robot, demonstrating robust performance in real-time control. This teleoperation approach provides a cost-effective solution for industrial applications where safety, precision, and ease of use are paramount, ensuring reliable and intuitive robotic control in high-risk environments.

The Impact of Feature Scaling In Machine Learning: Effects on Regression and Classification Tasks

Jun 11, 2025

Abstract:This research addresses the critical lack of comprehensive studies on feature scaling by systematically evaluating 12 scaling techniques - including several less common transformations - across 14 different Machine Learning algorithms and 16 datasets for classification and regression tasks. We meticulously analyzed impacts on predictive performance (using metrics such as accuracy, MAE, MSE, and $R^2$) and computational costs (training time, inference time, and memory usage). Key findings reveal that while ensemble methods (such as Random Forest and gradient boosting models like XGBoost, CatBoost and LightGBM) demonstrate robust performance largely independent of scaling, other widely used models such as Logistic Regression, SVMs, TabNet, and MLPs show significant performance variations highly dependent on the chosen scaler. This extensive empirical analysis, with all source code, experimental results, and model parameters made publicly available to ensure complete transparency and reproducibility, offers crucial model-specific guidance to practitioners on the need for an optimal selection of feature scaling techniques.
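As a rough illustration of what a feature scaler does, the sketch below implements two widely used transformations, z-score standardization and min-max scaling, in plain Python. The function names and the toy `ages` and `incomes` columns are assumptions made for this example; the abstract does not say which 12 techniques were evaluated, and this is not the authors' code.

```python
from statistics import mean, pstdev


def standardize(values):
    """Z-score scaling: subtract the mean, divide by the population std."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant feature carries no spread; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def min_max(values):
    """Min-max scaling: map the smallest value to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical toy features on very different scales.
ages = [20.0, 40.0, 60.0]
incomes = [20000.0, 40000.0, 60000.0]

scaled_ages = min_max(ages)
z_ages = standardize(ages)
z_incomes = standardize(incomes)
```

After standardization the two columns become numerically comparable even though their raw ranges differ by three orders of magnitude. That is one intuition for why distance-based and gradient-based models can be sensitive to the scaler, while tree ensembles, which split on per-feature thresholds, are largely unaffected.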

A Leaf-Level Dataset for Soybean-Cotton Detection and Segmentation

Mar 03, 2025

Abstract:Soybean and cotton are major drivers of many countries' agricultural sectors, offering substantial economic returns but also facing persistent challenges from volunteer plants and weeds that hamper sustainable management. Effectively controlling volunteer plants and weeds demands advanced recognition strategies that can identify these amidst complex crop canopies. While deep learning methods have demonstrated promising results for leaf-level detection and segmentation, existing datasets often fail to capture the complexity of real-world agricultural fields. To address this, we collected 640 high-resolution images from a commercial farm spanning multiple growth stages, weed pressures, and lighting variations. Each image is annotated at the leaf-instance level, with 7,221 soybean and 5,190 cotton leaves labeled via bounding boxes and segmentation masks, capturing overlapping foliage, small leaf size, and morphological similarities. We validate this dataset using YOLOv11, demonstrating state-of-the-art performance in accurately identifying and segmenting overlapping foliage. Our publicly available dataset supports advanced applications such as selective herbicide spraying and pest monitoring and can foster more robust, data-driven strategies for soybean-cotton management.

CropNav: a Framework for Autonomous Navigation in Real Farms

Nov 17, 2024

Abstract:Small robots that can operate under the plant canopy can enable new possibilities in agriculture. However, unlike larger autonomous tractors, autonomous navigation for such under-canopy robots remains an open challenge because the Global Navigation Satellite System (GNSS) is unreliable under the plant canopy. We present a hybrid navigation system that autonomously switches between different sets of sensing modalities to enable full field navigation, both inside and outside of crop. By choosing the appropriate path reference source, the robot can accommodate loss of GNSS signal quality and leverage row-crop structure to autonomously navigate. However, such switching can be tricky and difficult to execute at scale. Our system provides a solution by automatically switching between an exteroceptive sensing based system, such as Light Detection And Ranging (LiDAR) row-following navigation, and waypoint path tracking. In addition, we show how our system can detect when navigation fails and recover automatically, extending the autonomous time and reducing the need for human intervention. Our system shows an improvement of about 750 m per intervention over GNSS-based navigation and 500 m over row-following navigation.

Breast Cancer Classification Using Gradient Boosting Algorithms Focusing on Reducing the False Negative and SHAP for Explainability

Mar 14, 2024

Abstract:Cancer is one of the diseases that kill the most women in the world, with breast cancer being responsible for the highest number of cancer cases and consequently deaths. However, it can be prevented by early detection and, consequently, early treatment. Any advance in detecting or predicting this kind of cancer is important for a healthier life. Many studies focus on a model with high accuracy in cancer prediction, but accuracy alone is not always a reliable metric. This study takes an investigative approach to studying the performance of different boosting-based machine learning algorithms for predicting breast cancer, focusing on the recall metric. Boosting machine learning algorithms have been proven to be an effective tool for detecting medical diseases. A dataset from the University of California, Irvine (UCI) repository, together with its attributes, was used to train and test the classifiers. The main objective of this study is to use state-of-the-art boosting algorithms such as AdaBoost, XGBoost, CatBoost and LightGBM to predict and diagnose breast cancer and to find the most effective one with respect to recall, ROC-AUC, and the confusion matrix. Furthermore, our study is the first to use these four boosting algorithms with Optuna, a library for hyperparameter optimization, and the SHAP method to improve the interpretability of our model, which can be used as a support to identify and predict breast cancer. We were able to improve AUC or recall for all the models and reduce the number of false negatives for AdaBoost and LightGBM; the final AUC was above 99.41% for all models.

Automatic Routing System for Intelligent Warehouses

Jul 13, 2023

Abstract:Automation of logistic processes is essential to improve productivity and reduce costs. In this context, intelligent warehouses are becoming key to logistic systems thanks to their ability to optimize transportation tasks and, consequently, reduce costs. This paper first briefly reviews routing systems applied to intelligent warehouses. Then, we present the approach used to develop our router system. This router system is able to resolve traffic jams and collisions, generating conflict-free and optimized paths before sending the final paths to the robotic forklifts. It also verifies the progress of all tasks. When a problem occurs, the router system can change the task priorities, routes, etc. in order to avoid new conflicts. In the routing simulations, each vehicle executes its tasks starting from a predefined initial pose, moving to the desired position. Our algorithm is based on Dijkstra's shortest path and time window approaches, and it was implemented in C. Computer simulation tests were used to validate the algorithm's efficiency under different working conditions. Several simulations were carried out using the Player/Stage Simulator to test the algorithms. Thanks to the simulations, we could fix many faults and refine the algorithms before embedding them in real robots.
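One common way to combine a shortest-path search with time windows is to search over (node, time step) pairs and skip any slot already reserved by another vehicle. The sketch below illustrates that idea in Python (the paper's implementation is in C). The function `plan_path`, the unit-time moves, the `reserved` set, and the three-node corridor are all assumptions made for illustration, not the authors' formulation.

```python
import heapq


def plan_path(graph, start, goal, reserved, horizon=50):
    """Earliest-arrival path that avoids reserved (node, time) slots.

    graph:    dict mapping a node to its neighbour nodes; each move takes
              one time step (an assumption of this sketch).
    reserved: set of (node, t) pairs already claimed by other vehicles.
    Returns the node occupied at each time step, or None if no path is
    found within `horizon` steps.
    """
    if (start, 0) in reserved:
        return None
    queue = [(0, start, [start])]  # (arrival time, node, path so far)
    seen = {(start, 0)}
    while queue:
        t, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if t >= horizon:
            continue
        # Waiting in place counts as a move, so a vehicle can yield.
        for nxt in [node] + graph[node]:
            state = (nxt, t + 1)
            if state in reserved or state in seen:
                continue
            seen.add(state)
            heapq.heappush(queue, (t + 1, nxt, path + [nxt]))
    return None


# Hypothetical corridor A - B - C.
corridor = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}

free_path = plan_path(corridor, "A", "C", reserved=set())
delayed_path = plan_path(corridor, "A", "C", reserved={("B", 1)})
```

With node `B` reserved at time step 1, the planner waits one step at `A` before proceeding. This sketch only checks node occupancy; a fuller router would also need to handle two vehicles swapping places along the same edge and to re-plan when priorities change.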

Visual Localization and Mapping in Dynamic and Changing Environments

Sep 21, 2022

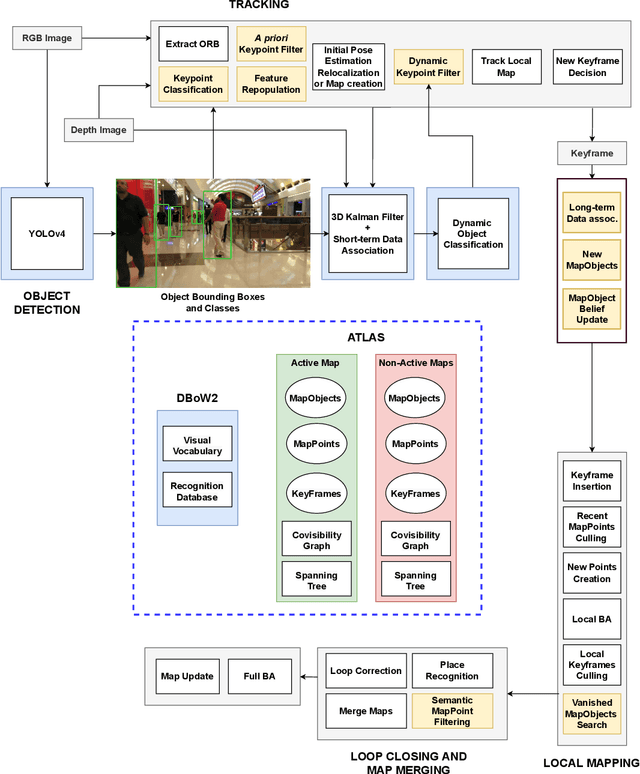

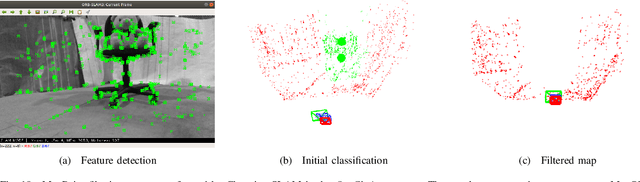

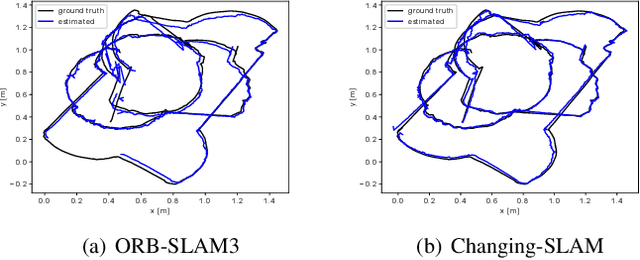

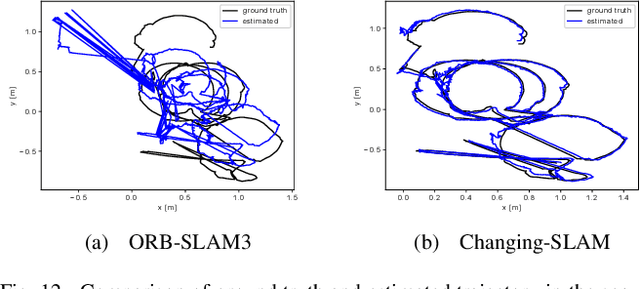

Abstract:The real-world deployment of fully autonomous mobile robots depends on a robust SLAM (Simultaneous Localization and Mapping) system, capable of handling dynamic environments, where objects are moving in front of the robot, and changing environments, where objects are moved or replaced after the robot has already mapped the scene. This paper presents Changing-SLAM, a method for robust Visual SLAM in both dynamic and changing environments. This is achieved by using a Bayesian filter combined with a long-term data association algorithm. It also employs an efficient algorithm for dynamic keypoint filtering based on object detection that correctly identifies features inside the bounding box that are not dynamic, preventing a depletion of features that could cause lost tracks. Furthermore, a new dataset, called the PUC-USP dataset, was developed with RGB-D data specially designed for the evaluation of changing environments on an object level. Six sequences were created using a mobile robot, an RGB-D camera and a motion capture system. The sequences were designed to capture different scenarios that could lead to a tracking failure or a map corruption. To the best of our knowledge, Changing-SLAM is the first Visual SLAM system that is robust to both dynamic and changing environments, not assuming a given camera pose or a known map, being also able to operate in real time. The proposed method was evaluated using benchmark datasets and compared with other state-of-the-art methods, proving to be highly accurate.
