Lonneke van der Plas

Modelling Analogies and Analogical Reasoning: Connecting Cognitive Science Theory and NLP Research

Sep 11, 2025

Abstract:Analogical reasoning is an essential aspect of human cognition. In this paper, we summarize key theory about the processes underlying analogical reasoning from the cognitive science literature and relate it to current research in natural language processing. While these processes can be easily linked to concepts in NLP, they are generally not viewed through a cognitive lens. Furthermore, we show how these notions are relevant for several major challenges in NLP research, not directly related to analogy solving. This may guide researchers to better optimize relational understanding in text, as opposed to relying heavily on entity-level similarity.

Creative Preference Optimization

May 20, 2025

Abstract:While Large Language Models (LLMs) have demonstrated impressive performance across natural language generation tasks, their ability to generate truly creative content (characterized by novelty, diversity, surprise, and quality) remains limited. Existing methods for enhancing LLM creativity often focus narrowly on diversity or specific tasks, failing to address creativity's multifaceted nature in a generalizable way. In this work, we propose Creative Preference Optimization (CrPO), a novel alignment method that injects signals from multiple creativity dimensions into the preference optimization objective in a modular fashion. We train and evaluate creativity-augmented versions of several models using CrPO and MuCE, a new large-scale human preference dataset spanning over 200,000 human-generated responses and ratings from more than 30 psychological creativity assessments. Our models outperform strong baselines, including GPT-4o, on both automated and human evaluations, producing more novel, diverse, and surprising generations while maintaining high output quality. Additional evaluations on NoveltyBench further confirm the generalizability of our approach. Together, our results demonstrate that directly optimizing for creativity within preference frameworks is a promising direction for advancing the creative capabilities of LLMs without compromising output quality.

HintsOfTruth: A Multimodal Checkworthiness Detection Dataset with Real and Synthetic Claims

Feb 17, 2025

Abstract:Misinformation can be countered with fact-checking, but the process is costly and slow. Identifying checkworthy claims is the first step, where automation can help scale fact-checkers' efforts. However, detection methods struggle with content that is 1) multimodal, 2) from diverse domains, and 3) synthetic. We introduce HintsOfTruth, a public dataset for multimodal checkworthiness detection with 27K real-world and synthetic image/claim pairs. The mix of real and synthetic data makes this dataset unique and ideal for benchmarking detection methods. We compare fine-tuned and prompted Large Language Models (LLMs). We find that well-configured lightweight text-based encoders perform comparably to multimodal models, but the former focus only on identifying non-claim-like content. Multimodal LLMs can be more accurate but come at a significant computational cost, making them impractical for large-scale applications. When faced with synthetic data, multimodal models perform more robustly.

Evaluating Creative Short Story Generation in Humans and Large Language Models

Nov 04, 2024

Abstract:Storytelling is a fundamental aspect of human communication, relying heavily on creativity to produce narratives that are novel, appropriate, and surprising. While large language models (LLMs) have recently demonstrated the ability to generate high-quality stories, their creative capabilities remain underexplored. Previous research has either focused on creativity tests requiring short responses or primarily compared model performance in story generation to that of professional writers. However, the question of whether LLMs exhibit creativity in writing short stories on par with the average human remains unanswered. In this work, we conduct a systematic analysis of creativity in short story generation across LLMs and everyday people. Using a five-sentence creative story task, commonly employed in psychology to assess human creativity, we automatically evaluate model- and human-generated stories across several dimensions of creativity, including novelty, surprise, and diversity. Our findings reveal that while LLMs can generate stylistically complex stories, they tend to fall short in terms of creativity when compared to average human writers.

Creativity in AI: Progresses and Challenges

Oct 22, 2024

Abstract:Creativity is the ability to produce novel, useful, and surprising ideas, and has been widely studied as a crucial aspect of human cognition. Machine creativity, on the other hand, has been a long-standing challenge. With the rise of advanced generative AI, there has been renewed interest and debate regarding AI's creative capabilities. Therefore, it is imperative to revisit the state of creativity in AI and identify key advances and remaining challenges. In this work, we survey leading works studying the creative capabilities of AI systems, focusing on creative problem-solving, linguistic, artistic, and scientific creativity. Our review suggests that while the latest AI models are largely capable of producing linguistically and artistically creative outputs such as poems, images, and musical pieces, they struggle with tasks that require creative problem-solving, abstract thinking, and compositionality, and their generations suffer from a lack of diversity and originality, long-range incoherence, and hallucinations. We also discuss key questions concerning copyright and authorship issues with generative models. Furthermore, we highlight the need for a comprehensive evaluation of creativity that is process-driven and considers several dimensions of creativity. Finally, we propose future research directions to improve the creativity of AI outputs, drawing inspiration from cognitive science and psychology.

Evaluating Morphological Compositional Generalization in Large Language Models

Oct 16, 2024

Abstract:Large language models (LLMs) have demonstrated significant progress in various natural language generation and understanding tasks. However, their linguistic generalization capabilities remain questionable, raising doubts about whether these models learn language similarly to humans. While humans exhibit compositional generalization and linguistic creativity in language use, the extent to which LLMs replicate these abilities, particularly in morphology, is under-explored. In this work, we systematically investigate the morphological generalization abilities of LLMs through the lens of compositionality. We define morphemes as compositional primitives and design a novel suite of generative and discriminative tasks to assess morphological productivity and systematicity. Focusing on agglutinative languages such as Turkish and Finnish, we evaluate several state-of-the-art instruction-finetuned multilingual models, including GPT-4 and Gemini. Our analysis shows that LLMs struggle with morphological compositional generalization particularly when applied to novel word roots, with performance declining sharply as morphological complexity increases. While models can identify individual morphological combinations better than chance, their performance lacks systematicity, leading to significant accuracy gaps compared to humans.

Understanding the effects of language-specific class imbalance in multilingual fine-tuning

Feb 20, 2024

Abstract:We study the effect of one type of imbalance often present in real-life multilingual classification datasets: an uneven distribution of labels across languages. We show evidence that fine-tuning a transformer-based Large Language Model (LLM) on a dataset with this imbalance leads to worse performance, a more pronounced separation of languages in the latent space, and the promotion of uninformative features. We modify the traditional class weighting approach to imbalance by calculating class weights separately for each language and show that this helps mitigate those detrimental effects. These results create awareness of the negative effects of language-specific class imbalance in multilingual fine-tuning and the way in which the model learns to rely on the separation of languages to perform the task.

Can language models learn analogical reasoning? Investigating training objectives and comparisons to human performance

Oct 22, 2023

Abstract:While analogies are a common way to evaluate word embeddings in NLP, it is also of interest to investigate whether or not analogical reasoning is a task in itself that can be learned. In this paper, we test several ways to learn basic analogical reasoning, specifically focusing on analogies that are more typical of what is used to evaluate analogical reasoning in humans than those in commonly used NLP benchmarks. Our experiments find that models are able to learn analogical reasoning, even with a small amount of data. We additionally compare our models to a dataset with a human baseline, and find that after training, models approach human performance.

Pre-training Data Quality and Quantity for a Low-Resource Language: New Corpus and BERT Models for Maltese

May 26, 2022

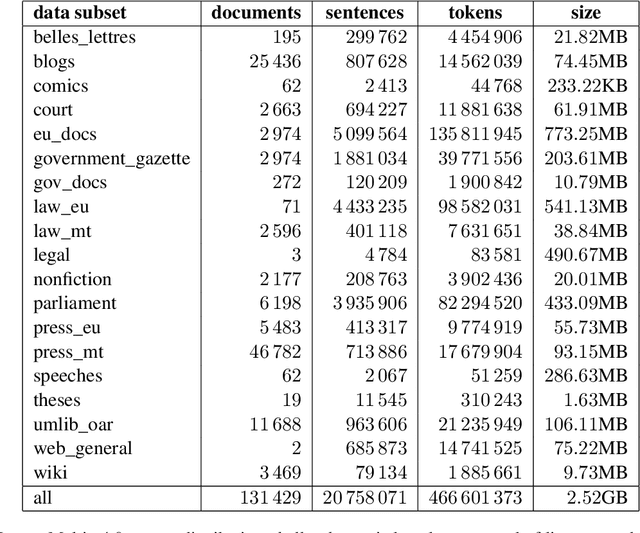

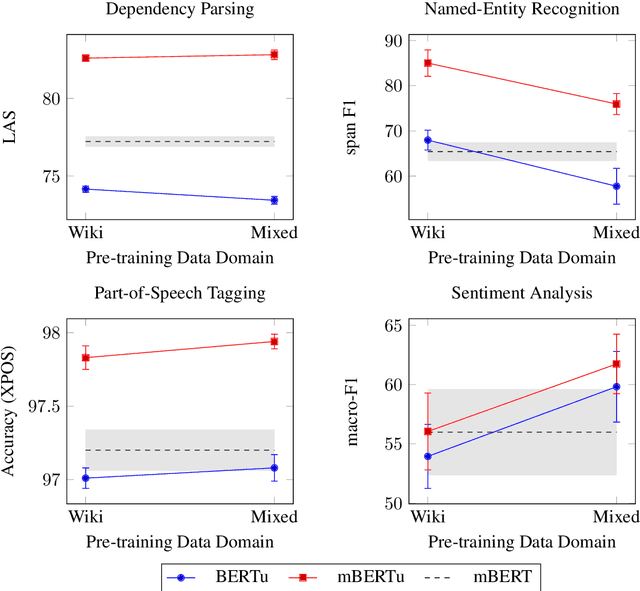

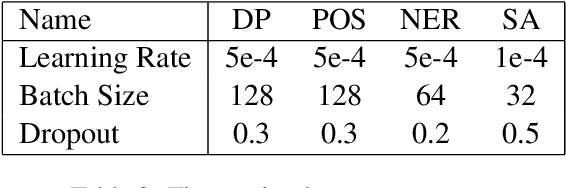

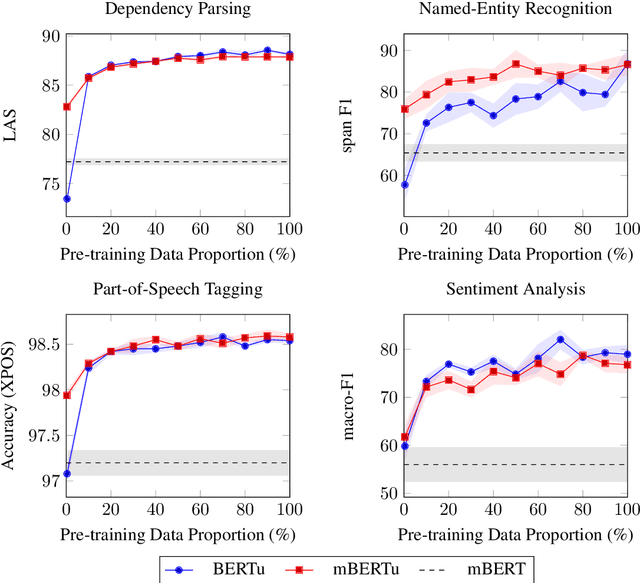

Abstract:Multilingual language models such as mBERT have seen impressive cross-lingual transfer to a variety of languages, but many languages remain excluded from these models. In this paper, we analyse the effect of pre-training with monolingual data for a low-resource language that is not included in mBERT -- Maltese -- with a range of pre-training setups. We conduct evaluations with the newly pre-trained models on three morphosyntactic tasks -- dependency parsing, part-of-speech tagging, and named-entity recognition -- and one semantic classification task -- sentiment analysis. We also present a newly created corpus for Maltese, and determine the effect that the pre-training data size and domain have on the downstream performance. Our results show that using a mixture of pre-training domains is often superior to using Wikipedia text only. We also find that a fraction of this corpus is enough to make significant leaps in performance over Wikipedia-trained models. We pre-train and compare two models on the new corpus: a monolingual BERT model trained from scratch (BERTu), and a further pre-trained multilingual BERT (mBERTu). The models achieve state-of-the-art performance on these tasks, despite the new corpus being considerably smaller than typically used corpora for high-resourced languages. On average, BERTu outperforms or performs competitively with mBERTu, and the largest gains are observed for higher-level tasks.

Data Augmentation for Speech Recognition in Maltese: A Low-Resource Perspective

Nov 15, 2021

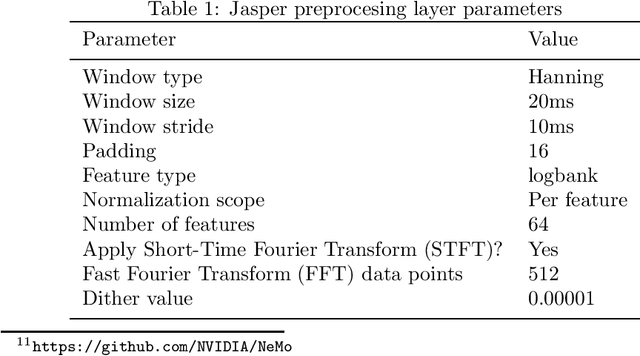

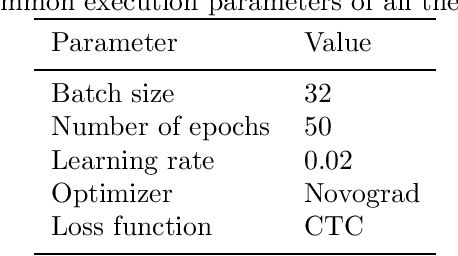

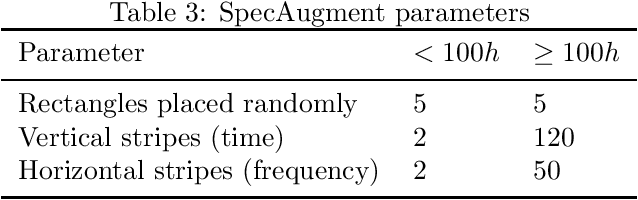

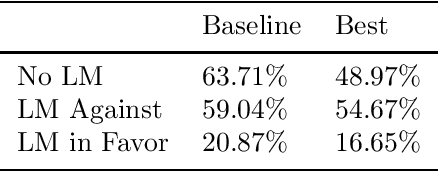

Abstract:Developing speech technologies is a challenge for low-resource languages for which both annotated and raw speech data is sparse. Maltese is one such language. Recent years have seen an increased interest in the computational processing of Maltese, including speech technologies, but resources for the latter remain sparse. In this paper, we consider data augmentation techniques for improving speech recognition for such languages, focusing on Maltese as a test case. We consider three different types of data augmentation: unsupervised training, multilingual training and the use of synthesized speech as training data. The goal is to determine which of these techniques, or which combination of them, is the most effective for improving speech recognition in languages where the starting point is a small corpus of approximately 7 hours of transcribed speech. Our results show that combining the three data augmentation techniques studied here leads to an absolute WER improvement of 15% without the use of a language model.
