Longxiang Gao

An Efficient and Reliable Asynchronous Federated Learning Scheme for Smart Public Transportation

Aug 30, 2022

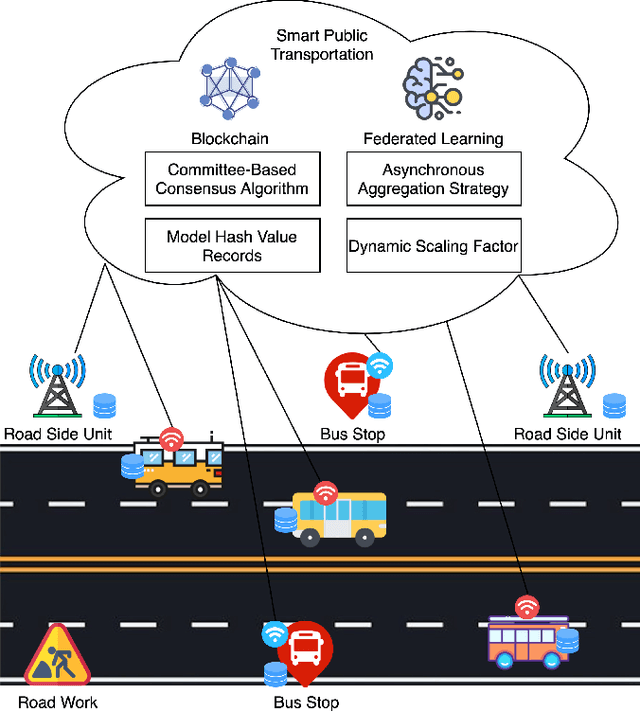

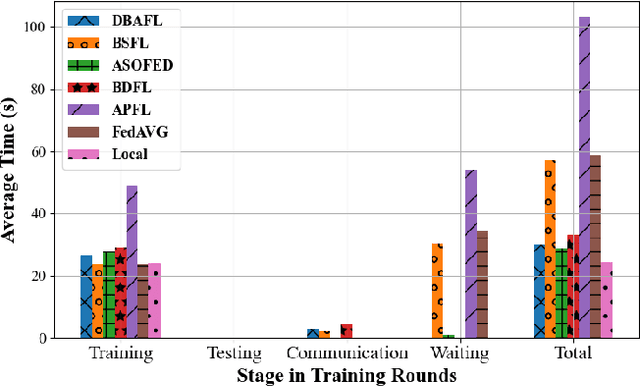

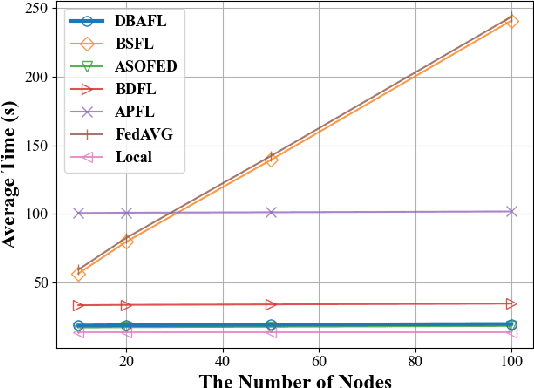

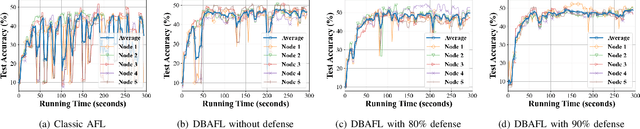

Abstract: Since traffic conditions change over time, machine learning models that predict traffic flows must be updated continuously and efficiently in smart public transportation. Federated learning (FL) is a distributed machine learning scheme that allows buses to receive model updates without waiting for model training on the cloud. However, FL is vulnerable to poisoning or DDoS attacks since buses travel in public. Some work introduces blockchain to improve reliability, but the additional latency from the consensus process reduces the efficiency of FL. Asynchronous Federated Learning (AFL) is a scheme that reduces the latency of aggregation to improve efficiency, but the learning performance is unstable due to unreasonably weighted local models. To address the above challenges, this paper offers a blockchain-based asynchronous federated learning scheme with a dynamic scaling factor (DBAFL). Specifically, the novel committee-based consensus algorithm for blockchain improves reliability at the lowest possible cost of time. Meanwhile, the devised dynamic scaling factor allows AFL to assign reasonable weights to stale local models. Extensive experiments conducted on heterogeneous devices validate the superior learning performance, efficiency, and reliability of DBAFL.
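To make the idea of down-weighting stale local models concrete, here is a minimal sketch of asynchronous aggregation with a staleness-dependent mixing weight. The abstract does not define DBAFL's dynamic scaling factor, so the polynomial discount below, along with the names `staleness_weight`, `async_update`, `base_rate`, and `decay`, are assumptions for illustration only and not the paper's method.

```python
from typing import List


def staleness_weight(staleness: int, base_rate: float = 0.5, decay: float = 0.5) -> float:
    """Hypothetical polynomial discount: older updates get a smaller mixing weight."""
    if staleness < 0:
        raise ValueError("staleness must be non-negative")
    return base_rate * (1 + staleness) ** (-decay)


def async_update(global_model: List[float], local_model: List[float], staleness: int) -> List[float]:
    """Mix one local model into the global model as soon as it arrives.

    staleness = number of global updates since the client fetched its copy.
    """
    alpha = staleness_weight(staleness)
    return [(1 - alpha) * g + alpha * l for g, l in zip(global_model, local_model)]


if __name__ == "__main__":
    fresh = async_update([0.0, 0.0], [2.0, 4.0], staleness=0)
    stale = async_update([0.0, 0.0], [2.0, 4.0], staleness=3)
    print(fresh, stale)
```

With these assumed defaults, an update that is three rounds old moves the global model half as far as a fresh one.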

Attention Distraction: Watermark Removal Through Continual Learning with Selective Forgetting

Apr 05, 2022

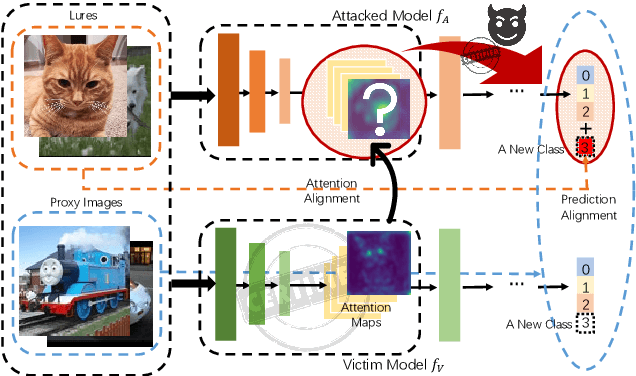

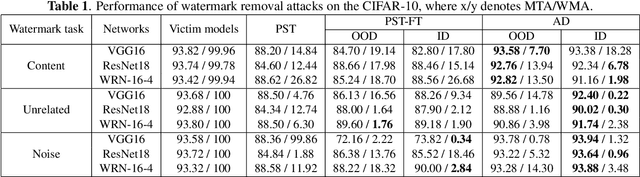

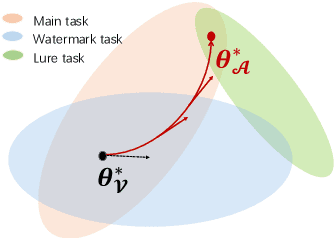

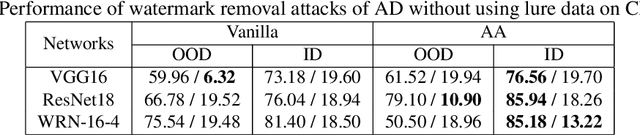

Abstract: Fine-tuning attacks are effective in removing the embedded watermarks in deep learning models. However, when the source data is unavailable, it is challenging to just erase the watermark without jeopardizing the model performance. In this context, we introduce Attention Distraction (AD), a novel source data-free watermark removal attack, to make the model selectively forget the embedded watermarks by customizing continual learning. In particular, AD first anchors the model's attention on the main task using some unlabeled data. Then, through continual learning, a small number of *lures* (randomly selected natural images) that are assigned a new label distract the model's attention away from the watermarks. Experimental results from different datasets and networks corroborate that AD can thoroughly remove the watermark with a small resource budget without compromising the model's performance on the main task, outperforming state-of-the-art works.

Temporal Knowledge Graph Completion: A Survey

Jan 16, 2022

Abstract: Knowledge graph completion (KGC) can predict missing links and is crucial for real-world knowledge graphs, which widely suffer from incompleteness. KGC methods assume a knowledge graph is static, but that may lead to inaccurate prediction results because many facts in the knowledge graphs change over time. Recently, emerging methods have shown improved predictive results by further incorporating the timestamps of facts; namely, temporal knowledge graph completion (TKGC). With this temporal information, TKGC methods can learn the dynamic evolution of the knowledge graph that KGC methods fail to capture. In this paper, for the first time, we summarize the recent advances in TKGC research. First, we detail the background of TKGC, including the problem definition, benchmark datasets, and evaluation metrics. Then, we summarize existing TKGC methods based on how timestamps of facts are used to capture the temporal dynamics. Finally, we conclude the paper and present future research directions of TKGC.

Learning Based Task Offloading in Digital Twin Empowered Internet of Vehicles

Dec 28, 2021

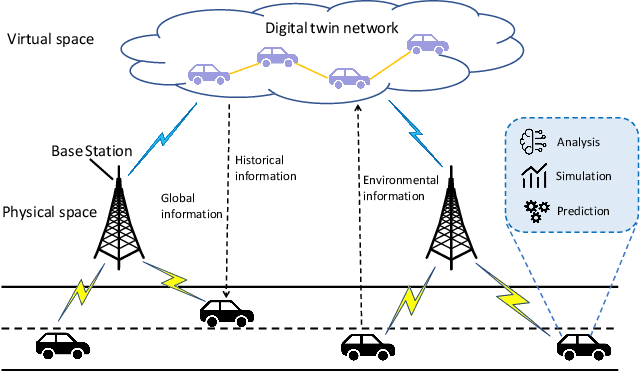

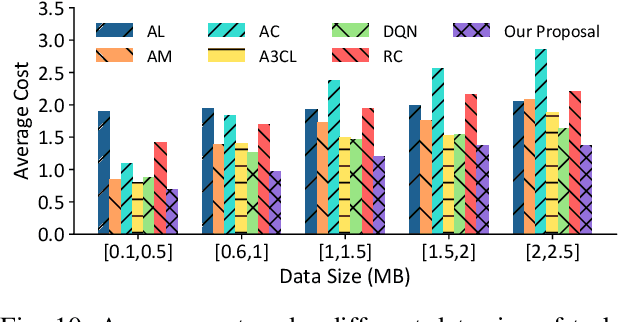

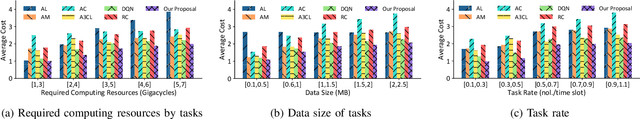

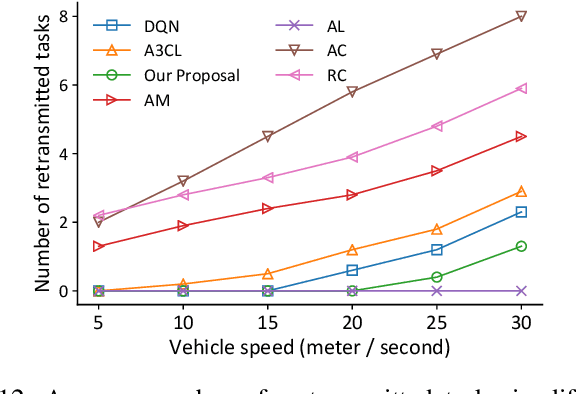

Abstract: Mobile edge computing has become an effective and fundamental paradigm for futuristic autonomous vehicles to offload computing tasks. However, due to the high mobility of vehicles, the dynamics of the wireless conditions, and the uncertainty of arriving computing tasks, it is difficult for a single vehicle to determine the optimal offloading strategy. In this paper, we propose a Digital Twin (DT) empowered task offloading framework for Internet of Vehicles. As a software agent residing in the cloud, a DT can obtain both global network information by using communications among DTs, and historical information of a vehicle by using the communications within the twin. The global network information and historical vehicular information can significantly facilitate the offloading. Specifically, to preserve the precious computing resources at different levels for the most appropriate computing tasks, we integrate a learning scheme based on the prediction of future computing tasks in the DT. Accordingly, we model the offloading scheduling process as a Markov Decision Process (MDP) to minimize the long-term cost in terms of a trade-off between task latency, energy consumption, and renting cost of clouds. Simulation results demonstrate that our algorithm can effectively find the optimal offloading strategy, as well as achieve fast convergence and high performance, compared with other existing approaches.

Semantic Code Search for Smart Contracts

Nov 28, 2021

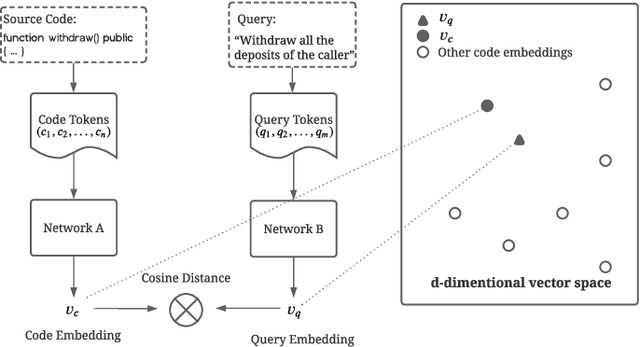

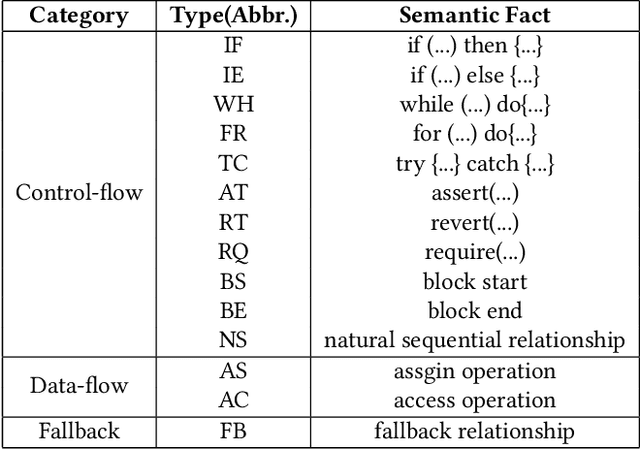

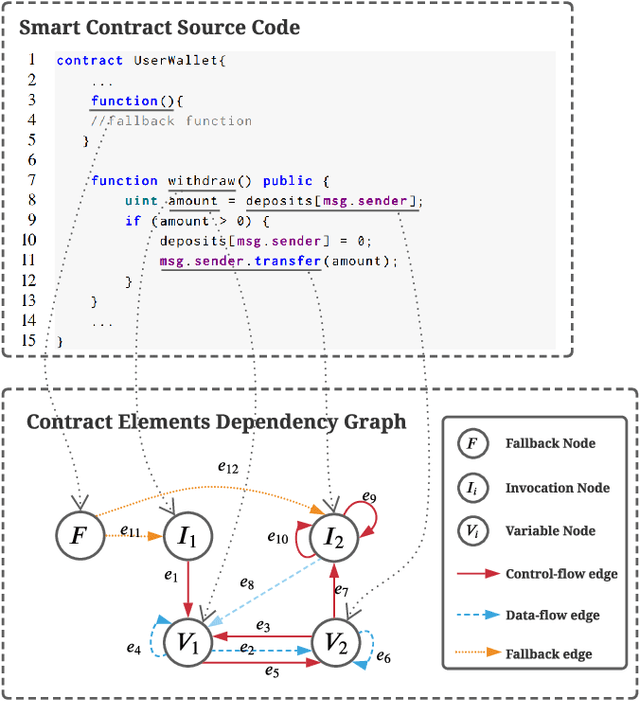

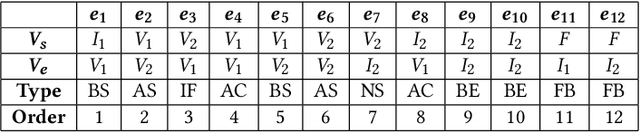

Abstract: Semantic code search technology allows searching for existing code snippets through natural language, which can greatly improve programming efficiency. Smart contracts, programs that run on the blockchain, have a code reuse rate of more than 90%, which means developers have a great demand for semantic code search tools. However, the existing code search models still have a semantic gap between code and query, and perform poorly on specialized queries of smart contracts. In this paper, we propose a Multi-Modal Smart contract Code Search (MM-SCS) model. Specifically, we construct a Contract Elements Dependency Graph (CEDG) for MM-SCS as an additional modality to capture the data-flow and control-flow information of the code. To make the model more focused on the key contextual information, we use a multi-head attention network to generate embeddings for code features. In addition, we use a fine-tuned pretrained model to ensure the model's effectiveness when the training data is small. We compared MM-SCS with four state-of-the-art models on a dataset with 470K (code, docstring) pairs collected from GitHub and Etherscan. Experimental results show that MM-SCS achieves an MRR (Mean Reciprocal Rank) of 0.572, outperforming four state-of-the-art models UNIF, DeepCS, CARLCS-CNN, and TAB-CS by 34.2%, 59.3%, 36.8%, and 14.1%, respectively. Additionally, the search speed of MM-SCS is second only to UNIF, reaching 0.34s/query.
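For readers unfamiliar with the metric reported above, the following is a small sketch of how Mean Reciprocal Rank is commonly computed: the average, over all queries, of 1 divided by the rank of the first correct result. The function name `mean_reciprocal_rank` and the example ranks are illustrative assumptions and are not taken from the MM-SCS evaluation code.

```python
from typing import Optional, Sequence


def mean_reciprocal_rank(ranks: Sequence[Optional[int]]) -> float:
    """Compute MRR from 1-based ranks of the first correct result per query.

    A rank of None means the correct snippet was not retrieved; such a
    query contributes 0 to the sum but still counts in the denominator.
    """
    if not ranks:
        raise ValueError("at least one query is required")
    total = sum(1.0 / r for r in ranks if r is not None)
    return total / len(ranks)


if __name__ == "__main__":
    # Four hypothetical queries: hits at rank 1, 2, and 4, plus one miss.
    print(mean_reciprocal_rank([1, 2, None, 4]))  # (1 + 0.5 + 0 + 0.25) / 4 = 0.4375
```

An MRR of 0.572 therefore means that, averaged over queries, the reciprocal of the first correct snippet's rank is 0.572, so correct results typically sit near the top of the returned list.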

Prototypes-Guided Memory Replay for Continual Learning

Aug 28, 2021

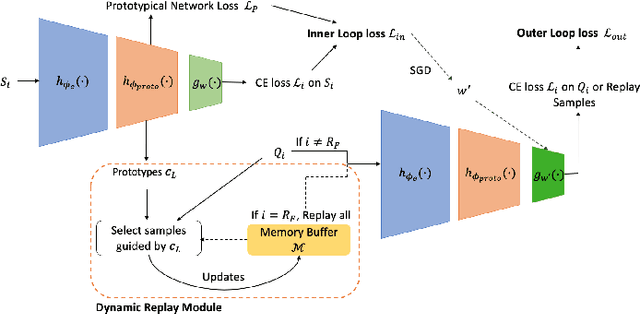

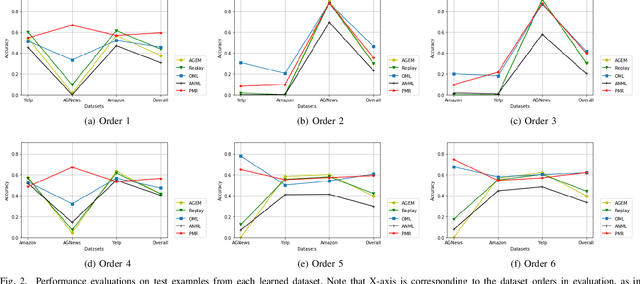

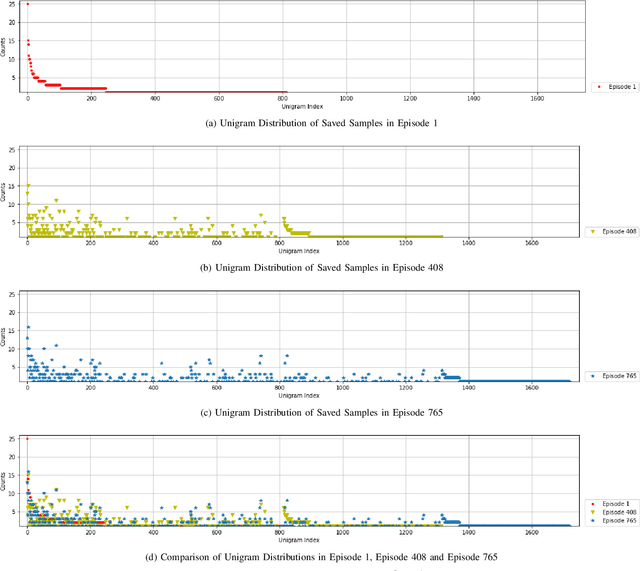

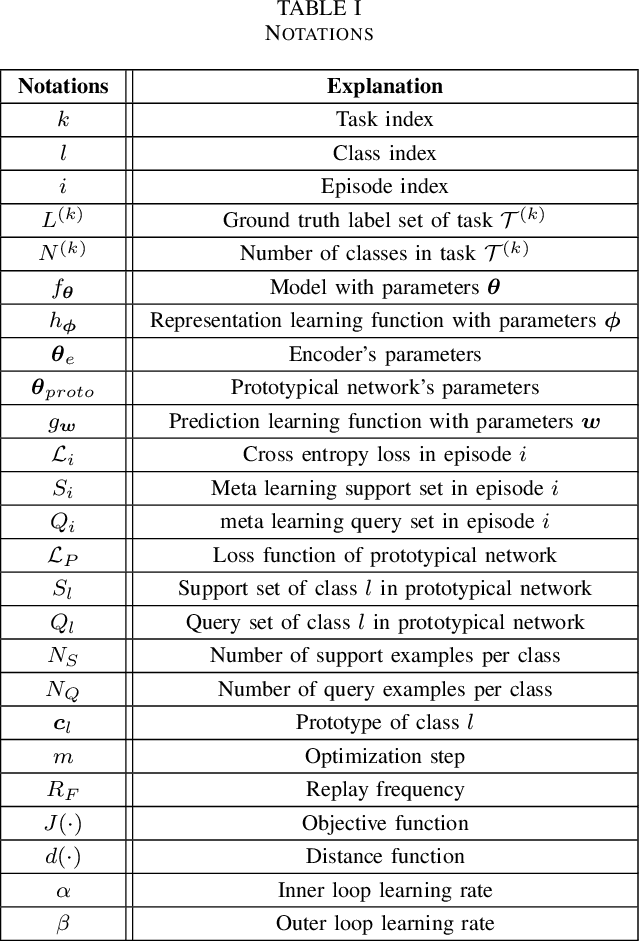

Abstract: Continual learning (CL) refers to a machine learning paradigm that uses only a small amount of training samples and previously learned knowledge to enhance learning performance. CL models learn tasks from various domains in a sequential manner. The major difficulty in CL is catastrophic forgetting of previously learned tasks, caused by shifts in data distributions. The existing CL models often employ a replay-based approach to diminish catastrophic forgetting. Most CL models stochastically select previously seen samples to retain learned knowledge. However, the occupied memory keeps growing as learned tasks accumulate. Therefore, we propose a memory-efficient CL method. We devise a dynamic prototypes-guided memory replay module and incorporate it into an online meta-learning model. We conduct extensive experiments on text classification and additionally investigate the effect of training set orders on CL model performance. The experimental results attest to the superiority of our method in alleviating catastrophic forgetting and enabling efficient knowledge transfer.

Federated Learning Meets Natural Language Processing: A Survey

Jul 27, 2021

Abstract: Federated Learning aims to learn machine learning models from multiple decentralized edge devices (e.g. mobiles) or servers without sacrificing local data privacy. Recent Natural Language Processing techniques rely on deep learning and large pre-trained language models. However, both large deep neural networks and language models are trained with huge amounts of data, which often lie on the server side. Since text data largely originates from end users, in this work, we look into recent NLP models and techniques which use federated learning as the learning framework. Our survey discusses major challenges in federated natural language processing, including the algorithm challenges, system challenges as well as the privacy issues. We also provide a critical review of the existing Federated NLP evaluation methods and tools. Finally, we highlight the current research gaps and future directions.

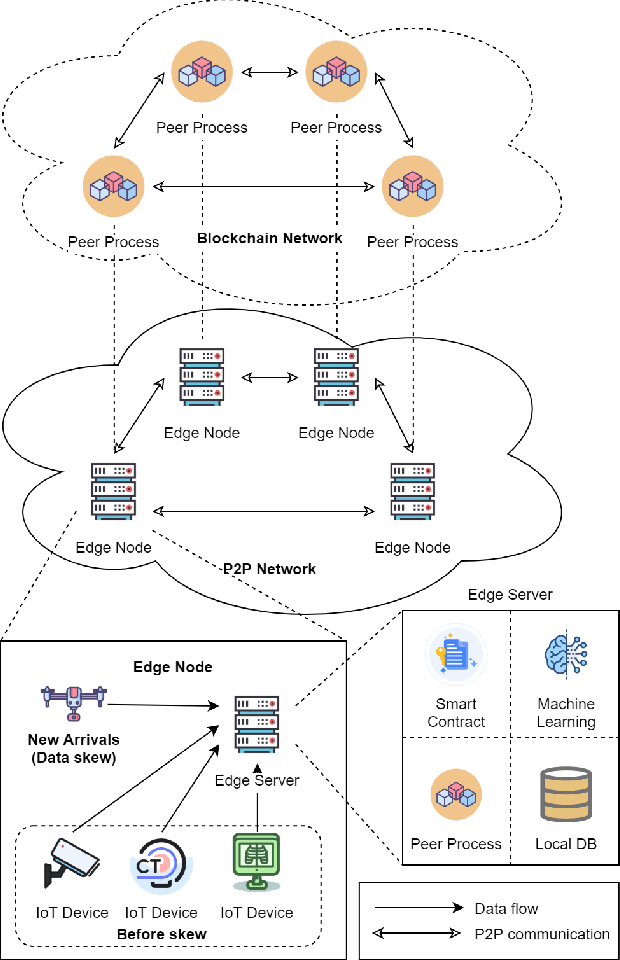

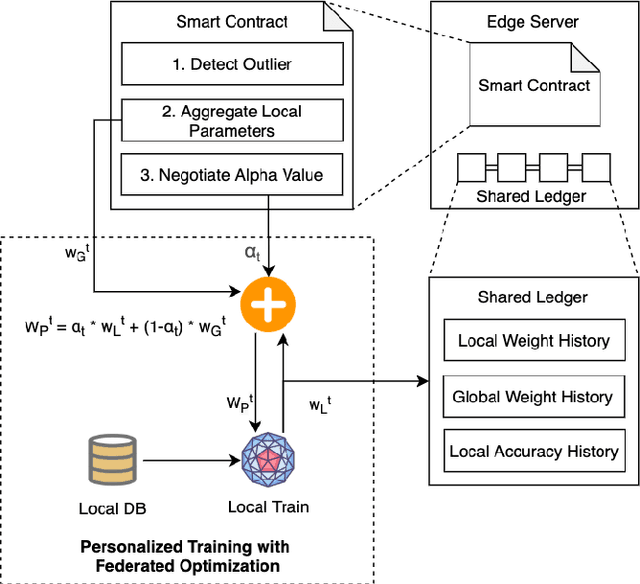

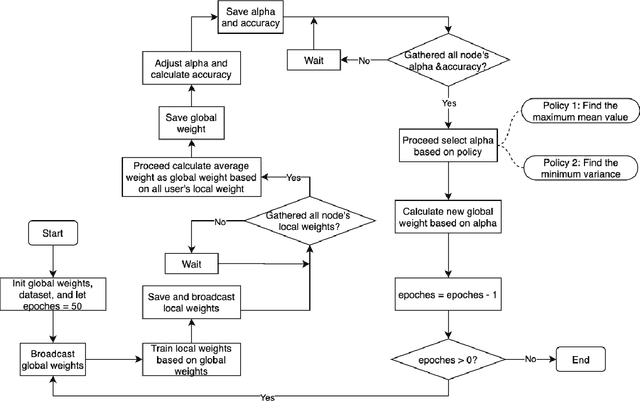

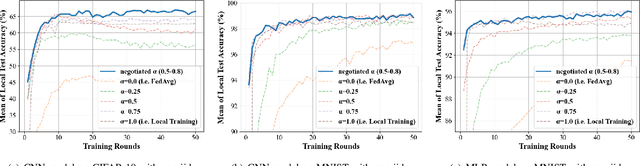

SCEI: A Smart-Contract Driven Edge Intelligence Framework for IoT Systems

Mar 12, 2021

Abstract: Federated learning (FL) utilizes edge computing devices to collaboratively train a shared model while each device can fully control its local data access. Generally, FL techniques focus on learning models on independent and identically distributed (iid) datasets and cannot achieve satisfactory performance on non-iid datasets (e.g. learning a multi-class classifier but each client only has a single class dataset). Some personalized approaches have been proposed to mitigate non-iid issues. However, such approaches cannot handle underlying data distribution shift, namely data distribution skew, which is quite common in real scenarios (e.g. recommendation systems learn user behaviors which change over time). In this work, we provide a solution to the challenge by combining smart contracts with federated learning to build optimized, personalized deep learning models. Specifically, our approach utilizes smart contracts to reach consensus among distributed trainers on the optimal weights of personalized models. We conduct experiments across multiple models (CNN and MLP) and multiple datasets (MNIST and CIFAR-10). The experimental results demonstrate that our personalized learning models can achieve better accuracy and faster convergence compared to classic federated and personalized learning. Compared with the model given by the baseline FedAvg algorithm, the average accuracy of our personalized learning models is improved by 2% to 20%, and the convergence rate is about 2× faster. Moreover, we also illustrate that our approach is secure against recent attacks on distributed learning.

Chaotic-to-Fine Clustering for Unlabeled Plant Disease Images

Jan 18, 2021

Abstract: Current annotation for plant disease images depends on manual sorting and handcrafted features by agricultural experts, which is time-consuming and labour-intensive. In this paper, we propose a self-supervised clustering framework for grouping plant disease images based on the vulnerability of Kernel K-means. The main idea is to establish a cross iterative under-clustering algorithm based on Kernel K-means to produce the pseudo-labeled training set and a chaotic cluster to be further classified by a deep learning module. In order to verify the effectiveness of our proposed framework, we conduct extensive experiments on three different plant disease datasets with five plants and 17 plant diseases. The experimental results show that our method outperforms five state-of-the-art existing works on image-based plant disease classification over balanced and unbalanced datasets in terms of different metrics.

SciSummPip: An Unsupervised Scientific Paper Summarization Pipeline

Oct 19, 2020

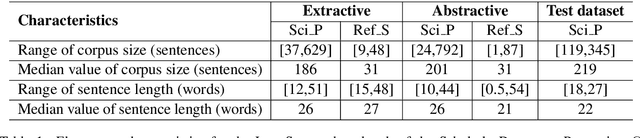

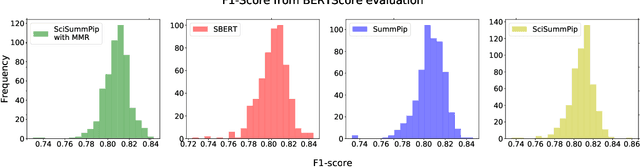

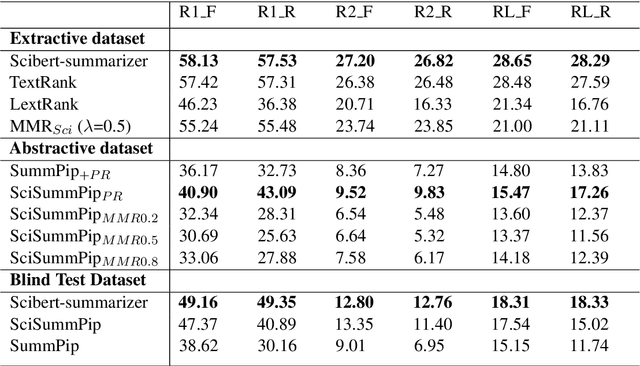

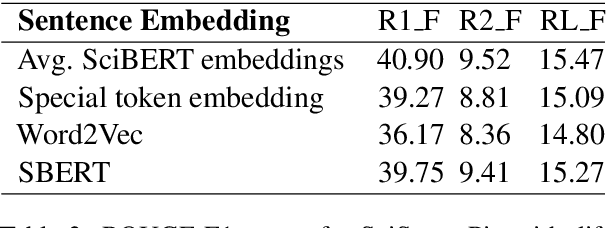

Abstract: The Scholarly Document Processing (SDP) workshop aims to encourage more work on natural language understanding for scientific tasks. It contains three shared tasks, and we participate in the LongSumm shared task. In this paper, we describe our text summarization system, SciSummPip, inspired by SummPip (Zhao et al., 2020), an unsupervised multi-document text summarization system for the news domain. Our SciSummPip includes a transformer-based language model SciBERT (Beltagy et al., 2019) for contextual sentence representation, content selection with PageRank (Page et al., 1999), sentence graph construction with both deep and linguistic information, sentence graph clustering and within-graph summary generation. Our work differs from previous methods in that content selection and a summary length constraint are applied to adapt to the scientific domain. The experimental results on both the training dataset and the blind test dataset show the effectiveness of our method, and we empirically verify the robustness of modules used in SciSummPip with BERTScore (Zhang et al., 2019a).
