Lian Yang

Cross-Modality Masked Learning for Survival Prediction in ICI Treated NSCLC Patients

Jul 09, 2025

Abstract: Accurate prognosis of non-small cell lung cancer (NSCLC) patients undergoing immunotherapy is essential for personalized treatment planning, enabling informed patient decisions, and improving both treatment outcomes and quality of life. However, the lack of large, relevant datasets and of effective multi-modal feature fusion strategies poses significant challenges in this domain. To address these challenges, we present a large-scale dataset and introduce a novel framework for multi-modal feature fusion aimed at enhancing the accuracy of survival prediction. The dataset comprises 3D CT images and corresponding clinical records from NSCLC patients treated with immune checkpoint inhibitors (ICI), along with progression-free survival (PFS) and overall survival (OS) data. We further propose a cross-modality masked learning approach for medical feature fusion, consisting of two distinct branches, each tailored to its respective modality: a Slice-Depth Transformer for extracting 3D features from CT images and a graph-based Transformer for learning node features and relationships among clinical variables in tabular data. The fusion process is guided by a masked modality learning strategy, wherein the model utilizes the intact modality to reconstruct missing components. This mechanism improves the integration of modality-specific features, fostering more effective inter-modality relationships and feature interactions. Our approach demonstrates superior performance in multi-modal integration for NSCLC survival prediction, surpassing existing methods and setting a new benchmark for prognostic models in this context.
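The masked modality learning idea in the abstract above can be illustrated with a toy sketch. This is not the authors' implementation: the function names (`mask_features`, `predict_from_other`, `reconstruction_loss`), the zero-fill masking, and the linear predictor standing in for the Transformer branches are all assumptions made for illustration. It only shows the general pattern of hiding part of one modality and scoring a reconstruction produced from the other, intact modality.

```python
import random
from typing import List, Tuple


def mask_features(features: List[float], mask_ratio: float,
                  rng: random.Random) -> Tuple[List[float], List[int]]:
    """Zero out a random subset of one modality's features (hypothetical helper)."""
    n = len(features)
    k = int(round(n * mask_ratio))
    masked_idx = sorted(rng.sample(range(n), k))
    hidden = set(masked_idx)
    visible = [0.0 if i in hidden else x for i, x in enumerate(features)]
    return visible, masked_idx


def predict_from_other(other: List[float],
                       weights: List[List[float]]) -> List[float]:
    """Toy linear map from the intact modality to the masked one.

    Assumption: a real model would use learned Transformer branches here.
    """
    return [sum(w * x for w, x in zip(row, other)) for row in weights]


def reconstruction_loss(predicted: List[float], target: List[float],
                        masked_idx: List[int]) -> float:
    """Mean squared error computed only over the masked positions."""
    if not masked_idx:
        return 0.0
    return sum((predicted[i] - target[i]) ** 2 for i in masked_idx) / len(masked_idx)


if __name__ == "__main__":
    rng = random.Random(0)
    clinical = [1.0, 2.0, 3.0, 4.0]   # stand-in for tabular features
    image = [0.5, 1.5]                # stand-in for CT features
    visible, idx = mask_features(clinical, 0.5, rng)
    weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0]]
    predicted = predict_from_other(image, weights)
    print(visible, idx, reconstruction_loss(predicted, clinical, idx))
```

Restricting the loss to masked positions means the model is only scored on what it had to infer from the other modality, which is the usual design choice in masked reconstruction objectives.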

Dynamic Clustering and Power Control for Two-Tier Wireless Federated Learning

May 19, 2022

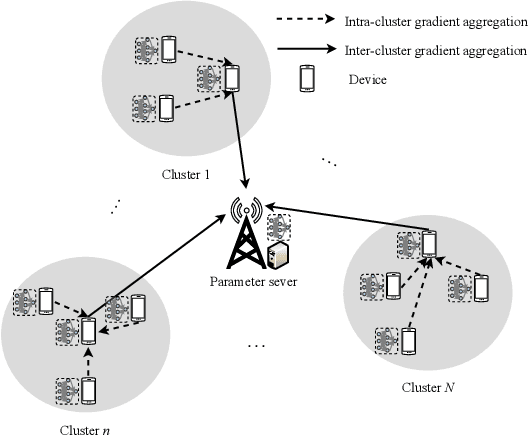

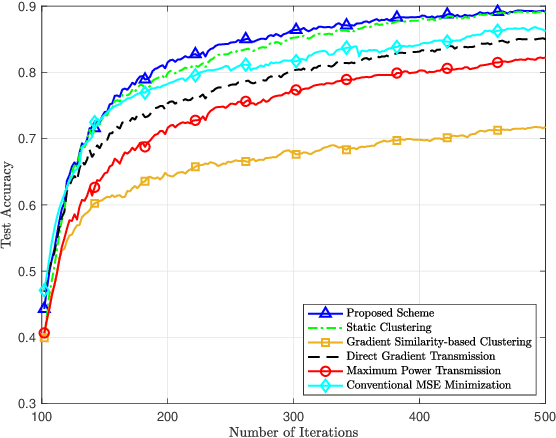

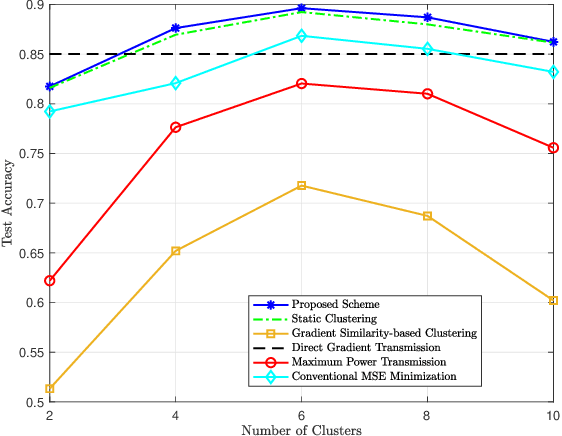

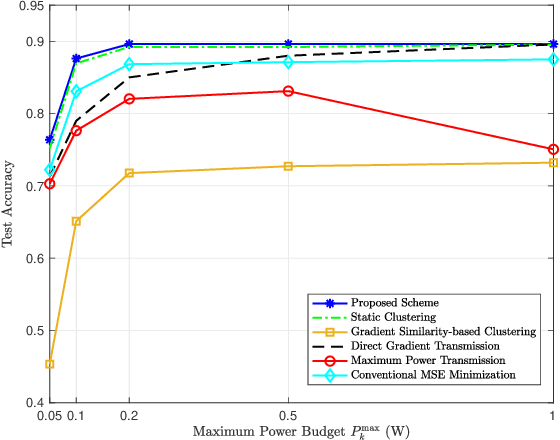

Abstract: Federated learning (FL) has been recognized as a promising distributed learning paradigm to support intelligent applications at the wireless edge, where a global model is trained iteratively through the collaboration of the edge devices without sharing their data. However, due to the relatively large communication cost between the devices and the parameter server (PS), direct computing based on the information from the devices may not be resource efficient. This paper studies the joint communication and learning design for an over-the-air computation (AirComp)-based two-tier wireless FL scheme, where the lead devices first collect the local gradients from their nearby subordinate devices and then send the merged results to the PS for a second round of aggregation. We establish a convergence result for the proposed scheme and derive an upper bound on the optimality gap between the expected and optimal global loss values. Next, based on the device distance and data importance, we propose a hierarchical clustering method to build the two-tier structure. Then, with only the instantaneous channel state information (CSI), we formulate the optimality gap minimization problem and solve it using an efficient alternating minimization method. Numerical results show that the proposed scheme outperforms the baseline schemes.
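The two-tier aggregation described in this abstract can be sketched in a few lines. This is a simplified illustration under strong assumptions, not the paper's scheme: it ignores AirComp, channel noise, power control, and data importance, and it assumes each lead device takes a plain average over its cluster while the PS weights cluster results by cluster size. The names `mean_vectors` and `two_tier_aggregate` are hypothetical.

```python
from typing import Dict, List

Vector = List[float]


def mean_vectors(vectors: List[Vector], weights: List[float]) -> Vector:
    """Weighted average of equal-length vectors."""
    total = sum(weights)
    dim = len(vectors[0])
    return [sum(w * v[i] for v, w in zip(vectors, weights)) / total
            for i in range(dim)]


def two_tier_aggregate(clusters: Dict[str, List[Vector]]) -> Vector:
    """Aggregate gradients in two rounds (noiseless, equal-weight assumption).

    Tier 1: each lead device averages the gradients in its cluster.
    Tier 2: the PS combines cluster averages, weighted by cluster size.
    """
    cluster_means: List[Vector] = []
    cluster_sizes: List[float] = []
    for grads in clusters.values():
        cluster_means.append(mean_vectors(grads, [1.0] * len(grads)))
        cluster_sizes.append(float(len(grads)))
    return mean_vectors(cluster_means, cluster_sizes)


if __name__ == "__main__":
    clusters = {
        "lead_a": [[1.0, 2.0], [3.0, 4.0]],  # two devices in cluster a
        "lead_b": [[5.0, 6.0]],              # one device in cluster b
    }
    print(two_tier_aggregate(clusters))
```

With size-weighted combination at the PS, the two-round result equals the flat average over all devices in this noiseless setting, so only the lead devices need to reach the PS.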
