Kyle Julian

Stanford University

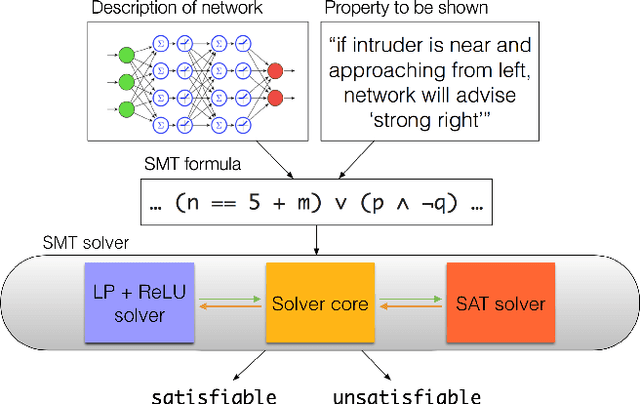

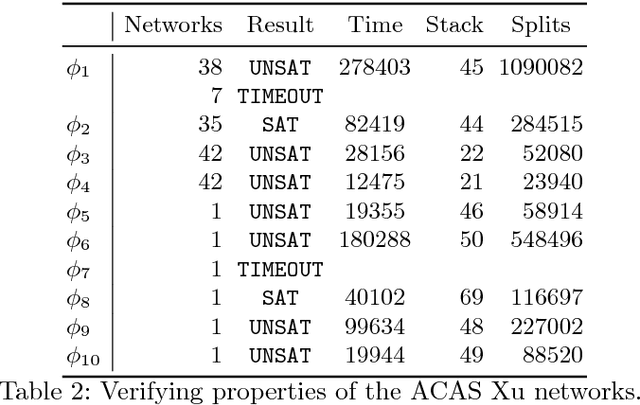

Marabou 2.0: A Versatile Formal Analyzer of Neural Networks

Jan 25, 2024

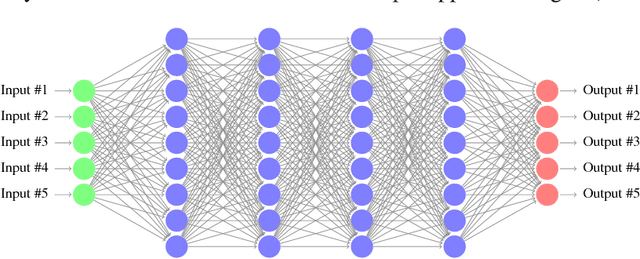

Abstract:This paper serves as a comprehensive system description of version 2.0 of the Marabou framework for formal analysis of neural networks. We discuss the tool's architectural design and highlight the major features and components introduced since its initial release.

Parallelization Techniques for Verifying Neural Networks

Apr 26, 2020

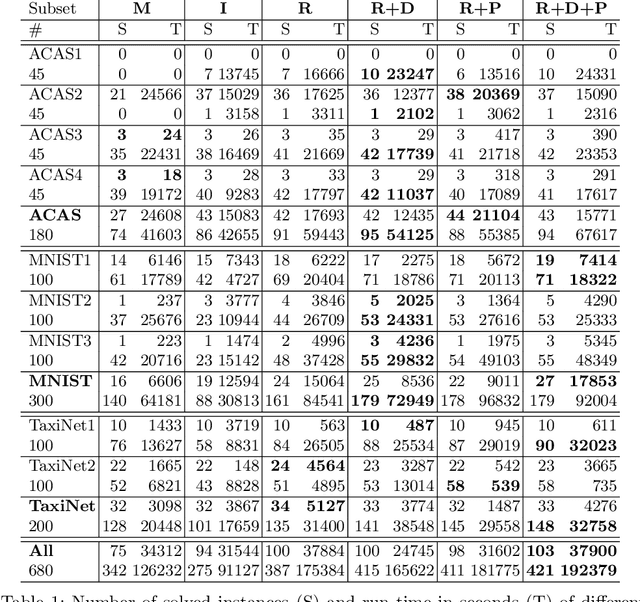

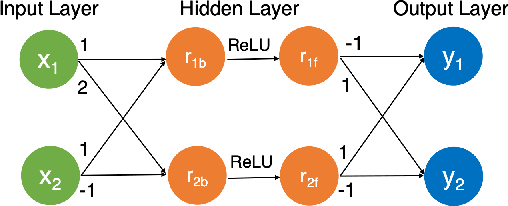

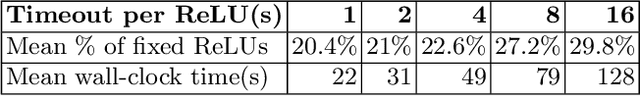

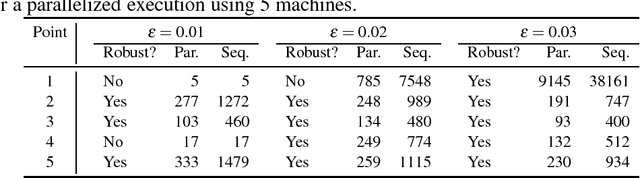

Abstract:Inspired by recent successes with parallel optimization techniques for solving Boolean satisfiability, we investigate a set of strategies and heuristics that aim to leverage parallel computing to improve the scalability of neural network verification. We introduce an algorithm based on partitioning the verification problem in an iterative manner and explore two partitioning strategies, that work by partitioning the input space or by case splitting on the phases of the neuron activations, respectively. We also introduce a highly parallelizable pre-processing algorithm that uses the neuron activation phases to simplify the neural network verification problems. An extensive experimental evaluation shows the benefit of these techniques on both existing benchmarks and new benchmarks from the aviation domain. A preliminary experiment with ultra-scaling our algorithm using a large distributed cloud-based platform also shows promising results.

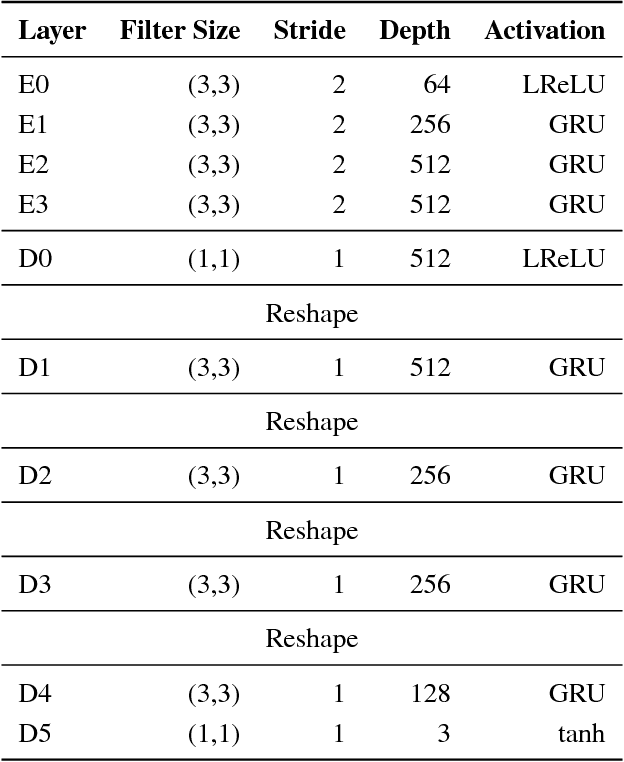

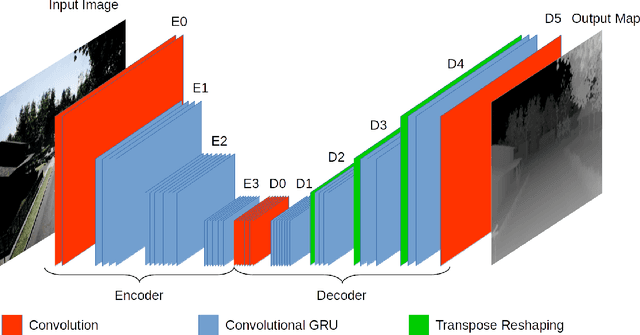

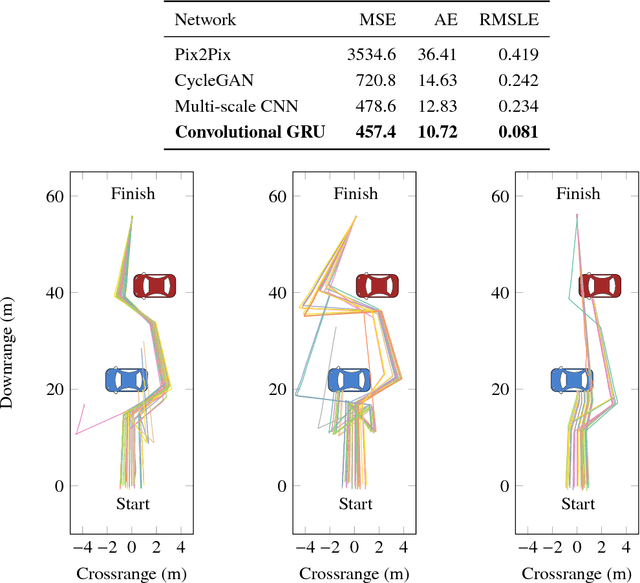

Visual Depth Mapping from Monocular Images using Recurrent Convolutional Neural Networks

Dec 10, 2018

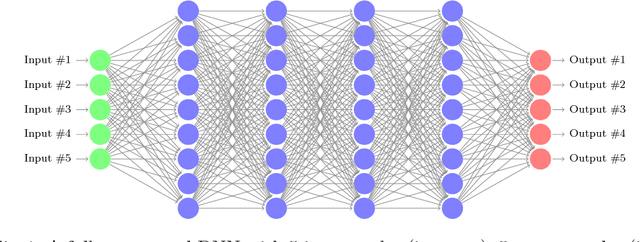

Abstract:A reliable sense-and-avoid system is critical to enabling safe autonomous operation of unmanned aircraft. Existing sense-and-avoid methods often require specialized sensors that are too large or power intensive for use on small unmanned vehicles. This paper presents a method to estimate object distances based on visual image sequences, allowing for the use of low-cost, on-board monocular cameras as simple collision avoidance sensors. We present a deep recurrent convolutional neural network and training method to generate depth maps from video sequences. Our network is trained using simulated camera and depth data generated with Microsoft's AirSim simulator. Empirically, we show that our model achieves superior performance compared to models generated using prior methods.We further demonstrate that the method can be used for sense-and-avoid of obstacles in simulation.

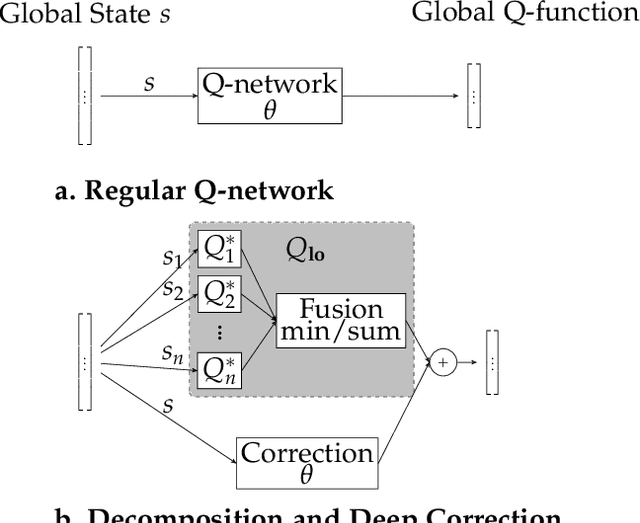

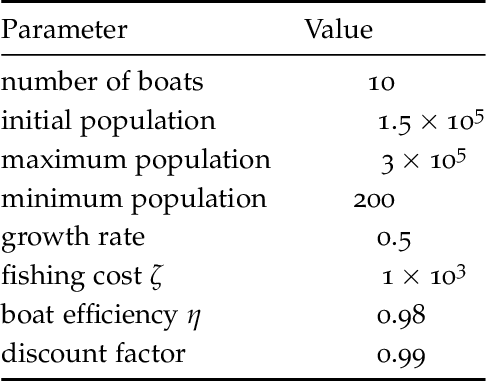

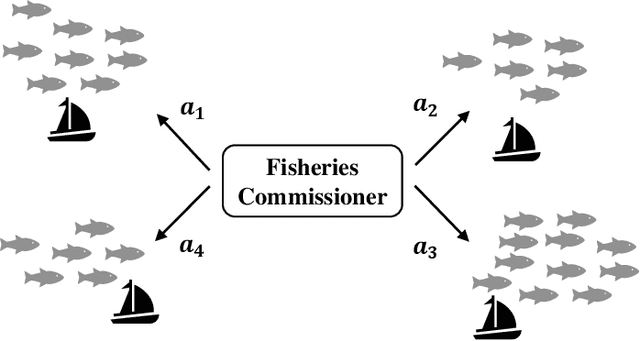

Utility Decomposition with Deep Corrections for Scalable Planning under Uncertainty

Feb 06, 2018

Abstract:Decomposition methods have been proposed in the past to approximate solutions to large sequential decision making problems. In contexts where an agent interacts with multiple entities, utility decomposition can be used where each individual entity is considered independently. The individual utility functions are then combined in real time to solve the global problem. Although these techniques can perform well empirically, they sacrifice optimality. This paper proposes an approach inspired from multi-fidelity optimization to learn a correction term with a neural network representation. Learning this correction can significantly improve performance. We demonstrate this approach on a pedestrian avoidance problem for autonomous driving. By leveraging strategies to avoid a single pedestrian, the decomposition method can scale to avoid multiple pedestrians. We verify empirically that the proposed correction method leads to a significant improvement over the decomposition method alone and outperforms a policy trained on the full scale problem without utility decomposition.

Toward Scalable Verification for Safety-Critical Deep Networks

Feb 02, 2018

Abstract:The increasing use of deep neural networks for safety-critical applications, such as autonomous driving and flight control, raises concerns about their safety and reliability. Formal verification can address these concerns by guaranteeing that a deep learning system operates as intended, but the state of the art is limited to small systems. In this work-in-progress report we give an overview of our work on mitigating this difficulty, by pursuing two complementary directions: devising scalable verification techniques, and identifying design choices that result in deep learning systems that are more amenable to verification.

Towards Proving the Adversarial Robustness of Deep Neural Networks

Sep 08, 2017

Abstract:Autonomous vehicles are highly complex systems, required to function reliably in a wide variety of situations. Manually crafting software controllers for these vehicles is difficult, but there has been some success in using deep neural networks generated using machine-learning. However, deep neural networks are opaque to human engineers, rendering their correctness very difficult to prove manually; and existing automated techniques, which were not designed to operate on neural networks, fail to scale to large systems. This paper focuses on proving the adversarial robustness of deep neural networks, i.e. proving that small perturbations to a correctly-classified input to the network cannot cause it to be misclassified. We describe some of our recent and ongoing work on verifying the adversarial robustness of networks, and discuss some of the open questions we have encountered and how they might be addressed.

* In Proceedings FVAV 2017, arXiv:1709.02126

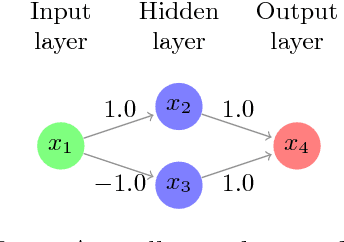

Reluplex: An Efficient SMT Solver for Verifying Deep Neural Networks

May 19, 2017

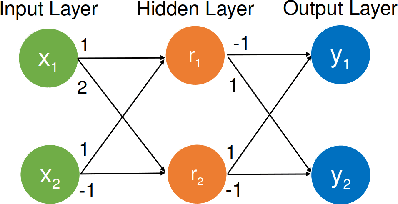

Abstract:Deep neural networks have emerged as a widely used and effective means for tackling complex, real-world problems. However, a major obstacle in applying them to safety-critical systems is the great difficulty in providing formal guarantees about their behavior. We present a novel, scalable, and efficient technique for verifying properties of deep neural networks (or providing counter-examples). The technique is based on the simplex method, extended to handle the non-convex Rectified Linear Unit (ReLU) activation function, which is a crucial ingredient in many modern neural networks. The verification procedure tackles neural networks as a whole, without making any simplifying assumptions. We evaluated our technique on a prototype deep neural network implementation of the next-generation airborne collision avoidance system for unmanned aircraft (ACAS Xu). Results show that our technique can successfully prove properties of networks that are an order of magnitude larger than the largest networks verified using existing methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge