Kevin Wolfe

Real-Time Planning and Control with a Vortex Particle Model for Fixed-Wing UAVs in Unsteady Flows

Sep 19, 2025

Abstract:Unsteady aerodynamic effects can have a profound impact on aerial vehicle flight performance, especially during agile maneuvers and in complex aerodynamic environments. In this paper, we present a real-time planning and control approach capable of reasoning about unsteady aerodynamics. Our approach relies on a lightweight vortex particle model, parallelized to allow GPU acceleration, and a sampling-based policy optimization strategy capable of leveraging the vortex particle model for predictive reasoning. We demonstrate, through both simulation and hardware experiments, that by replanning with our unsteady aerodynamics model, we can improve the performance of aggressive post-stall maneuvers in the presence of unsteady environmental flow disturbances.

Planning and Control for a Dynamic Morphing-Wing UAV Using a Vortex Particle Model

Jul 05, 2023

Abstract:Achieving precise, highly dynamic maneuvers with Unmanned Aerial Vehicles (UAVs) is a major challenge due to the complexity of the associated aerodynamics. In particular, unsteady effects -- as might be experienced in post-stall regimes or during sudden vehicle morphing -- can have an adverse impact on the performance of modern flight control systems. In this paper, we present a vortex particle model and associated model-based controller capable of reasoning about the unsteady aerodynamics during aggressive maneuvers. We evaluate our approach in hardware on a morphing-wing UAV executing post-stall perching maneuvers. Our results show that the use of the unsteady aerodynamics model improves performance during both fixed-wing and dynamic-wing perching, while the use of wing morphing planned with quasi-steady aerodynamics results in reduced performance. While the focus of this paper is a pre-computed control policy, we believe that, with sufficient computational resources, our approach could enable online planning in the future.

Occupancy Map Prediction Using Generative and Fully Convolutional Networks for Vehicle Navigation

Mar 06, 2018

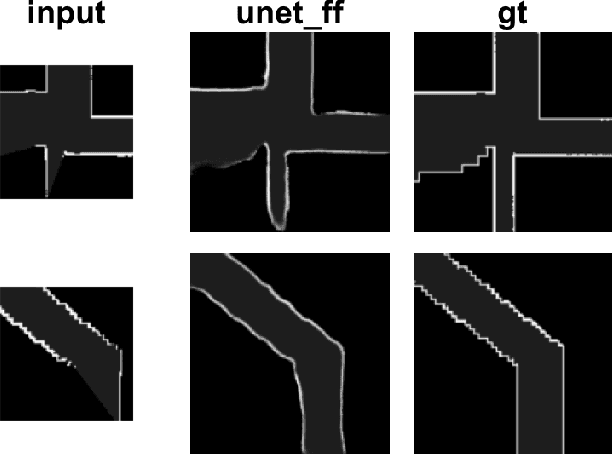

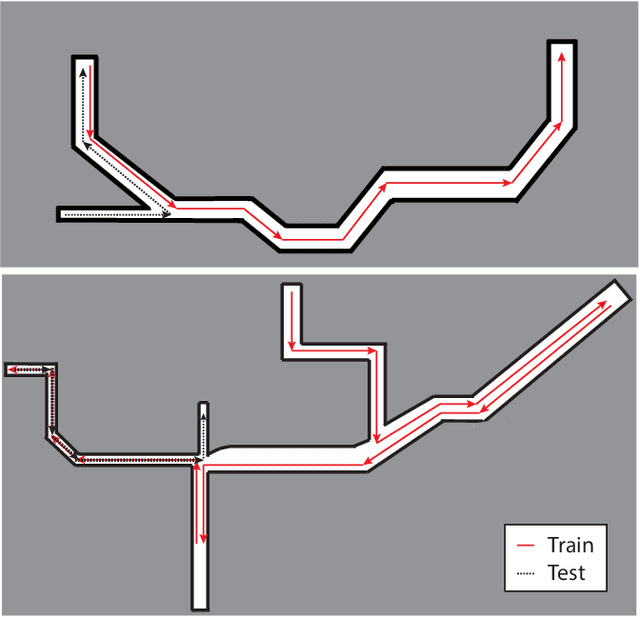

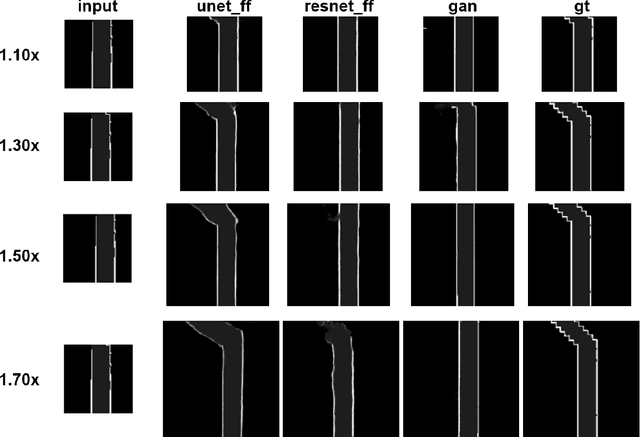

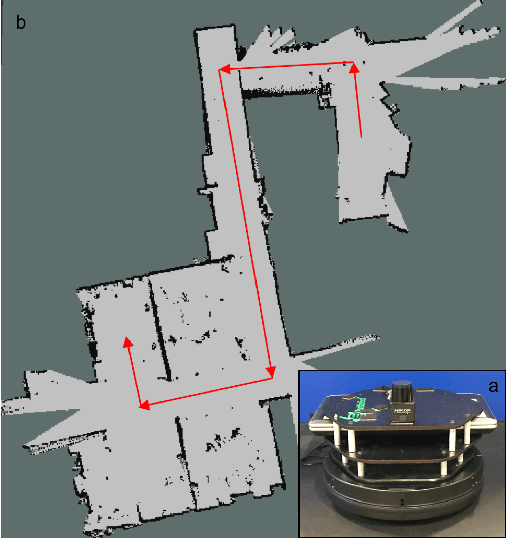

Abstract:Fast, collision-free motion through unknown environments remains a challenging problem for robotic systems. In these situations, the robot's ability to reason about its future motion is often severely limited by sensor field of view (FOV). By contrast, biological systems routinely make decisions by taking into consideration what might exist beyond their FOV based on prior experience. In this paper, we present an approach for predicting occupancy map representations of sensor data for future robot motions using deep neural networks. We evaluate several deep network architectures, including purely generative and adversarial models. Testing on both simulated and real environments, we demonstrate performance both qualitatively and quantitatively, with a structural similarity (SSIM) score of up to 0.899. We show that it is possible to make predictions about occupied space beyond the robot's physical FOV from simulated training data. In the future, this method will allow robots to navigate through unknown environments in a faster, safer manner.
