Kashyap Todi

XAIR: A Framework of Explainable AI in Augmented Reality

Mar 28, 2023

Abstract: Explainable AI (XAI) has established itself as an important component of AI-driven interactive systems. With Augmented Reality (AR) becoming more integrated into daily life, the role of XAI also becomes essential in AR because end-users will frequently interact with intelligent services. However, it is unclear how to design effective XAI experiences for AR. We propose XAIR, a design framework that addresses "when", "what", and "how" to provide explanations of AI output in AR. The framework was based on a multi-disciplinary literature review of XAI and HCI research, a large-scale survey probing 500+ end-users' preferences for AR-based explanations, and three workshops with 12 experts collecting their insights about XAI design in AR. XAIR's utility and effectiveness were verified via a study with 10 designers and another study with 12 end-users. XAIR can provide guidelines for designers, inspiring them to identify new design opportunities and achieve effective XAI designs in AR.

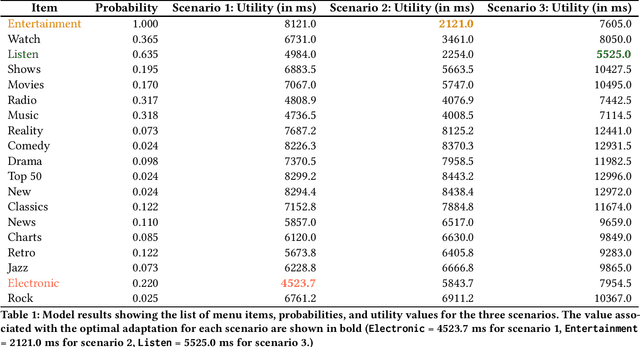

Computational Adaptation of XR Interfaces Through Interaction Simulation

Apr 19, 2022

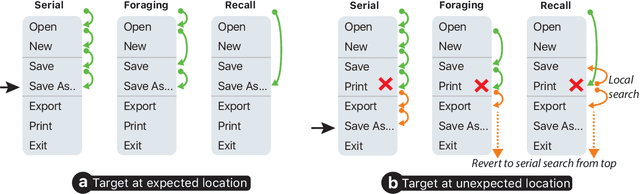

Abstract: Adaptive and intelligent user interfaces have been proposed as a critical component of a successful extended reality (XR) system. In particular, a predictive system can make inferences about a user and provide them with task-relevant recommendations or adaptations. However, we believe such adaptive interfaces should carefully consider the overall *cost* of interactions to better address uncertainty of predictions. In this position paper, we discuss a computational approach to adapt XR interfaces, with the goal of improving user experience and performance. Our novel model, applied to menu selection tasks, simulates user interactions by considering both cognitive and motor costs. In contrast to greedy algorithms that adapt based on predictions alone, our model holistically accounts for costs and benefits of adaptations towards adapting the interface and providing optimal recommendations to the user.
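The contrast the abstract draws between greedy and cost-aware adaptation can be illustrated with a toy menu. The sketch below is not the authors' model: the linear inspection cost, the fixed relearning penalty, the constants, and all function names are assumptions chosen only to show how an uncertain prediction can make "no change" the cheaper option.

```python
from typing import Dict, List

# Assumed constants for illustration only (not taken from the paper).
READ_COST = 0.4     # seconds to inspect one menu entry
RELEARN_COST = 0.5  # extra seconds when the wanted item is not where the user expects it


def expected_cost(original: List[str], candidate: List[str], probs: Dict[str, float]) -> float:
    """Expected selection time for `candidate`, weighted by the predicted item probabilities."""
    total = 0.0
    for pos, item in enumerate(candidate):
        cost = READ_COST * (pos + 1)       # linear top-to-bottom search
        if original.index(item) != pos:    # item moved: the user has to relearn its position
            cost += RELEARN_COST
        total += probs.get(item, 0.0) * cost
    return total


def promote(menu: List[str], item: str) -> List[str]:
    """Return a copy of `menu` with `item` moved to the top."""
    return [item] + [m for m in menu if m != item]


def greedy_adaptation(menu: List[str], probs: Dict[str, float]) -> List[str]:
    """Adapt on the prediction alone: always promote the most likely item."""
    return promote(menu, max(probs, key=probs.get))


def cost_aware_adaptation(menu: List[str], probs: Dict[str, float]) -> List[str]:
    """Compare 'no change' with every single-item promotion and keep the cheapest."""
    candidates = [list(menu)] + [promote(menu, item) for item in menu]
    return min(candidates, key=lambda c: expected_cost(menu, c, probs))


menu = ["Open", "Save", "Export", "Print"]
uncertain = {"Open": 0.28, "Save": 0.22, "Export": 0.20, "Print": 0.30}
confident = {"Open": 0.02, "Save": 0.02, "Export": 0.01, "Print": 0.95}

print(greedy_adaptation(menu, uncertain))      # promotes "Print" despite the weak prediction
print(cost_aware_adaptation(menu, uncertain))  # leaves the menu unchanged
print(cost_aware_adaptation(menu, confident))  # promotes "Print" once the prediction is strong
```

Under these assumed costs, the greedy policy reorders the menu on a weak prediction, whereas the cost-aware policy adapts only when the expected saving outweighs the disruption to the other items.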

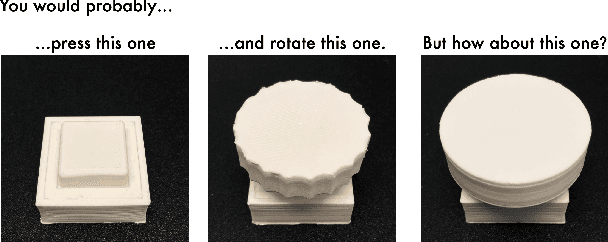

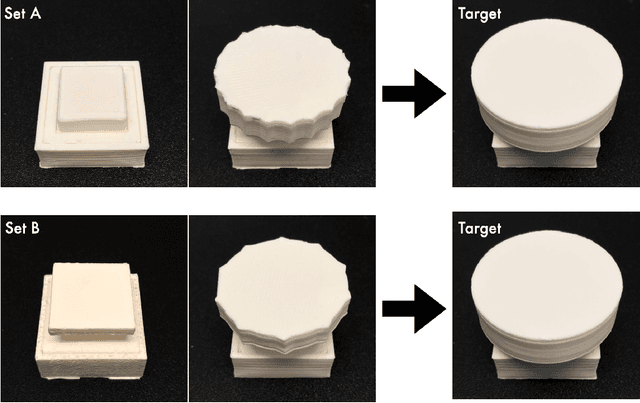

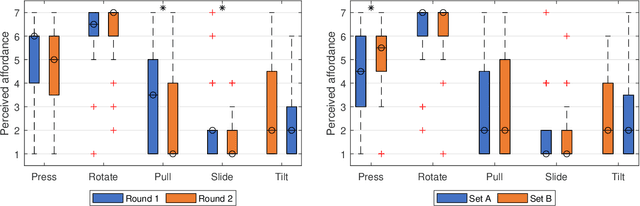

Rediscovering Affordance: A Reinforcement Learning Perspective

Jan 07, 2022

Abstract: Affordance refers to the perception of possible actions allowed by an object. Despite its relevance to human-computer interaction, no existing theory explains the mechanisms that underpin affordance-formation; that is, how affordances are discovered and adapted via interaction. We propose an integrative theory of affordance-formation based on the theory of reinforcement learning in cognitive sciences. The key assumption is that users learn to associate promising motor actions with percepts via experience when reinforcement signals (success/failure) are present. They also learn to categorize actions (e.g., "rotating" a dial), giving them the ability to name and reason about affordance. Upon encountering novel widgets, their ability to generalize these actions determines their ability to perceive affordances. We implement this theory in a virtual robot model, which demonstrates human-like adaptation of affordance in interactive widget tasks. While its predictions align with trends in human data, humans are able to adapt affordances faster, suggesting the existence of additional mechanisms.

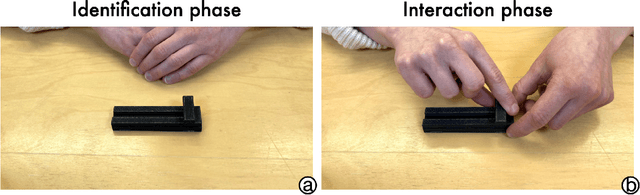

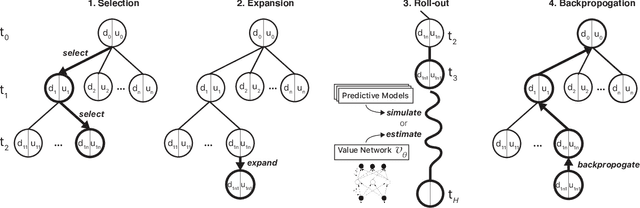

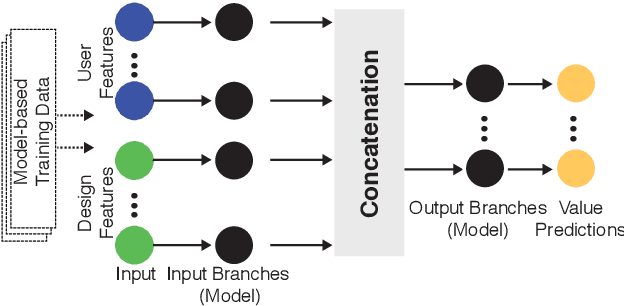

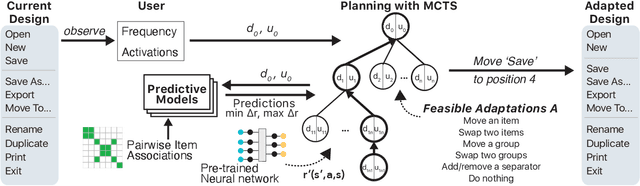

Adapting User Interfaces with Model-based Reinforcement Learning

Mar 11, 2021

Abstract: Adapting an interface requires taking into account both the positive and negative effects that changes may have on the user. A carelessly picked adaptation may impose high costs on the user -- for example, due to surprise or relearning effort -- or "trap" the process in a suboptimal design prematurely. However, effects on users are hard to predict as they depend on factors that are latent and evolve over the course of interaction. We propose a novel approach for adaptive user interfaces that yields a conservative adaptation policy: it finds beneficial changes when there are such and avoids changes when there are none. Our model-based reinforcement learning method plans sequences of adaptations and consults predictive HCI models to estimate their effects. We present empirical and simulation results from the case of adaptive menus, showing that the method outperforms both a non-adaptive and a frequency-based policy.

GRIDS: Interactive Layout Design with Integer Programming

Jan 09, 2020

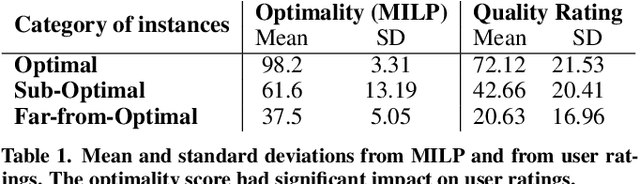

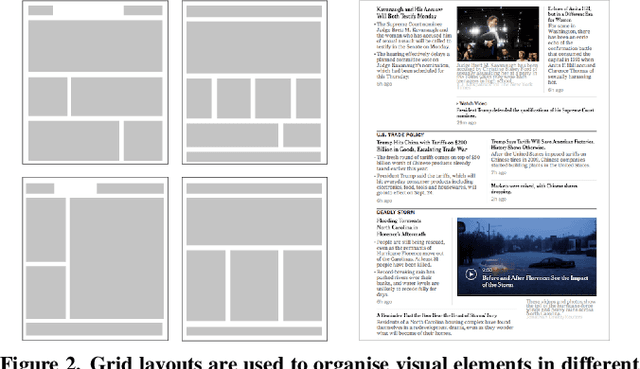

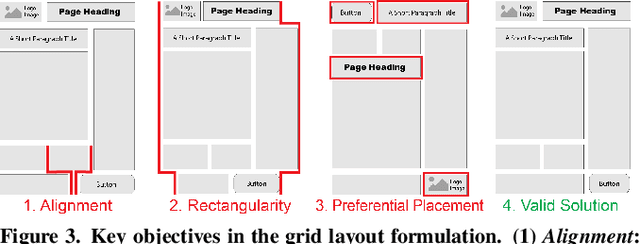

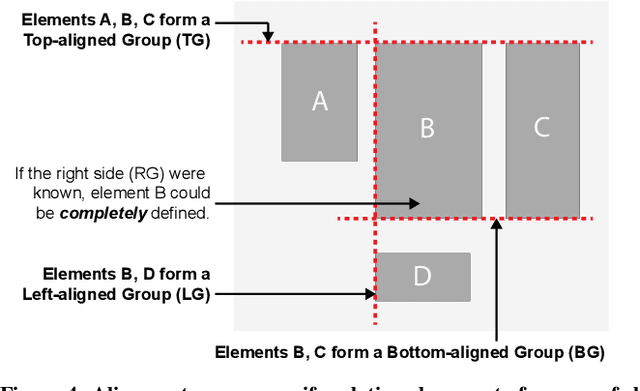

Abstract: Grid layouts are used by designers to spatially organise user interfaces when sketching and wireframing. However, their design is largely time-consuming manual work. This is challenging due to combinatorial explosion and complex objectives, such as alignment, balance, and expectations regarding positions. This paper proposes a novel optimisation approach for the generation of diverse grid-based layouts. Our mixed integer linear programming (MILP) model offers a rigorous yet efficient method for grid generation that ensures packing, alignment, grouping, and preferential positioning of elements. Further, we present techniques for interactive diversification, enhancement, and completion of grid layouts (Figure 1). These capabilities are demonstrated using GRIDS, a wireframing tool that provides designers with real-time layout suggestions. We report findings from a ratings study (N = 13) and a design study (N = 16), lending evidence for the benefit of computational grid generation during early stages of design.
