Kalina Bontcheva

University of Sheffield

It's about Time: Rethinking Evaluation on Rumor Detection Benchmarks using Chronological Splits

Feb 06, 2023

Abstract:New events emerge over time influencing the topics of rumors in social media. Current rumor detection benchmarks use random splits as training, development and test sets which typically results in topical overlaps. Consequently, models trained on random splits may not perform well on rumor classification on previously unseen topics due to the temporal concept drift. In this paper, we provide a re-evaluation of classification models on four popular rumor detection benchmarks considering chronological instead of random splits. Our experimental results show that the use of random splits can significantly overestimate predictive performance across all datasets and models. Therefore, we suggest that rumor detection models should always be evaluated using chronological splits for minimizing topical overlaps.
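A minimal sketch of the chronological split described in the abstract above, assuming each post carries a timestamp. The function name `chronological_split`, the `date` field, the split fractions, and the toy records are all illustrative assumptions; the paper's actual splitting procedure is not given in the abstract.

```python
from datetime import date

def chronological_split(posts, train_frac=0.7, dev_frac=0.1):
    """Sort posts by date and cut them into train/dev/test so that every
    training post is no later than any dev post, and every dev post is no
    later than any test post."""
    ordered = sorted(posts, key=lambda p: p["date"])
    n = len(ordered)
    train_end = round(n * train_frac)
    dev_end = train_end + round(n * dev_frac)
    return ordered[:train_end], ordered[train_end:dev_end], ordered[dev_end:]

# Toy records (hypothetical): 20 posts spread over one year.
posts = [{"id": i, "date": date(2020, 1 + i % 12, 1 + i % 28)} for i in range(20)]
train, dev, test = chronological_split(posts)
```

Unlike a random split, the test set here contains only the most recent posts, so topics that first appear late in the timeline are unseen at training time.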

VaxxHesitancy: A Dataset for Studying Hesitancy Towards COVID-19 Vaccination on Twitter

Jan 17, 2023

Abstract:Vaccine hesitancy has been a common concern, probably since vaccines were created and, with the popularisation of social media, people started to express their concerns about vaccines online alongside those posting pro- and anti-vaccine content. Predictably, since the first mentions of a COVID-19 vaccine, social media users posted about their fears and concerns or about their support and belief in the effectiveness of these rapidly developing vaccines. Identifying and understanding the reasons behind public hesitancy towards COVID-19 vaccines is important for policy makers that need to develop actions to better inform the population with the aim of increasing vaccine take-up. In the case of COVID-19, where the fast development of the vaccines was mirrored closely by growth in anti-vaxx disinformation, automatic means of detecting citizen attitudes towards vaccination became necessary. This is an important computational social sciences task that requires data analysis in order to gain in-depth understanding of the phenomena at hand. Annotated data is also necessary for training data-driven models for more nuanced analysis of attitudes towards vaccination. To this end, we created a new collection of over 3,101 tweets annotated with users' attitudes towards COVID-19 vaccination (stance). We also develop a domain-specific language model (VaxxBERT) that achieves the best predictive performance (73.0 accuracy and 69.3 F1-score) as compared to a robust set of baselines. To the best of our knowledge, these are the first dataset and model that model vaccine hesitancy as a category distinct from pro- and anti-vaccine stance.

On the Impact of Temporal Concept Drift on Model Explanations

Oct 17, 2022

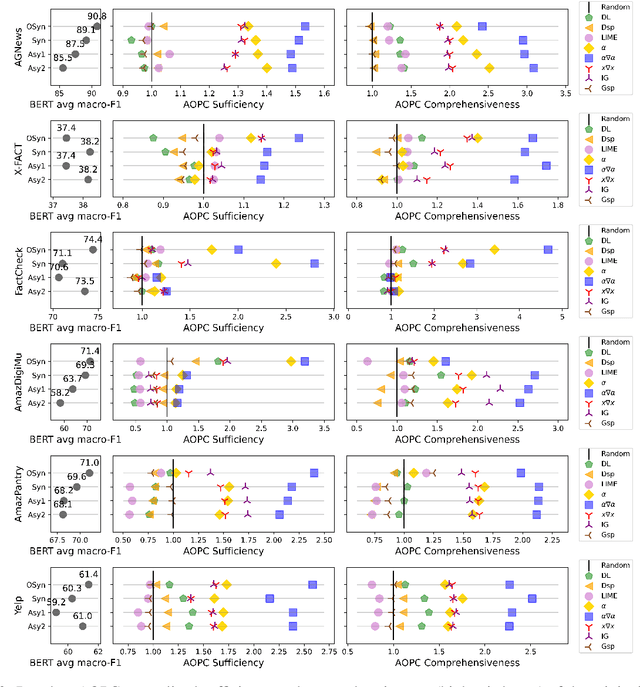

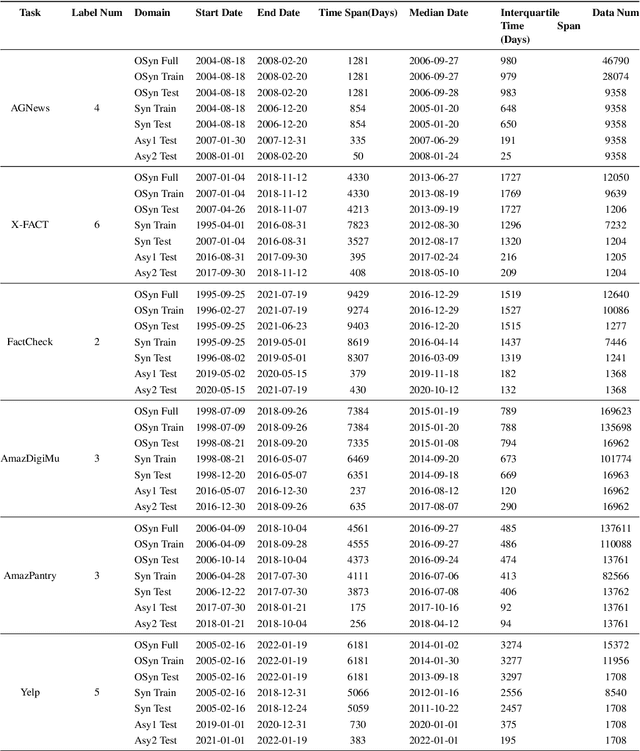

Abstract:Explanation faithfulness of model predictions in natural language processing is typically evaluated on held-out data from the same temporal distribution as the training data (i.e. synchronous settings). While model performance often deteriorates due to temporal variation (i.e. temporal concept drift), it is currently unknown how explanation faithfulness is impacted when the time span of the target data is different from the data used to train the model (i.e. asynchronous settings). For this purpose, we examine the impact of temporal variation on model explanations extracted by eight feature attribution methods and three select-then-predict models across six text classification tasks. Our experiments show that (i) faithfulness is not consistent under temporal variations across feature attribution methods (e.g. it decreases or increases depending on the method), with an attention-based method demonstrating the most robust faithfulness scores across datasets; and (ii) select-then-predict models are mostly robust in asynchronous settings with only small degradation in predictive performance. Finally, feature attribution methods show conflicting behavior when used in FRESH (i.e. a select-and-predict model) and for measuring sufficiency/comprehensiveness (i.e. as post-hoc methods), suggesting that we need more robust metrics to evaluate post-hoc explanation faithfulness.

Classifying COVID-19 vaccine narratives

Jul 18, 2022

Abstract:COVID-19 vaccine hesitancy is widespread, despite governments' information campaigns and WHO efforts. One of the reasons behind this is vaccine disinformation, which spreads widely in social media. In particular, recent surveys have established that vaccine disinformation is negatively impacting citizen trust in COVID-19 vaccination. At the same time, fact-checkers are struggling to detect and track vaccine disinformation, due to the large scale of social media. To assist fact-checkers in monitoring vaccine narratives online, this paper studies a new vaccine narrative classification task, which categorises COVID-19 vaccine claims into one of seven categories. Following a data augmentation approach, we first construct a novel dataset for this new classification task, focusing on the minority classes. We also make use of fact-checker annotated data. The paper also presents a neural vaccine narrative classifier that achieves an accuracy of 84% under cross-validation. The classifier is publicly available for researchers and journalists.

Categorising Fine-to-Coarse Grained Misinformation: An Empirical Study of COVID-19 Infodemic

Jul 08, 2021

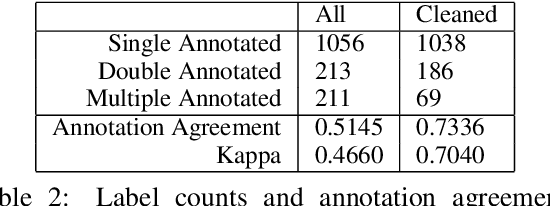

Abstract:The spread of COVID-19 misinformation over social media has already drawn the attention of many researchers. According to Google Scholar, about 26,000 COVID-19 related misinformation studies have been published to date. Most of these studies focus on 1) detecting and/or 2) analysing the characteristics of COVID-19 related misinformation. However, the study of the social behaviours related to misinformation is often neglected. In this paper, we introduce a fine-grained annotated misinformation tweets dataset including social behaviours annotation (e.g. comment or question to the misinformation). The dataset not only allows analysis of social behaviours but is also suitable for both evidence-based and non-evidence-based misinformation classification tasks. In addition, we introduce leave-claim-out validation in our experiments and demonstrate that misinformation classification performance could be significantly different when applied to real-world unseen misinformation.
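The abstract above does not spell out the leave-claim-out protocol. One plausible reading is a grouped hold-out in which every tweet about a given claim is kept out of training while that claim is being tested. The sketch below follows that assumption; `leave_claim_out_folds`, the `claim_id` field, and the toy tweets are hypothetical names introduced for illustration.

```python
from collections import defaultdict

def leave_claim_out_folds(examples):
    """Yield (claim_id, train_indices, test_indices), holding out all
    examples of one claim per fold so the test claim is unseen in training."""
    by_claim = defaultdict(list)
    for idx, example in enumerate(examples):
        by_claim[example["claim_id"]].append(idx)
    for claim_id, test_idx in by_claim.items():
        held_out = set(test_idx)
        train_idx = [i for i in range(len(examples)) if i not in held_out]
        yield claim_id, train_idx, test_idx

# Toy data (hypothetical): six tweets discussing three claims.
tweets = [
    {"text": "t0", "claim_id": "A"}, {"text": "t1", "claim_id": "B"},
    {"text": "t2", "claim_id": "A"}, {"text": "t3", "claim_id": "C"},
    {"text": "t4", "claim_id": "B"}, {"text": "t5", "claim_id": "C"},
]
folds = list(leave_claim_out_folds(tweets))
```

Under this reading, a classifier cannot score well by memorising the wording of a claim it has already seen, which is closer to the real-world setting of previously unseen misinformation.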

MP Twitter Engagement and Abuse Post-first COVID-19 Lockdown in the UK: White Paper

Mar 08, 2021

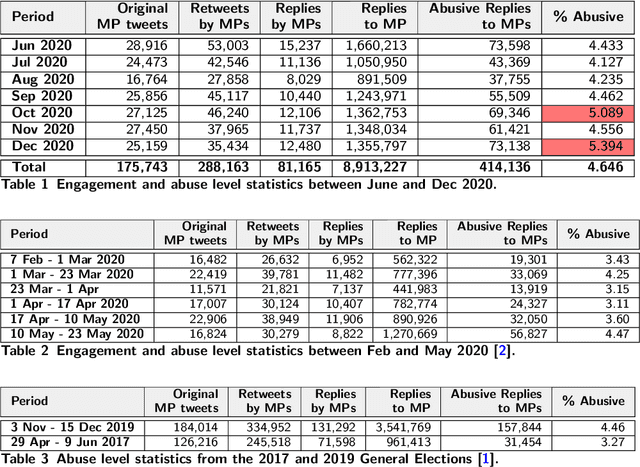

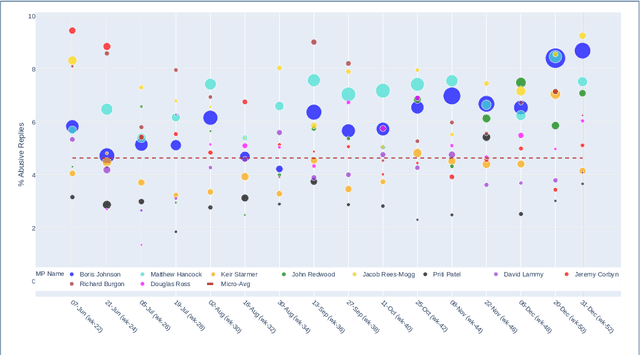

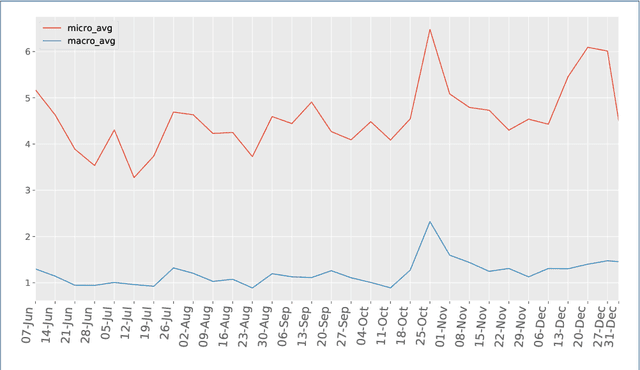

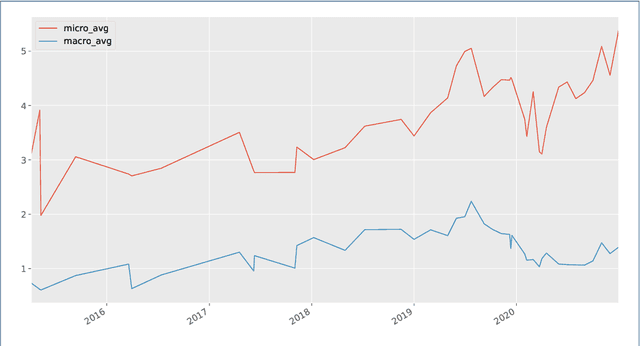

Abstract:The UK has had a volatile political environment for some years now, with Brexit and leadership crises marking the past five years. With this work, we wanted to understand more about how the global health emergency, COVID-19, influences the amount, type or topics of abuse that UK politicians receive when engaging with the public. This work covers the period of June to December 2020 and analyses Twitter abuse in replies to UK MPs. This work is a follow-up from our analysis of online abuse during the first four months of the COVID-19 pandemic in the UK. The paper examines overall abuse levels during this new seven-month period, analyses reactions to members of different political parties and the UK government, and the relationship between online abuse and topics such as Brexit, government's COVID-19 response and policies, and social issues. In addition, we have also examined the presence of conspiracy theories posted in abusive replies to MPs during the period. We have found that abuse levels toward UK MPs were at an all-time high in December 2020 (5.4% of all reply tweets sent to MPs). This is almost 1% higher than in the two months preceding the General Election. In a departure from the trend seen in the first four months of the pandemic, MPs from the Tory party received the highest percentage of abusive replies from July 2020 onward, which stayed above 5% from September 2020 onward, as the COVID-19 crisis deepened and the Brexit negotiations with the EU started nearing completion.

Multistage BiCross Encoder: Team GATE Entry for MLIA Multilingual Semantic Search Task 2

Jan 15, 2021

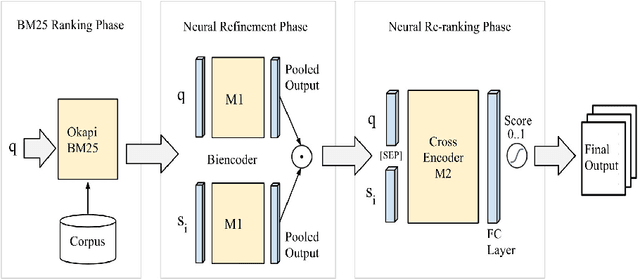

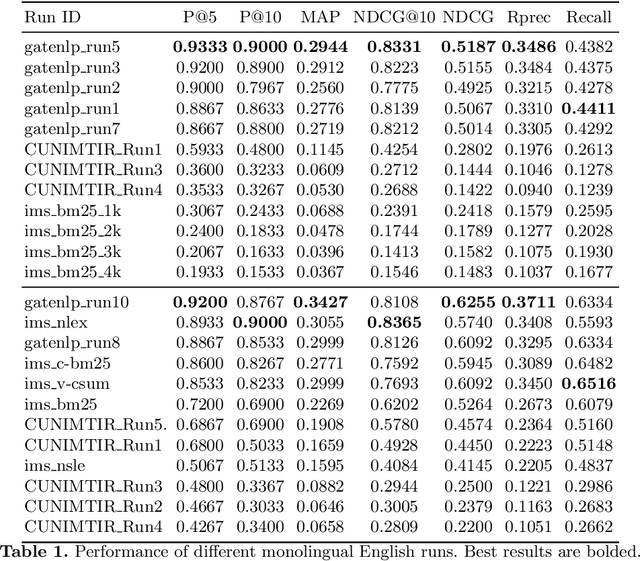

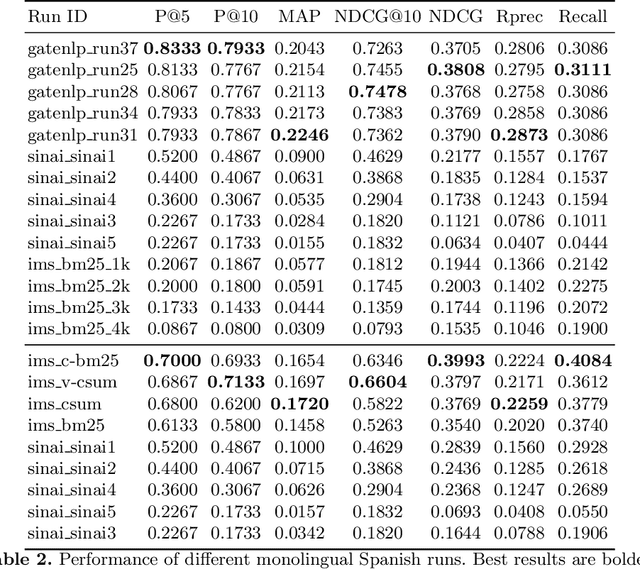

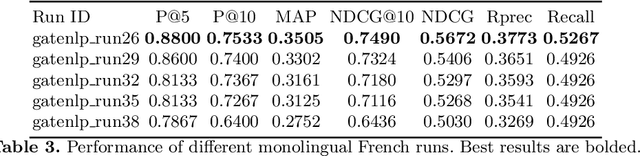

Abstract:The Coronavirus (COVID-19) pandemic has led to a rapidly growing 'infodemic' online. Thus, the accurate retrieval of reliable, relevant data from millions of documents about COVID-19 has become urgently needed for the general public as well as for other stakeholders. The COVID-19 Multilingual Information Access (MLIA) initiative is a joint effort to ameliorate exchange of COVID-19 related information by developing applications and services through research and community participation. In this work, we present a search system called Multistage BiCross Encoder, developed by team GATE for the MLIA task 2 Multilingual Semantic Search. Multistage BiCross Encoder is a sequential three-stage pipeline which uses the Okapi BM25 algorithm and a transformer-based bi-encoder and cross-encoder to effectively rank the documents with respect to the query. The results of round 1 show that our models achieve state-of-the-art performance for all ranking metrics for both monolingual and bilingual runs.
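The retrieve-then-rerank shape of such a pipeline can be sketched as follows. Only the first stage is a real technique here (a plain Okapi BM25 scorer with the common `k1` and `b` defaults). The two later stages are simple token-overlap stand-ins for the bi-encoder and cross-encoder, which in practice would be transformer models; `cascade`, `overlap_stub`, `coverage_stub`, the shortlist sizes, and the toy documents are all assumptions and not the team's implementation.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with Okapi BM25."""
    tokenized = [d.lower().split() for d in docs]
    avgdl = sum(len(t) for t in tokenized) / len(tokenized)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    n = len(docs)
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def cascade(query, docs, rerankers, keep):
    """Stage 1 ranks all docs with BM25; each later stage re-scores only
    the top-k survivors of the previous stage with a costlier scorer."""
    scores = bm25_scores(query, docs)
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    for rerank, k in zip(rerankers, keep):
        shortlist = ranked[:k]
        ranked = sorted(shortlist, key=lambda i: rerank(query, docs[i]), reverse=True)
    return ranked

def overlap_stub(query, doc):
    """Placeholder for a bi-encoder similarity: Jaccard token overlap."""
    q, d = set(query.lower().split()), set(doc.lower().split())
    return len(q & d) / len(q | d)

def coverage_stub(query, doc):
    """Placeholder for a cross-encoder score: share of query terms found."""
    q, d = set(query.lower().split()), set(doc.lower().split())
    return len(q & d) / len(q)

docs = [
    "covid vaccine side effects study",
    "football match results",
    "vaccine rollout schedule for covid in europe",
    "weather forecast today",
    "covid symptoms and testing",
]
top = cascade("covid vaccine", docs, [overlap_stub, coverage_stub], keep=(3, 2))
```

The design point is cost: the cheap lexical scorer sees every document, while the more expensive scorers only see a shrinking shortlist.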

Toxic Language Detection in Social Media for Brazilian Portuguese: New Dataset and Multilingual Analysis

Oct 09, 2020

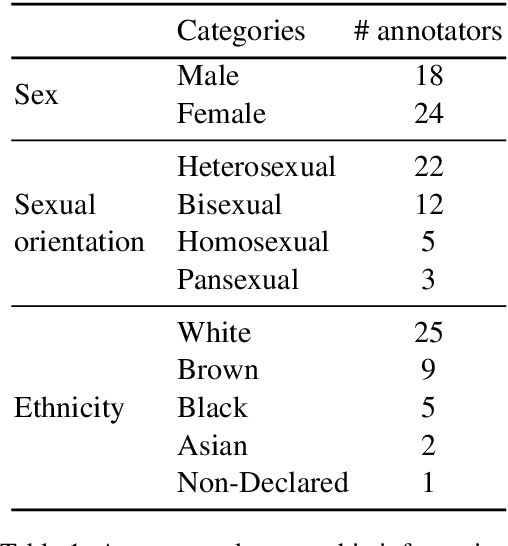

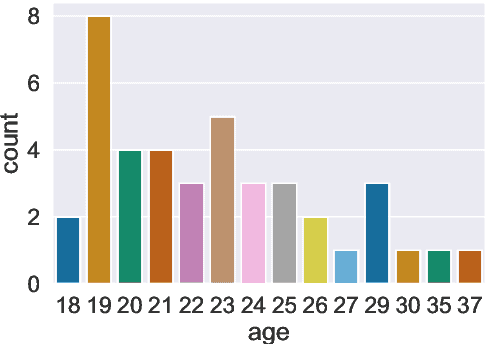

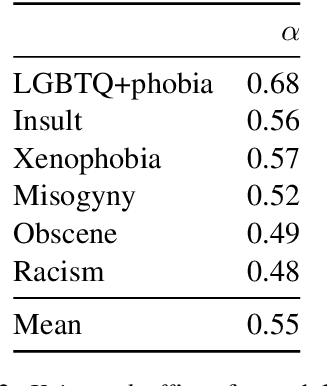

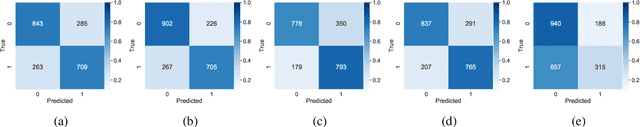

Abstract:Hate speech and toxic comments are a common concern of social media platform users. Although these comments are, fortunately, the minority in these platforms, they are still capable of causing harm. Therefore, identifying these comments is an important task for studying and preventing the proliferation of toxicity in social media. Previous work in automatically detecting toxic comments focuses mainly on English, with very little work on languages like Brazilian Portuguese. In this paper, we propose a new large-scale dataset for Brazilian Portuguese with tweets annotated as either toxic or non-toxic or in different types of toxicity. We present our dataset collection and annotation process, where we aimed to select candidates covering multiple demographic groups. State-of-the-art BERT models were able to achieve 76% macro-F1 score using monolingual data in the binary case. We also show that large-scale monolingual data is still needed to create more accurate models, despite recent advances in multilingual approaches. An error analysis and experiments with multi-label classification show the difficulty of classifying certain types of toxic comments that appear less frequently in our data and highlight the need to develop models that are aware of different categories of toxicity.

Measuring What Counts: The case of Rumour Stance Classification

Oct 09, 2020

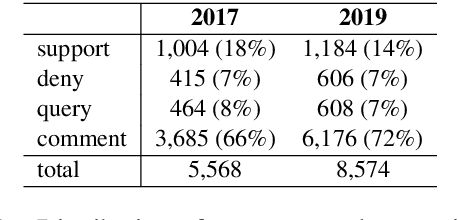

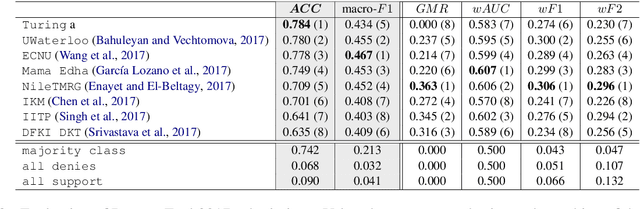

Abstract:Stance classification can be a powerful tool for understanding whether and which users believe in online rumours. The task aims to automatically predict the stance of replies towards a given rumour, namely support, deny, question, or comment. Numerous methods have been proposed and their performance compared in the RumourEval shared tasks in 2017 and 2019. Results demonstrated that this is a challenging problem since naturally occurring rumour stance data is highly imbalanced. This paper specifically questions the evaluation metrics used in these shared tasks. We re-evaluate the systems submitted to the two RumourEval tasks and show that the two widely adopted metrics, accuracy and macro-F1, are not robust for the four-class imbalanced task of rumour stance classification, as they wrongly favour systems with highly skewed accuracy towards the majority class. To overcome this problem, we propose new evaluation metrics for rumour stance detection. These are not only robust to imbalanced data but also give higher scores to systems that are capable of recognising the two most informative minority classes (support and deny).
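A small illustration of why class imbalance matters for this task, assuming an invented label distribution (80 comment, 8 support, 6 deny, 6 question) and a degenerate system that always predicts the majority class. It computes only accuracy and macro-F1, the two metrics named in the abstract above; it does not reproduce the new metrics the paper proposes, which the abstract does not define.

```python
LABELS = ["support", "deny", "question", "comment"]

def accuracy(gold, pred):
    """Fraction of replies whose predicted stance matches the gold stance."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)

def macro_f1(gold, pred, labels=LABELS):
    """Unweighted mean of per-class F1; a class with no TP scores 0."""
    f1s = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)
    return sum(f1s) / len(f1s)

# Toy distribution (hypothetical), skewed towards "comment".
gold = ["comment"] * 80 + ["support"] * 8 + ["deny"] * 6 + ["question"] * 6
majority_pred = ["comment"] * len(gold)

acc = accuracy(gold, majority_pred)
mf1 = macro_f1(gold, majority_pred)
```

On this toy data the majority-class system is right on every comment reply and therefore looks strong on accuracy, even though it never identifies a single support or deny reply.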

Classification Aware Neural Topic Model and its Application on a New COVID-19 Disinformation Corpus

Jun 05, 2020

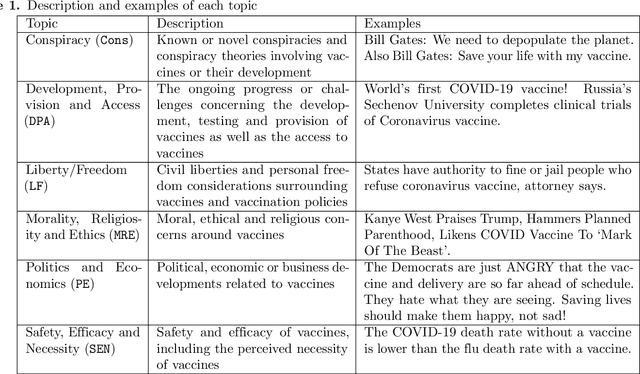

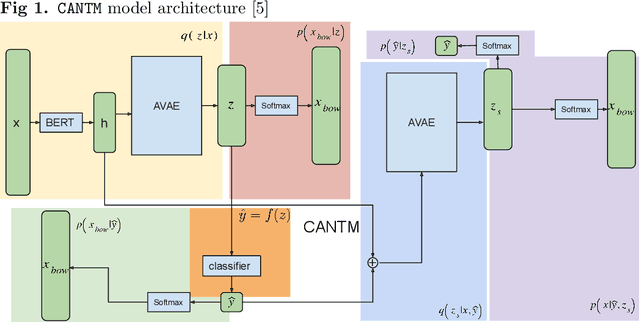

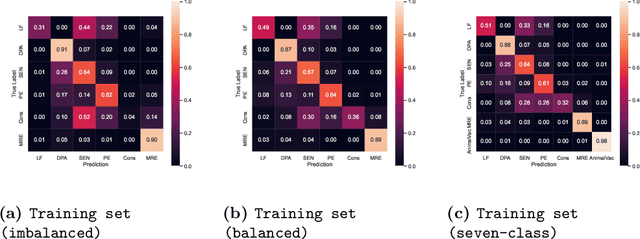

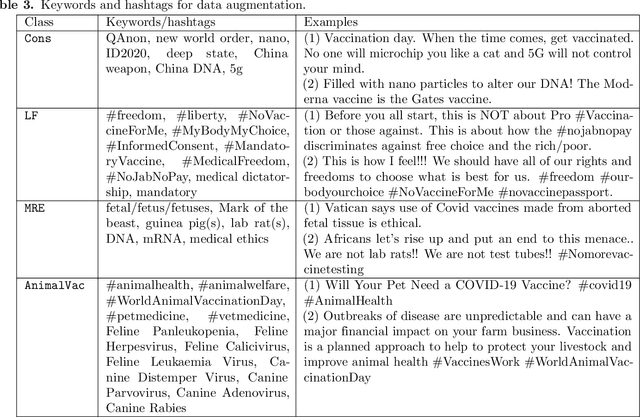

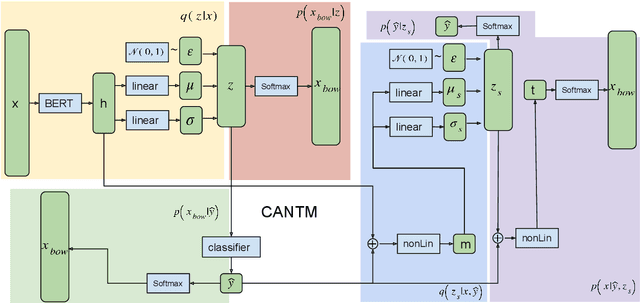

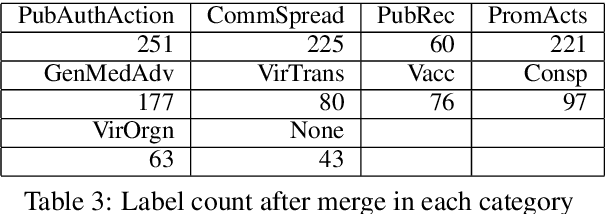

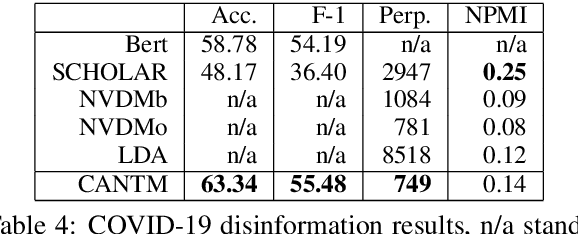

Abstract:The explosion of disinformation related to the COVID-19 pandemic has overloaded fact-checkers and media worldwide. To help tackle this, we developed computational methods to support COVID-19 disinformation debunking and social impacts research. This paper presents: 1) the currently largest available manually annotated COVID-19 disinformation category dataset; and 2) a classification-aware neural topic model (CANTM) that combines classification and topic modelling under a variational autoencoder framework. We demonstrate that CANTM efficiently improves classification performance with low resources, and is scalable. In addition, the classification-aware topics help researchers and end-users to better understand the classification results.
