Johann Petrak

Misogyny classification of German newspaper forum comments

Nov 30, 2022

Abstract:This paper presents work on detecting misogyny in the comments of a large Austrian German language newspaper forum. We describe the creation of a corpus of 6600 comments which were annotated with 5 levels of misogyny. The forum moderators were involved as experts in the creation of the annotation guidelines and the annotation of the comments. We also describe the results of training transformer-based classification models for both binarized and original label classification of that corpus.

Classification Aware Neural Topic Model and its Application on a New COVID-19 Disinformation Corpus

Jun 05, 2020

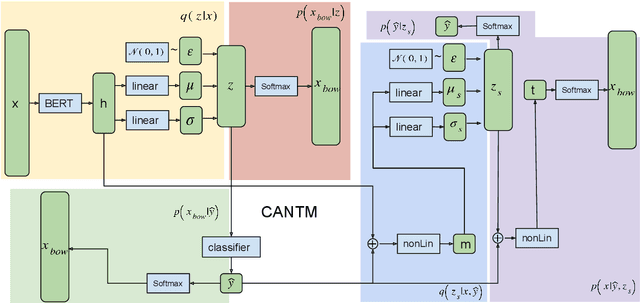

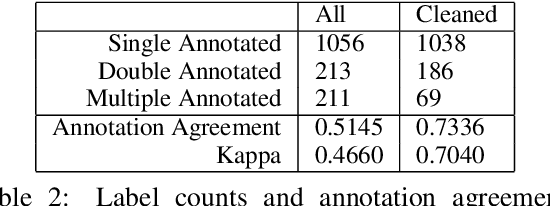

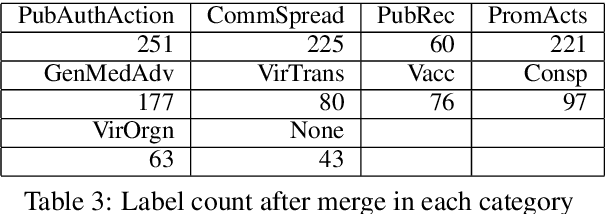

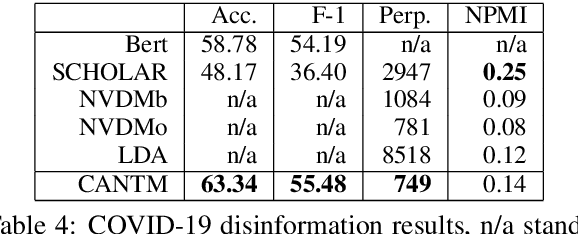

Abstract:The explosion of disinformation related to the COVID-19 pandemic has overloaded fact-checkers and media worldwide. To help tackle this, we developed computational methods to support COVID-19 disinformation debunking and social impacts research. This paper presents: 1) the currently largest available manually annotated COVID-19 disinformation category dataset; and 2) a classification-aware neural topic model (CANTM) that combines classification and topic modelling under a variational autoencoder framework. We demonstrate that CANTM efficiently improves classification performance with low resources, and is scalable. In addition, the classification-aware topics help researchers and end-users to better understand the classification results.

A Deep Neural Network Sentence Level Classification Method with Context Information

Aug 31, 2018

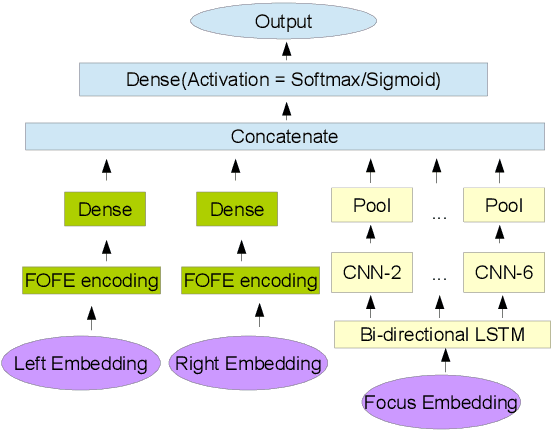

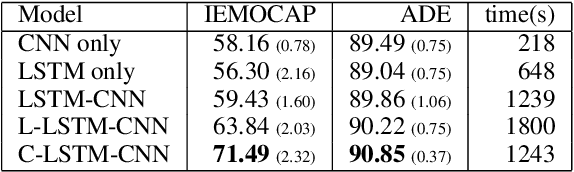

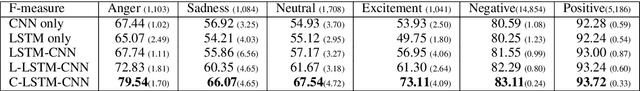

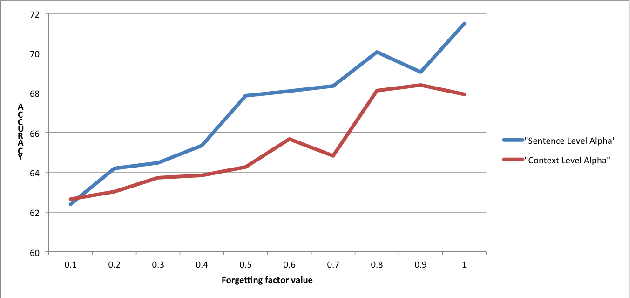

Abstract:In the sentence classification task, context formed from sentences adjacent to the sentence being classified can provide important information for classification. This context is, however, often ignored. Where methods do make use of context, only small amounts are considered, making it difficult to scale. We present a new method for sentence classification, Context-LSTM-CNN, that makes use of potentially large contexts. The method also utilizes long-range dependencies within the sentence being classified, using an LSTM, and short-span features, using a stacked CNN. Our experiments demonstrate that this approach consistently improves over previous methods on two different datasets.

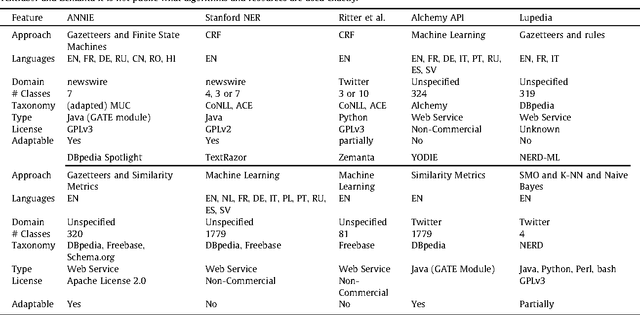

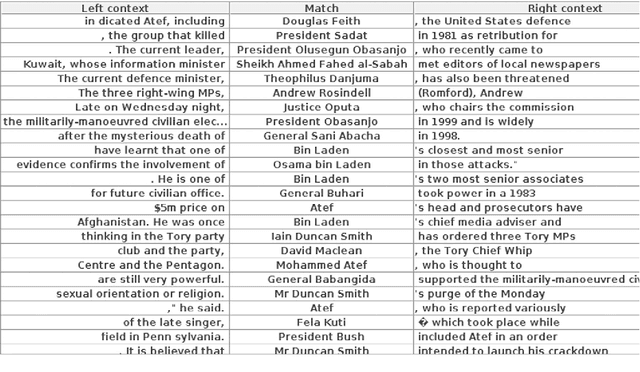

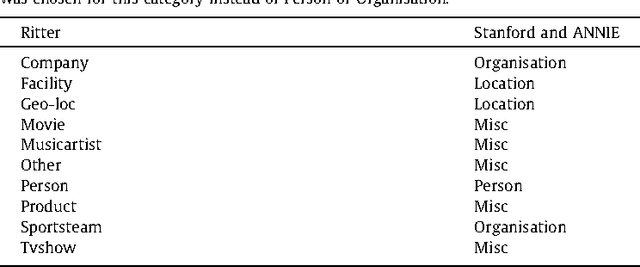

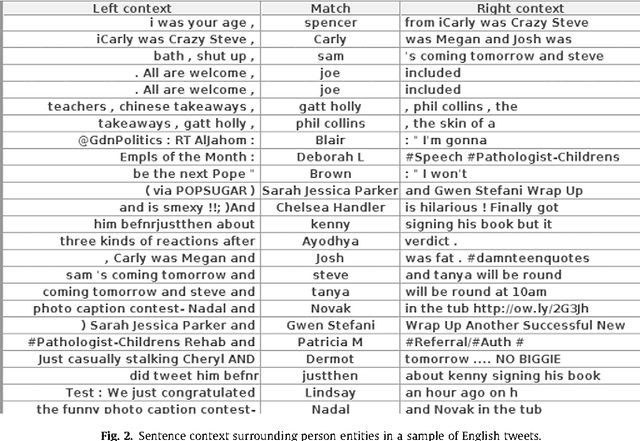

Analysis of Named Entity Recognition and Linking for Tweets

Oct 27, 2014

Abstract:Applying natural language processing for mining and intelligent information access to tweets (a form of microblog) is a challenging, emerging research area. Unlike carefully authored news text and other longer content, tweets pose a number of new challenges, due to their short, noisy, context-dependent, and dynamic nature. Information extraction from tweets is typically performed in a pipeline, comprising consecutive stages of language identification, tokenisation, part-of-speech tagging, named entity recognition and entity disambiguation (e.g. with respect to DBpedia). In this work, we describe a new Twitter entity disambiguation dataset, and conduct an empirical analysis of named entity recognition and disambiguation, investigating how robust a number of state-of-the-art systems are on such noisy texts, what the main sources of error are, and which problems should be further investigated to improve the state of the art.

* 35 pages, accepted to journal Information Processing and Management

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge